Electronic Enthusiasts reports (by Li Wanwan) that an NPU (neural processing unit) is a processor designed specifically for artificial intelligence (AI) workloads, used mainly to execute neural network operations such as matrix multiplication, convolution, and activation functions efficiently. Compared with traditional CPUs and GPUs, the NPU offers advantages in energy efficiency and computational speed on these workloads, which makes it particularly well suited to mobile devices, edge computing, and embedded AI.

The NPU has four core features. First, its dedicated architecture is optimized for neural network computation: it processes large matrix operations in parallel, typically at low precision (INT8/FP16), and its typical operations include convolution, pooling, and the attention mechanisms used in Transformers. Second, it has a high energy efficiency ratio, consuming less power than a GPU, which suits devices where battery life matters, such as smartphones and IoT devices. Third, it has low latency: it is optimized for AI inference and training and reduces data transfer overhead. Fourth, it supports heterogeneous computing, usually working alongside CPUs, GPUs, and DSPs (for example, as part of the "AI engine" in a mobile SoC).

NPUs are widely used in AI-driven devices and systems. Typical scenarios include: smartphones, for real-time image enhancement (such as AI photography on Huawei's Kirin chips), voice assistants, and AR/VR interaction; wearable devices, for low-power on-device AI (such as health monitoring in smartwatches); autonomous driving, where sensor data is processed for real-time object detection, path planning, and decision-making (such as Tesla's FSD chip), and where, according to the report, the NPUs in L4-level autonomous vehicles exceed 1,000 TOPS of computing power; edge computing, such as smart cameras for facial recognition and abnormal behavior detection; IoT devices, for on-device voice features (such as wake word recognition in smart speakers); data centers, for accelerating AI model training and inference, with Google's TPU (Tensor Processing Unit) as a typical example; and industrial quality inspection, where inference latency is compressed to within 20 ms and deployment volume is growing by 120% annually.

Typical NPU chips on the device side include Huawei's Kirin chips (Da Vinci NPU), used for mobile AI photography and voice recognition; Qualcomm's Hexagon DSP+NPU (Snapdragon platform), which supports AI noise reduction and image enhancement; and Apple's Neural Engine (A/M series chips), which underpins features such as Face ID and Siri. In edge computing and autonomous driving, examples are Tesla's FSD chip, which integrates an NPU for processing autonomous driving vision models, and Horizon's Journey chips (BPU architecture) for intelligent driving perception. In the cloud, examples are Cambricon's MLU series for AI training and inference acceleration, and NVIDIA's Grace Hopper for large model inference (strictly speaking, Grace Hopper pairs a Grace CPU with a Hopper GPU whose Tensor Cores accelerate AI workloads, rather than integrating a separate NPU module).

The key technologies of the NPU include: quantization and compression, with support for INT4/INT8 low-precision calculation to improve energy efficiency; sparse computing, which skips zero-value calculations to accelerate sparse models (such as pruned networks); and compiler optimization, in which dedicated toolchains (such as Huawei's Ascend CANN and Qualcomm's AI Engine) convert models from frameworks such as TensorFlow and PyTorch into NPU instructions.

In terms of trends, NPUs have several clear development directions: optoelectronic fusion computing, which brings in photonics to further improve computing power and energy efficiency; neuromorphic chips, which mimic the structure of biological neurons to achieve lower-power AI computation; and chiplet technology, which scales computing power by interconnecting multiple NPU dies (Cerebras' wafer-scale chips pursue a similar scale-up goal, although they use a single wafer-sized die rather than chiplets).

Overall, as core hardware for AI computing, NPUs are profoundly changing how artificial intelligence technology is deployed. Their high parallelism, low power consumption, and hardware acceleration make them the preferred solution in fields such as mobile devices, autonomous driving, and edge computing. As the technology evolves and market demand grows rapidly, NPUs will play an increasingly important role in the future AI ecosystem.
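Two of the key technologies mentioned above, INT8 quantization and zero-skipping for sparse models, can be illustrated with a small Python sketch. This is only a software analogy under stated assumptions, not how any particular NPU or toolchain implements these steps: the function names `quantize_int8` and `sparse_int_dot`, the sample values, and the choice of symmetric per-tensor scaling are all hypothetical and chosen for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization (assumed scheme):
    map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def sparse_int_dot(q_weights, q_inputs):
    """Integer dot product that skips zero weights,
    mimicking the zero-skipping idea behind sparse computing."""
    acc = 0
    skipped = 0
    for w, x in zip(q_weights, q_inputs):
        if w == 0:
            skipped += 1  # no multiply-accumulate is spent on this pair
            continue
        acc += w * x
    return acc, skipped


# Hypothetical pruned weights (two exact zeros) and input activations.
weights = [0.5, 0.0, -1.0, 0.0, 0.25]
inputs = [1.0, 2.0, 0.5, -1.0, 2.0]

q_w, scale_w = quantize_int8(weights)
q_x, scale_x = quantize_int8(inputs)

acc, skipped = sparse_int_dot(q_w, q_x)
approx = acc * scale_w * scale_x  # rescale the integer accumulator to float
exact = sum(w * x for w, x in zip(weights, inputs))

print("quantized weights:", q_w)
print("quantized inputs: ", q_x)
print("multiplications skipped:", skipped)
print("approximate result:", approx, "exact result:", exact)
```

The integer result is close to the floating-point one but not identical, which is the accuracy-for-efficiency trade-off that low-precision arithmetic accepts; the skipped multiplications show where a pruned model saves work.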

Disclaimer: This article is originally from Electronic Enthusiasts; please indicate the source when reprinting.

More hot articles to read

-

Impact of tariff storm on the storage industry

-

“Four-in-One” computing power platform, with over 90% localization rate of chips, compatible with 8 types of domestic AI chips

-

Made in the USA iPhone, cost doubled? Apple has failed twice to build factories!

-

H20 ban reversal! Domestic AI chips rise strongly, who will become the game-changer?

-

US wields tariff stick, how strong is China’s chip industry chain?

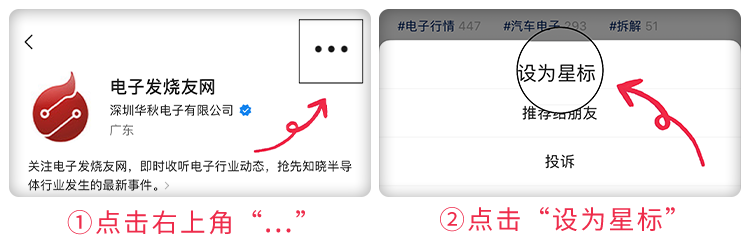

Click to follow and star us

Set us as a star to not miss any updates!