In 2024, embodied intelligence and large models have become focal topics. Many believe the humanoid robot industry is on the eve of a “boom,” but how long will that eve last? Another important carrier of embodied intelligence, the robotic arm, shows strong prospects on two fronts: commercialization, and the application of new technologies such as embodied multimodal large models.

Source | Deep Blue Academy Group

Humanoid robots are the hype, but robotic arms are making the money

In 2024, the curtain is rising on a technological transformation.

There are the trending “large models” engaged in price wars, and there is “intelligent driving,” which has nearly fallen from grace and is now turning to new technologies.

There is also a rising star, prophesied to be the “Ziweixing” (the emperor star) of the year: humanoid robots.

Humanoid robots are like the protagonists of this drama, quickly capturing public imagination and media attention upon their debut.

However, amid this fervor, voices of skepticism, concern, and pessimism are also emerging.

When the spotlight dims, the big players turn to the capital markets.

They suddenly discover that the real money-maker is the seemingly ordinary robotic arm.

Capital markets, which have always been driven by profit, have started to take notice.

What have robotic arms been doing behind the scenes?

In recent years, the most “sensational” event in the robotic arm industry was undoubtedly the video that the then-underfunded creator Zhihui Jun (Peng Zhihui) uploaded to Bilibili, showing his self-made robotic arm stitching grapes remotely in a bathroom! The comments were filled with “WC” (frog sounds).

Fast forward three years, and the once “young man” from Huawei, Zhihui Jun, has now become the CEO of “Zhiyuan,” a humanoid robot company that has secured over 1 billion in funding.

The development prospects for robotic arms are increasingly promising.

For instance, at the recent World Robot Conference, there were applications using depth cameras combined with robotic arms for spatial recognition and object tracking, and others employing facial tracking technology for automatic control of robotic arms…

Indeed, there is a strong driving force behind this: the rapid development of embodied multimodal large models.

More and more work in the robotics field is attempting to construct embodied multimodal large models to endow robots with high-level reasoning and low-level control capabilities.

So, why have robotic arms been able to apply embodied intelligence before humanoid robots?

There are several reasons. Robotic arms have not been idle over the past few decades:

- Clearer application scenarios: Compared to humanoid robots, robotic arms have clearer and broader application scenarios in industrial automation, logistics, and service industries, and they also have a price advantage.

- More mature technical systems: Robotic arm technology has developed over decades and formed a relatively mature technical system and industrial chain, which is more conducive to integrating embodied multimodal large models.

- More competitive costs: The R&D and manufacturing costs of humanoid robots are much higher than those of robotic arms, especially when it comes to achieving complex human movements and perceptual capabilities. These high costs give humanoid robots a very long return-on-investment and profit cycle.

- Greater policy support: From automobile manufacturing to electronic product assembly, robotic arms, with their high efficiency, high precision, and high reliability, have become an indispensable part of industrial automation, with increasing policy support.

Therefore, compared to the current humanoid robot track, “robotic arms” have become a more stable and attractive business choice.

Moreover, the future of “humanoid robots” is believed to be even more vibrant, relying on the support of “robotic arms”!

Thus, let’s take this opportunity to discuss modern “robotic arms” and catch the capital wave.

Intelligent Robotic Arms Emerge: Solutions to Technical Challenges

In this series, we will focus on several technical issues facing the promising “intelligent” robotic arms:

- One is the generalization problem in constructing a “base model” for robotic arms, together with motion control schemes in real scenarios.

- Another is the current combination with the trending embodied multimodal large models that fuse vision, language, and action.

- Finally, we will focus on exploring how robotic manipulation can approach human capabilities.

Of course, to further explore, a dedicated group has been established, with nearly 400 like-minded friends joining. Interested friends are welcome to join the discussion.

1. Embodied Multimodal Large Models

With the rapid development of embodied multimodal large models, big data, cloud computing, and other technologies, robotic arms are undergoing a profound transformation from single-function to multifunctional, from fixed scenarios to flexible applications, moving towards smarter and more complex directions.

This brings new challenges and opportunities for algorithm technicians and the entire industry.

The combination of embodied multimodal large models and robotic arms effectively pushes the boundaries of robotic arms in executing complex tasks.

Modern intelligent robotic arms are expected to be deployed first in industrial manufacturing, flexible logistics, and commercial services, for example intelligent sorting and handling in automated factories and natural-language instruction execution for household service robots.

The “application field” of robotic arms is further expanded.

But! It is well known that the combination of multimodal large models and embodied intelligence has gradually moved up from the most direct level, perception, to more complex issues such as efficient planning, control, and reasoning.

How to effectively integrate and fine-tune multimodal information for specific robotic tasks, and how to enhance the efficient reasoning and manipulation capabilities of embodied multimodal large models, have become significant research directions.

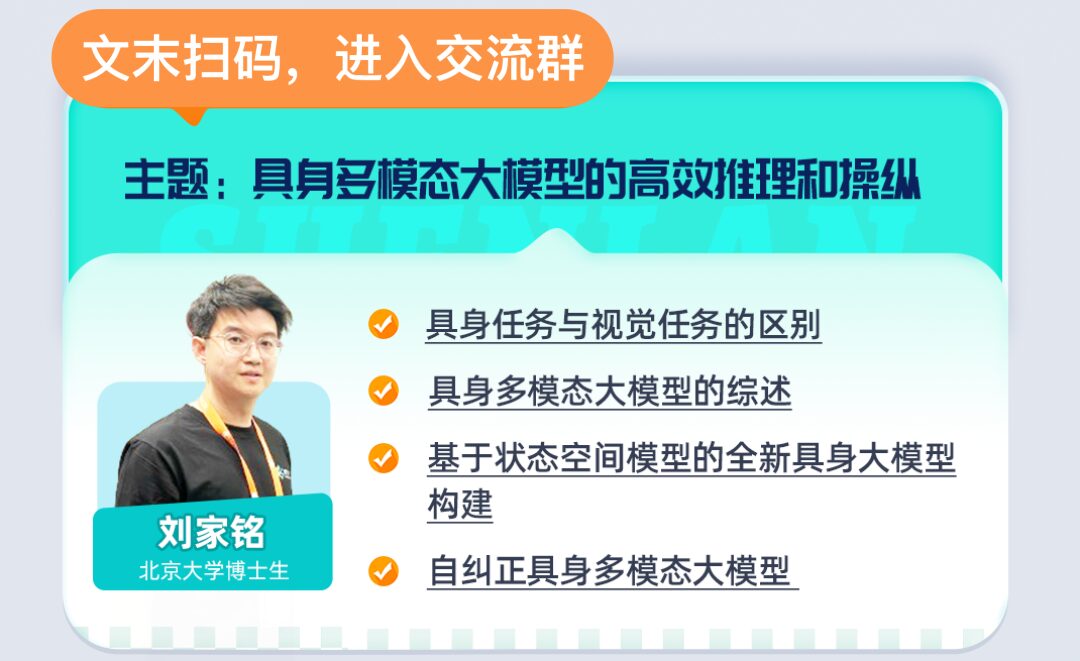

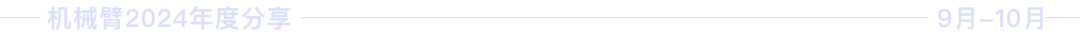

To address this issue, Peking University’s HMI Lab has developed the RoboMamba model, which integrates a visual encoder with an efficient state-space language model (Mamba) to build a new multimodal large model capable of reasoning on both visual common-sense tasks and robot-related tasks.
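For readers unfamiliar with the term, the sketch below illustrates the basic recurrence behind a state-space sequence model: a hidden state is updated once per input step, so the cost grows linearly with sequence length. It is a generic, scalar toy example and not RoboMamba's or Mamba's actual implementation. The function name `ssm_scan` and the fixed parameters `a`, `b`, `c` are assumptions made for illustration; Mamba itself uses learned, input-dependent ("selective") parameters over vector-valued states.

```python
from typing import List


def ssm_scan(a: float, b: float, c: float, xs: List[float]) -> List[float]:
    """Run a scalar linear state-space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)

    Hypothetical toy parameters; real models learn these values.
    """
    h = 0.0  # initial hidden state
    ys: List[float] = []
    for x in xs:
        h = a * h + b * x  # fold the new input into the running state
        ys.append(c * h)   # emit an output from the current state
    return ys


# An impulse at the first step decays geometrically through the state.
outputs = ssm_scan(a=0.5, b=1.0, c=2.0, xs=[1.0, 0.0, 0.0])
print(outputs)
```

Because each step only touches the previous state, the whole sequence is processed in a single pass, which is the efficiency property the term "efficient state-space language model" refers to.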

In September, the first author of this work, Dr. Liu Jiaming from Peking University (winner of the ICCV 2023 Continuous Generalization Learning Competition, ICCV 2023 Robust Multitask Learning Competition, and CVPR 2023 Autonomous Driving Occupancy Prediction Competition bronze medal), will speak on efficient reasoning and manipulation of robots with embodied multimodal large models. Readers interested in Dr. Liu’s research can join the group for discussion, and Dr. Liu will later give a public online talk to explore this cutting-edge issue together.

“Robot Grasping and Manipulation” Theme Exchange Month🦾

2. Generalizable Robot Manipulation

In the field of robotics, achieving generalizable robot manipulation has always been a hot research topic and challenge.

Generalizable robot manipulation refers to the ability of robots to autonomously understand and execute tasks based on existing knowledge and experience when facing unseen objects, environments, or tasks. The emergence of kinematics-guided prompt learning frameworks and embodied large models (such as ManipLLM) enables robots to understand complex instructions, predict physical actions, and execute tasks in real environments.

However, achieving generalizable robot manipulation often relies on massive data for imitation learning, and collecting enough robot data in real scenarios is prohibitively expensive.

On the “generalizable robot manipulation problem,” Dr. Xia Wenkai from the Gaoling School of Artificial Intelligence at Renmin University of China will give a focused talk. (A way to join is provided at the end of the article.)

3. How Can Robots’ Manipulation Capabilities Approach Humans?

Over the past few decades, robotics technology has made significant progress, and many commercial robot platforms have been put into use, showcasing high precision and repeatability.

However, this outstanding precision often fails to effectively translate into manipulation capabilities for many everyday objects. Currently, the object manipulation performance of robots still cannot compare with that of humans.

Dr. Zhao Chao from Hong Kong University of Science and Technology believes that the breakthrough in future object manipulation lies in achieving a form of “imprecise dexterity”—even when control, motion, and perception are imprecise, robots can still accomplish dexterous manipulation through robust and adaptive behaviors.

This issue also deserves in-depth discussion and analysis by technical researchers. Dr. Zhao Chao (mentored by Professor Chen Qifeng) will give a talk on this topic.

(This group will run for about 30 days and has invited 7 guests for in-depth talks. It is currently free to join.)

Delving into Robotic Arms, Aligning with New Technological Innovations

Thanks to the enormous potential market, broad application fields, mature technical systems, and strong policy support exhibited by embodied intelligence in emerging technological fields, the “robotic arm” track is heating up again, with its commercialization prospects even surpassing the highly anticipated humanoid robots.

Thus, this month, the Deep Blue Academy has specially organized a “robotic arm” theme month. In addition to setting up a discussion group for everyone, six researchers from different subfields have been invited to hold 7 live exchange sessions to promote domestic research and understanding of this theme. (Free to join)

The sessions cover: generalizable object manipulation strategies based on base models, interactive robotic arm motion control, completing complex robotic manipulation using a single RGB camera, efficient reasoning and manipulation of embodied multimodal large models, and ultimately how robotic manipulation capabilities can approach humans.

(Scan to enter the group⬆️)

We believe that excellent people will eventually meet.

See you in our group! Let’s chat during the live session!