Recent advancements in artificial intelligence (AI), edge computing, and the adoption of Internet of Things (IoT) devices have converged to create opportunities for edge AI.

These opportunities were previously unimaginable: helping radiologists identify diseases, driving cars on highways, and even helping us pollinate plants.

The concept of edge computing, which is being discussed and implemented by countless analysts and companies, can be traced back to the 1990s when content delivery networks were created to deliver web and video content from edge servers deployed near users.

Today, almost every business has work functions that can benefit from the adoption of edge AI. In fact, edge applications are driving the next wave of AI to improve our lives at home, work, school, and on the road.

This article explains what edge AI is, its benefits, how it works, example use cases, and the relationship between edge computing and cloud computing.

What is Edge AI?

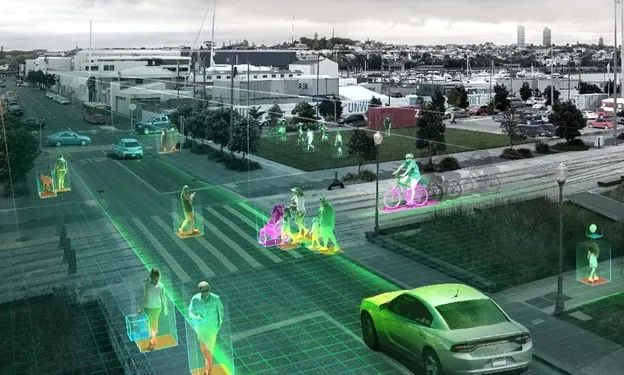

Edge AI refers to the deployment of AI applications in devices throughout the physical world. It is called “edge AI” because AI computations are performed near the user at the edge of the network, close to where the data resides, rather than being centralized in cloud computing facilities or private data centers.

Since the internet has global reach, the edge of the network can be virtually anywhere. It can be a retail store, factory, or hospital, or the devices all around us, such as traffic lights, phones, and automated machines.

Why Now for Edge AI?

Businesses across various industries are seeking to enhance automation to improve processes, efficiency, and safety.

To assist them, computer programs need to identify patterns and repeatedly execute tasks safely. However, the world is unstructured, and the range of tasks performed by humans covers an infinite variety of environments that cannot be fully described in programs and rules.

Advancements in edge AI have opened opportunities for machines and devices to operate with the “intelligence” of human cognition, no matter where they are located. AI-powered applications learn to perform similar tasks under different circumstances, much as people do in real life.

The rise of deploying AI models at the edge stems from recent advancements in three areas:

- Maturity of Neural Networks: Neural networks and related AI infrastructure have finally matured enough to allow for generalized machine learning. Businesses are learning how to successfully train AI models and deploy them in production at the edge.

- Advancements in Computing Infrastructure: Running AI at the edge requires powerful distributed computing capabilities. Recent advances in highly parallel GPUs have made them well suited to executing neural networks.

- Adoption of IoT Devices: The widespread adoption of IoT has driven explosive growth in data volumes. With the sudden ability to collect data across every aspect of a business (from industrial sensors, smart cameras, robots, and more), we now have the data and devices needed to deploy AI models at the edge. Furthermore, 5G provides faster, more stable, and more secure connectivity for IoT.

Why Deploy AI at the Edge?

Because AI algorithms can understand unstructured information in the form of language, vision, sound, smell, temperature, faces, and other analog signals, they are particularly useful in places occupied by end users with real-world problems. It is impractical, if not impossible, to deploy all of these AI applications in a centralized cloud or enterprise data center because of latency, bandwidth, and privacy constraints.

The benefits of edge AI include:

- Intelligence: AI applications are more powerful and flexible than traditional applications, which can only respond to inputs the programmer anticipated. In contrast, an AI neural network is trained not to answer one specific question but to answer a particular type of question, even when the question itself is new. Without AI, applications could not handle the practically infinite diversity of inputs such as text, speech, or video.

- Real-Time Insights: Because edge technology analyzes data locally rather than in a distant cloud, it avoids long-distance communication delays and can respond to user needs in real time.

- Cost Reduction: By bringing processing power closer to the edge, applications require less internet bandwidth, significantly reducing network costs.

- Increased Privacy: AI can analyze real-world information without ever exposing it to a human, greatly increasing privacy for anyone whose appearance, voice, medical images, or other personal information needs to be analyzed. Edge AI enhances privacy further by keeping the data local and uploading only analyses and insights to the cloud. Even if some data is uploaded for training purposes, it can be anonymized to protect user identities. By protecting privacy, edge AI simplifies the challenges of data compliance.

- High Availability: Decentralization and offline capabilities make edge AI more robust, because processing data does not require internet access. This provides higher availability and reliability for mission-critical, production-grade AI applications.

- Continuous Improvement: AI models become increasingly accurate as they are trained on more data. When an edge AI application encounters data it cannot process accurately or confidently, it typically uploads that data so the model can be retrained on it. Thus, the longer a model is in production at the edge, the more accurate it becomes.
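The bandwidth and privacy benefits above can be made concrete with some rough arithmetic. The sketch below compares a camera that streams raw video to the cloud with one that analyzes video locally and uploads only small per-event summaries. Every number in it (the 4 Mbps bitrate, 60 events per hour, 500 bytes per event) is an illustrative assumption, not a measurement.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def raw_stream_bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes uploaded per day if the camera streams raw video to the cloud."""
    bytes_per_second = bitrate_mbps * 1_000_000 / 8
    return bytes_per_second * SECONDS_PER_DAY


def insight_bytes_per_day(events_per_hour: int, bytes_per_event: int) -> int:
    """Bytes uploaded per day if only per-event summaries leave the device."""
    return events_per_hour * 24 * bytes_per_event


# Assumed workload: one 4 Mbps camera that detects 60 events per hour,
# each summarized in a 500-byte message.
raw = raw_stream_bytes_per_day(4.0)
insights = insight_bytes_per_day(60, 500)

print(f"raw video: {raw / 1e9:.1f} GB/day")
print(f"insights:  {insights / 1e6:.2f} MB/day")
print(f"reduction: {raw / insights:,.0f}x")
```

Under these assumptions the raw stream comes to 43.2 GB per day, while the summaries total 0.72 MB per day. The exact ratio depends entirely on the workload, but the gap illustrates why local analysis reduces network costs and keeps most raw data on the device.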

How Does Edge AI Work?

In order for machines to see, perform object detection, drive cars, understand speech, talk, walk, or otherwise mimic human skills, they need to functionally replicate human intelligence.

AI uses a data structure called a deep neural network (DNN) to replicate human cognition. DNNs are trained to answer specific types of questions by being shown many examples of that type of question along with the correct answers.

This training process, known as “deep learning,” typically runs in a data center or the cloud, because training an accurate model requires massive amounts of data and collaboration among data scientists to configure the model. Once trained, the model becomes a “reasoning engine” (more commonly called an inference engine) capable of answering real-world questions.
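To illustrate the idea of learning from examples paired with correct answers, here is a minimal sketch that trains a single artificial neuron (a perceptron). This is far simpler than a deep neural network and is only a stand-in for it. The temperature data, the labels, and the function names `train` and `infer` are all invented for illustration.

```python
# Labeled examples: (sensor temperature in degrees C, 1 if overheated else 0).
# The data is made up; real training sets are vastly larger.
EXAMPLES = [(10, 0), (20, 0), (30, 0), (40, 0),
            (60, 1), (70, 1), (80, 1), (90, 1)]


def train(examples, learning_rate=0.1, max_epochs=1000):
    """Fit one neuron that answers 1 when weight * x + bias > 0."""
    weight, bias = 0.0, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for temperature, answer in examples:
            x = temperature / 100.0            # scale input to roughly [0, 1]
            guess = 1 if weight * x + bias > 0 else 0
            error = answer - guess             # -1, 0, or +1
            if error != 0:                     # nudge parameters toward the answer
                weight += learning_rate * error * x
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:                      # every example answered correctly
            break
    return weight, bias


def infer(model, temperature):
    """Use the trained parameters to answer a new question."""
    weight, bias = model
    return 1 if weight * (temperature / 100.0) + bias > 0 else 0


model = train(EXAMPLES)
# Neither 25 nor 75 appeared in the training examples.
print(infer(model, 25), infer(model, 75))      # prints "0 1"
```

The split between `train` and `infer` mirrors the split described above: training is the expensive step that usually happens centrally, while the resulting parameters are small and cheap to evaluate.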

In edge AI deployments, the reasoning engine runs on a computer or device in far-flung locations such as factories, hospitals, cars, satellites, and homes. When the AI runs into a problem, the troublesome data is commonly uploaded to the cloud to further train the original AI model, which at some point replaces the reasoning engine at the edge. This feedback loop plays a crucial role in improving model performance: once deployed, edge AI models keep getting smarter.
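The feedback loop described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not a real deployment API: the `EdgeDevice` class, the 0.8 confidence threshold, and the `make_model` stand-in classifier are all hypothetical, and the cloud retraining step is only simulated by swapping in a new model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)


def make_model(boundary: float) -> Callable[[float], Prediction]:
    """Hypothetical stand-in for a trained model: labels a temperature reading
    and reports lower confidence for readings close to its decision boundary."""
    def model(reading: float) -> Prediction:
        label = "hot" if reading >= boundary else "normal"
        confidence = min(1.0, 0.5 + abs(reading - boundary) / 20.0)
        return label, confidence
    return model


@dataclass
class EdgeDevice:
    model: Callable[[float], Prediction]
    confidence_threshold: float = 0.8            # assumed cut-off
    upload_queue: List[float] = field(default_factory=list)

    def infer(self, reading: float) -> str:
        """Answer locally; queue low-confidence inputs for upload to the cloud."""
        label, confidence = self.model(reading)
        if confidence < self.confidence_threshold:
            self.upload_queue.append(reading)
        return label

    def replace_model(self, new_model: Callable[[float], Prediction]) -> None:
        """Swap in a retrained model and discard data that was already sent."""
        self.model = new_model
        self.upload_queue.clear()


device = EdgeDevice(model=make_model(boundary=50.0))
labels = [device.infer(t) for t in (20.0, 48.0, 55.0, 90.0)]
print(labels)                # ['normal', 'normal', 'hot', 'hot']
print(device.upload_queue)   # [48.0, 55.0] -> the "troublesome" readings

# Pretend the cloud retrained on the uploaded readings and shipped an update.
device.replace_model(make_model(boundary=52.0))
```

Every reading gets an immediate local answer, and only the uncertain ones leave the device. How confidence is measured and when a retrained model is rolled out are design decisions the article does not specify.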

What Are Some Examples of Edge AI Applications?

AI is the most powerful technological force of our time. We are now in an era where AI is radically transforming the largest industries in the world.

In sectors such as manufacturing, healthcare, financial services, transportation, and energy, edge AI is driving new business outcomes across various fields, such as:

Intelligent Forecasting in Energy: For critical industries like energy, where an interrupted supply threatens the health and welfare of the general public, intelligent forecasting is crucial. Edge AI models help combine historical data, weather patterns, grid operating conditions, and other information to create complex simulations that inform more efficient energy production, distribution, and management for customers.

Predictive Maintenance in Manufacturing: Sensor data can be used to detect anomalies early and predict when a machine will fail. Sensors on the equipment scan for flaws and alert management when a machine needs repair, so the issue can be addressed early and costly downtime avoided.

AI-Powered Instruments in Healthcare: Modern medical instruments at the edge are becoming AI-enabled, using ultra-low-latency streaming of surgical video to support minimally invasive surgeries and on-demand insights.

Smart Virtual Assistants in Retail: Retailers aim to improve digital customer experiences by introducing voice ordering to replace text-based searches. With voice ordering, shoppers can easily search for products, inquire about product information, and place orders online using smart speakers or other smart mobile devices.
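As a rough illustration of the predictive maintenance example above, the sketch below flags sensor readings that deviate sharply from the recent past. A simple rolling z-score stands in for a trained AI model here. The `VibrationMonitor` class, the window size, the threshold, and the sample readings are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags readings that sit far outside the recent window of normal values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, warmup: int = 5):
        self.readings = deque(maxlen=window)   # most recent normal readings
        self.z_threshold = z_threshold
        self.warmup = warmup                   # readings needed before judging

    def observe(self, value: float) -> bool:
        """Return True if the reading looks anomalous."""
        anomalous = False
        if len(self.readings) >= self.warmup:
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline, so a single spike
            # does not distort later comparisons.
            self.readings.append(value)
        return anomalous


monitor = VibrationMonitor()
samples = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 5.0, 1.0]   # invented data
flags = [monitor.observe(s) for s in samples]
print(flags)   # only the 5.0 spike is flagged
```

Running a check like this on the device means an alert can be raised immediately, even without a network connection, while only the flagged readings need to be sent onward.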

What Role Does Cloud Computing Play in Edge Computing?

AI applications can run in data centers in the public cloud or on-site at the edge of the network near users. The advantages offered by cloud computing and edge computing can be combined when deploying edge AI.

The cloud provides benefits related to infrastructure costs, scalability, high utilization, resilience to server failure, and collaboration. Edge computing provides faster response times, lower bandwidth costs, and resilience to network failure.

The cloud can support edge AI deployments in several ways:

- The cloud can run the model while it is being trained.

- The cloud continues to run the model as it is retrained with data from the edge.

- The cloud can run AI reasoning engines that supplement the models in the field when high computational power matters more than response time. For example, a voice assistant may respond to its name on the device but send complex requests to the cloud for processing.

- The cloud serves up the latest versions of AI models and applications.

- The same edge AI often runs across a fleet of devices in the field, with supporting software in the cloud.
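The voice assistant example in the list above can be sketched as a small routing function. This is a sketch under stated assumptions: the wake word, the set of commands handled on the device, and the `handle_on_device` and `send_to_cloud` helpers are hypothetical placeholders. A real assistant would run speech models at both tiers, not string matching.

```python
from typing import Optional

WAKE_WORD = "assistant"                                   # hypothetical wake word
LOCAL_COMMANDS = {"stop", "volume up", "volume down"}     # assumed on-device skills


def handle_on_device(command: str) -> str:
    """Placeholder for a small model running on the edge device."""
    return f"edge: {command}"


def send_to_cloud(command: str) -> str:
    """Placeholder for a network call to a larger cloud-hosted model."""
    return f"cloud: {command}"


def route(utterance: str, cloud_available: bool = True) -> Optional[str]:
    """Decide where an utterance is processed."""
    text = utterance.lower().strip()
    if not text.startswith(WAKE_WORD):
        return None                                       # not addressed to us
    command = text[len(WAKE_WORD):].strip(" ,")
    if command in LOCAL_COMMANDS:
        return handle_on_device(command)                  # low latency, works offline
    if cloud_available:
        return send_to_cloud(command)                     # more compute, more delay
    return "edge: sorry, that request needs a connection"


print(route("Assistant, stop"))
print(route("Assistant, what's the weather in Paris"))
print(route("Assistant, what's the weather in Paris", cloud_available=False))
```

Wake-word detection and simple commands stay on the device, where response time and availability matter most. Open-ended requests go to the cloud, where more compute is available.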

Future of Edge AI

With the commercial maturity of neural networks, the proliferation of IoT devices, parallel computing, and advancements in 5G, there is now powerful infrastructure for generalized machine learning. This enables businesses to leverage this tremendous opportunity to bring AI into their applications and act based on real-time insights while reducing costs and increasing privacy. We are only in the early stages of edge AI, and the potential applications seem endless.

Author: Tiffany Yeung is the product marketing manager for NVIDIA’s edge and enterprise computing solutions.