This article explains the principles of HTTP long connections (persistent connections) and the related configuration in the Nginx HTTP module, mainly the keepalive directives.

1. HTTP Long Connections

1.1 Prerequisites

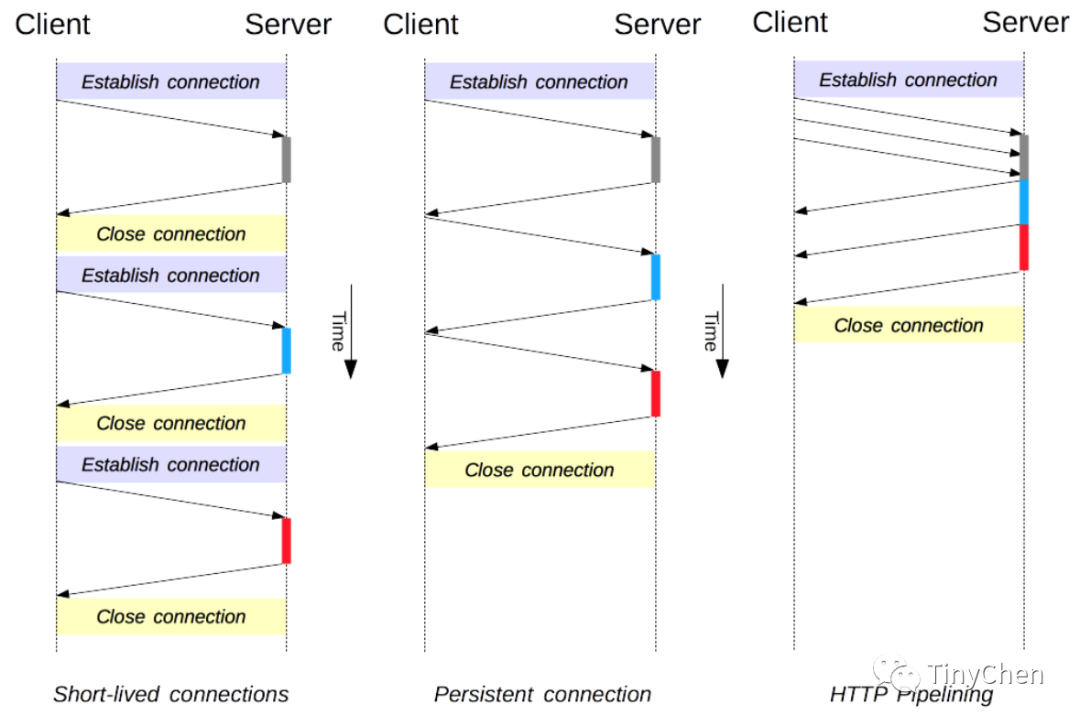

Connection management is a key topic in HTTP: opening and maintaining connections greatly affects the performance of websites and web applications. HTTP/1.x has three connection models: short connections, long connections, and HTTP pipelining. Before explaining these three models, we need to clarify some prerequisite knowledge:

•HTTP is an application-layer (Layer 7) protocol whose transport layer (Layer 4) is TCP. This means that HTTP's long and short connections are, in essence, long and short TCP connections;

•HTTP is a stateless, connection-oriented protocol (it runs over TCP, which provides connection-oriented, reliable transmission). Stateless means the protocol keeps no memory of previous transactions: the server does not know the client's state. It does not mean that HTTP cannot keep a TCP connection open, nor that HTTP uses UDP (connectionless);

•Establishing a TCP connection requires a three-way handshake, and closing one requires a four-way handshake. Since this is basic computer-networking knowledge, we will not elaborate on the principles here.

Next, we will begin the explanation.

1.2 HTTP Short Connection Model

In the early days, HTTP used a simple model to handle connections. These connections have a short lifecycle: a new connection is created for each request and closed as soon as the response is received. As a result, every request pays for a full three-way handshake and four-way teardown. In the early Internet, webpages had far fewer resources than they do today, and many were simple static pages, so this model was acceptable. After the client retrieves the resources it needs, it disconnects and no longer occupies server resources.

To illustrate TCP's three-way handshake and four-way handshake with an analogy:

Three-way handshake:

A→B: Are we having dinner together after work tonight?

B→A: Sure, let’s have dinner together after work tonight.

A→B: Okay, I know you accepted my invitation to dinner after work tonight.

Then they go to dinner, and when it’s time to go home:

Four-way handshake:

A→B: I’m done eating and getting ready to leave.

B→A: Wait a moment, I’m almost done.

B→A: Okay, I’m done; we can leave now.

A→B: Okay, I know you’re done, and we can leave now.

Then they each go home after dinner.

The short connection model is the earliest HTTP model and the default in HTTP/1.0. Each HTTP request is completed on its own connection. This means a TCP handshake happens before every HTTP request, and these handshakes are serialized. The TCP handshake itself is time-consuming. A TCP connection also adapts to its load and becomes more efficient the longer it stays in use (a warm connection). Short connections never benefit from this, so every request pays the cost of a new, cold connection, and performance suffers.
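To put rough numbers on this, here is a back-of-the-envelope model. It assumes each TCP handshake costs exactly one round-trip time (RTT) and each request/response exchange costs one RTT. It ignores server processing, TLS, TCP slow start, and the closing handshake. The function names are illustrative only.

```python
# Back-of-the-envelope latency model (assumption: a TCP handshake and a
# request/response exchange each cost exactly 1 RTT; everything else ignored).

HANDSHAKE_RTTS = 1  # SYN / SYN-ACK before the first request can be sent
EXCHANGE_RTTS = 1   # request out, response back


def short_connection_rtts(num_requests: int) -> int:
    """Short model: every request opens its own connection first."""
    return num_requests * (HANDSHAKE_RTTS + EXCHANGE_RTTS)


def long_connection_rtts(num_requests: int) -> int:
    """Long model: one handshake, then every request reuses the connection."""
    return HANDSHAKE_RTTS + num_requests * EXCHANGE_RTTS


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(f"{n:>3} requests: short={short_connection_rtts(n)} RTT, "
              f"long={long_connection_rtts(n)} RTT")
```

Under these assumptions, 10 requests cost 20 RTTs over short connections but only 11 RTTs over one long connection.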

In HTTP/1.0, the short connection model is used if the Connection header is absent or set to close. To enable the long connection model in HTTP/1.0, the Connection: Keep-Alive header must be specified, but this is not recommended.

In HTTP/1.1, the long connection model is used by default, and the short connection model is only used when Connection is set to close. The header does not need to be declared. It is often still added as a safeguard in case the connection has to fall back to HTTP/1.0.
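These header rules can be condensed into a small decision function. This is a simplified sketch of the persistence rules in RFC 7230, section 6.3. Proxy-specific cases are left out, and version strings are assumed to look like "HTTP/1.1". The function is_persistent is a hypothetical helper, not part of any library.

```python
from typing import Optional


def is_persistent(http_version: str, connection: Optional[str]) -> bool:
    """Return True if the connection should stay open after this message.

    Simplified from RFC 7230, section 6.3; proxy-specific rules are omitted.
    Assumes version strings of the form "HTTP/<major>.<minor>".
    """
    # The Connection header carries a comma-separated list of
    # case-insensitive options, e.g. "keep-alive, Upgrade".
    options = set()
    if connection:
        options = {opt.strip().lower() for opt in connection.split(",")}

    if "close" in options:
        return False  # an explicit "close" always wins

    # "HTTP/1.1" -> (1, 1)
    major, minor = map(int, http_version.split("/", 1)[1].split("."))
    if (major, minor) >= (1, 1):
        return True  # HTTP/1.1 and later: persistent by default
    if (major, minor) == (1, 0):
        return "keep-alive" in options  # HTTP/1.0: only with Connection: keep-alive
    return False  # older versions: one request per connection


if __name__ == "__main__":
    print(is_persistent("HTTP/1.1", None))
    print(is_persistent("HTTP/1.0", "Keep-Alive"))
```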

1.3 HTTP Long Connection Model

As webpages request more and more resources, the short connection model has become clearly inadequate. It has two major problems. First, creating each new connection takes noticeable time (the three-way handshake). Second, a TCP connection only performs well after it has been in use for a while (a warm connection). To address these problems, HTTP/1.1 made long connections the default and introduced the pipelining model.

Before HTTP/1.1, long connections were also known as keep-alive connections.

A long connection will be maintained for a period, repeatedly used to send a series of requests, saving the time required for establishing new TCP connection handshakes, and can take advantage of TCP’s performance enhancement capabilities. Of course, this connection will not be kept open indefinitely: it will be closed after being idle for a period (the server can use the Keep-Alive protocol header to specify a minimum connection keep-alive time).
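Connection reuse can be observed locally with Python's standard library. The sketch below starts a throwaway http.server on 127.0.0.1 that speaks HTTP/1.1 and records the client-side port of every request it serves. http.client.HTTPConnection then sends three requests over one connection. If the connection is kept alive, all three requests arrive from the same port. RecordingHandler and run_demo are illustrative names only.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Client-side port of the TCP connection that carried each request.
seen_client_ports = []


class RecordingHandler(BaseHTTPRequestHandler):
    # Speak HTTP/1.1 so the server keeps the connection open between requests.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        seen_client_ports.append(self.client_address[1])
        body = b"ok"
        self.send_response(200)
        # Content-Length marks where the body ends without closing the connection.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def run_demo(num_requests: int = 3) -> list:
    seen_client_ports.clear()
    # Port 0 asks the OS for any free port on the loopback interface.
    server = ThreadingHTTPServer(("127.0.0.1", 0), RecordingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        for _ in range(num_requests):
            conn.request("GET", "/")
            response = conn.getresponse()
            response.read()  # drain the body so the connection can be reused
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return list(seen_client_ports)


if __name__ == "__main__":
    ports = run_demo()
    print(ports, "-> one connection" if len(set(ports)) == 1 else "-> several connections")
```

Each response carries a Content-Length header, and the client reads each body fully before sending the next request. Without Content-Length (or chunked encoding), the end of the body could only be signalled by closing the connection.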

Returning to the dinner analogy:

Three-way handshake:

A→B: Shall we have dinner, go shopping, and watch a movie after work tonight?

B→A: Sure, let's have dinner, go shopping, and watch a movie tonight.

A→B: Okay, I know you accepted my invitation to dinner, shopping, and a movie tonight.

Then they have dinner. This time they don't immediately perform the four-way handshake to disconnect; instead, A and B also go shopping and watch a movie. This is equivalent to transferring multiple resources over the established connection without another three-way handshake.

Four-way handshake omitted here.

Finally, they each go home.

Long connections also have drawbacks. As mentioned earlier, they occupy resources: even an idle connection still consumes server resources, which makes the server more vulnerable to DDoS attacks. Long connections are worthwhile only because repeatedly setting up connections with three-way handshakes costs more than keeping a connection open. When the server is under high load or a DDoS attack, it can fall back to short connections or close idle connections quickly, freeing resources and improving performance.

1.4 HTTP Pipelining Model

The pipelining model is much more complex to implement, its benefits are modest, and it raises various compatibility issues, so it is not enabled by default. In HTTP/2, pipelining has been superseded by better mechanisms such as multiplexing.

By default, HTTP requests are sent sequentially: the next request is sent only after the response to the current one has been received. Because of network latency and bandwidth limits, the next request may wait a long time before it reaches the server. Pipelining sends successive requests over the same long connection without waiting for their responses, which avoids this latency. In theory, performance can also improve because two HTTP requests may be packed into a single TCP segment. Even though HTTP requests keep growing in size, a typical TCP MSS (Maximum Segment Size) is still large enough to hold several simple requests.
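The latency difference can be sketched with a simple timing model. It makes three assumptions: a fixed RTT, a server that handles requests one at a time and answers them in order, and no bandwidth or transmission-time effects. Times are in arbitrary units, and the function names are hypothetical.

```python
from itertools import accumulate


def sequential_finish_times(rtt, service_times):
    """Long connection without pipelining: the next request is sent only
    after the previous response has arrived, so each request costs a full
    RTT plus its service time."""
    return list(accumulate(rtt + s for s in service_times))


def pipelined_finish_times(rtt, service_times):
    """Pipelining: all requests are sent back to back; the server handles
    them one at a time and replies in request order, so only one RTT is
    paid in total (transmission time is ignored)."""
    return [rtt + done for done in accumulate(service_times)]


if __name__ == "__main__":
    rtt, service = 100, [10, 10, 10]
    print("sequential:", sequential_finish_times(rtt, service))
    print("pipelined: ", pipelined_finish_times(rtt, service))
```

With an RTT of 100 and three requests that each take 10, the third response arrives at 330 without pipelining and at 130 with it.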

Not all HTTP requests can be pipelined. Only requests using idempotent methods, such as GET, HEAD, PUT, and DELETE, can be pipelined, because only they can be safely replayed. When a failure occurs, the contents of the pipeline must be resent, so retrying them must be harmless and as cheap as possible. For other requests, the plain long connection model is the better choice.
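One conservative way a client could apply this rule is shown below. It pipelines only runs of idempotent requests and sends any non-idempotent request (such as POST) on its own, waiting for that response before continuing. This is a hypothetical batching policy for illustration, not how any particular browser or library behaves. The method set follows the idempotent methods defined in RFC 7231, section 4.2.2.

```python
# Idempotent methods defined in RFC 7231, section 4.2.2.
IDEMPOTENT_METHODS = {"GET", "HEAD", "PUT", "DELETE", "OPTIONS", "TRACE"}


def pipeline_batches(methods):
    """Group requests into batches that may be pipelined together.

    Hypothetical, conservative policy: consecutive idempotent requests share
    a batch; a non-idempotent request is sent alone and must receive its
    response before the next batch starts.
    """
    batches, current = [], []
    for method in methods:
        if method.upper() in IDEMPOTENT_METHODS:
            current.append(method)
        else:
            if current:
                batches.append(current)  # flush the idempotent run so far
                current = []
            batches.append([method])     # non-idempotent: never pipelined
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    print(pipeline_batches(["GET", "GET", "POST", "GET", "HEAD"]))
```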

Implementing pipelining correctly is complex. The size of the resources in transit, the effective RTT, and the effective bandwidth all determine how much pipelining actually helps. Without knowing them, important messages may be delayed behind less important ones, and what counts as important can even change during page layout! As a result, HTTP pipelining brings only marginal improvements in most cases. Pipelining is also subject to the head-of-line (HOL) blocking problem.

Excerpt from Wikipedia:

Head-of-line blocking (HOL blocking) is a performance-limiting phenomenon in the realm of computer networks[1]. It occurs when the first packet in a queue (the head) is blocked, causing the entire queue of packets to be blocked. For example, it may occur in buffered input switches, due to out-of-order transmission, or in cases of multiple requests in HTTP pipelining.
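In HTTP/1.1 pipelining, head-of-line blocking comes from the rule that responses must be returned in the same order as the requests. The sketch below assumes the server has already computed each response by a given ready time (in arbitrary units), for example by processing requests in parallel. It shows when each response can actually be sent if delivery must stay in order. in_order_delivery is a hypothetical name.

```python
def in_order_delivery(ready_times):
    """Return when each response can actually be sent, given that HTTP/1.1
    pipelining requires responses to leave in request order."""
    delivered, latest = [], 0
    for ready in ready_times:
        # A response cannot overtake an earlier, slower one.
        latest = max(latest, ready)
        delivered.append(latest)
    return delivered


if __name__ == "__main__":
    ready = [300, 20, 30]  # the first request is slow
    print("in order (pipelining):", in_order_delivery(ready))
    print("as soon as ready:     ", ready)
```

Here a slow first response (ready at 300) holds back two responses that were ready at 20 and 30, so all three leave at 300. HTTP/2 multiplexing removes this ordering constraint at the HTTP layer, so each response could be sent as soon as it is ready.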

Returning to the dinner analogy, this time A sends three requests to B at once during the handshake:

Three-way handshake:

A→B: Shall we have dinner, go shopping, and watch a movie after work tonight?

B→A: Sure, let's have dinner, go shopping, and watch a movie tonight.

A→B: Okay, I know you accepted my invitation to dinner, shopping, and a movie tonight.

In fact, this carries significant risk.

With the long connection model, A can decide whether to go shopping and to the movie based on B's reaction during dinner. In other words, if the connection is still alive after one set of data has been transmitted, it can keep transmitting. If the connection suddenly drops or becomes unstable, it may need to be re-established. (If B is unhappy during dinner and does not want to go shopping or to the movie, they can save those for next time.)

With the pipelining model, however, A sends all three requests at once. Sending them together is convenient, since several requests can be packed into one TCP segment. But nobody knows what will happen during dinner, shopping, and the movie, and the longer the sequence, the more can go wrong. For example, B may be on a diet and only want to go shopping and see a movie, yet those plans are stuck waiting behind dinner, which comes first (the HOL problem).

Finally, here is a comparison image showing the differences between the three models:

2. The Keepalive Directive in Nginx

When Nginx is configured as a proxy server, supporting HTTP long connections requires two long connections: from the client to Nginx, and from Nginx to the upstream server. In this setup, Nginx acts as the server for the client and as the client for the upstream server.

Having understood the principles above, the keepalive directive in Nginx is very easy to understand. There are three main directives that we will explain one by one:

2.1 keepalive

Syntax: keepalive connections;

Default: —

Context: upstream

This directive appeared in version 1.1.4.

Configured in the upstream block, this directive activates the cache for connections to upstream servers. The connections parameter sets the maximum number of idle keepalive connections to the upstream servers that each Nginx worker process keeps in its cache. When this number is exceeded, the least recently used connections are closed.

•For HTTP, the proxy_http_version directive should be set to "1.1", and the Connection header field should be cleared.

•For FastCGI servers, fastcgi_keep_conn must be set for keepalive connections to work.

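```nginx
upstream http_backend {
    server 127.0.0.1:8080;

    # Keep up to 16 idle connections per worker process in the cache
    keepalive 16;
}

# Referenced by fastcgi_pass below, so it must be defined too
# (example address; adjust to your FastCGI server)
upstream fastcgi_backend {
    server 127.0.0.1:9000;
    keepalive 8;
}

server {
    location /http/ {
        proxy_pass http://http_backend;

        # Keepalive to the upstream requires HTTP/1.1
        proxy_http_version 1.1;

        # Clear the Connection header, which Nginx otherwise sets to "close"
        proxy_set_header Connection "";
    }

    location /FastCGI/ {
        fastcgi_pass fastcgi_backend;

        # Ask the FastCGI server to keep the connection open
        fastcgi_keep_conn on;

        # Standard FastCGI parameters shipped with Nginx
        include fastcgi_params;
    }
}
```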

Note that the keepalive directive does not limit the total number of connections an Nginx worker process can open to the upstream servers. It only limits how many idle connections each worker keeps cached. Because every worker has its own cache, the total number of idle connections can reach this value multiplied by the number of worker processes. If the value is too high, idle connections can tie up upstream server resources and leave too little capacity for new connections. Set it small enough that the upstream servers can keep accepting new connections, taking the number of worker processes into account.
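The eviction rule of keepalive can be modelled as a small least-recently-used cache. The sketch below is a toy model of one worker's idle-connection cache, written for illustration; it is not Nginx's actual implementation, and the class and method names are hypothetical. Because each worker keeps its own cache, 4 worker processes with keepalive 16 could hold up to 64 idle connections in total.

```python
from collections import OrderedDict


class IdleConnectionCache:
    """Toy model of one worker's cache of idle upstream connections."""

    def __init__(self, max_idle: int):
        self.max_idle = max_idle   # plays the role of "keepalive <connections>"
        self.idle = OrderedDict()  # connection id -> None, least recently used first
        self.closed = []           # connections evicted (closed) by the cache

    def release(self, conn_id):
        """A request finished: park its connection in the idle cache."""
        self.idle[conn_id] = None
        self.idle.move_to_end(conn_id)  # now the most recently used
        while len(self.idle) > self.max_idle:
            evicted, _ = self.idle.popitem(last=False)  # least recently used
            self.closed.append(evicted)

    def acquire(self):
        """Take an idle connection for a new request (this sketch reuses the
        most recently released one), or return None if a new one must be opened."""
        if not self.idle:
            return None
        conn_id, _ = self.idle.popitem(last=True)
        return conn_id


if __name__ == "__main__":
    cache = IdleConnectionCache(max_idle=2)
    for conn in ("c1", "c2", "c3"):
        cache.release(conn)
    print("closed:", cache.closed)
    print("reused:", cache.acquire())
```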

2.2 keepalive_requests

Syntax: keepalive_requests number;

Default: keepalive_requests 1000; (100 before version 1.19.10)

Context: upstream

This directive appeared in version 1.15.3.

keepalive_requests sets the maximum number of requests that can be served through a single keepalive connection. Once that number is reached, the connection is closed. Closing connections periodically is necessary to free the memory allocated for each connection. Setting keepalive_requests too high is therefore not recommended, as it may lead to excessive memory usage.
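The following toy model shows how a per-connection request limit like keepalive_requests retires connections. Each connection counts the requests it has served and is closed once it reaches the limit, after which a new one is opened. The class and function names are hypothetical, and memory use is not modelled.

```python
class UpstreamConnection:
    """Toy keepalive connection that is retired after max_requests requests."""

    def __init__(self, max_requests: int):
        self.max_requests = max_requests  # plays the role of keepalive_requests
        self.served = 0

    def serve(self) -> bool:
        """Serve one request; return True if the connection may be reused."""
        self.served += 1
        return self.served < self.max_requests


def connections_opened(total_requests: int, max_requests: int) -> int:
    """Count how many connections a stream of requests ends up opening."""
    opened, conn = 0, None
    for _ in range(total_requests):
        if conn is None:
            conn = UpstreamConnection(max_requests)
            opened += 1
        if not conn.serve():
            conn = None  # limit reached: close it so its memory can be freed
    return opened


if __name__ == "__main__":
    print(connections_opened(2500, max_requests=1000))
```

For example, with a limit of 1000, a stream of 2500 requests ends up using 3 connections.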

2.3 keepalive_timeout

Syntax: keepalive_timeout timeout;

Default: keepalive_timeout 60s;

Context: upstream

This directive appeared in version 1.15.3.

Sets the timeout during which an idle keepalive connection between Nginx and an upstream server stays open. The official documentation gives 60s as the default, while the nginx.conf installed with Nginx (for example, version 1.17.9) contains keepalive_timeout 65;. These do not conflict. That line sits in the http block and belongs to the core HTTP module: it controls idle connections from clients to Nginx, not connections to upstream servers.
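An idle timeout in the spirit of keepalive_timeout can be sketched as follows: record when each connection was last used, and expire those that have been idle for at least the timeout. This is a simplified, hypothetical model, not Nginx's timer implementation. Time is passed in explicitly to keep it deterministic.

```python
class IdleTimeouts:
    """Toy model of an idle timeout for keepalive connections."""

    def __init__(self, timeout: float):
        self.timeout = timeout  # plays the role of keepalive_timeout, in seconds
        self.last_used = {}     # connection id -> time it last became idle

    def touch(self, conn_id, now: float):
        """Record that conn_id just finished a request and became idle."""
        self.last_used[conn_id] = now

    def expire(self, now: float):
        """Close (forget) connections idle for at least `timeout` seconds."""
        expired = [c for c, t in self.last_used.items() if now - t >= self.timeout]
        for conn_id in expired:
            del self.last_used[conn_id]
        return expired


if __name__ == "__main__":
    timeouts = IdleTimeouts(timeout=60)
    timeouts.touch("a", now=0)
    timeouts.touch("b", now=30)
    print(timeouts.expire(now=60))
```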

References

[1] Computer network, Wikipedia (Chinese): https://zh.wikipedia.org/wiki/计算机网络