The discussion of HTTP performance here is based on the simplest model, which is the HTTP performance of a single server. Of course, this also applies to large-scale load-balanced clusters, as such clusters are composed of multiple individual HTTP servers. Additionally, we exclude scenarios where the client or server itself is under heavy load or where different I/O models are used in the HTTP protocol implementation. We also ignore the DNS resolution process and the inherent flaws in web application development.

From the perspective of the TCP/IP model, HTTP runs on top of TCP, so HTTP performance largely depends on TCP performance. If HTTPS is involved, the TLS/SSL layer must also be considered. All else being equal, HTTPS carries more overhead than plain HTTP, because the TLS handshake adds round trips and encryption adds CPU work: each additional layer adds cost.

Under the above conditions, the most common factors affecting HTTP performance include:

- TCP connection establishment, which is the three-way handshake phase
- TCP slow start
- TCP delayed acknowledgment
- Nagle’s algorithm
- Accumulation of TIME_WAIT and port exhaustion
- Server-side port exhaustion
- Server-side HTTP process reaching the maximum number of open files

TCP Connection Establishment

Typically, if the network is stable, establishing a TCP connection does not take long, and the three-way handshake completes within a reasonable time frame. However, HTTP is stateless and, without keep-alive, uses short-lived connections: once an HTTP transaction completes, the TCP connection is closed. A single webpage usually has many resources, which means many HTTP transactions. Relative to the time a single HTTP transaction takes, repeating the three-way handshake for every one of them is expensive. Reusing existing connections reduces the number of TCP connections that must be established.
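To make the cost of repeated handshakes concrete, here is a minimal sketch in Python. It is a deliberately simplified model, not a measurement: it assumes one round trip per three-way handshake and one per request/response exchange, fetches resources one after another, and ignores TLS, slow start, and parallel connections. The function name is hypothetical.

```python
def page_load_round_trips(resource_count, reuse_connection):
    """Count network round trips for fetching resources sequentially.

    Assumed model: 1 round trip per TCP handshake, 1 per HTTP exchange.
    """
    if reuse_connection:
        # One handshake is shared by all requests on the same connection.
        handshakes = min(1, resource_count)
    else:
        # Every request pays for its own handshake.
        handshakes = resource_count
    return handshakes + resource_count


if __name__ == "__main__":
    print(page_load_round_trips(20, reuse_connection=False))  # 40
    print(page_load_round_trips(20, reuse_connection=True))   # 21
```

Under these assumptions, a page with 20 resources needs almost half as many round trips when the connection is reused.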

TCP Slow Start

As part of TCP congestion control, the transmission rate of a connection is limited during the initial phase after the connection is established. If data is delivered successfully, the rate is gradually increased; this is called TCP slow start. Slow start limits the number of IP packets that can be in flight at a given moment.

So why is there a slow start? The main reason is to prevent the network from collapsing under large bursts of data. Routers are a crucial part of data transmission on the internet; they are not infinitely fast, and many flows may reach them for routing at the same time. If the volume of data arriving at a router far exceeds the amount it can forward, the router drops packets once its buffers are exhausted. This is known as congestion, and when one router experiences it, many links can be affected, potentially leading to widespread collapse. Therefore, both parties in TCP communication need to perform congestion control, and slow start is one of the mechanisms for it.

Consider what would happen without it, given that TCP retransmits lost data. Suppose a router in the network drops a significant number of packets due to congestion; the sender's TCP stack detects the loss and starts retransmitting. The affected sender is not the only one: many TCP stacks begin retransmitting at once, which pushes even more packets into an already congested network and makes the problem worse.

From the above description, we can conclude that even in a normal network environment, establishing a TCP connection for each HTTP message will be affected by slow start. Since HTTP is a short connection that disconnects after one session, it can be imagined that when the client initiates an HTTP request and completes the retrieval of a resource on the webpage, the HTTP connection will disconnect before the TCP slow start process is fully completed. This means that subsequent resources on the webpage will need to establish new TCP connections, each of which will experience the slow start phase, which significantly impacts performance. Therefore, to improve performance, we can enable HTTP persistent connections, also known as keep-alive.
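The effect of slow start on short-lived connections can be illustrated with a rough simulation. This is a sketch under stated assumptions, not how any particular TCP stack behaves exactly: the congestion window starts at 10 segments (an assumed value, commonly used as the initial window), doubles every round trip, no packets are lost, the receiver window is never the limit, and each segment carries an assumed 1460 bytes of payload.

```python
import math


def slow_start_round_trips(response_bytes, mss=1460, initial_cwnd=10):
    """Round trips needed to deliver a response while cwnd doubles each RTT."""
    segments_left = math.ceil(response_bytes / mss)
    cwnd = initial_cwnd  # congestion window, counted in segments
    round_trips = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one window of segments per round trip
        cwnd *= 2              # idealized exponential growth, no loss
        round_trips += 1
    return round_trips


if __name__ == "__main__":
    for size in (14_600, 102_400, 1_048_576):
        print(size, "bytes ->", slow_start_round_trips(size), "round trips")
```

In this model a 100 KB response needs four round trips on a fresh connection. A connection that has already grown its window could deliver the same response in fewer round trips, which is the gain persistent connections aim for.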

Additionally, we know that TCP has the concept of a window, which exists on both the sender and receiver sides. The window keeps the exchange of packets between sender and receiver orderly and, on that basis, allows multiple packets to be sent before an acknowledgment arrives, which improves throughput. The window size can also be adjusted to prevent the sender from sending data faster than the receiver can accept it. The window resolves the rate mismatch between the two endpoints, but the data must also pass through other network devices, so how does the sender know the capacity of the network in between? This is where congestion control comes into play.

TCP Delayed Acknowledgment

First, we need to understand what acknowledgment means. It indicates that the sender sends a TCP segment to the receiver, and upon receipt, the receiver sends back an acknowledgment indicating receipt. If the sender does not receive this acknowledgment within a certain time, the TCP segment needs to be retransmitted.

Acknowledgments are small, and a TCP segment that carries data can carry an acknowledgment as well. Therefore, to avoid sending too many small packets, the receiver waits to see whether it has data of its own to send back before sending the acknowledgment. If it does, it combines the acknowledgment and the data in one TCP segment. If no data becomes available within a certain time frame, typically 100-200 milliseconds (the exact value depends on the operating system), the acknowledgment is sent in a separate packet. The purpose of this is to reduce network load as much as possible.

A simple analogy is logistics: if a truck’s capacity is fixed at 10 tons, you would want it to be as full as possible when traveling from City A to City B, rather than making a trip for a small package.

Thus, TCP is designed not to send an acknowledgment for every single data packet. Instead, it typically holds acknowledgments back for a short period, and if there is data to send back to the other party, it piggybacks the pending acknowledgment on that data packet. However, it cannot wait too long, or the other party will assume a packet loss has occurred and trigger a retransmission.

Different operating systems have different approaches to whether or not to use delayed acknowledgment and how to implement it. For instance, Linux can enable or disable it; disabling means that an acknowledgment will be sent for each packet, which is known as fast acknowledgment mode.

It is essential to note that whether to enable or how many milliseconds to set depends on the scenario. For example, in online gaming, quick acknowledgment is critical, while SSH sessions may benefit from delayed acknowledgment.

For HTTP, we can disable or adjust TCP delayed acknowledgment.
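As one illustration of adjusting delayed acknowledgment per socket, Linux exposes a TCP_QUICKACK socket option. The sketch below is an assumption-laden example rather than a recommendation: the helper name is hypothetical, the option exists only on Linux, and according to the tcp(7) manual page the flag is not permanent, so programs that rely on it usually set it again after receiving data.

```python
import socket


def request_quick_ack(sock):
    """Ask the kernel to acknowledge immediately; return True if the option was set."""
    option = getattr(socket, "TCP_QUICKACK", None)  # only defined on Linux builds
    if option is None:
        return False
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, 1)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print("quick ACK requested:", request_quick_ack(s))
```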

Nagle’s Algorithm

This algorithm is also designed to improve the utilization of IP packets and reduce network load. It distinguishes between small packets and full-sized packets. On Ethernet, the MTU is 1500 bytes, so a full-sized packet carries 1460 bytes of TCP payload (the maximum segment size, MSS) after the 20-byte IP header and the 20-byte TCP header are subtracted; anything smaller is a non-full-sized packet. No matter how little data a packet carries, it cannot be smaller than 40 bytes because of these two headers. If you send a 50-byte packet, only 10 bytes of it are effective data. As with delayed acknowledgment, small packets do not pose problems in a local area network but significantly affect wide area networks.

The algorithm states that if the sender has sent packets in the current TCP connection but has not yet received an acknowledgment for them, any small packets that the sender wants to send must be held in the buffer until the previous packets are acknowledged. Once the acknowledgment is received, the sender will collect and assemble small packets in the buffer into one packet for transmission. This means that the faster the receiver returns ACKs, the faster the sender can send data.
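The rule described above can be written as a small decision function. This is a simplified sketch of the idea, not the logic of a real TCP stack: it ignores window limits, timers, and special cases, and it assumes a full-sized segment carries 1460 bytes of payload. The function name is hypothetical.

```python
def nagle_can_send_now(queued_bytes, unacked_bytes, mss=1460):
    """Decide whether queued data may be sent immediately under Nagle's rule."""
    if queued_bytes <= 0:
        return False  # nothing to send
    if queued_bytes >= mss:
        return True   # a full-sized segment may always be sent
    # A small segment is sent only when no earlier data is awaiting an ACK;
    # otherwise it stays in the buffer and is coalesced with later writes.
    return unacked_bytes == 0


if __name__ == "__main__":
    print(nagle_can_send_now(200, unacked_bytes=0))     # True
    print(nagle_can_send_now(200, unacked_bytes=1460))  # False
    print(nagle_can_send_now(1460, unacked_bytes=5000)) # True
```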

Now let’s discuss the issues that arise when delayed acknowledgment and Nagle’s algorithm are combined. Because of delayed acknowledgment, the receiver holds ACKs back for a period. During this time, the sender has not received the ACK, so Nagle’s algorithm prevents it from sending the remaining non-full-sized packet. Such a packet is likely to exist: response data is split across multiple IP packets, and its length is rarely an exact multiple of the maximum segment size, so the last packet is usually small. The two mechanisms therefore stall each other, which hurts TCP transmission performance, and since HTTP relies on TCP, HTTP performance suffers as well.

Typically, we disable Nagle’s algorithm on the server side. It can be disabled in the HTTP program by setting the TCP_NODELAY socket option. For example, in Nginx, the directive tcp_nodelay on; sets TCP_NODELAY and thereby disables Nagle’s algorithm.
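For a program that manages its own sockets, setting TCP_NODELAY looks roughly like the following Python sketch. The helper name is hypothetical; the socket option constants come from the standard socket module.

```python
import socket


def disable_nagle(sock):
    """Set TCP_NODELAY on a TCP socket and report whether it is now enabled."""
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print("Nagle disabled:", disable_nagle(s))
```

With the option set, small writes are sent immediately instead of waiting for outstanding ACKs, at the cost of more small packets on the wire.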

Accumulation of TIME_WAIT and Port Exhaustion

This refers to the client or the party that actively closes the TCP connection. Although the server can also initiate a closure, we are discussing HTTP performance here. Typically, the client initiates the active closure.

When the client makes an HTTP request (meaning a request for one specific resource, not opening a homepage, which can trigger many HTTP requests), the TCP connection is closed after the request ends, and on the side that closed it the connection enters a TCP state called TIME_WAIT. This state lasts for 2MSL (twice the maximum segment lifetime) before the connection is finally closed.

We know that when the client accesses the server’s HTTP service, it uses a random high-numbered port on its own machine to connect to the server’s port 80 or 443 (HTTP communication is essentially TCP communication). This consumes one of the available port numbers on the client. Although the client releases this port when the connection is closed, the port cannot be reused for another connection to the same server during the 2MSL period between TIME_WAIT and CLOSED. One purpose of this is to prevent stale segments from the old connection from being mistaken for data on a new connection that uses the same addresses and ports.

It follows that if the client accesses the server’s HTTP service too frequently, ports may be consumed faster than they are released, and eventually no connection can be established because no random port is available.
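A back-of-the-envelope calculation shows where the limit lies. The numbers below are assumptions for illustration: an ephemeral port range of 32768 to 60999 and a TIME_WAIT duration of 60 seconds, both of which are common Linux defaults but should be checked on the actual system. The limit applies to connections from one client address to one server address and port.

```python
def max_sustained_connection_rate(port_low=32768, port_high=60999,
                                  time_wait_seconds=60):
    """New connections per second one client can sustain to one server endpoint.

    Each closed connection is assumed to hold its local port for the whole
    TIME_WAIT period before the port can be used again.
    """
    usable_ports = port_high - port_low + 1
    return usable_ports / time_wait_seconds


if __name__ == "__main__":
    rate = max_sustained_connection_rate()
    print(f"about {rate:.0f} new connections per second")
```

Under these assumptions, a client that opens and closes more than a few hundred connections per second to the same server endpoint will eventually run out of ports.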

As mentioned earlier, it is typically the client that actively closes the connection.

TCP/IP Illustrated, Volume 1, Second Edition (p. 442) states that for interactive applications, the client usually performs the active close and enters the TIME_WAIT state, while the server usually performs the passive close and does not directly enter the TIME_WAIT state.

However, if the web server has keep-alive enabled, it will also actively close the connection once the timeout duration is reached. (I am not saying that TCP/IP Illustrated is wrong; it primarily discusses TCP and does not introduce HTTP, and it states that this is usually the case, not always.)

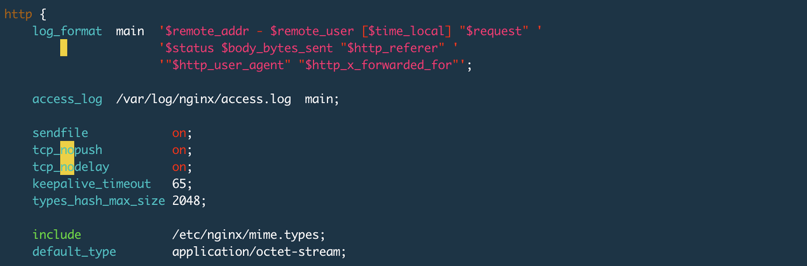

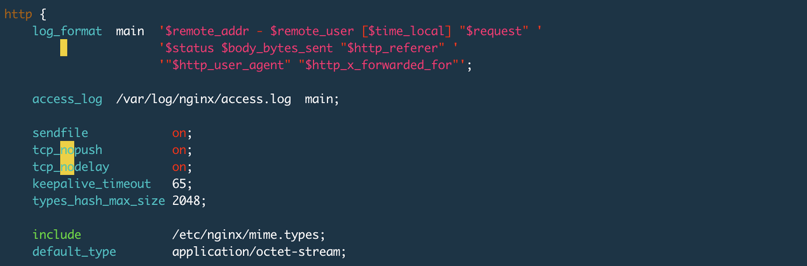

I tested using Nginx and set keepalive_timeout 65s; in the configuration file. The default setting for Nginx is 75s, and setting it to 0 disables keep-alive, as shown in the image below:

Next, I accessed the default Nginx homepage using the Chrome browser and monitored the entire communication process using a packet capture tool, as shown in the image below:

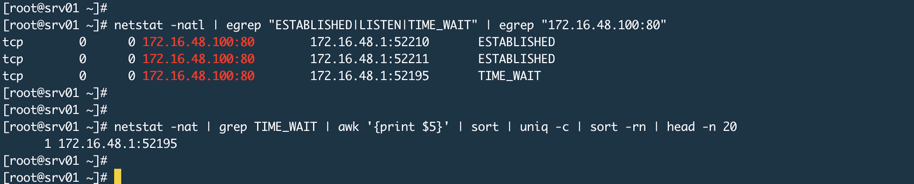

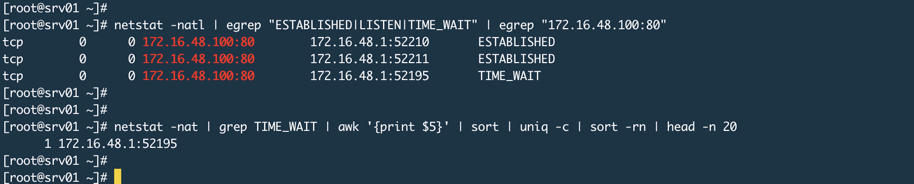

From the above image, we can see that after the effective data transmission is completed, there is a communication marked with Keep-Alive, and the server actively closes the connection after 65 seconds of inactivity. In this case, you will see the TIME_WAIT status on the Nginx server.

Server-Side Port Exhaustion

Some may wonder how Nginx, which only listens on port 80 or 443, can run out of ports when clients connect to that port. (The TIME_WAIT entries discussed earlier, which are generated by the keepalive_timeout setting on Nginx, are ignored here.)

In fact, it depends on the working mode of Nginx. When we use Nginx, it usually works in proxy mode, meaning that the actual resources or data are on the backend web application, such as Tomcat. The characteristic of proxy mode is that the proxy server retrieves data from the backend on behalf of the user. Therefore, in this case, Nginx acts as a client to the backend server, and it will use random ports to send requests to the backend. The range of available random ports is fixed, which can be viewed using the command sysctl net.ipv4.ip_local_port_range to see the random port range on the server.
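To turn the output of that command into a port count, a small parser is enough. The sketch below assumes the usual "name = LOW HIGH" output format of sysctl; the function name and the sample values are illustrative only.

```python
def parse_port_range(sysctl_line):
    """Return (low, high, count) from a 'net.ipv4.ip_local_port_range = LOW HIGH' line."""
    value = sysctl_line.split("=")[-1]  # also accepts a bare "LOW HIGH" string
    low, high = (int(part) for part in value.split())
    return low, high, high - low + 1


if __name__ == "__main__":
    sample = "net.ipv4.ip_local_port_range = 32768\t60999"  # assumed sample output
    print(parse_port_range(sample))
```

The count is the upper bound on simultaneous connections from one Nginx address to a single backend address and port.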

Considering the previously discussed delayed acknowledgment, Nagle’s algorithm, and the proxy mode where Nginx acts as a client to the backend using random ports, it means that there is a risk of server-side port exhaustion. If the speed of random port release is slower than the speed of establishing connections with the backend, exhaustion may occur. However, this situation is generally rare. At least in our company’s Nginx setup, I have not observed such phenomena occurring. This is primarily because static resources are hosted on a CDN, and most backend operations are REST APIs for user authentication or database operations, which are usually quick if there are no bottlenecks. However, if there are indeed bottlenecks on the backend and scaling or restructuring is costly, then when faced with high concurrency, you should implement rate limiting to prevent overwhelming the backend.

Server-Side HTTP Process Reaching Maximum Open File Limit

As mentioned earlier, HTTP communication relies on TCP connections, and a TCP connection is a socket. On Unix-like systems, opening a socket is akin to opening a file. If 100 requests connect to the server, the server will have 100 files open once the connections are established. However, on Linux the number of files a process can open is limited (see ulimit -n), so if this value is set too low, it will also limit HTTP connections. For Nginx or other HTTP programs running in proxy mode, one client connection typically opens 2 sockets (one to the client and one to the backend) and thus occupies 2 files, except when Nginx serves the response from its local cache or returns data directly. Therefore, the open-file limit of the proxy server process should be set higher.
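The relationship between the open-file limit and the number of proxied connections can be estimated from inside a process. This sketch uses Python's resource module (available on Unix-like systems only) to read the per-process open-file limit (RLIMIT_NOFILE). The reserve of 64 descriptors for logs, listening sockets, and similar is an arbitrary assumption, and the function name is hypothetical.

```python
import resource


def proxied_connection_budget(reserved_fds=64, fds_per_connection=2):
    """Estimate how many proxied connections fit under the open-file limit."""
    soft_limit, _hard_limit = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft_limit == resource.RLIM_INFINITY:
        return None  # no per-process limit is configured
    # Each proxied connection needs one client socket and one backend socket.
    return max(soft_limit - reserved_fds, 0) // fds_per_connection


if __name__ == "__main__":
    print("approximate connection budget:", proxied_connection_budget())
```

With a soft limit of 1024, for example, this estimate leaves room for fewer than 500 proxied connections, which is why the limit is usually raised for proxy servers.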

Persistent Connections Keepalive

First, we need to understand that keepalive can be set on two levels, and the meanings of these two levels are different. TCP keepalive is a mechanism for probing activity, such as the heartbeat information we commonly refer to, indicating that the other party is still online. The sending of this heartbeat information occurs at regular intervals, meaning that the TCP connection between them must remain open. On the other hand, HTTP keep-alive is a mechanism for reusing TCP connections to avoid frequent TCP connection establishment. It is crucial to understand that TCP Keepalive and HTTP Keep-alive are not the same thing.

HTTP Keep-Alive Mechanism

Non-persistent connections will disconnect the TCP connection after each HTTP transaction is completed. The next HTTP transaction will then re-establish the TCP connection, which is clearly not an efficient mechanism. Therefore, in HTTP/1.1 and enhanced versions of HTTP/1.0, HTTP allows the TCP connection to remain open after a transaction ends, enabling subsequent HTTP transactions to reuse this connection until either the client or server actively closes it. Persistent connections reduce the number of TCP connection establishments while maximizing the avoidance of traffic limitations caused by TCP slow start.

In the Nginx configuration from the test above, the keepalive_timeout 65s; setting enables HTTP keep-alive and sets the idle timeout to 65 seconds. Another important option is keepalive_requests 100;, which sets the maximum number of HTTP requests that can be served over the same TCP connection. The default was 100 in older Nginx versions and is 1000 since version 1.19.10.

In HTTP/1.0, keep-alive is not used by default; the client must include Connection: Keep-alive in the HTTP request to attempt to activate keep-alive. If the server does not support it, then it will not be used, and all requests will proceed in the conventional manner. If the server supports it, the response header will also include Connection: Keep-alive.

In HTTP/1.1, keep-alive is used by default unless explicitly stated otherwise; thus, all connections are persistent. If a connection needs to be closed after a transaction, the party that wants to close it includes Connection: close in its HTTP header (typically the server, in the response); otherwise, the connection remains open. Idle connections must eventually be closed as well: with the Nginx setting mentioned above, an idle connection is kept open for at most 65 seconds before the server actively closes it.
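Connection reuse can be observed with nothing but the Python standard library. The sketch below is a self-contained illustration, not a benchmark: it starts a throwaway HTTP/1.1 server on the loopback interface, sends several requests over one client connection, and records the client's local port after each request. The handler and function names are hypothetical.

```python
import http.client
import http.server
import threading


class Handler(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # HTTP/1.1 connections are persistent by default

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # needed for reuse
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def local_ports_used(request_count):
    """Send several requests on one connection and return the local port of each."""
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    ports = []
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
        for _ in range(request_count):
            conn.request("GET", "/")
            conn.getresponse().read()  # drain the body before the next request
            ports.append(conn.sock.getsockname()[1])
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return ports


if __name__ == "__main__":
    print(local_ports_used(3))  # the same local port repeated three times
```

Seeing the same local port for every request means that a single TCP connection, and therefore a single handshake and a single slow-start phase, served all of them.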

TCP Keepalive

In Linux, there is no system-wide switch to enable or disable TCP keepalive; it is enabled per socket. To check the system’s keepalive parameters, use sysctl -a | grep tcp_keepalive. If you have not modified them, CentOS systems display the following:

net.ipv4.tcp_keepalive_intvl = 75 # Interval between two probes, in seconds

net.ipv4.tcp_keepalive_probes = 9 # Number of unanswered probes before the connection is considered dead

net.ipv4.tcp_keepalive_time = 7200 # How long the connection must be idle before probing starts, in seconds (2 hours)

With the default settings, the first probe is sent after the connection has been idle for 2 hours. If a probe receives no reply, another probe is sent 75 seconds later. If 9 consecutive probes go unanswered, the connection is actively closed.
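The three parameters combine into a simple formula for how long it takes to notice a dead peer on an idle connection. The sketch below uses the default values listed above; the function name is hypothetical, and the result is approximate because it assumes every probe is sent exactly on schedule.

```python
def keepalive_detection_seconds(idle_time=7200, probe_interval=75, probe_count=9):
    """Approximate time until an idle connection to a dead peer is dropped."""
    return idle_time + probe_interval * probe_count


if __name__ == "__main__":
    total = keepalive_detection_seconds()
    print(total, "seconds, roughly", round(total / 60), "minutes")
```

With the defaults this comes to 7875 seconds, a little over 2 hours and 11 minutes, which is why the defaults are often too slow for detecting broken connections quickly.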

To enable TCP-level Keepalive on Nginx, there is a directive called listen used to set which port Nginx listens on within the server block. It has additional parameters used to set socket attributes, as shown in the following settings:

# Indicates enabling TCP keepalive with system defaults

listen 80 default_server so_keepalive=on;

# Indicates explicitly disabling TCP keepalive

listen 80 default_server so_keepalive=off;

# Indicates enabling it, starting probes after 30 minutes of idle time, keeping the system default probe interval, and sending at most 10 probes; these values override the system defaults for this socket

listen 80 default_server so_keepalive=30m::10;
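For comparison, a program that manages its own sockets can request similar behavior through socket options. The Python sketch below is an approximation of what a setting like so_keepalive=30m::10 asks for, not a description of Nginx internals. The helper name is hypothetical, and the TCP_KEEPIDLE, TCP_KEEPINTVL, and TCP_KEEPCNT options are not available on every platform, so they are applied only where the socket module defines them.

```python
import socket


def enable_tcp_keepalive(sock, idle_seconds=None, interval_seconds=None,
                         probe_count=None):
    """Enable TCP keepalive; values left as None keep the system defaults."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    overrides = (
        ("TCP_KEEPIDLE", idle_seconds),       # idle time before the first probe
        ("TCP_KEEPINTVL", interval_seconds),  # interval between probes
        ("TCP_KEEPCNT", probe_count),         # unanswered probes before giving up
    )
    for name, value in overrides:
        option = getattr(socket, name, None)  # missing on some platforms
        if value is not None and option is not None:
            sock.setsockopt(socket.IPPROTO_TCP, option, value)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        # 30 minutes idle, default interval, 10 probes
        enable_tcp_keepalive(s, idle_seconds=1800, probe_count=10)
        print(s.getsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE) != 0)
```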

Whether to set so_keepalive on Nginx depends on the specific scenario. Do not confuse TCP keepalive with HTTP keepalive; the absence of so_keepalive on Nginx does not affect your HTTP requests using the keep-alive feature. If there are load balancing devices between the client and Nginx or between Nginx and backend servers, and both requests and responses pass through this load balancer, then you need to pay attention to so_keepalive. For example, in the direct routing mode of LVS, it is unaffected since responses do not pass through LVS, but in NAT mode, you need to be cautious because LVS maintains TCP sessions for a certain duration. If this duration is shorter than the time it takes for the backend to return data, LVS will disconnect the TCP connection before the client receives the data.

Link: https://www.cnblogs.com/rexcheny/p/10777906.html
