This article comes from the Listen AI CSK6 Visual AI Development Kit trial campaign held by the Extreme Art Community. The author combines the gesture recognition supported by the CSK6 chip with an 8×8 dot matrix to build a game of Rock-Paper-Scissors.

1 Setting Up the Development Environment

1.1 Hardware Environment

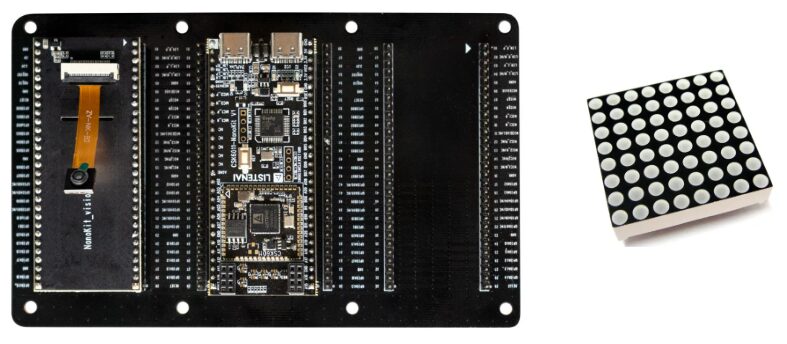

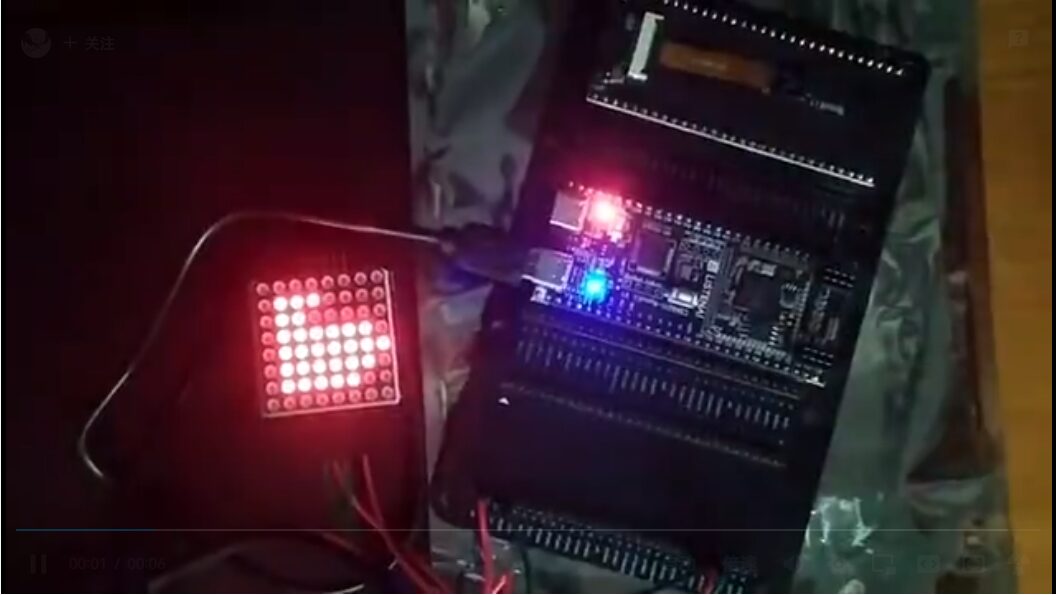

As shown in the figures above, the build consists of the Listen AI CSK6011-NanoKit visual development kit and an 8×8 dot matrix. The CSK6011-NanoKit handles gesture recognition and the dot matrix handles graphic display; the two are connected over the SPI bus.

1.2 Software Environment

To set up the software development environment, refer to CSK6 Environment Setup (https://docs.listenai.com/chips/600X/application/getting_start). Listen AI provides a complete, pre-packaged development environment: with the Lisa tool you can create, compile, and flash a project. The vendor also provides a full IDE environment based on a VS Code plugin.

2 Using the Dot Matrix

2.1 Hardware Connection

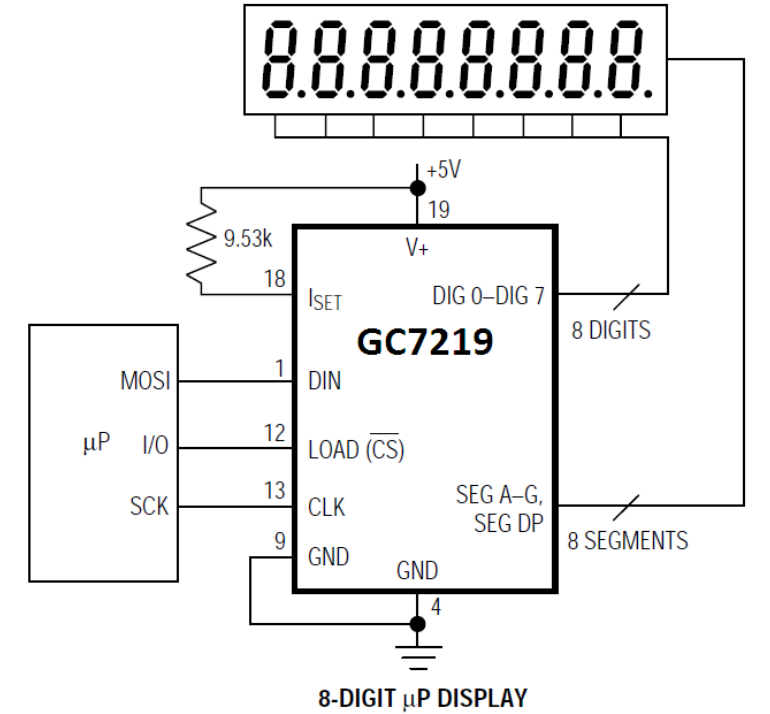

The 8×8 dot matrix is driven by the GC7219, which is fully compatible with the MAX7219; see the figure for its typical application circuit.

The chip can be driven via GPIO or SPI. The vendor provides a reference demo for SPI, and this implementation controls the dot matrix based on it. The GC7219 (dot matrix) is connected to SPI0 of the CSK6011 and powered from the onboard 3.3 V pin.

2.2 Icon Modeling

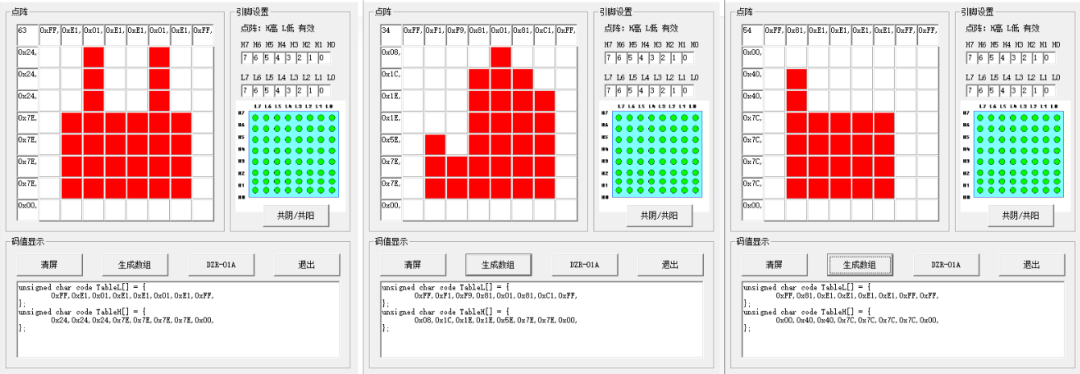

Currently, the CSK6011 supports five gestures: LIKE (👍), OK (👌), STOP (🤚), YES (✌️), and SIX (🤙). Matching them by hand shape, we take LIKE = Rock, STOP = Paper, and YES = Scissors. The corresponding icons, modeled for a common-cathode matrix, are shown in the figure.
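
Before wiring anything up, it can help to preview an icon on the PC. The following is a minimal sketch, not part of the original project: it prints the row bytes of the scissors icon (taken from the data bytes of jiandao_table in section 2.3.3) as ASCII art. The function names are hypothetical, and the sketch assumes bit 7 is the leftmost column, which depends on how a given module is wired.

```c
#include <stdint.h>
#include <stdio.h>

/* Row data copied from the second byte of each jiandao_table entry. */
const uint8_t scissors_rows[8] = {
    0x24, 0x24, 0x24, 0x7E, 0x7E, 0x7E, 0x7E, 0x00
};

/*
 * Render one row byte as 8 characters plus a terminating NUL.
 * Assumption: bit 7 is the leftmost column. Reverse the loop if your
 * module maps the bits the other way round.
 */
void render_row(uint8_t row, char out[9])
{
    for (int bit = 7; bit >= 0; bit--) {
        out[7 - bit] = (row & (1u << bit)) ? '#' : '.';
    }
    out[8] = '\0';
}

/* Print a whole 8x8 icon, one text line per matrix row. */
void print_icon(const uint8_t rows[8])
{
    char line[9];

    for (int r = 0; r < 8; r++) {
        render_row(rows[r], line);
        printf("%s\n", line);
    }
}
```

Calling print_icon(scissors_rows) from a small host program prints eight lines of '#' and '.' characters, which makes it easy to spot a mistyped row before flashing.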

2.3 Driver Development

2.3.1 Establish SPI Development Project

Refer to SPI Reference Project (https://docs.listenai.com/chips/600X/application/peripheral/samples/spi) for the implementation and set up the SPI driver development project.

2.3.2 GC7219 Adaptation

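According to the GC7219 manual, data is shifted in MSB first and the maximum clock frequency is only 10 MHz. The SPI configuration from the reference project therefore needs to be changed to MSB first, with the clock lowered to 5 MHz to stay well within the limit: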

    /* spi master 8bit, LSB first */
    spi_cfg.operation = SPI_WORD_SET(8) | SPI_OP_MODE_MASTER | SPI_TRANSFER_LSB;
    spi_cfg.frequency = 10 * 1000000UL;

Change to:

    /* spi master 8bit, MSB first */
    spi_cfg.operation = SPI_WORD_SET(8) | SPI_OP_MODE_MASTER | SPI_TRANSFER_MSB;
    spi_cfg.frequency = 5 * 1000000UL;

2.3.3 Add Dot Matrix Driver
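
Every two-byte entry used in this section is a {register address, data} pair, which matches the 16-bit frame of the MAX7219 family: the address byte goes out first, then the data byte. The sketch below shows how such frames could be packed from plain row bitmaps. The helper names gc7219_pack and gc7219_pack_icon are hypothetical, and the register addresses are taken from the MAX7219 register map on the assumption that the GC7219 is compatible, as stated above.

```c
#include <stdint.h>

/* Register addresses from the MAX7219 register map (GC7219 assumed compatible). */
enum {
    REG_DIGIT0       = 0x01, /* row registers run from 0x01 to 0x08 */
    REG_DECODE_MODE  = 0x09,
    REG_INTENSITY    = 0x0A,
    REG_SCAN_LIMIT   = 0x0B,
    REG_SHUTDOWN     = 0x0C,
    REG_DISPLAY_TEST = 0x0F
};

/* Pack one register write: address byte first, then data byte. */
void gc7219_pack(uint8_t reg, uint8_t value, uint8_t frame[2])
{
    frame[0] = reg & 0x0F; /* only the low four address bits are used */
    frame[1] = value;
}

/* Build the 8 frames that load one icon; rows[i] goes to row register i + 1. */
void gc7219_pack_icon(const uint8_t rows[8], uint8_t frames[8][2])
{
    for (int i = 0; i < 8; i++) {
        gc7219_pack((uint8_t)(REG_DIGIT0 + i), rows[i], frames[i]);
    }
}
```

Packing the rock bitmap this way reproduces the entries of shitou_table, so the tables could be stored as eight row bytes each and expanded when sending.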

As described in Section 2.2, the icons are modeled for a common-cathode dot matrix, which is what the GC7219 drives. Each image is stored as eight {row register address, row data} pairs:

    /* jiandao: scissors */
    unsigned char jiandao_table[8][2] = {
        {0x01,0x24},{0x02,0x24},{0x03,0x24},{0x04,0x7E},
        {0x05,0x7E},{0x06,0x7E},{0x07,0x7E},{0x08,0x00}};

    /* shitou: rock */
    unsigned char shitou_table[8][2] = {
        {0x01,0x00},{0x02,0x40},{0x03,0x40},{0x04,0x7C},
        {0x05,0x7C},{0x06,0x7C},{0x07,0x7C},{0x08,0x00}};

    /* bu: paper */
    unsigned char bu_table[8][2] = {
        {0x01,0x08},{0x02,0x1C},{0x03,0x1E},{0x04,0x1E},
        {0x05,0x5E},{0x06,0x7E},{0x07,0x7E},{0x08,0x00}};

Zephyr's send function, spi_write, takes a set of buffers (struct spi_buf_set) and transmits them one after another. The complete code is as follows:

    /*
     * SPDX-License-Identifier: Apache-2.0
     */

    #include <kernel.h>
    #include <string.h>
    #include <errno.h>
    #include <zephyr.h>
    #include <sys/printk.h>
    #include <device.h>
    #include <drivers/spi.h>

    /* GC7219 control registers, each as {address, value} */
    unsigned char work_state[2] = {0x0C, 0x1}; // normal work mode
    unsigned char test_state[2] = {0x0F, 0x0}; // no test mode
    unsigned char decode_cfg[2] = {0x09, 0x0}; // no decode
    unsigned char scan_range[2] = {0x0B, 0x7}; // scan 0-7

    /* Icons, each as 8 x {row register, row data}: scissors, rock, paper */
    unsigned char jiandao_table[8][2] = {
        {0x01,0x24},{0x02,0x24},{0x03,0x24},{0x04,0x7E},
        {0x05,0x7E},{0x06,0x7E},{0x07,0x7E},{0x08,0x00}};

    unsigned char shitou_table[8][2] = {
        {0x01,0x00},{0x02,0x40},{0x03,0x40},{0x04,0x7C},
        {0x05,0x7C},{0x06,0x7C},{0x07,0x7C},{0x08,0x00}};

    unsigned char bu_table[8][2] = {
        {0x01,0x08},{0x02,0x1C},{0x03,0x1E},{0x04,0x1E},
        {0x05,0x5E},{0x06,0x7E},{0x07,0x7E},{0x08,0x00}};

    #define TX_PACKAGE_MAX_CNT 8

    void main(void)
    {
        int idx = 0;
        const struct device *spi;
        struct spi_config spi_cfg = {0};
        struct spi_buf_set tx_set;
        unsigned char digit[2] = {0};

        printk("spi master example\n");
        spi = DEVICE_DT_GET(DT_NODELABEL(spi0));
        if (!device_is_ready(spi)) {
            printk("SPI device %s is not ready\n", spi->name);
            return;
        }

        /* spi master 8bit, MSB first */
        spi_cfg.operation = SPI_WORD_SET(8) | SPI_OP_MODE_MASTER | SPI_TRANSFER_MSB;
        spi_cfg.frequency = 5 * 1000000UL;

        /* Make spi transaction package buffers */
        struct spi_buf *tx_package = k_calloc(TX_PACKAGE_MAX_CNT, sizeof(struct spi_buf));
        if (tx_package == NULL) {
            printk("tx_package calloc failed\n");
            return;
        }

        /* Init 7219 */
        tx_package[0].buf = work_state;
        tx_package[0].len = 2;
        tx_package[1].buf = test_state;
        tx_package[1].len = 2;
        tx_package[2].buf = decode_cfg;
        tx_package[2].len = 2;
        tx_package[3].buf = scan_range;
        tx_package[3].len = 2;

        tx_set.buffers = tx_package;
        tx_set.count = 4;

        printk("Init 7219 ...\n");
        spi_write(spi, &spi_cfg, &tx_set);

        /* Cycle through the three icons, one per second */
        do {
            k_msleep(1000);
            printk("spi master sending jiandao_table data ...\n");
            for (idx = 0; idx < 8; idx++) {
                digit[0] = jiandao_table[idx][0];
                digit[1] = jiandao_table[idx][1];
                tx_package[0].buf = digit;
                tx_package[0].len = 2;
                tx_set.buffers = tx_package;
                tx_set.count = 1;
                spi_write(spi, &spi_cfg, &tx_set);
            }

            k_msleep(1000);
            printk("spi master sending shitou_table data ...\n");
            for (idx = 0; idx < 8; idx++) {
                digit[0] = shitou_table[idx][0];
                digit[1] = shitou_table[idx][1];
                tx_package[0].buf = digit;
                tx_package[0].len = 2;
                tx_set.buffers = tx_package;
                tx_set.count = 1;
                spi_write(spi, &spi_cfg, &tx_set);
            }

            k_msleep(1000);
            printk("spi master sending bu_table data ...\n");
            for (idx = 0; idx < 8; idx++) {
                digit[0] = bu_table[idx][0];
                digit[1] = bu_table[idx][1];
                tx_package[0].buf = digit;
                tx_package[0].len = 2;
                tx_set.buffers = tx_package;
                tx_set.count = 1;
                spi_write(spi, &spi_cfg, &tx_set);
            }
        } while (1);
    }

2.3.4 Building AI Project

Follow the steps in the document AI Capability – Vision (https://docs.listenai.com/chips/600X/ai_usage/hsgd/intro) to set up the AI project. Here I mainly cover a few issues and precautions I ran into.

1) Because this uses the latest git project, the log output may differ from the documentation. The git versions and their log output correspond as follows:

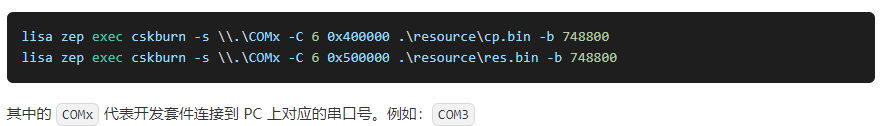

2) If you download the git project directly and then compile and flash it, the PC tool may not work properly. Run the following two commands first (see One-click Pull – Sample – and SDK Exception Solution, https://docs2.listenai.com/x/T7H8NYpx58), then compile and flash again.

    lisa zep init-app
    lisa zep update

3) If you open the Online PC Tool (https://tool.listenai.com/csk-view-finder-spd) directly in the Edge browser and click “Windows System”, a 404 error occurs. It is therefore recommended to download the PC tool project and use it offline.

    git clone https://cloud.listenai.com/zephyr/applications/csk_view_finder_spd.git

4) If you run the resource download command immediately after “lisa zep flash”, it may fail. In that case, unplug and reinsert the DAP port, or check whether the serial port is occupied.

After the above steps, the effect viewed through the PC tool is as follows:

2.3.5 Recognition and Display

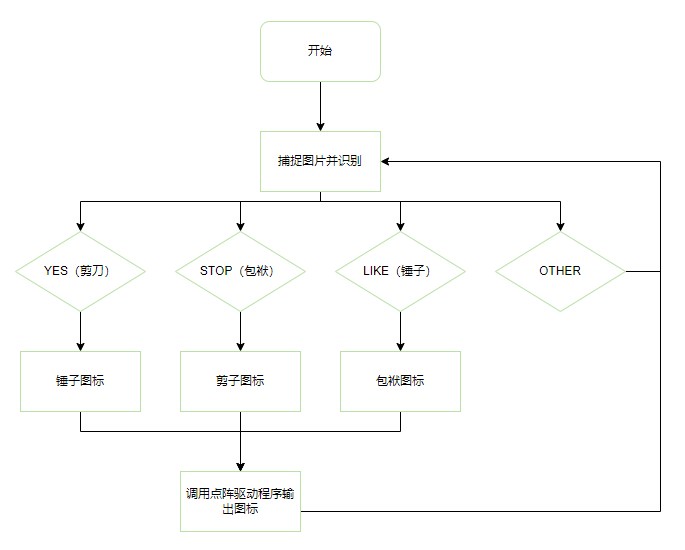

Integrate the SPI-GC7219 project with the AI gesture recognition project. The program flow is as follows:
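
The decision step in such a flow could be sketched as below. This is only an illustration under stated assumptions: the enum names are hypothetical and do not come from the CSK6 SDK, and the policy of answering with the move that beats the player is assumed, since the article only says that the machine responds to the gesture. The gesture-to-move mapping follows section 2.2.

```c
/* Hypothetical gesture IDs; the identifiers in the real SDK may differ. */
typedef enum {
    GESTURE_LIKE,
    GESTURE_OK,
    GESTURE_STOP,
    GESTURE_YES,
    GESTURE_SIX
} gesture_t;

typedef enum { MOVE_NONE, MOVE_ROCK, MOVE_PAPER, MOVE_SCISSORS } move_t;

/* Mapping from section 2.2: LIKE = rock, STOP = paper, YES = scissors. */
move_t gesture_to_move(gesture_t g)
{
    switch (g) {
    case GESTURE_LIKE: return MOVE_ROCK;
    case GESTURE_STOP: return MOVE_PAPER;
    case GESTURE_YES:  return MOVE_SCISSORS;
    default:           return MOVE_NONE; /* OK and SIX are not used in the game */
    }
}

/* Assumed policy: the machine answers with the move that beats the player's. */
move_t winning_reply(move_t player)
{
    switch (player) {
    case MOVE_ROCK:     return MOVE_PAPER;
    case MOVE_PAPER:    return MOVE_SCISSORS;
    case MOVE_SCISSORS: return MOVE_ROCK;
    default:            return MOVE_NONE; /* nothing to show */
    }
}
```

In an integrated project, the result of winning_reply would select which of the three icon tables to send to the dot matrix, and MOVE_NONE would leave the display unchanged.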

3 Effect Display

The machine responds to the player's gestures, which makes for a fun, simple demonstration. The demo videos are available in the original post.

4 Conclusion

Overall, the experience was good. Listen AI does well on the ease of use of the SOM, completeness of interfaces, richness of documentation, and timeliness of support. By following the documentation step by step, one can quickly start building simple products. It is a pity, however, that Listen AI’s AI training and tuning tools are not yet open to the public, possibly because of their technical complexity, which takes away some of the fun. The gesture recognition demo also shows that lighting and distance significantly affect recognition accuracy. Still, achieving this level of performance at such low power consumption is impressive. Finally, I hope Listen AI’s products keep improving and that the Extreme Art Community keeps growing and brings more activities to developers interested in this field.

Source | Arm Technology Academy

Copyright belongs to the original author. If there is any infringement, please contact for deletion.
