There is another story behind ADAS, and two companies epitomize the shift in its goals: Mobileye and Tesla. Between them, they brought a new perspective on ADAS to the table: autonomous driving. Along the way, a series of sudden events threw both companies into turmoil.

On May 7, 2016, Joshua Brown, a devoted Tesla fan, died when his Model S, running on Autopilot (in reality an ADAS system built on Mobileye EyeQ3 technology), collided with an 18-wheeler. It became the first fatality attributed to autonomous driving technology.

In July 2016, Mobileye announced that it would stop providing technical support for Tesla Autopilot once the current contract expired, and that the collaboration would go no further than EyeQ3.

Eight months later, Intel acquired Mobileye for $15.3 billion; at the time, Mobileye was powering autonomous vehicle efforts worldwide with a $50 SoC. By 2020, for most people, ADAS had come to mean a technology for climbing the autonomous driving ladder defined by SAE (Society of Automotive Engineers) in 2014, despite the lack of evidence that it can climb even one rung. ADAS capabilities have been inflated with labels such as L2, L2+, and now L2++. When it comes to actual autonomous driving, the reality may be another story.

How to Advance Computing Power, Sensors, and Chip Technology?

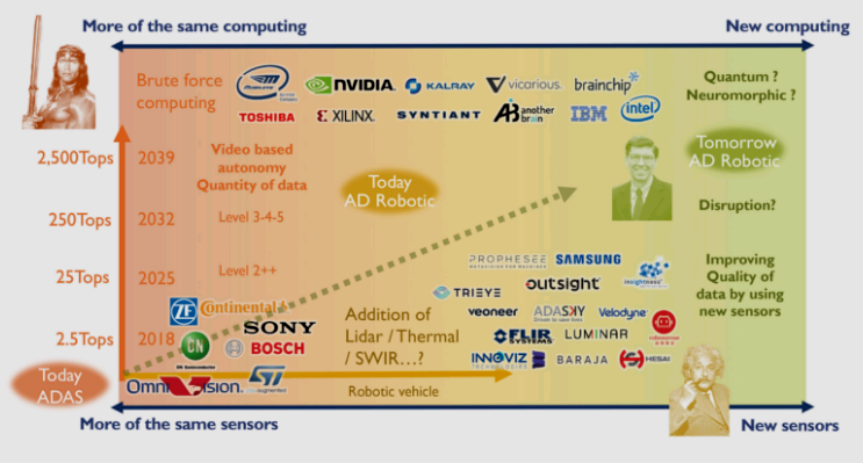

This chart from Yole is somewhat complex and worth studying closely. The question to consider is whether today’s ADAS can become tomorrow’s AD (autonomous driving). Getting there requires new computing and new sensors, along with ever-increasing computing power, all the way up to brute-force computing. Will the answer be quantum computing or neural computing? And with so many companies involved, all of them industry leaders, how should one choose?

As for computing power, what about Moore’s Law? For automotive applications, does shrinking chips to a few nanometers really make that much sense? After all, cars are not phones; what matters is not how small the chip is but how harsh the operating environment is. How will the heat from ever-smaller chips be dissipated? There are many issues still to research and solve, and none of them is easy.

Innovation Directions for Autonomous Vehicles

Safety Performance Changes Little

As early as 2012, the European Union enacted regulations requiring all new cars produced in 2014 to be equipped with AEB (automatic emergency braking) systems. Forty countries, led by Japan and the EU, hope that by 2022 all new cars and light commercial vehicles will be equipped with AEB systems.

China is doing reasonably well: a survey by Zosi Automotive Research found that the AEB installation rate in China’s passenger car market reached 33.9% in 2020, nearly double the previous year’s figure.

However, the safety performance of ADAS has not changed much. In June 2019, the US Insurance Institute for Highway Safety (IIHS), an insurance-industry research body rather than a regulator, confirmed at a meeting that AEB reduced rear-end collisions by 50%, yet the related injury claim rate fell by only 23%. In the same year, tests by AAA (American Automobile Association) showed that pedestrian detection has significant limitations in many situations, such as with children, groups of people, or pedestrians walking at night.

Despite ten years of technological upgrades, safety efforts seem to have been set aside. The likely reason is the desire to push ahead on autonomous driving, but reality has not cooperated. The big step the whole industry is waiting for is L3, which allows the driver to take their eyes off the road. This level is defined as the entry point to autonomous driving. Apart from Tesla, Audi was the first company to test L3, on its 2018 A8, but it abandoned the effort in 2020. That is a billion-euro lesson for other autonomous driving players. Currently, Lexus labels its one model with similar technology, the LS, only as “L2”.

Industry experts believe that today’s modes of mobility face five major limitations, the most criticized being the deteriorating safety of pedestrians. The other four are: public transport struggles with efficiency and cost; traffic congestion and the cost of ownership are eroding the car’s status as an important mobility solution; air travel is expanding rapidly, but connections between cities and airports remain poor; and all existing modes of mobility generate carbon dioxide emissions, making transformation urgent.

Where Is the Bottom Line for “False Autonomous Driving”?

The Tesla Model S involved in the May 7 tragedy relied on forward radar and cameras as Autopilot’s vision. Unfortunately, during that crash, neither of its “eyes” picked out the white trailer against the clear sky. In June 2020, a Model 3 ran into a similar white truck.

The first pedestrian fatality occurred in March 2018, when an Uber self-driving test vehicle, a Volvo XC90 bristling with sensors, struck a pedestrian. The system recorded radar and LiDAR observations of the pedestrian about six seconds before impact, while the vehicle was traveling at 43 miles per hour. Yet the vehicle neither intervened nor took any evasive action.

The first two fatalities involving drivers and pedestrians and the similar fate of the white behemoth

So how do we explain the gap between what ADAS promises and what it delivers? Is it a problem with the performance of the sensor suite? Or are more sensors needed for redundancy, for instance LiDAR (all-weather) and thermal cameras (to perceive humans)? Is more computing power needed? Or is it a combination of all these factors?

There may well be many answers. While many automotive professionals claim that autonomous vehicles will not be ready any time soon and may be nothing more than a fantasy, more than 4,000 autonomous vehicles are already roaming the streets worldwide, mainly for research purposes.

By the end of 2019, with Waymo’s commercial ride-hailing service, Waymo One, in operation, driverless taxis had become a reality. About a quarter of its 600-vehicle fleet currently provides driverless taxi service to customers, and the company is preparing to retrofit 100,000 vehicles a year once its Michigan factory opens.

Waymo is not alone in developing autonomous vehicles. General Motors has Cruise, Lyft is backed by Aptiv, Baidu has the Apollo project, Amazon has Zoox, Ford and Volkswagen invest in Argo AI, Alibaba supports AutoX, and Toyota invests in Pony.ai. More than 20 leading companies worldwide have joined the competition, with real autonomous vehicles on the roads.

Yole analysts believe that while it is certainly easy to have a car with some autonomous driving capabilities on the road, very few companies can reach the level of confidence required for true autonomous driving. Waymo may be the first company to provide the necessary “suitable quality” benchmarks for autonomous driving.

AV Is a Money-Burning Game

Waymo’s “suitable quality” starts with a sensor suite that delivers 10 times the data of current ADAS systems, using 2 to 3 times as many sensors at 2 to 3 times the resolution.

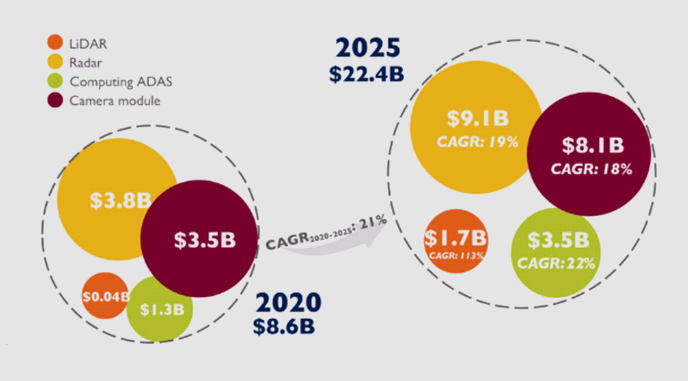

In terms of computing power, Waymo is looking for more than 100 times the capability. The computing power of a typical ADAS system using Intel Mobileye EyeQ4 chips has increased from 0.25 TOPS (10 times that of a high-end laptop) to 2.5 TOPS, while the highest computing power for autonomous vehicles has exceeded 250 TOPS. Both Zhiji and NIO are using chips rated in the thousands of TOPS, a world of difference. But does computing power equate to real performance, or is it more meaningful to measure how many image frames are correctly identified per second? Opinions in the industry differ.
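To put these figures side by side, and to illustrate why a peak TOPS rating alone may not settle the debate, here is a minimal Python sketch. The three TOPS values are the ones quoted above. Everything else is an assumption made for illustration: the function name `frames_per_second`, the simple throughput model, the utilization and per-frame workload numbers, and both example chips are hypothetical.

```python
# TOPS figures quoted in the text (tera-operations per second).
EARLIER_ADAS_TOPS = 0.25
EYEQ4_ADAS_TOPS = 2.5
HIGH_END_AV_TOPS = 250.0

adas_generation_gain = EYEQ4_ADAS_TOPS / EARLIER_ADAS_TOPS  # 10x
av_over_adas = HIGH_END_AV_TOPS / EYEQ4_ADAS_TOPS           # 100x


def frames_per_second(peak_tops: float, utilization: float,
                      tera_ops_per_frame: float) -> float:
    """Frames processed per second under a simple throughput model.

    Assumption (not from the article): sustained throughput equals
    peak TOPS times a utilization fraction, and every frame costs a
    fixed number of tera-operations.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    if tera_ops_per_frame <= 0.0:
        raise ValueError("tera_ops_per_frame must be positive")
    return peak_tops * utilization / tera_ops_per_frame


# Hypothetical chips: A has the higher peak rating, B keeps its
# compute units busier. All numbers below are illustrative only.
chip_a_fps = frames_per_second(peak_tops=200.0, utilization=0.25,
                               tera_ops_per_frame=0.5)
chip_b_fps = frames_per_second(peak_tops=100.0, utilization=0.75,
                               tera_ops_per_frame=0.5)

print(f"ADAS generation gain: {adas_generation_gain:.0f}x")
print(f"AV over ADAS: {av_over_adas:.0f}x")
print(f"Chip A: {chip_a_fps:.0f} frames/s, chip B: {chip_b_fps:.0f} frames/s")
```

Under these made-up numbers, the chip with half the peak rating processes more frames per second. The model counts frames processed, not frames identified correctly; recognition accuracy would have to be measured separately.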

While an L2 ADAS system costs around $400, a driverless retrofit kit now costs as much as $130,000, more than 300 times as much. Even so, people still question whether such a driverless system performs better than an ordinary human driver.
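The cost gap is simple arithmetic. A short Python check using the two figures quoted above (the variable names are illustrative):

```python
# Cost figures quoted in the text, in US dollars.
ADAS_L2_COST_USD = 400
AV_RETROFIT_COST_USD = 130_000

cost_ratio = AV_RETROFIT_COST_USD / ADAS_L2_COST_USD
print(f"Driverless retrofit costs {cost_ratio:.0f}x an L2 ADAS system")  # 325x
```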

Players in the ADAS space may eventually climb the ladder to autonomous driving, but it will take more money, more time, and more innovation. Like every disruptive technology, driverless taxis have been underestimated by most companies.

Only emerging manufacturers like Tesla are prepared to invest heavily in developing their own chips, yet Tesla’s FSD (Full Self-Driving) chip reaches only about 70 TOPS (144 TOPS in the dual-chip configuration). Despite the heavy promotion of ADAS, true AV disruption is approaching, and Amazon and Alibaba may turn out to be the biggest threats to Volkswagen and Toyota, even as the likes of Tesla and XPeng flaunt their AV credentials.

Traditional Automaker Structures Struggle

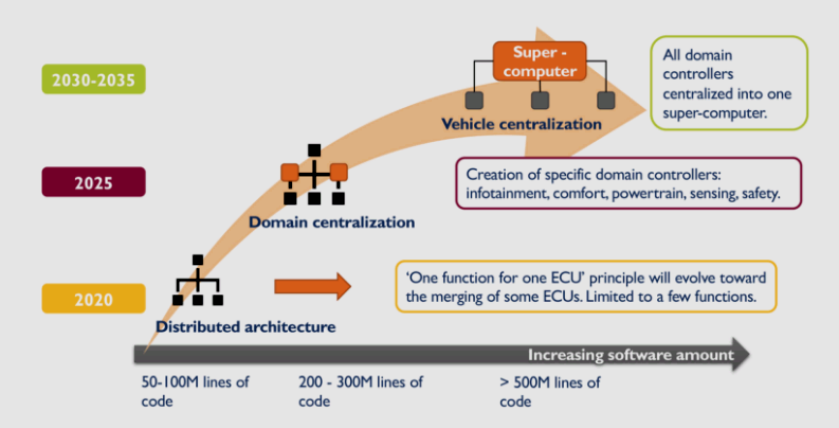

Even though ADAS vehicles are gradually climbing the SAE levels, the E/E (Electrical/Electronic) architecture used by most automakers has not changed since the 1960s, when electrical architectures first appeared. The distributed E/E architecture, built on the principle of “one ECU, one function,” still persists.