Note: This article is based on publicly available market information and is for reference only.

In backlit photography, faces appear completely dark while the background is overly bright; at the moment a vehicle exits a tunnel, its camera briefly goes “blind” because of the drastic change in light… These scenarios expose the core pain point of image sensors: insufficient dynamic range.

Dynamic range is the span between the brightest and darkest levels a sensor can capture simultaneously in a single image, and it directly determines whether an image can faithfully reproduce the light and dark details of the real world. When a sensor’s dynamic range is insufficient, either the highlights are overexposed or the shadow details are lost, a problem that is particularly prominent in smartphone and automotive cameras.

1. Insufficient Dynamic Range: The Dilemma of Bright and Dark Light


The physical limitations of traditional sensors

The dynamic range of an image sensor is bounded at the top by the pixel’s “full well capacity” (FWC), the maximum charge a single pixel can store, and at the bottom by its noise floor. When the light is too strong, the well saturates and the excess charge overflows, overexposing the highlights; when the light is too weak, the signal is drowned in noise and shadow details are lost. According to existing technical documentation, the dynamic range of ordinary smartphone sensors is about 60-80 dB, while the range of brightness the human eye can perceive is much wider.
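The link between well capacity, noise floor, and dynamic range can be made concrete with the usual definition DR = 20·log10(FWC / noise floor). The following Python sketch uses hypothetical pixel values (a 6,000 e- full well and a 2 e- noise floor) chosen only for illustration; they are not taken from any datasheet.

```python
import math


def dynamic_range_db(full_well_e: float, noise_floor_e: float) -> float:
    """Dynamic range in dB: largest storable signal over the noise floor.

    full_well_e   -- full well capacity in electrons (top of the range)
    noise_floor_e -- noise floor in electrons (bottom of the range)
    """
    if full_well_e <= 0 or noise_floor_e <= 0:
        raise ValueError("both values must be positive")
    return 20.0 * math.log10(full_well_e / noise_floor_e)


def brightness_ratio(db: float) -> float:
    """Inverse mapping: the brightness ratio a dB figure corresponds to."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    # Hypothetical small smartphone pixel: 6,000 e- well, 2 e- noise floor.
    print(f"{dynamic_range_db(6000, 2):.1f} dB")    # 69.5 dB
    # Brightness ratios spanned by 80 dB and by 150 dB.
    print(f"{brightness_ratio(80):,.0f}:1")          # 10,000:1
    print(f"{brightness_ratio(150):,.0f}:1")
```

Because every 20 dB is a factor of ten, the 60-80 dB quoted above corresponds to a brightness ratio of only about 1,000:1 to 10,000:1 within a single frame.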


The challenges of extreme scenarios

In automotive ADAS (Advanced Driver Assistance Systems), insufficient dynamic range can pose safety risks. The automotive industry widely recognizes, for example, that the sharp brightness change when entering and exiting tunnels presents a huge challenge to the dynamic range of traditional sensors: they struggle to capture both road signs in bright light and obstacles in dark areas at the same time, which can lead to algorithm misjudgments. Similar issues arise in smartphones. Tests by DXOMARK laboratories indicate that in backlit portrait mode, when the brightness difference between the face and the background exceeds the sensor’s capability, the photo loses significant detail, typically in a relatively dark subject set against a strong background light.
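The trade-off described above can be reproduced with a toy single-exposure model. The sketch below assumes a hypothetical sensor with about 70 dB of range (6,000 e- well, 2 e- noise floor) and a hypothetical backlit scene whose luminance spans about 86 dB; `electrons_per_unit` is an illustrative scale factor standing in for exposure time, aperture, and quantum efficiency.

```python
import math

FULL_WELL_E = 6000.0   # hypothetical full well capacity, electrons
NOISE_FLOOR_E = 2.0    # hypothetical noise floor, electrons (about 70 dB of range)


def classify(luminance: float, electrons_per_unit: float) -> str:
    """Classify one scene patch under a single exposure.

    electrons_per_unit is a hypothetical scale factor folding exposure time,
    aperture and quantum efficiency into electrons per unit of scene luminance.
    """
    signal = luminance * electrons_per_unit
    if signal >= FULL_WELL_E:
        return "clipped highlight"
    if signal < NOISE_FLOOR_E:
        return "lost in noise"
    return "ok"


def scene_range_db(luminances) -> float:
    values = list(luminances)
    return 20.0 * math.log10(max(values) / min(values))


# Hypothetical backlit scene, relative luminance of three patches.
SCENE = {"face in shadow": 1.0, "road": 50.0, "sky": 20000.0}

if __name__ == "__main__":
    print(f"scene range: {scene_range_db(SCENE.values()):.0f} dB")  # 86 dB
    for label, k in (("expose for sky", 0.25), ("expose for face", 10.0)):
        print(label, {name: classify(lum, k) for name, lum in SCENE.items()})
```

Exposing for the sky pushes the face below the noise floor, while exposing for the face clips the sky; because the scene spans more than the sensor’s roughly 70 dB, no single exposure keeps both.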

2. The Technical Route Debate: Multi-Frame Synthesis vs. Single Super Exposure

To break through the dynamic range bottleneck, the industry has developed two major technical paths:


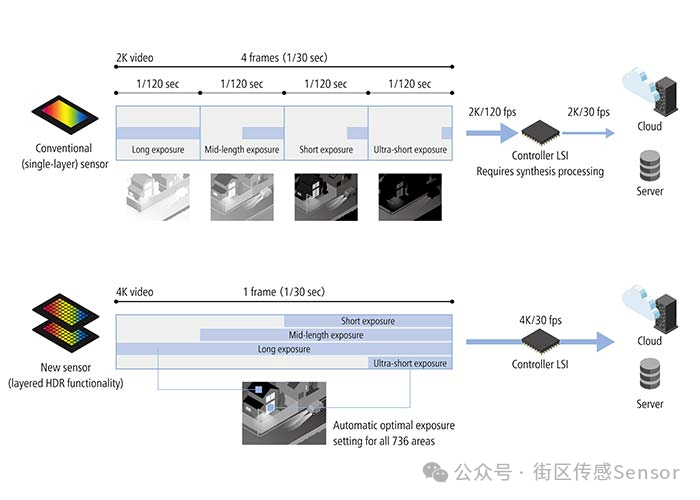

Multi-frame synthesis HDR: A reluctant compromise.

By quickly capturing multiple images with different exposures (such as short exposure to retain highlights and long exposure to capture shadows), an algorithm synthesizes a high dynamic range image. This technology is widely used in smartphones and digital cameras but has two fatal flaws:

- Motion artifacts: According to public information, smartphones such as the Google Pixel use multi-frame HDR, which requires capturing several photos in quick succession. In dynamic scenes, if the subject or the camera moves between frames, the frames no longer line up, which can easily cause synthesis errors and produce ghosting and other artifacts in the image.

- Latency and power consumption: Technical documentation from mobile platforms such as Qualcomm Snapdragon indicates that multi-frame HDR processing increases power consumption and may cause frame rate drops, because capturing, aligning, and merging several frames for every output image demands more computational resources and processing time.
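The merging step and its sensitivity to motion can be illustrated with a deliberately simplified two-frame example. This is a generic sketch, not the pipeline used by Google Pixel or any other product: it assumes a linear sensor, a hypothetical 1-D row of pixels, and a simple estimator that divides the sum of unclipped values by the sum of their exposure times.

```python
FULL_SCALE = 1.0       # normalized sensor output at saturation
SAT_THRESHOLD = 0.95   # samples at or above this level are treated as clipped


def capture(radiance, exposure_time):
    """Linear sensor model: output = radiance * time, clipped at full scale."""
    return [min(r * exposure_time, FULL_SCALE) for r in radiance]


def merge(frames, times):
    """Estimate per-pixel radiance from several exposures of the same row.

    For each pixel: sum of unclipped values / sum of their exposure times,
    i.e. a time-weighted average of the per-frame estimates value / time.
    Falls back to the shortest exposure if every sample is clipped.
    """
    shortest = times.index(min(times))
    merged = []
    for samples in zip(*frames):
        num = sum(v for v in samples if v < SAT_THRESHOLD)
        den = sum(t for v, t in zip(samples, times) if v < SAT_THRESHOLD)
        merged.append(num / den if den > 0 else samples[shortest] / times[shortest])
    return merged


def scene(object_pos, n=8, background=0.5, bright=40.0):
    """A dim background with one very bright pixel (e.g. a lamp) at object_pos."""
    return [bright if i == object_pos else background for i in range(n)]


if __name__ == "__main__":
    times = [1.0, 1 / 64]  # long and short exposure
    static = [capture(scene(2), t) for t in times]
    print(merge(static, times))
    # [0.5, 0.5, 40.0, 0.5, 0.5, 0.5, 0.5, 0.5]

    # The object is at pixel 5 in the long frame but at pixel 2 in the short frame.
    moving = [capture(scene(5), times[0]), capture(scene(2), times[1])]
    print([round(x, 3) for x in merge(moving, times)])
    # [0.5, 0.5, 1.108, 0.5, 0.5, 0.5, 0.5, 0.5]
```

With a static scene the merge recovers both the background and the bright object. When the object moves between frames, pixel 2 receives a value that matches neither the background nor the object, and the object disappears from pixel 5. Practical pipelines add frame alignment and ghost rejection to limit this kind of error, which adds to the computational cost.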


Single super exposure technology: Pixel-level innovation.

In recent years, single-exposure technology, represented by the onsemi Hyperlux series, has become a new direction. According to sensor datasheets released by onsemi, some models use super exposure (SE) pixel technology to achieve high dynamic range in a single exposure. For example, sensors such as the AR0823 support up to 150 dB HDR + LFM (high dynamic range plus LED flicker mitigation) at full resolution. Core innovations include:

- Pixel overflow capacitor (OFC): A capacitor in each pixel collects the charge that overflows once the photodiode saturates, expanding the effective well capacity and significantly increasing how much charge a single pixel can store.

- LOFIC (lateral overflow integration capacitor) low-crosstalk architecture: Some sensors adopt a design with one microlens per pixel, which helps reduce optical crosstalk and improve image quality. This technology has been applied in camera systems built around Mobileye EyeQ series automotive chips, supporting real-time scene perception for advanced driver assistance systems and even higher levels of autonomous driving.

Source: onsemi
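How an overflow capacitor extends the top of the range can be shown with simple charge bookkeeping. The sketch below is an illustrative model, not onsemi’s pixel design: the photodiode well, capacitor capacity, and noise floor are hypothetical, and it assumes the noise floor stays set by the low-noise photodiode readout while the capacitor only matters for bright pixels.

```python
import math


def db(ratio: float) -> float:
    return 20.0 * math.log10(ratio)


def lofic_pixel(electrons, pd_full_well, cap_full_well):
    """Split photo-generated charge between photodiode and overflow capacitor.

    Charge beyond pd_full_well spills into the capacitor instead of being lost;
    anything beyond the combined capacity is clipped. Returns (in_pd, in_cap).
    """
    total = min(electrons, pd_full_well + cap_full_well)
    in_pd = min(total, pd_full_well)
    return in_pd, total - in_pd


if __name__ == "__main__":
    NOISE_FLOOR_E = 2.0    # hypothetical noise floor of the photodiode readout
    PD_WELL_E = 6000.0     # hypothetical photodiode full well
    CAP_WELL_E = 120000.0  # hypothetical extra capacity of the overflow capacitor

    print(lofic_pixel(50000, PD_WELL_E, CAP_WELL_E))  # (6000.0, 44000.0)
    print(f"photodiode only: {db(PD_WELL_E / NOISE_FLOOR_E):.1f} dB")                # 69.5 dB
    print(f"with overflow:   {db((PD_WELL_E + CAP_WELL_E) / NOISE_FLOOR_E):.1f} dB")  # 96.0 dB
```

In this toy model the capacitor raises the dynamic range by 20·log10(126,000 / 6,000), about 26 dB, without changing the noise floor. The figures are chosen for illustration and do not reproduce the 150 dB specification quoted above.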

3. Case Analysis: Zone Exposure and Motion Artifact Elimination


Canon’s zone exposure sensor

Canon has disclosed a patented technology that divides the image sensor into hundreds or more independent regions, each of which can adjust its own exposure time based on the light intensity it detects. In backlit scenarios, different parts of the image often differ greatly in brightness; for example, the sky may be very bright while objects on the ground are relatively dark. With zone exposure, the sensor can use shorter exposure times in bright areas to prevent overexposure and longer exposure times in dark areas to capture more detail, so the final image strikes a better balance between highlights and shadows and retains more image information.
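Per-zone exposure selection can be sketched as metering each zone and scaling its exposure time toward a target level. Canon’s control logic is not described here, so everything in this example is an assumption: the zone grid, the 0.18 target level, the exposure limits, and the linear-sensor model used to scale the times.

```python
def zone_exposures(metering, zones_y, zones_x, base_time,
                   target=0.18, t_min=1 / 8000, t_max=1 / 30):
    """Choose one exposure time per zone from a metering frame.

    metering  -- 2-D list of normalized pixel values (0..1) captured at base_time
    zones_y/x -- zone grid size (image dimensions assumed divisible by it)
    Each zone's time is scaled so that its mean level would land on `target`,
    assuming a linear sensor, then clamped to [t_min, t_max].
    """
    h, w = len(metering), len(metering[0])
    zh, zw = h // zones_y, w // zones_x
    times = []
    for zy in range(zones_y):
        row = []
        for zx in range(zones_x):
            block = [metering[y][x]
                     for y in range(zy * zh, (zy + 1) * zh)
                     for x in range(zx * zw, (zx + 1) * zw)]
            mean = max(sum(block) / len(block), 1e-6)  # guard against all-black zones
            t = base_time * target / mean
            row.append(min(max(t, t_min), t_max))
        times.append(row)
    return times


if __name__ == "__main__":
    # Hypothetical 4x4 metering frame: bright sky on top, dark ground below.
    frame = [[0.9] * 4, [0.9] * 4, [0.03] * 4, [0.03] * 4]
    for row in zone_exposures(frame, zones_y=2, zones_x=2, base_time=1 / 500):
        print([f"1/{1 / t:.0f}" for t in row])
    # ['1/2500', '1/2500']
    # ['1/83', '1/83']
```

The bright upper zones receive about 1/2500 s and the dark lower zones about 1/83 s. In a real sensor, each zone’s output would then need to be normalized by its exposure time (or an equivalent gain) so that the zones stitch into one consistent image.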


BYD Semiconductor’s exposure control technology

BYD has proposed an innovative time-sharing drive scheme for controlling image sensor exposure. The core idea is to divide the sensor’s pixel rows into several groups that are exposed at different time intervals. For example, some rows may be exposed several times in quick succession to capture highlight detail, while others are exposed for longer to gather more information in the shadows. By rapidly switching exposure in this way, the sensor can effectively suppress LED flicker, significantly improving image clarity and stability in scenes that contain LED light sources.

Source: Canon
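Why splitting a short exposure helps against LED flicker can be seen from a timing model of a pulse-width-modulated (PWM) LED. The following is a generic illustration rather than BYD’s scheme; the 90 Hz drive frequency, 10% duty cycle, 1/10,000 s total exposure, and 16 sub-exposures are all hypothetical values.

```python
import math


def led_on_time(start, duration, period, duty):
    """Seconds within [start, start + duration] during which a PWM LED is lit.

    The LED is assumed to be on for the first duty * period of every period.
    """
    end = start + duration
    on_len = duty * period
    lit = 0.0
    for k in range(math.floor(start / period) - 1, math.floor(end / period) + 2):
        on_start, on_end = k * period, k * period + on_len
        lit += max(0.0, min(end, on_end) - max(start, on_start))
    return lit


def captured_fraction(starts, sub_duration, period, duty):
    """Fraction of the total integration time during which the LED was lit."""
    lit = sum(led_on_time(s, sub_duration, period, duty) for s in starts)
    return lit / (len(starts) * sub_duration)


if __name__ == "__main__":
    PERIOD = 1 / 90       # hypothetical 90 Hz PWM drive
    DUTY = 0.10           # LED lit 10% of each period
    TOTAL = 1 / 10000     # short total exposure chosen to protect highlights
    N = 16                # hypothetical number of sub-exposures
    phases = [i * PERIOD / 100 for i in range(100)]  # sweep frame-vs-LED timing

    single = [captured_fraction([p], TOTAL, PERIOD, DUTY) for p in phases]
    split = [captured_fraction([p + j * PERIOD / N for j in range(N)],
                               TOTAL / N, PERIOD, DUTY) for p in phases]
    print(f"single exposure: min {min(single):.2f}, max {max(single):.2f}")  # min 0.00, max 1.00
    print(f"{N} sub-exposures: min {min(split):.2f}, max {max(split):.2f}")
```

Depending on its phase, the single short exposure either sees the LED fully lit or misses the pulse entirely, so the light appears to flicker from frame to frame. Spreading the same total integration time over sub-exposures spaced closer than the LED’s on-time guarantees that at least one of them overlaps a pulse, so the captured fraction stays near the duty cycle.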

4. Future Directions: Balancing Dynamic Range and Scene Adaptation


Multi-technology integration

Sony has released LYTIA series image sensors that combine LOFIC (or a similar overflow-capacitor technology) with DCG (dual conversion gain), aiming to further extend single-exposure dynamic range. Some models exceed 140 dB of dynamic range. Their automotive versions have passed AEC-Q100 Grade 2 qualification, which covers operation from -40 °C to +105 °C, meeting the needs of automotive applications.
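The way a DCG pixel combines its two readouts can be sketched as follows. This is a generic model, not Sony’s implementation: the 12-bit ADC, the two conversion gains, the switching threshold, and the 1 e- noise floor are hypothetical, and the hard switch between readouts is simply the easiest choice to show.

```python
import math

ADC_MAX = 4095                 # hypothetical 12-bit ADC full scale (DN)
HCG_GAIN = 4.0                 # hypothetical high conversion gain, DN per electron
LCG_GAIN = 0.25                # hypothetical low conversion gain, DN per electron
SWITCH_LEVEL = 0.9 * ADC_MAX   # switch to LCG before the HCG output clips
NOISE_FLOOR_E = 1.0            # hypothetical noise floor of the HCG path, electrons


def read_dcg(electrons: float) -> tuple[int, int]:
    """Read the same pixel charge at both conversion gains, clipping at the ADC limit."""
    hcg = min(round(electrons * HCG_GAIN), ADC_MAX)
    lcg = min(round(electrons * LCG_GAIN), ADC_MAX)
    return hcg, lcg


def combine(hcg_dn: int, lcg_dn: int) -> float:
    """Reconstruct a linear signal in electrons from the two readouts."""
    if hcg_dn < SWITCH_LEVEL:
        return hcg_dn / HCG_GAIN   # dim pixel: fine steps, low noise
    return lcg_dn / LCG_GAIN       # bright pixel: coarse steps, much later clipping


def db(ratio: float) -> float:
    return 20.0 * math.log10(ratio)


if __name__ == "__main__":
    for e in (10, 900, 10000, 20000):
        print(e, "e- ->", combine(*read_dcg(e)), "e-")
    print(f"HCG only:  {db(ADC_MAX / HCG_GAIN / NOISE_FLOOR_E):.1f} dB")  # 60.2 dB
    print(f"HCG + LCG: {db(ADC_MAX / LCG_GAIN / NOISE_FLOOR_E):.1f} dB")  # 84.3 dB
```

Under these assumptions the high-gain readout alone covers about 60 dB, and switching to the low-gain readout for bright pixels extends the range to about 84 dB within one exposure; the 20,000 e- pixel still clips at the low-gain limit of 16,380 e-.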


Algorithmic collaborative optimization

Advanced autonomous driving computing platforms such as NVIDIA’s DRIVE Hyperion integrate AI algorithms that predict changes in scene lighting and adjust sensor parameters in advance, for example just before a vehicle enters or exits a tunnel, so that exposure is already adapted when the lighting changes instead of lagging behind it.
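The benefit of adjusting exposure ahead of a lighting change can be illustrated by comparing a reactive auto-exposure loop with one that receives a prediction. This sketch is not NVIDIA’s algorithm: the 0.18 target level, the 1,000-fold brightness jump at a hypothetical tunnel exit, and the assumption of a perfect prediction are all simplifications for illustration.

```python
TARGET = 0.18  # desired mean frame level (normalized; 1.0 means clipped)


def simulate(luminance, predicted=None):
    """Return the mean level of each frame under a simple auto-exposure loop.

    Reactive mode (predicted is None): after each frame, exposure is rescaled
    by TARGET / measured level. The measurement clips at 1.0, so after a sudden
    brightening the loop can only cut exposure by a bounded factor per frame.
    Predictive mode: `predicted` gives the luminance expected for each frame
    (a hypothetical input), and exposure is set from it before capture.
    """
    exposure = TARGET / luminance[0]
    levels = []
    for i, lum in enumerate(luminance):
        if predicted is not None:
            exposure = TARGET / predicted[i]
        level = min(lum * exposure, 1.0)
        levels.append(level)
        if predicted is None:
            exposure *= TARGET / max(level, 1e-6)
    return levels


if __name__ == "__main__":
    # Hypothetical tunnel exit: scene luminance jumps 1,000x at frame 10.
    scene = [10.0] * 10 + [10000.0] * 10
    for name, pred in (("reactive", None), ("predictive", scene)):
        clipped = sum(1 for lv in simulate(scene, pred) if lv >= 1.0)
        print(f"{name}: {clipped} overexposed frames")
    # reactive: 4 overexposed frames
    # predictive: 0 overexposed frames
```

Because the reactive loop only sees clipped frames after the jump, it needs four overexposed frames to recover, while the predictive loop keeps every frame at the target. A real predictor would be imperfect, so the gain in practice would be smaller than in this idealized case.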


Standardization and safety

Under ISO 26262, sensors used in safety-related automotive functions must meet the functional safety requirements of their assigned Automotive Safety Integrity Level (ASIL), such as ASIL B, including high fault detection coverage. According to ISO 26262-5:2011, for random hardware failures the target single-point fault metric (SPFM) for ASIL B is ≥90%. Products such as the onsemi Hyperlux series have already passed the relevant certifications, meeting the safety and reliability requirements of automotive electronics.

Source: SONY
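The single-point fault metric is computed from failure rates over the safety-related hardware elements: SPFM = 1 - Σ(λ_SPF + λ_RF) / Σλ. The sketch below also computes the latent-fault metric, 1 - Σλ_MPF,latent / Σ(λ - λ_SPF - λ_RF), and checks both against the ISO 26262-5 targets for ASIL B, C, and D. The element breakdown and FIT values are hypothetical and do not describe any real sensor.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """Failure rates in FIT (failures per 1e9 hours) of one hardware element."""
    name: str
    total: float          # lambda: total failure rate of the element
    single_point: float   # lambda_SPF: faults not covered by any safety mechanism
    residual: float       # lambda_RF: part of covered faults the mechanism still misses
    latent: float = 0.0   # lambda_MPF,latent: multiple-point faults that stay undetected


# Target values for ASIL B/C/D from ISO 26262-5.
SPFM_TARGET = {"B": 0.90, "C": 0.97, "D": 0.99}
LATENT_TARGET = {"B": 0.60, "C": 0.80, "D": 0.90}


def spfm(elements):
    total = sum(e.total for e in elements)
    return 1.0 - sum(e.single_point + e.residual for e in elements) / total


def latent_fault_metric(elements):
    remaining = sum(e.total - e.single_point - e.residual for e in elements)
    return 1.0 - sum(e.latent for e in elements) / remaining


def highest_asil(elements):
    """Highest ASIL whose SPFM and latent-fault targets are both met, or None."""
    s, lat = spfm(elements), latent_fault_metric(elements)
    met = [a for a in ("B", "C", "D") if s >= SPFM_TARGET[a] and lat >= LATENT_TARGET[a]]
    return met[-1] if met else None


if __name__ == "__main__":
    # Hypothetical breakdown of a sensor's safety-related hardware.
    sensor = [
        Element("pixel array", total=20.0, single_point=0.0, residual=1.0, latent=4.0),
        Element("readout/ADC", total=15.0, single_point=0.0, residual=1.0, latent=3.0),
        Element("output interface", total=5.0, single_point=0.5, residual=0.5, latent=1.0),
    ]
    print(f"SPFM {spfm(sensor):.1%}")                                  # 92.5%
    print(f"latent-fault metric {latent_fault_metric(sensor):.1%}")    # 78.4%
    print("highest ASIL met:", highest_asil(sensor))                   # B
```

With these numbers the design meets ASIL B but not ASIL C, which would require an SPFM of at least 97%.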

Conclusion

Improving dynamic range is not only a technical competition but also a pursuit of fidelity to the real world. From the compromise of multi-frame synthesis to the breakthroughs of single super exposure, image sensors are advancing at the pixel level to bring the machine’s eye closer to human vision. As autonomous driving, AR/VR, and similar technologies spread, demand for high dynamic range image sensors will keep growing, and this quiet battle over light and dark will continue, with the answers perhaps hidden in more advanced microphysical structures and smarter collaboration between sensors and algorithms.