0 Introduction

Artificial intelligence simulates human thinking and decision-making patterns to give machines human-like capabilities, and it is transforming human society in fields such as the economy, transportation, healthcare, security, and education. Artificial intelligence has become a focal point of competition among major powers and a new engine of economic development, bringing new opportunities for social progress. Since the United States released the “National Artificial Intelligence Research and Development Strategic Plan” in 2016, many countries have released their own artificial intelligence strategic plans, including China, France, the UK, Germany, Japan, and Canada. China’s artificial intelligence technology has developed rapidly. Driven by scale effects, China has become a leading nation in the field and a major competitor to the United States. However, China still falls short in the number of artificial intelligence enterprises, industrial layout, talent pool, and investment trends. Weak foundational technology is a key factor restricting the further development of artificial intelligence in China. The talent pool, a fundamental factor in China-US competition, has also not kept pace with the rapid growth of China’s artificial intelligence industry.
Several policy documents followed:

- In 2017, the State Council issued the “Next Generation Artificial Intelligence Development Plan”.
- In 2018, the Ministry of Education issued the “Artificial Intelligence Innovation Action Plan for Higher Education Institutions”.
- In 2020, the Ministry of Education, the National Development and Reform Commission, and the Ministry of Finance jointly issued “Several Opinions on Promoting Interdisciplinary Integration and Accelerating the Training of Graduate Students in Artificial Intelligence at ‘Double First-Class’ Universities”. This document calls on universities to strengthen curriculum development and to produce a set of influential textbooks and national high-quality online open courses.

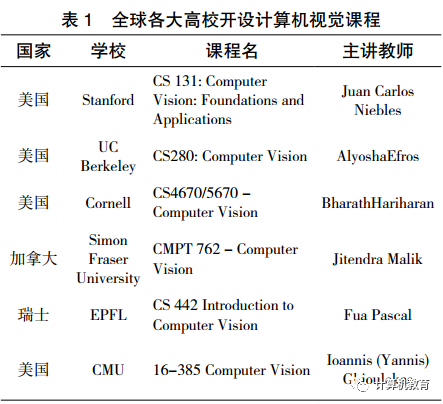

In recent years, major universities around the world have introduced undergraduate computer vision courses. Representative examples include Computer Vision: Foundations and Applications, taught by Juan Carlos Niebles at Stanford University, and the Computer Vision course taught by Professor Alyosha Efros’s team at the University of California, Berkeley. Table 1 lists computer vision courses offered by several foreign universities.

Domestically, several studies have addressed how the course is taught:

- Reference [1] discusses how to select teaching content and engineering examples for computer vision courses, offering guidance for current teaching.
- Reference [2] discusses building a comprehensive experimental platform suited to the characteristics of computer vision courses. It introduces four experimental platforms: panoramic stitching, biometric recognition, 3D model retrieval, and deep learning.
- Reference [3] explores an internationalized teaching model for computer vision courses.
- Reference [4] introduces an innovative computer vision experimental platform based on the NVIDIA Jetson TX1, an embedded chipset with a built-in GPU. The platform frees computer vision experiments from the PC and enables application-oriented embedded development, combining theory with practice and improving the effectiveness of experimental teaching.
- Reference [5] explores reforming undergraduate computer vision courses for new engineering disciplines in three ways: making course content more applicable and up to date, increasing the depth and rigor of practical content and assessments, and offering multi-level course content aligned with professional needs.
- Reference [6] introduces a computer vision virtual laboratory based on OpenCV and OpenGL. It addresses the insufficient equipment and limited flexibility of current university computer vision experiments.

Universities have achieved some results in teaching reform. However, insufficient practical conditions and a shortage of practical platforms remain common problems for local universities, particularly those in economically underdeveloped regions. The current international situation is complex, and there is a risk of China-US technological decoupling. Yet resources and platforms for artificial intelligence education are still predominantly controlled by foreign entities. This is especially true in computer vision, where widely used tools such as TensorFlow, PyTorch, and OpenCV were all initiated and are supported by American companies. Cultivating talent skilled in independently controllable technology is therefore key to future competition among major powers in artificial intelligence.

In summary, computer vision courses in China currently face the following challenges:

① Computer vision is a typical interdisciplinary subject with a broad and deep range of knowledge points.
② Computer vision technology develops rapidly, and knowledge is updated quickly.
③ Computer vision knowledge is fragmented and lacks a systematic structure.
④ Teaching content, especially practical content, is disconnected from enterprise needs.
⑤ The international situation is complex, with a risk of China-US technological decoupling, while resources and platforms for computer vision remain predominantly controlled by foreign entities.

To address these issues, this project builds two comprehensive deep-learning experimental platforms, one for face detection and one for face recognition. Both are based on the domestically produced MindSpore platform and the Atlas 200 development kit. The experiments span computer vision, operating systems, networks, AI processors, image sensors, deep learning, and software development, and they cover 70% of the knowledge points in computer vision. On completing the experiments, students have a program that solves a complete, complex, enterprise-oriented application problem. This builds students’ ability to solve real problems faced by enterprises. It also increases their engagement in practical courses and their interest in learning.

1 Experimental Teaching Project

Computer vision is one of the core courses in artificial intelligence and big data programs, with a total of 32 class hours, including 8 in-class experiments. Face recognition and face detection algorithms are two typical applications in the computer vision course, involving most knowledge points in image acquisition, image preprocessing, feature localization, feature extraction, feature representation, image segmentation, and feature matching.

1.1 Experimental Objectives

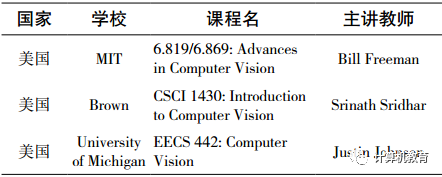

Graphics processing units have become widespread and computing power has grown. As a result, deep learning algorithms have become an essential part of today’s computer vision courses because of their excellent accuracy in image processing. Advanced technologies such as face recognition, image understanding, pedestrian detection, and large-scale scene recognition now all use deep learning. Deep-learning face recognition algorithms perform a large number of convolutions and multiplications, so they require high-performance chips with strong data-processing capability. However, high-performance chips inevitably raise the overall power consumption of the system, which limits the usability of face recognition systems. In real production, a system must meet its performance requirements while drawing far less power. Lower power consumption both reduces production costs and gives users a better experience. The objective of this experimental teaching is for students to build a face detection and recognition system that can operate in real production environments. Students apply their knowledge of computer vision, operating systems, and computer networks, and the system runs on an embedded application platform that meets low-power, high-performance requirements. The system is built on the Atlas 200 hardware, as shown in Figure 1.

1.2 Atlas 200

As deep learning algorithms grow more complex, embedded vision devices lack the computing power to run them. Algorithms designed and trained on servers therefore cannot simply be transferred to embedded devices. Huawei developed the Atlas 200 DK (Developer Kit) application development board to meet this need. It integrates the Huawei Ascend 310 AI processor and supports rapid development and verification. It is widely used for developer validation, university education, and scientific research.

The Atlas 200 board uses the Atlas 200 AI acceleration module (model 3000). This module integrates the HiSilicon Ascend 310 AI processor and can analyze and run inference on many types of data, including images and video. The module is compact, only half the size of a credit card, which meets enterprise demand for portable devices. It provides 22 TOPS of INT8 computing power, with multi-level configurations of 22/16/8 TOPS. It supports real-time analysis of 20 channels of high-definition video (1080P at 25 FPS). The module also supports milliwatt-level sleep and millisecond-level wake-up, with a typical power consumption of only 5.5 W. This makes it suitable for edge AI applications.
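The figures quoted above allow a quick sanity check of what the module can afford per frame. The sketch below is a back-of-envelope calculation, not a benchmark. It assumes the 22 TOPS peak could be split evenly across all 20 streams at 25 FPS. Real utilization is lower, and the 20-channel figure may describe video decoding rather than neural-network inference, so the results are upper bounds only. The constant and function names are illustrative.

```python
# Back-of-envelope budget from the Atlas 200 figures quoted above.
# Assumes the peak INT8 rating is split evenly across all streams; real
# utilization is lower, so treat the results as upper bounds.

PEAK_TOPS_INT8 = 22      # peak INT8 throughput (tera-operations per second)
CHANNELS = 20            # simultaneous 1080P streams
FPS = 25                 # frames per second per stream
TYPICAL_POWER_W = 5.5    # typical power draw (watts)


def ops_per_frame(peak_tops: float, channels: int, fps: int) -> float:
    """Upper bound on operations available per frame, in giga-operations (GOP)."""
    frames_per_second = channels * fps
    return peak_tops * 1e12 / frames_per_second / 1e9


def tops_per_watt(peak_tops: float, power_w: float) -> float:
    """Peak energy efficiency in TOPS per watt."""
    return peak_tops / power_w


if __name__ == "__main__":
    print(f"{ops_per_frame(PEAK_TOPS_INT8, CHANNELS, FPS):.0f} GOP per frame")  # 44
    print(f"{tops_per_watt(PEAK_TOPS_INT8, TYPICAL_POWER_W):.1f} TOPS/W")       # 4.0
```

A model may need more operations per frame than this bound allows. In that case, fewer streams or a lower frame rate must be accepted.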

1.3 MindSpore Platform

MindSpore is an open-source deep learning framework recently launched by Huawei, comparable to popular frameworks such as TensorFlow, PyTorch, and PaddlePaddle. It is designed to make full use of the computing power of Huawei Ascend processors and supports full-scenario deployment across devices, edge, and cloud. MindSpore offers simple programming, device-cloud collaboration, easy debugging, high performance, and open-source availability, which together lower the threshold for AI development. Its code is not especially elegant. It resembles Google style but is less strict about details, and its abstractions resemble TensorFlow’s. This style suits large-scale collaborative development while maintaining a reasonable level of quality. Huawei and domestic research institutions have promoted MindSpore extensively, and its applications have become increasingly widespread in recent years.

1.4 Experimental Steps

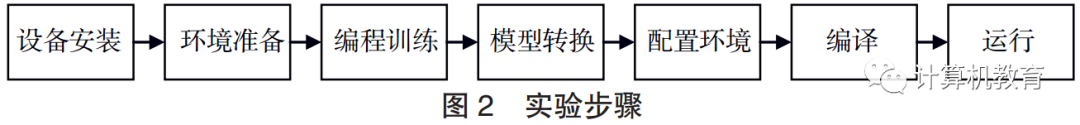

The steps for the face recognition and face detection experiments are shown in Figure 2, mainly including device installation, environment preparation, programming training, model conversion, environment configuration, compilation, and execution.

(1) Device Installation: This step covers installing the camera, network cable, and USB cable; the camera installation is slightly more complex. The Atlas 200 supports external cameras through two MIPI-CSI camera interfaces. A standard Raspberry Pi camera was used in this experiment.

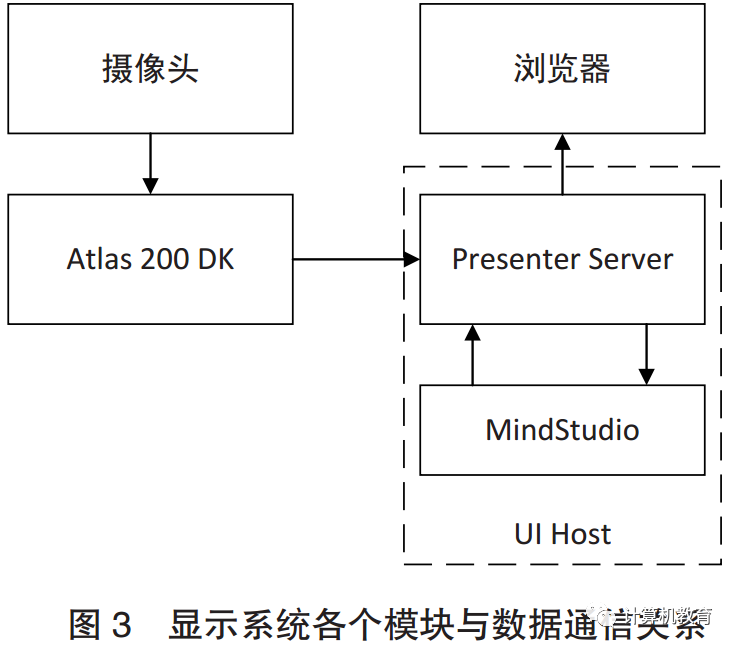

(2) Environment Preparation: The experiments run on Ubuntu, so Ubuntu must be installed on the user’s PC. The development software, MindStudio, is a Huawei development toolchain built on the IntelliJ framework. It provides application development, debugging, and model conversion, and it assists development with network porting, optimization, and analysis. Before development, the system image must be burned onto the storage card of the Atlas 200 development board and the relevant hardware drivers installed. The development language is Python. Once the software is installed, the relevant environment variables must be configured. Figure 3 shows how the modules and data in the system communicate.

(3) Programming Training: This experiment uses the YOLOv3 model. Students build a training dataset from images prepared by the teacher, generating training samples and their corresponding labels. They then preprocess the data, train and optimize the model, and obtain a model for face recognition or detection.

(4) Model Conversion: Use MindStudio to convert the trained network model into an offline model (.om file) that is compatible with the Ascend AI processor and can run on the Atlas 200.

(5) Environment Configuration: Configure the runtime environment on the Atlas 200 by installing packages such as PresentAgent, Autoconf, and Automake. Then set the environment variables correctly.

(6) Code Compilation: Once the environment is configured, compile the code and deploy it to the Atlas 200.

(7) Execution: Once the code has been compiled and deployed, run it. The system recognizes faces, marks each face with a bounding box, and displays the confidence of each recognition result.
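Steps (3) and (4) leave the data format implicit. The following is a minimal sketch, assuming Darknet-style text labels and a single face class with id 0. In that format, each box is one line giving the class id followed by the box center, width, and height, all normalized by the image size. The first function below generates such a line. A converted offline model is usually built for a fixed input size, so inference frames must also be resized to match. The letterbox helpers assume 416 × 416, a common YOLOv3 input size that the article does not confirm. All function names are hypothetical.

```python
# Hypothetical data-preparation helpers for a single-class ("face") YOLOv3 dataset.
# Assumes Darknet-style text labels and a 416x416 network input; neither is
# specified by the experiment itself.


def to_yolo_label(class_id: int, box: tuple, img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) into one YOLO label line:
    "<class> <x_center> <y_center> <width> <height>", normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} does not fit a {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def letterbox_params(src_w: int, src_h: int, dst_w: int = 416, dst_h: int = 416):
    """Return (scale, (new_w, new_h), (left, top, right, bottom)) for fitting a
    frame into a fixed model input without distorting its aspect ratio."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_w, pad_h = dst_w - new_w, dst_h - new_h
    left, top = pad_w // 2, pad_h // 2
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)


def to_source_coords(x: float, y: float, scale: float, left: int, top: int):
    """Map a point predicted on the letterboxed input back to the original frame."""
    return (x - left) / scale, (y - top) / scale


if __name__ == "__main__":
    # A face occupying pixels (100, 50)-(300, 250) in a 640x480 training image
    print(to_yolo_label(0, (100, 50, 300, 250), 640, 480))
    # -> 0 0.312500 0.312500 0.312500 0.416667
    scale, size, pad = letterbox_params(1920, 1080)
    print(size, pad)  # -> (416, 234) (0, 91, 0, 91)
```

The pixel work itself can be done with any image library: resize the frame, then pad it by the returned margins. OpenCV’s `cv2.resize` and `cv2.copyMakeBorder` are one option. `to_source_coords` undoes the transform so that detected boxes can be drawn on the original frame.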
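Section 3.3 reports that most students stalled at environment configuration and compilation. One way to surface such problems early is a small preflight check, run before compiling, that reports unset environment variables and missing build tools in one pass. The sketch below is hypothetical. `HYPOTHETICAL_SDK_HOME` is a placeholder, and the real variable names must come from Huawei’s documentation or the experimental guidebook. Autoconf and Automake are the tools named in step (5).

```python
import os
import shutil


def preflight(env_vars, tools, environ=None):
    """Return (unset_variables, missing_tools) for the given names.

    environ defaults to the current process environment; a variable set to an
    empty string counts as unset. Tools are looked up on that environment's
    PATH (or the process PATH if it has none).
    """
    environ = os.environ if environ is None else environ
    missing_vars = [v for v in env_vars if not environ.get(v)]
    missing_tools = [t for t in tools
                     if shutil.which(t, path=environ.get("PATH")) is None]
    return missing_vars, missing_tools


if __name__ == "__main__":
    unset, missing = preflight(
        ["HYPOTHETICAL_SDK_HOME"],   # placeholder; use the variables from the guidebook
        ["autoconf", "automake"],    # build tools named in step (5)
    )
    for name in unset:
        print(f"environment variable not set: {name}")
    for name in missing:
        print(f"tool not found on PATH: {name}")
    if not unset and not missing:
        print("preflight OK")
```

Listing every problem at once, rather than failing at the first compiler error, lets students fix the whole configuration in a single pass.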
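Step (7) displays a box and a confidence value for each face. YOLOv3 emits many overlapping candidate boxes, so some post-processing is needed before drawing. The article does not describe what its sample code does. A common choice, sketched here in plain Python, is to discard candidates below a confidence threshold and then apply greedy non-maximum suppression (NMS). The thresholds 0.5 and 0.45 are illustrative assumptions, not values from the experiment, and the function names are hypothetical.

```python
# Hypothetical post-processing for YOLO-style face detections: confidence
# thresholding followed by greedy non-maximum suppression (NMS).

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, conf_thresh=0.5, iou_thresh=0.45):
    """Drop low-confidence boxes, then suppress boxes that overlap a
    higher-scoring kept box. Returns kept indices, highest score first."""
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < iou_thresh for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(10, 10, 110, 110), (15, 12, 112, 108), (200, 200, 260, 260), (0, 0, 20, 20)]
    scores = [0.92, 0.85, 0.77, 0.30]
    # Keeps boxes 0 and 2: box 1 overlaps box 0 (IoU ~0.89), box 3 is below threshold
    for i in filter_detections(boxes, scores):
        print(f"face at {boxes[i]}, confidence {scores[i]:.2f}")
```

Greedy NMS keeps the highest-scoring box in each cluster of overlapping boxes. It is quadratic in the number of candidates, which is acceptable for the handful of faces in a camera frame.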

2 Teaching Implementation

Computer vision is one of the core courses in artificial intelligence. It directly serves 140 students in 4 teaching classes of our university’s artificial intelligence and big data program, amounting to 4480 person-hours (140 students × 32 class hours). This project rapidly brings cutting-edge technology into teaching, uses systematic training methods, and takes a problem-oriented teaching approach. All three can also be extended to other artificial intelligence courses.

Because class hours are limited, the author clearly defines the focus of the computer vision course. Key topics are discussed and practiced in depth, while less critical content is only briefly introduced or left for students to browse. Object detection and face recognition are prioritized, with substantial time devoted to explaining the theory and organizing student practice. This arrangement streamlines the teaching content and deepens the level of teaching within the limited time. It also stimulates students’ curiosity and capacity for independent learning. Finally, it lets the lead instructor concentrate on key and difficult points and on strengthening students’ hands-on skills.

During the implementation of practical teaching, a “five-step teaching method” is employed, namely pre-class preparation, interest introduction, collaborative inquiry, teacher feedback, and extension.

(1) Pre-class Preparation: The relevant theory is taught before the experimental class. The experimental guidebook is also distributed two weeks in advance, so students can study it on their own and become familiar with the experiment.

(2) Interest Introduction: Theory lessons on face detection and recognition emphasize the application value of these technologies and guide students to notice the face recognition applications they use in daily life. In the experimental classes, the finished results of the experiment are demonstrated to spark students’ interest.

(3) Collaborative Inquiry: Experiments are conducted in small groups, typically with 2-3 people per group, with one person responsible for the theoretical part and another for practical coding and operations, enhancing students’ collaboration and communication skills.

(4) Teacher Feedback: In class, the teacher primarily addresses problems encountered by students during experiments and facilitates discussions and guidance. This helps cultivate students’ ability to independently solve problems and alleviates the teacher’s burden.

(5) Extension: Open-ended follow-up tasks are assigned, such as extending the system to recognize and detect other objects that solve everyday problems.

3 Teaching Evaluation and Reflection

After the course ended, a questionnaire survey of two teaching classes was conducted to evaluate teaching effectiveness. It covered students’ basic information, experimental outcomes, and problems encountered during the experiments. Of the 70 questionnaires distributed, 69 were returned.

3.1 Basic Information

First, the gender composition of respondents was tallied so that interest in the experimental project could be compared by gender. Among respondents from the artificial intelligence and big data program, 69.6% were male and 30.4% female (48 and 21 of 69), so males remain the majority.

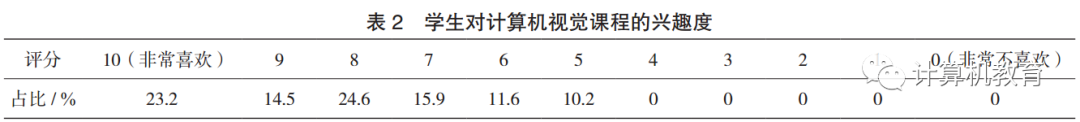

Students’ interest in the computer vision course is summarized in Table 2. The median score is 8 points, indicating that most students are quite interested in the course.

3.2 Experimental Outcomes

Of the students surveyed, 20.3% completed the Atlas 200 face recognition and detection experiments and 79.7% did not. Because most students did not fully complete the experiment, project-oriented teaching clearly poses real challenges for both students and teachers, particularly when time is limited. Completion was also broken down by gender:

- Of the 14 students who completed the experiment, 4 were female (28.6%) and 10 were male (71.4%).
- Of the 55 who did not, 17 were female (30.9%) and 38 were male (69.1%).

Completion therefore shows no significant correlation with gender. This suggests that, in the artificial intelligence and big data program, female students do not exhibit the weaker hands-on ability that is commonly assumed.
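The percentages above can be re-derived from the raw counts, and the “no significant correlation” statement can be checked with a standard test. The article does not say which test, if any, was used. The sketch below uses Fisher’s exact test on the 2 × 2 completion-by-gender table. This test is chosen here because the expected number of female completers, 14 × 21 / 69 ≈ 4.3, is below the usual threshold of 5 for a chi-square approximation. It is pure Python using only `math.comb`; `scipy.stats.fisher_exact` offers the same test. The helper names are hypothetical.

```python
from math import comb


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * part / whole, 1)


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same margins
    that is no more likely than the observed one. Integer weights keep the
    comparison exact.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)

    def weight(k: int) -> int:
        # Number of ways to place k in the top-left cell given fixed margins
        return comb(col1, k) * comb(n - col1, row1 - k)

    observed = weight(a)
    total = sum(w for w in map(weight, range(lo, hi + 1)) if w <= observed)
    return total / comb(n, row1)


if __name__ == "__main__":
    # Counts from Section 3.2 (rows: completed / not completed; columns: female / male)
    completed_f, completed_m, not_f, not_m = 4, 10, 17, 38
    print(pct(completed_f + completed_m, 69), pct(not_f + not_m, 69))  # 20.3 79.7
    p = fisher_exact_two_sided(completed_f, completed_m, not_f, not_m)
    print(f"two-sided p = {p:.3f}")  # 1.000
```

The observed table is the single most likely table given the margins, so the two-sided p-value is 1. This is consistent with the article’s conclusion, although a sample of 69 can only rule out large gender effects.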

3.3 Issues Encountered and Analysis

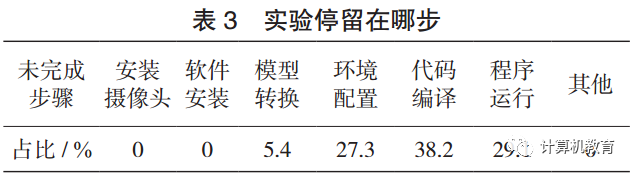

Because many students did not complete the experiment, the reasons for non-completion and the steps at which students stalled were analyzed, so that the next round of teaching can be improved in a targeted way. Table 3 shows where students stalled. Most students were close to finishing but got stuck mainly at code compilation and environment configuration. The main reason is that students did not understand the Atlas 200 runtime environment well. In particular, their grasp of operating-system knowledge was insufficient.

Table 4 analyzes the reasons for non-completion. Students mainly felt that the experiment time was insufficient and that the explanations in the experimental guidebook were not clear enough. The experiment is highly comprehensive and requires students to master related knowledge of operating systems, computer networks, and computer vision, which sets a high bar. To ensure that students can complete it, more experimental teaching time is needed.

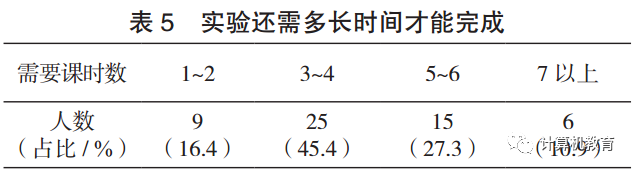

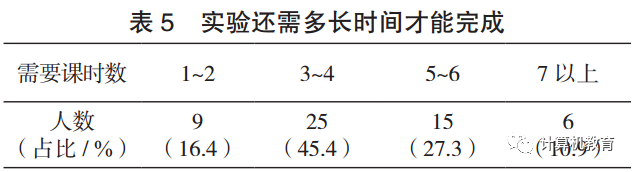

Students who did not complete the experiment generally reported insufficient time, so they were asked how much additional time they would need. As Table 5 shows, most believed they needed at least four more class hours. There are two main ways to address the shortage: increase class hours, or increase students’ extracurricular study time. In the future, the computer vision course should be allotted more class hours, and new teaching models such as MOOCs should be used to increase students’ self-study time. The management of experimental equipment should also change to establish open laboratories, so that students can use the valuable equipment in their spare time.

4 Conclusion

Domestically produced, independently controllable teaching resources and experimental platforms for computer vision courses are lacking. To address this, the Huawei MindSpore platform and Atlas 200 kit were used to develop face recognition and detection experimental projects for the computer vision course. These projects were taught to undergraduates in artificial intelligence and big data at our university. The evaluation of teaching effectiveness shows that the experiment is highly comprehensive and challenging. The completion rate was low, mainly because of insufficient teaching hours. The next round of teaching should therefore:

- increase teaching hours;
- stimulate students’ enthusiasm for extracurricular learning;
- adopt MOOC teaching models;
- change the management of experimental equipment to establish open laboratories where students can use the equipment in their spare time.

References:

[1] Zhuang Yanwen. A Brief Discussion on the Innovation of Computer Vision Course Teaching in Universities [J]. Education Teaching Forum, 2016(20): 118-119.

[2] Zhang Lin, Shen Ying. Comprehensive Experimental Platform for Computer Vision Courses [J]. Computer Education, 2017(5): 136-139.

[3] Wang Yuehuan, Sang Nong, Gao Changxin. Internationalized Teaching Model for Computer Vision Courses [J]. Computer Education, 2014(19): 101-103.

[4] Ye Chen, Ding Yongchao. Development of Innovative Experimental Platforms for Computer Vision [J]. Experimental Technology and Management, 2019, 36(5): 144-149.

[5] Shi Yunyue, Tang Xian, Wei Yu. Exploration of Computer Vision Course Reform for New Engineering Disciplines [J]. Modern Education, 2018, 5(43): 71-72.

[6] Tao Yongze, Guo Tiantai, Hu Jiacheng, et al. Development of Computer Vision Virtual Experiments Based on OpenCV and OpenGL [J]. Laboratory Research and Exploration, 2021, 40(4): 108-114.

[7] Huawei Technologies. Connecting the Atlas 200 DK Developer Board to Ubuntu Server [EB/OL]. [2021-03-18]. https://support.huaweicloud.com/usermanualatlas200dkappc30/atlasug_03_c30_0011.html.

[8] Huawei Technologies. Huawei Atlas 200 DK Developer Kit, Technical White Paper (Model 3000) [EB/OL]. [2021-03-17]. https://e.huawei.com/cn/material/server/2c6bd7fa84c3475690b587dc653f28f1.

Funding Project: Collaborative Education between the Ministry of Education and Huawei Technologies Co., Ltd. — New Engineering Construction Project (W2020029).

First Author Introduction: Zhou Junwei, Male, Associate Professor at Wuhan University of Technology, research interests: computer vision, multimedia coding.
