Author: Beijing Huaxing Wanbang Management Consulting Co., Ltd. Xiangyu Shangrui

As large models continue to evolve, they are driving inference applications out to edge and endpoint devices at scale, and new AI application scenarios and models such as IoT intelligence, embodied intelligence, AI agents, and physical AI are emerging rapidly. This creates new challenges for designers of main control chips in AI-enabled devices. The challenge is sharpest for edge and endpoint devices, which may either host large models directly or use AI to deliver better core functionality to applications. This new direction in product definition forces main chip architects to consider how their chips can keep up with the rapid evolution of large models while using intelligence to enhance traditional applications and enable emerging functions.

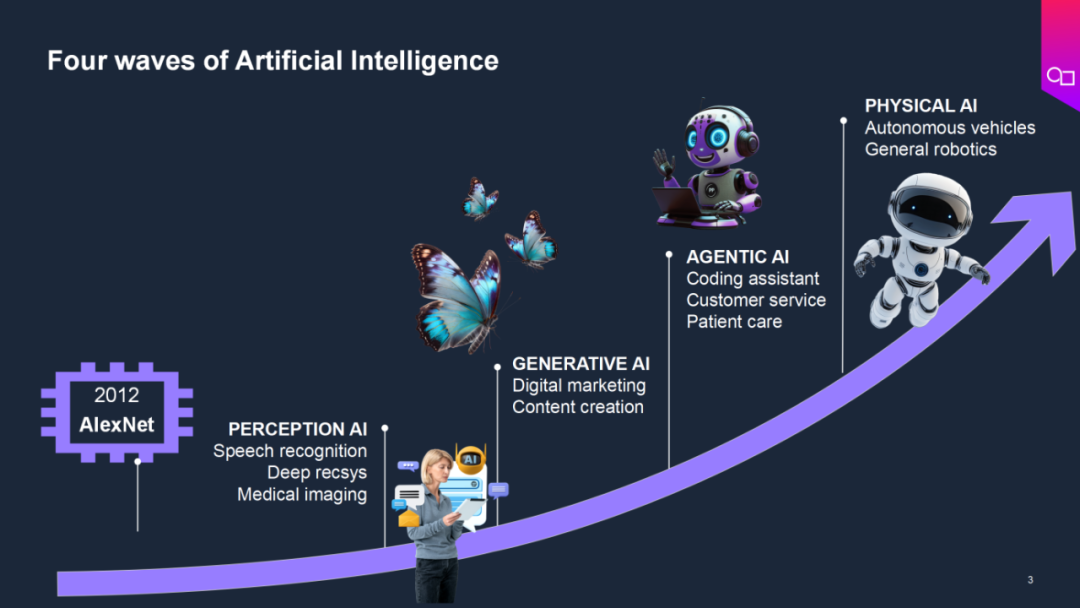

Therefore, beyond pursuing the best possible performance, power, and area (PPA), architects need to proactively choose architectures that are high-performance, highly flexible, upgradable, and developer (ecosystem) friendly. A look back at the history of AI shows its application scenarios expanding continuously, from perceptual AI to generative AI and then to agentic AI and physical AI. In the perceptual AI stage, AI made significant progress in areas such as speech recognition, deep recommendation systems, and medical imaging; generative AI has played an important role in digital marketing and content creation; agentic AI assists with programming, customer service, and patient care; and physical AI is driving the development of autonomous vehicles and general-purpose robots.

As AI technology has developed, new hardware accelerators such as TPUs, NPUs, and DPUs, designed for specific algorithms or models, have emerged alongside traditional computing technologies such as CPUs, GPUs, and FPGAs. These accelerators deliver high efficiency and have been deployed in many scenarios. At the same time, AI keeps penetrating new scenarios and applications, and architectures such as the NPU, which target specific models and scenarios, struggle to cope with changing models and increasingly diverse scenarios. As a result, more flexible traditional architectures such as CPUs and GPUs still hold an important position in computing.

However, advances in AI technology and the emergence of new scenarios are forcing semiconductor intellectual property (IP) providers and chip design companies to change quickly. Whether they build on traditional architectures or are new xPU providers, they must respect the underlying rules of the industry. Huaxing Wanbang also believes that, from the perspective of technology economics and actual business operations, high R&D and marketing expenses are the largest costs most chip design companies face. Flexible, scalable architectures can cover a broader market and achieve longer product lifecycles, making them an important way to amortize these costs and improve profitability.
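The amortization argument can be made concrete with simple arithmetic: per-unit cost is roughly the one-time R&D and marketing spend divided by total units shipped (annual volume times lifecycle in years), plus the per-unit production cost. The Python sketch below is only an illustration under stated assumptions: the function name `per_unit_cost` is hypothetical, and every figure is invented for the example rather than taken from Huaxing Wanbang's analysis. It even assumes the flexible design costs more up front.

```python
# Illustrative sketch only: all figures below are hypothetical assumptions,
# not data from the article.

def per_unit_cost(nre_usd: float, variable_usd: float,
                  annual_units: int, years: int) -> float:
    """Spread one-time R&D + marketing (NRE) over every unit shipped in the
    product's lifecycle, then add the per-unit production cost."""
    total_units = annual_units * years
    if total_units <= 0:
        raise ValueError("annual_units and years must be positive")
    return nre_usd / total_units + variable_usd

# Hypothetical single-model design: lower NRE, narrow market, short lifecycle.
narrow = per_unit_cost(nre_usd=50e6, variable_usd=8.0,
                       annual_units=1_000_000, years=2)

# Hypothetical flexible design: higher NRE, broader market, longer lifecycle.
flexible = per_unit_cost(nre_usd=60e6, variable_usd=8.0,
                         annual_units=2_500_000, years=4)

print(f"narrow design:   ${narrow:.2f} per unit")    # $33.00 per unit
print(f"flexible design: ${flexible:.2f} per unit")  # $14.00 per unit
```

Under these assumed numbers, the flexible design's larger market and longer lifecycle outweigh its higher up-front cost; with different inputs the comparison could of course come out differently.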

Architectural Innovation is Urgent

Huang Yin, head of business development for Imagination Technologies in China, offered this analysis at the AI Technology Innovation Forum at the Munich Electronics Show: “Today's main chip design not only requires chip companies to invest heavily in R&D but also requires them to coordinate the technical roadmaps of their ecosystem partners. Faced with the rapid iteration of AI algorithms, the industry must explore innovative architectures while still valuing foundational computing architectures that have been validated over many years. Take the GPU as an example: its architecture retains the advantage of massive parallel computing, while new-generation designs adapt to different AI workloads through modular scalability (such as configurable shader clusters and elastic memory subsystems). As an IP vendor focused on graphics computing, Imagination sees an ideal AI acceleration architecture as one that balances three dimensions: a fine-grained parallel compute unit design, the configurability needed to adjust to changing algorithms, and continuous compatibility of the development toolchain.”

“Scalability is the direction in which Imagination's GPUs are evolving: alongside powerful rendering capabilities, they integrate AI parallel computing capabilities, providing flexible and efficient computing power for edge AI scenarios. Imagination will therefore help chip designers find the real breakthrough points and build architecture platforms that continuously adapt to evolving models and algorithms and support emerging applications, rather than one-off ‘custom hardware’ for a specific model. This avoids the problem of hardware (processors) perpetually lagging behind algorithms,” Huang Yin added.

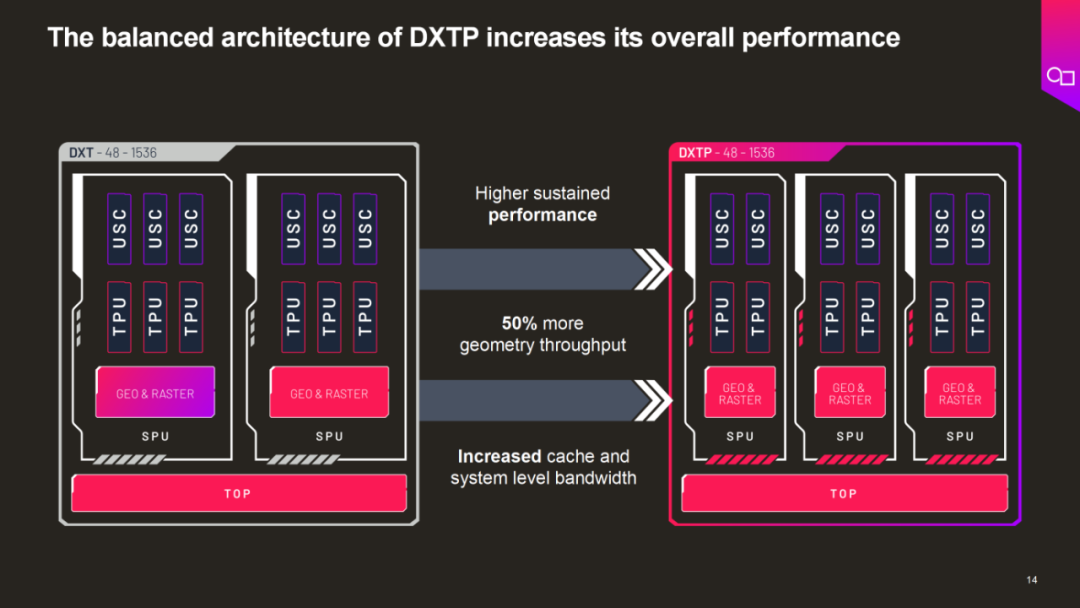

Imagination is helping customers adopt more flexible architectures. For example, the recently released Imagination DXTP GPU IP uses an advanced, balanced architecture with larger caches and higher system-level bandwidth, delivering higher sustained performance and 50% higher geometry throughput. It can handle graphics and compute tasks concurrently, and its power efficiency is 20% higher than its predecessor's, making it an ideal GPU platform for edge AI. The DXTP GPU has already been adopted by well-known global technology companies for AI processing across multiple data types, compute acceleration, and local memory support.

The Three Grounding Points are Key to Success

For chip designers, success requires three essential “grounding points”: model and algorithm grounding, vertical functionality grounding, and open ecosystem grounding. For model and algorithm grounding, Imagination's approach is to build a general-purpose, programmable parallel architecture platform, support customers' hardware-software co-design, and provide adaptation paths through open compilers and inference backends, so that customers can quickly deploy Transformer, Diffusion, and other cutting-edge models on its GPUs. The company's message to customers is that, in an era of constantly evolving algorithms, an architecture's adaptability matters far more than a momentary TOPS figure.

For vertical functionality grounding, Imagination has decades of experience in the mobile, automotive, cloud, and desktop markets and has accumulated many innovative supporting technologies that help customers avoid potential risks and quickly build an advantage in their fields. This is visible in the functional innovations of the company's D-Series GPU IP. For example, the DXT GPU is a new generation of GPU IP that Imagination launched for mobile devices, high-end gaming, and professional graphic design. It provides scalable ray tracing on mobile platforms and includes technologies such as 2D dual-rate texture mapping, which increase processing speed and optimize memory bandwidth usage.

To help desktop and data center customers build high-performance cloud GPU solutions, Imagination launched the DXD GPU IP, which for the first time extends Imagination's API coverage to DirectX, significantly improving compatibility with applications and games on Windows. In addition, Imagination's HyperLane hardware virtualization technology allows multiple operating systems to run securely and independently on a single GPU, improving server utilization, lowering operating costs for cloud gaming, and enabling new operating models for the cloud gaming industry.

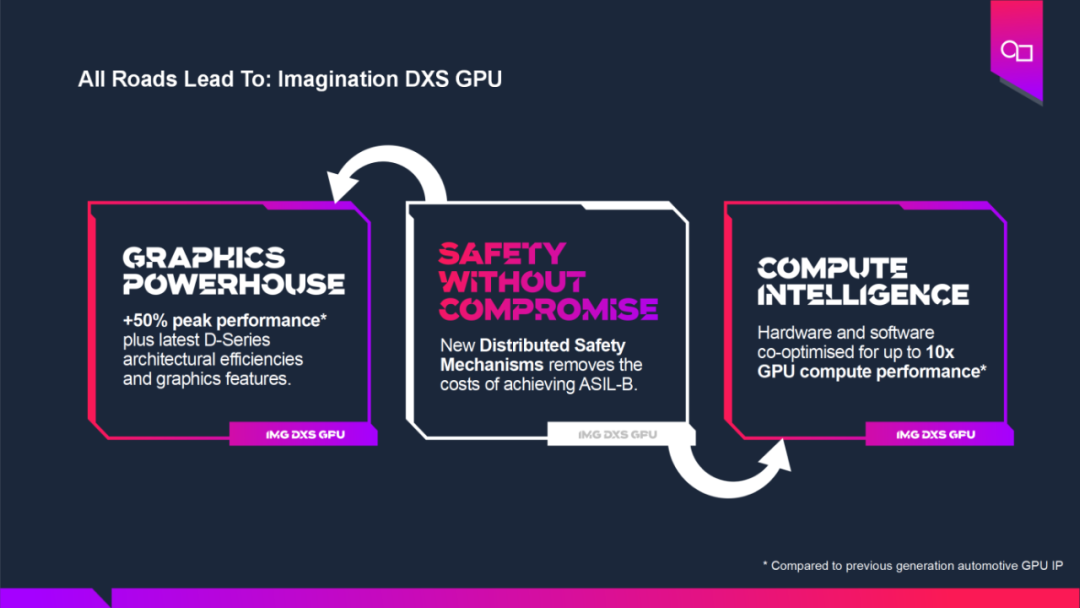

Imagination's dedicated IP for automotive intelligent driving chips is another example of how the company supports chip design companies in vertical functionality grounding. Hard lessons from the field have led to stricter safety regulations, so intelligent driving chip companies must now treat functional safety as seriously as computing power, ecosystem, and lifecycle. To help chip design companies meet global demand for automotive intelligence, Imagination launched the DXS series GPU. Besides providing matching computing power for intelligent cockpits and advanced driver assistance systems (ADAS), the DXS introduces a distributed functional safety mechanism (DSM) designed around the GPU's computing model, which significantly reduces costs for applications with stringent functional safety requirements, such as automotive processors. The DXS has passed ASIL-B certification. This brings major innovation to automotive and industrial electronic systems, which increasingly require GPU graphics and compute capabilities.

Imagination is also investing considerable effort in helping customers achieve ecosystem grounding. Its GPU IP supports open standards such as OpenCL, SYCL, and Vulkan Compute and is compatible with mainstream frameworks such as PyTorch and TensorFlow. For instance, through collaboration with the Android ecosystem, Imagination has optimized support for LiteRT and provides developers with tools and examples for building high-performance AI applications, demonstrating the adaptability of its GPU architecture. This open ecosystem simplifies the integration of new hardware and devices, avoids vendor lock-in, and lets customers deploy easily across platforms. By bringing these resources together, Imagination helps customers co-optimize their designs to improve resource utilization and execution efficiency, which strengthens its leading position in the GPU market and gives enterprises solid support in coping with the rapid iteration of AI algorithms and products.

Conclusion and Outlook

The migration of large models to the edge, continued algorithm innovation, and the rise of edge and endpoint AI have created new opportunities for GPU-based main control chips. Strong demand has already emerged in fields such as AI all-in-one machines, new IoT devices, intelligent security, and autonomous driving, where devices increasingly need high-performance graphics processing and AI inference at the same time. More flexible and scalable architectures therefore let chip design companies cover a broader market with longer product lifecycles, leading to higher potential profitability.

Welcome to follow the “Huaxing Wanbang Technology Economics” WeChat public account.