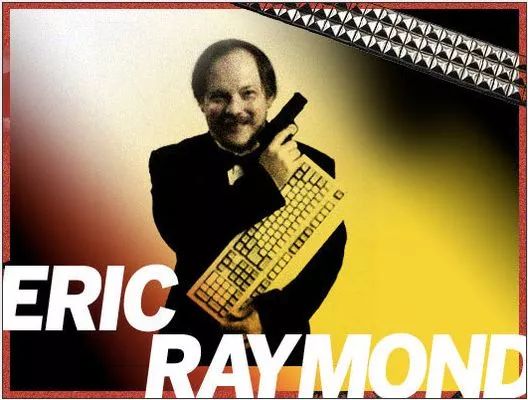

Compiled by: Linux China / Yu Haixin, English: Eric Raymond

https://linux.cn/article-9268-1.html

Author: Eric Raymond, a renowned computer programmer and a leading figure in the open-source software movement.

In 1990, he edited the “New Hacker’s Dictionary”. Since then, he has been fascinated by hacker culture, becoming a historian of the hacker tribe and a scholar of hacker culture, culminating in the writing of “The Cathedral and the Bazaar” in 1996. He began to position himself as an anthropologist: “The work of an anthropologist is to study human behavior and society as a whole, to study the formation of human culture, how culture operates, how it changes over time, and how humans adapt to different cultural environments. What I think about the most is the culture of computer hackers, focusing more on analyzing it from a social perspective rather than their technical skills and programming.”

In May 1997, his work “The Cathedral and the Bazaar” was presented at the Linux Kongress and received unprecedented acclaim. Its greatest achievement was that it led Netscape to release Mozilla as open-source software in 1998, the first time mainstream commercial circles fully recognized his theories. Writing this article opened up a whole new direction for him. On February 3, 1998, at a meeting in Silicon Valley, the term “Open Source” was proposed for the first time; the idea later grew into the open-source movement and led to the founding of the Open Source Initiative. Raymond became one of the main founders of the Open Source Initiative and the leading theorist of the movement.

—【Main Text】—

Recently, I have been contemplating the new programming languages that are challenging the leadership of C in system programming, especially Go and Rust. During this reflection, I realized a shocking fact — I have 35 years of experience with the C language. I write a lot of C code every week, but I can no longer remember the last time I created a new C language project.

If you don’t find this situation shocking, you are probably not a system programmer. I know many programmers work with higher-level languages. However, I have spent most of my time deep inside things like NTPsec, GPSD, and giflib. Proficiency in C has been my specialty for decades. But now, not only am I no longer writing new projects in C, I can’t even remember when I last did so, and… looking back, I don’t think it has happened in this century.

This is a big deal for me because if you ask me what my five core software development skills are, “C language expert” is one of the answers you would most likely hear. It has also set me thinking. What does the future hold for the C language? Is C, after its glory days, heading towards obsolescence like COBOL?

I happened to start programming in the years when C was rapidly developing and pushing assembly language and many other compiled languages out of the mainstream. That transition occurred roughly between 1982 and 1985. Before that, many compiled languages were vying for programmers’ attention, and there was no clear leader among them; after that, the niche languages quietly exited the stage. The mainstream languages (FORTRAN, Pascal, COBOL) were either confined to legacy code, clung to a single domain, or struggled to survive under increasing pressure from C at the edges of their territory.

This situation continued for nearly 30 years. New trends did emerge in application development: Java, Perl, Python, and many other less successful competitors. Initially, I paid little attention to these languages, largely because their runtime overhead was too high for the hardware of the time. On top of that, C’s success created lock-in: to use and interface with the large amount of existing C code, you had to write new code in C (some scripting languages attempted to break this barrier, but only Python had a real chance of success).

Looking back, I should have noticed how significant these languages were when I wrote my first application in a scripting language in 1997. It was a helper tool, written in Perl, for the librarians of a software distribution site called SunSITE.

This application was entirely about handling text input and only needed to cope with human response times (about 0.1 seconds), so writing it in C or another language without dynamic memory allocation and string types would have seemed silly. However, at the time, I merely viewed it as an experiment and never thought that I would almost never type int main(int argc, char **argv) in a new project’s first file again.

I say “almost” mainly because of SNG in 1999. I think that was the last project I started from scratch in C.

Since then, all the C code I have written has been for those old projects that existed in the last century or for maintaining projects like GPSD and NTPsec.

I shouldn’t have used C to write SNG back then, because by that time the rapid pace of Moore’s Law had made hardware cheap enough that the execution efficiency of languages like Perl was no longer an issue. Just three years later, I would undoubtedly have used Python instead of C to write SNG.

Learning Python in 1997 was a watershed moment for me. This language is wonderful, just like the Lisp I used in my early years, and Python has cool libraries! It even has full bindings for the POSIX API! And it has a pretty good object system! Python did not push C out of my toolbox, but I quickly got used to writing in Python whenever I could, and only writing in C when I had to.

(After that, I began to point out in interviews what I call the “Perl lesson”: any new language that fails to provide POSIX bindings semantically equivalent to C’s is doomed to fail. The history of computer science is littered with the skeletons of academic languages whose designers did not grasp this important point.)

Clearly, one of the main advantages of Python for me is its simplicity; when I write Python, I no longer have to worry about memory management or about crashes that end in core dumps, which are incredibly annoying for C programmers to deal with. The less obvious advantage was the timing of my switch. By the late 1990s, when I was writing applications and non-kernel system services, the balance of cost and risk had tilted towards languages with automatic memory management: their higher overhead had become a fair price for eliminating the aforementioned shortcomings of C. Just a few years earlier (even in 1990), the overhead of those languages was still prohibitive; Moore’s Law had not yet had enough time to take effect.

Whenever possible, I would choose Python over C, and whenever I could, I would move existing code from C to Python. This is an effective strategy for reducing engineering complexity. I applied this strategy in GPSD, and for NTPsec, I adopted it even more systematically. This is part of why we were able to reduce the size of the NTP codebase by a quarter.

But today, I am not here to talk about Python. Although I think it stood out from the competition, Python need not have been the reason I stopped using C for new projects by 2000; any of the dynamic languages that were new at the time could have pulled me away from C. In a slightly different timeline, I might well have ended up writing a lot of Java instead.

I write this memoir because I feel I am not an exception; similar developments and transitions have changed the coding habits of many C language veterans at the turn of the century. Like me, they were also unaware that this transition was happening at the time.

After 2000, although I was still using C/C++ for existing projects like GPSD, the game Battle for Wesnoth, and NTPsec, all my new projects were written in Python.

Many of these programs would have been very hard to write in C at all, especially projects like reposurgeon and doclifter. Given C’s limited ontology of data types and its fragile low-level data management, attempting them in C would have been terrifying and doomed to fail.

Even for smaller projects, those that could have been implemented in C, I also wrote in Python because I did not want to waste time and energy dealing with core dumps. This situation continued until the end of last year, when I created my first Rust project and successfully wrote my first project in Go.

As mentioned earlier, although I am describing my personal experience, I believe it reflects the trends of the times. I tend to anticipate new trends rather than merely follow them. In 1998, I was an early user of Python. TIOBE data indicates that I began my first Go project within a few months of Go growing out of a company experimental project and breaking out of niche-language status.

In summary: only now are the first languages that could plausibly challenge C on its traditional turf emerging. My test is simple. A challenger is “plausible” only if you could tell a C veteran like me that writing C is no longer allowed, hand over an automated translator that converts existing C into the new language, ask him to get all his usual work done in it, and the veteran would be happy.

Python and similar languages do not pass this test. Implementing NTPsec in Python, for example, could be a disaster: it would ultimately fail because of excessive runtime overhead and the latency variations caused by garbage collection. If the workload is a single user and only needs to run at human speed, Python is certainly fine, but for programs running at machine speed that is not always the case, especially under high multi-user loads. This is not just my judgment: Go exists primarily because Google, a major supporter of Python, ran into the same performance pain points when implementing some projects in Python.

Go was designed to handle tasks that Python could not manage, most of which were implemented in C. Although there is no fully automated language conversion software, writing system programs in Go is not troublesome for me; I find that I enjoy writing in Go. Many of my C coding skills can still be applied, and I also gain garbage collection and concurrent programming mechanisms, which is a win-win!

(Here is more information about my first experience writing in Go: https://blog.ntpsec.org/2017/02/07/grappling-with-go.html)
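For readers who have not used Go, here is a minimal sketch of what "garbage collection plus concurrency" can look like in practice. It is only an illustration: the function name `wordCounts` and the sample input are hypothetical and are not taken from NTPsec, GPSD, or any other project mentioned in this article.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// wordCounts (a hypothetical helper) counts the words of each line in its
// own goroutine and merges the per-line results into a single map.
// No buffer sizes, no malloc/free: strings and maps are garbage collected.
func wordCounts(lines []string) map[string]int {
	results := make(chan map[string]int)
	var wg sync.WaitGroup

	for _, line := range lines {
		wg.Add(1)
		go func(s string) {
			defer wg.Done()
			counts := make(map[string]int)
			for _, w := range strings.Fields(strings.ToLower(s)) {
				counts[w]++
			}
			results <- counts
		}(line)
	}

	// Close the channel once every worker has sent its result.
	go func() {
		wg.Wait()
		close(results)
	}()

	// Merge the partial counts as they arrive.
	total := make(map[string]int)
	for counts := range results {
		for w, n := range counts {
			total[w] += n
		}
	}
	return total
}

func main() {
	total := wordCounts([]string{"C is old", "Go is new", "C is fast"})
	fmt.Println(total["c"], total["is"], total["go"]) // prints: 2 3 1
}
```

The equivalent C program would need hand-written string splitting, a hash table, explicit allocation and freeing, and a threading library, which is roughly the class of work the paragraph above describes being relieved of.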

I initially wanted to consider Rust as an example of “C language becoming obsolete”, but after learning and trying to program in this language, I feel that it is not yet ready. Perhaps in five years, it will become a competitor to C.

As 2017 comes to a close, we have one relatively mature language that resembles C and can handle most of C’s work scenarios (I will describe this more precisely below), and a younger language that may compete successfully in a few years.

This is significant. If you do not look back at history in the long term, you may not see the greatness of this matter. For thirty years — this is almost my entire career as a programmer — we have not seen a successor to the C language, nor can we foresee what system programming will look like after C. And now, suddenly, we have two different prospects for the post-C era…

…The other prospect is left to us by a language that my friend is developing, which he calls “Cx”. This language makes minimal changes to C to support type safety; his project’s goal is to create a program that can modify classical C into a new language with minimal human involvement. I will not mention my friend’s name to avoid putting too much pressure on him to make unrealistic promises. However, his implementation method is indeed interesting, and I will try to fund him.

Now, we see three different possible paths for implementing system programming as alternatives to C. Just two years ago, we were still in the dark. I repeat: this is significant.

Am I saying that the C language is going to become extinct? Not at all; in the foreseeable future, C will still be the mainstream language for operating system kernel programming and device firmware programming, where the old rules of squeezing hardware performance still apply, even if it may not be as safe.

The areas that C’s successors will break into are the ones I mentioned earlier and often work in myself: programs such as GPSD and NTPsec, system services, and processes that were written in C for historical reasons. DNS servers and mail transfer agents belong here too: systems that need to run at machine speed rather than human speed.

Now we can catch a glimpse of the future of the post-C era, where the code in the aforementioned areas can be implemented in alternatives to C that have strong memory safety features. Go, Rust, or Cx, whichever it turns out to be, could weaken C’s presence. For example, if I were to re-implement NTP from scratch today, I would use Go without hesitation.
