Machine Heart ReportEditor: Xiao Zhou

Karpathy: for fun.

A few days ago, Andrej Karpathy, former head of Tesla Autopilot and OpenAI scientist, released a project called “llm.c” that implements GPT-2 training in just 1000 lines of code on CPU/fp32.

llm.c aims to simplify large model (LM) training — using pure C language / CUDA, without the need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) requires only about 1000 lines of clean code in a single file, which can be compiled and run immediately, and it can fully compete with the PyTorch reference implementation.

Project link: https://github.com/karpathy/llm.c

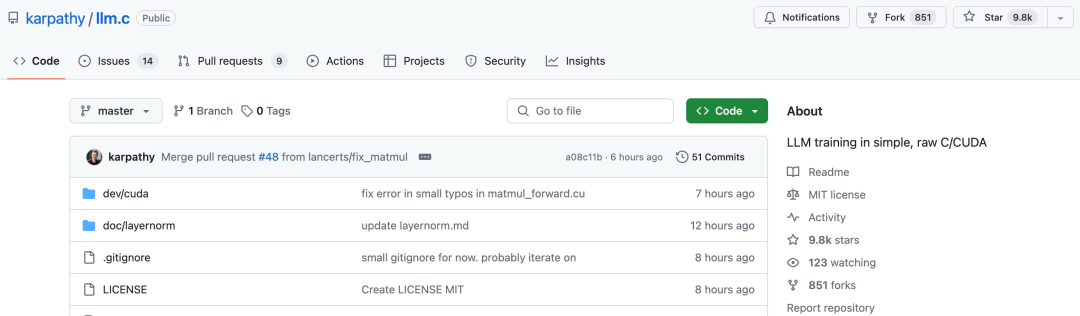

The project received 2000 stars in less than seven hours and is now approaching ten thousand stars. Many netizens exclaimed how impressive it is: “Even with pointer PTSD, I can feel the beauty of this code.”

However, the llm.c project has received not only praise but also many questions. For example, one netizen directly asked: “Can you describe what this project does and what problem it solves?”

In response, Karpathy addressed the netizens’ questions today, elaborating on the significance of the llm.c project and its pros and cons.

Machine Heart has compiled and organized Karpathy’s original narrative without changing its meaning. Let’s see what Karpathy said:

Training large language models (LLMs), such as ChatGPT, involves a lot of code and high complexity. For example, typical LLM training may use the PyTorch deep learning library. PyTorch itself is quite complex because it implements:

-

A very general tensor abstraction (a way to arrange and operate on arrays used to store neural network parameters and activations);

-

A very general backpropagation Autograd engine (an algorithm for training neural network parameters);

-

A large number of deep learning layers used in neural networks.

The PyTorch project has 3,327,184 lines of code across 11,449 files. Most importantly, PyTorch is written in Python, which is itself a very high-level language — it requires running a Python interpreter to convert training code into low-level computer instructions. For example, the cPython project that performs this conversion contains 2,437,955 lines of code across 4,306 files.

The llm.c project aims to remove all this complexity and simplify LLM training to its most basic elements, directly communicating with the computer using a very low-level language (C language) without any other library dependencies, with the only abstraction being the assembly code itself.

Surprisingly, training an LLM like GPT-2 actually only requires about 1000 lines of C code in a single file. I achieved this compression by directly implementing the neural network training algorithm for GPT-2 in C. This is actually quite difficult because you need to have a detailed understanding of the training algorithm, be able to derive the forward pass and backward pass for all layers’ backpropagation, and be very careful in implementing all array index calculations since there is no available PyTorch tensor abstraction. But once this is done, and verified against PyTorch for correctness, you end up with something very simple, small, and elegant.

So, why don’t people always do it this way?

First: this sacrifices a lot of flexibility. If you want to modify the neural network, in PyTorch you might only need to change one line of code. In llm.c, changes are likely to involve more code, may be more difficult, and require more expertise. For example, if a new operation is involved, you might need to do some calculus and write out its forward pass and backward pass for backpropagation, ensuring it is mathematically correct.

Second: it sacrifices speed, at least initially. There is no free lunch — one should not expect that just 1000 lines of code can achieve state-of-the-art speed. PyTorch does a lot of work in the background to ensure that neural networks are very efficient. Not only are all tensor operations carefully calling the most efficient CUDA kernels, but there are also tools like torch.compile to further analyze and optimize neural networks for effective execution on computers.

Now, in principle, llm.c should be able to call all the same kernels and run directly. But this requires more work, just like the first point; if you change anything in the neural network or the computer it is running on, you may have to call different kernels with different parameters and may need to make more manual changes.

Overall, llm.c is a direct implementation for training GPT-2. The result of this implementation is surprisingly concise. However, llm.c does not support other neural networks, only GPT-2, and if you want to change anything about the neural network, expertise is required. Fortunately, all state-of-the-art LLMs are actually not very different from GPT-2. Moreover, llm.c will require additional adjustments and refinements, but in principle, I believe it should be able to almost match or even surpass PyTorch because we have eliminated all overhead.

Finally, why did I do this work? Because it is fun. It is also educational because it only requires those 1000 lines of very simple C code. It is just some arrays and performing simple mathematical operations on their elements, such as + and *. For ongoing work, it may become practically useful.

Reference link: https://twitter.com/karpathy/status/1778153659106533806

© THE END

For reprints, please contact this public account for authorization

Submissions or inquiries: [email protected]