In computer vision applications, “recognition” is only a basic building block. People running deep learning inference often ask, “What use are the recognized categories?”

Determining which categories of object appear in each frame, and where they are in the image, is only the first step of an application. Without “object tracking”, it is difficult to provide even basic video analysis.

The standard OpenCV framework includes 8 mainstream object tracking algorithms, which interested readers can look up and study online.

The basic logic of these algorithms is to compare the “category” and “position” of objects in adjacent video frames, which is quite resource-intensive. When video analysis software enables object tracking, its recognition performance inevitably drops; keep this in mind from the start.
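To make the idea of comparing “category” and “position” across adjacent frames concrete, here is a minimal Python sketch of overlap-based matching. It is a simplified illustration only, not the implementation used by DeepStream or OpenCV; the function names, the greedy matching strategy, and the 0.3 threshold are all assumptions chosen for the example.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_frames(prev: Dict[int, Tuple[str, Box]],
                 curr: List[Tuple[str, Box]],
                 threshold: float = 0.3) -> Dict[int, Tuple[str, Box]]:
    """Carry object numbers from the previous frame over to the current one.

    Each detection inherits the number of the not-yet-claimed previous object
    of the same category with the highest IoU above `threshold` (an assumed
    value); otherwise it receives a new number.
    """
    next_id = max(prev, default=-1) + 1
    used = set()
    result = {}
    for label, box in curr:
        best_id, best_iou = None, threshold
        for obj_id, (prev_label, prev_box) in prev.items():
            if obj_id in used or prev_label != label:
                continue  # already claimed, or a different category
            score = iou(box, prev_box)
            if score > best_iou:
                best_id, best_iou = obj_id, score
        if best_id is None:  # nothing overlapped enough: treat as a new object
            best_id = next_id
            next_id += 1
        used.add(best_id)
        result[best_id] = (label, box)
    return result
```

Even this toy version compares every detection with every object from the previous frame, which hints at why tracking adds a noticeable cost on top of recognition.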

DeepStream is positioned for “video analysis” applications, so “object tracking” is one of its most basic functionalities.

In the previously used myNano.txt configuration file, you only need to adjust one setting to enable or disable this tracking function, which is very simple.

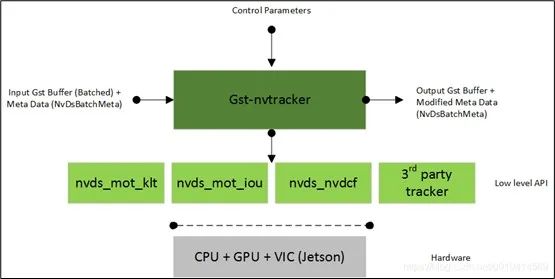

DeepStream supports three object tracking algorithms: IOU, KLT, and NVDCF (as shown in the figure below). IOU is the fastest, reaching approximately 200 FPS on the Jetson Nano 2GB. NVDCF is the most accurate, but currently reaches only about 56 FPS. KLT strikes a good balance between speed and accuracy at around 160 FPS overall, so it is usually the one chosen for demonstrations.

We will not go into the details of the algorithms here; please consult the relevant technical documents. Instead, we go straight to the experiment, again using the myNano.txt configuration file from a previous article. If you are unsure about that file, you can copy source8_1080p_dec_infer-resnet_tracker_tiled_display_fp16_nano.txt and modify the parameters inside to try out DeepStream object tracking.

01

Switch for Object Tracking Functionality

At the bottom of myNano.txt you can see the [tracker] setting group. Its “enable=1” parameter is the switch for object tracking: 1 turns it on and 0 turns it off.
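If you switch tracking on and off often, the edit can be scripted. The following is a minimal sketch in Python that rewrites only the “enable” line inside the [tracker] group and leaves other groups untouched. The helper name `set_tracker_enabled` is hypothetical, and the code assumes the plain “key=value” layout with “[group]” headers described in this article.

```python
def set_tracker_enabled(config_text: str, enabled: bool) -> str:
    """Return the config text with enable=0/1 rewritten inside [tracker] only."""
    out = []
    section = None
    for line in config_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            section = stripped[1:-1]  # remember which group we are in
        elif section == "tracker" and stripped.split("=")[0].strip() == "enable":
            line = f"enable={int(enabled)}"  # commented "#enable=..." lines do not match
        out.append(line)
    return "\n".join(out) + "\n"
```

A typical use would be to read myNano.txt, pass its contents through this function, and write the result back before launching the application.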

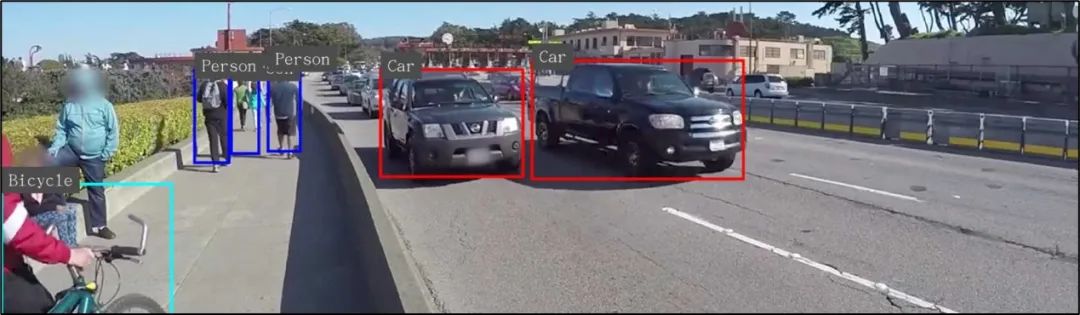

Now, let’s run once with tracking enabled. As shown in the figure below, each recognized object has a category and a bounding box, with a number next to it. This number stays with the object from frame to frame, which is what makes up the “tracking” functionality.

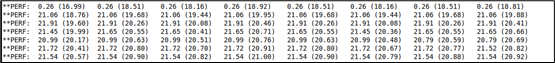

The recognition performance at this time is shown in the figure below, with a total performance (sum of 8 numbers) of approximately 160FPS.

If we change the [tracker] setting to “enable=0” and execute again, what will the result be? In the figure below, we can recognize the categories and bounding box positions of the objects, but there are no numbers.

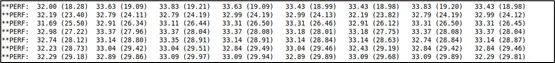

After disabling the tracking function, the recognition performance is shown in the figure below, with a total recognition performance of about 250FPS.
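To put the two totals in perspective, the short Python calculation below converts them to per-stream figures and to a relative drop. It only uses the numbers quoted in this article (8 streams, about 160 FPS with tracking, about 250 FPS without); the variable names are made up for the example.

```python
STREAMS = 8
fps_tracking_on = 160.0   # total FPS with enable=1, as quoted above
fps_tracking_off = 250.0  # total FPS with enable=0, as quoted above

per_stream_on = fps_tracking_on / STREAMS       # 20.0 FPS per stream
per_stream_off = fps_tracking_off / STREAMS     # 31.25 FPS per stream
drop = 1 - fps_tracking_on / fps_tracking_off   # 0.36, i.e. about 36% lower

print(f"per stream: {per_stream_on:.2f} vs {per_stream_off:.2f} FPS, drop {drop:.0%}")
```

In other words, with these figures, enabling tracking costs roughly a third of the total throughput.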

02

Switching Trackers

As mentioned earlier, DeepStream 5.0 currently supports three types of trackers. So how do we choose? Similarly, under the [tracker] parameter group, there are three lines of parameters:

    #ll-lib-file=/opt/nvidia/deepstream/deepstream-5.0/lib/libnvds_mot_iou.so
    #ll-lib-file=/opt/nvidia/deepstream/deepstream-5.0/lib/libnvds_nvdcf.so
    ll-lib-file=/opt/nvidia/deepstream/deepstream-5.0/lib/libnvds_mot_klt.so

The lines prefixed with a “#” are in a disabled state. Please first set the [tracker] back to “enable=1” to enable it. Next, you can adjust the position of the “#” to switch between the tracker options and test the differences between these three trackers, including recognition performance and tracking capability.
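Moving the “#” by hand is easy to get wrong when you switch back and forth, so here is a small Python sketch that does it for you. The helper name `select_tracker` is hypothetical; it assumes the three “ll-lib-file” lines look exactly like the ones above and that the library file names contain “iou”, “nvdcf”, or “klt”.

```python
def select_tracker(config_text: str, name: str) -> str:
    """Uncomment the ll-lib-file line whose library name contains `name`
    and comment out every other ll-lib-file line."""
    out = []
    for line in config_text.splitlines():
        bare = line.lstrip("# \t")  # the line without any leading comment marker
        if bare.startswith("ll-lib-file="):
            library = bare.rsplit("/", 1)[-1]  # e.g. libnvds_mot_klt.so
            line = bare if name in library else "#" + bare
        out.append(line)
    return "\n".join(out) + "\n"
```

For example, `select_tracker(text, "iou")` leaves only the IOU line active. The sketch does not check which group a line belongs to, since “ll-lib-file” appears only under [tracker] in the lines shown here.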

This part must be experienced directly in the video, so we will not provide screenshots. The test results show that the IOU tracker has the best performance, reaching around 200FPS, but the numbering of the same object is not very stable. The NVDCF tracker has the most stable numbering, but its performance is only about 1/4 of IOU, capable of handling a maximum of 2 streams of real-time analysis.

The KLT algorithm can achieve an overall performance of 160FPS and can support real-time recognition of up to 8 streams, with tracking capability significantly better than IOU. However, this algorithm has a high CPU usage, which is its main drawback. The choice depends on the actual scene and the resources of the computing device.

03

Obtaining Tracking Data

The purpose of enabling the object tracking function is not just to see it on the display, but to use this data for more valuable applications. But where do we obtain this data? Typically, it needs to be accessed through Python or C++ from the interfaces provided by DeepStream.

Here, we provide a method to obtain object tracking data without needing to understand the DeepStream interface. You just need to add a line in the [application] parameter group in myNano.txt, specifying a path with “kitti-track-output-dir=<PATH>”. Assuming we want to store the data in the path “/home/nvidia/track”, you can add a line in myNano.txt:

    [application]
    …
    …
    kitti-track-output-dir=/home/nvidia/track

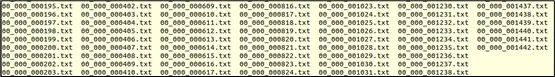

After executing “deepstream-app -c myNano.txt”, you will see many files generated in the /home/nvidia/track directory, as shown in the screenshot below:

Each file stores the tracking results for “one frame”. For example, if the test video sample_1080p_h264.mp4 is 48 seconds long and has 30 frames per second, it will generate 1440 files.

The first 6 characters, “00_000”, represent the video source number, starting from “0”. If there are 4 video sources, the numbering runs from “00_000” to “00_003”. The last 6 digits are the frame serial number; for this test video, the 1440 frames are numbered “000000.txt” to “001439.txt”. The two parts are combined to form the filename.
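The naming scheme can be decoded with a few lines of Python. This is a sketch under one assumption not stated above: that the two parts are joined with an underscore, giving names such as “00_003_000120.txt”. Check this against the files in your own output directory. The function name `parse_track_filename` is hypothetical.

```python
import re
from typing import Tuple

# Assumed layout: "00_NNN" source prefix, underscore, 6-digit serial number, ".txt".
_NAME = re.compile(r"^(\d{2})_(\d{3})_(\d{6})\.txt$")


def parse_track_filename(name: str) -> Tuple[int, int]:
    """Return (source_number, frame_number) for a tracking file name."""
    m = _NAME.match(name)
    if m is None:
        raise ValueError(f"unexpected file name: {name}")
    return int(m.group(2)), int(m.group(3))
```

With this, `parse_track_filename("00_003_000120.txt")` returns `(3, 120)`, that is, frame 120 of the fourth video source.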

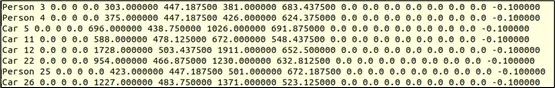

Finally, let’s take a look at the content of the files, as shown below:

This is KITTI format data. The first column is the category of the object, the second column is the “tracking number” of the object, and the meaning of the subsequent data can be referenced in the KITTI format definition.
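As a starting point for parsing, the Python sketch below splits one line into the two columns described above and keeps everything else as raw numbers. It assumes the columns are separated by whitespace and that the category is a single word; the sample line in the comment is made up for illustration and is not taken from a real run. The remaining fields are deliberately left uninterpreted, so look up their meaning in the KITTI format definition before relying on them.

```python
from typing import List, NamedTuple


class TrackedObject(NamedTuple):
    label: str         # first column: object category
    track_id: int      # second column: tracking number
    rest: List[float]  # remaining KITTI fields, not interpreted here


def parse_track_line(line: str) -> TrackedObject:
    """Parse one line of a tracking file (whitespace-separated columns assumed)."""
    parts = line.split()
    if len(parts) < 2:
        raise ValueError(f"not a tracking line: {line!r}")
    return TrackedObject(parts[0], int(parts[1]), [float(x) for x in parts[2:]])


# Hypothetical line, for illustration only:
obj = parse_track_line("Car 12 0.0 0 0.0 100.0 200.0 180.0 260.0")
print(obj.label, obj.track_id)
```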

Now we can sequentially read these tracking files or send these files back to the control center for file parsing and information extraction. Isn’t that convenient? I believe this content will be very helpful for development.
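Reading the files in sequence might look like the following Python sketch, which records in which files each tracked object appears. It relies only on the two columns described above (category, then tracking number) and on the file names sorting in frame order; the function name `collect_tracks` is hypothetical. With several video sources you would probably want to group the files by their source prefix first, since this sketch makes no assumption about tracking numbers being unique across sources.

```python
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Tuple


def collect_tracks(track_dir: str) -> Dict[Tuple[str, int], List[str]]:
    """Map (category, tracking number) to the sorted list of files it appears in."""
    tracks = defaultdict(list)
    for path in sorted(Path(track_dir).glob("*.txt")):
        for line in path.read_text().splitlines():
            parts = line.split()
            if len(parts) >= 2:  # skip empty or malformed lines
                tracks[(parts[0], int(parts[1]))].append(path.name)
    return dict(tracks)
```

Calling `collect_tracks("/home/nvidia/track")` after a run gives, for each object, the list of frame files it was tracked through, which is a convenient basis for counting objects or measuring how long each one stayed in view.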
