1. Weight Initialization — The “Morning Star” of Neural Networks

In the vast universe of neural networks, weight initialization serves as the morning star, guiding the direction of model training. It is not merely about randomly assigning initial values to weights; rather, it is a crucial factor that determines whether the model can learn efficiently and converge accurately. Proper weight initialization allows the neural network to embark on a stable path during the early stages of training, avoiding the pitfalls of gradient vanishing or exploding, thus accelerating convergence to an ideal state. Conversely, improper initialization may cause the model to struggle in vain during prolonged training, potentially never reaching the optimal solution. Especially when exploring neural networks in a C++ environment, a deep understanding and precise application of weight initialization methods is the key to unlocking efficient model construction, enabling us to navigate accurately through the complex sea of data towards the shores of success.

2. Fundamental Techniques for Neural Network Weight Initialization in C++

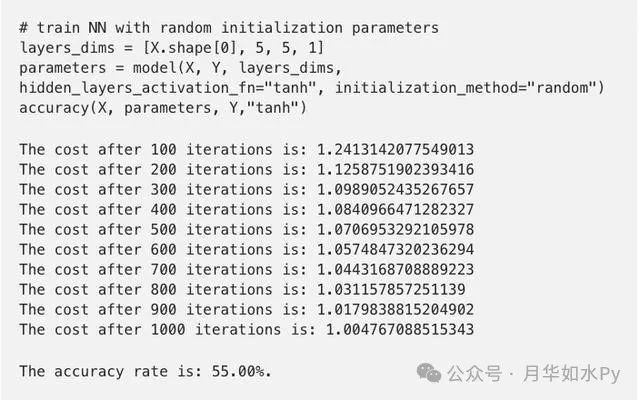

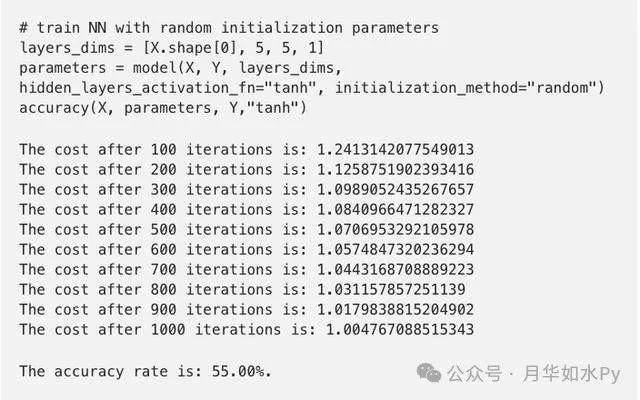

(1) Random Initialization: Exploration in Chaos

Random initialization is like sowing seeds of infinite possibilities in chaos. The core principle is to randomly draw values from a specific probability distribution (such as a normal distribution with a mean of 0 and a small standard deviation, or a uniform distribution over a given interval) to assign to the weights of the neural network. For a simple fully connected neural network, we can initialize the weight matrix weights in C++ code using tools from the <random> header:

#include <random>

#include <vector>

#include <iostream>

// Assume this is a simple fully connected layer class

class FullyConnectedLayer {

public:

std::vector<std::vector

// Other member variables and function declarations

// Constructor for weight initialization

FullyConnectedLayer(int input_size, int output_size) {

std::random_device rd;

std::mt19937 gen(rd());

std::normal_distribution

weights.resize(output_size, std::vector

for (int i = 0; i < output_size; ++i) {

for (int j = 0; j < input_size; ++j) {

weights[i][j] = dis(gen);

}

}

}

};

This operation ensures that each neuron’s weight starts with a different random value, effectively giving them unique “personalities” and breaking symmetry. During forward propagation in the neural network, different neurons process input data through varied paths, allowing them to capture more diverse feature information, thus laying a solid foundation for the model to learn complex patterns.

However, random initialization has its “soft spot.” If the distribution range of random values is not properly controlled, it can easily lead to gradient issues. When the initial weight values are too large, such as when drawn from a normal distribution with a large standard deviation, using activation functions like Sigmoid or Tanh can cause the input signal, after being weighted, to fall into the saturation region of the activation function, resulting in gradients approaching 0, leading to gradient vanishing, making model training feel like wading through mud and difficult to progress; conversely, if the initial values are too small, the signal weakens progressively during layer-to-layer transmission, also facing the gradient vanishing dilemma, making it hard for the model to learn effectively.

(2) Fixed Value Initialization: A Steady Approach

Fixed value initialization is akin to following an ancient maritime chart, selecting a specific constant value for the weights. The operation is straightforward; in C++ code, we can set all weights to the same value like this:

class SomeLayer {

public:

std::vector<std::vector

// Constructor for fixed value weight initialization

SomeLayer(int input_size, int output_size) {

weights.resize(output_size, std::vector

}

};

This method has its utility in certain specific scenarios. For instance, when we are conducting preliminary debugging of the model architecture and wish to quickly verify the functionality of parts other than the weights, fixed value initialization can provide a stable initial state, allowing us to focus on testing other key aspects. Additionally, in some simple tasks where precision is not critical and the data distribution is relatively uniform, fixed value initialization can serve as a convenient starting point.

However, its limitations are also apparent. Since all neurons have the same weight, they treat the input data with identical “attitudes” during forward propagation, yielding indistinguishable output results, causing a loss of diversity among neurons and preventing the extraction of complex features from the data. This is akin to using the same key to unlock a myriad of locks, making it difficult to meet the demands of complex tasks, significantly weakening the model’s learning and expressive capabilities.

3. Advanced Techniques: Common Intelligent Initialization Strategies

(1) Xavier Initialization: The Art of Balance

Xavier initialization is like an experienced conductor, ensuring harmonious and orderly signal transmission within the neural network. It is born from exquisite theoretical derivation, with the core idea being to adaptively calculate the variance of weight initialization based on the number of input and output neurons in each layer.

Assuming that in a certain layer of the neural network, the number of input neurons is n_in and the number of output neurons is n_out. The goal of Xavier initialization is to keep the variance of the input signal as stable as possible after it has been weighted and passed through the activation function, without experiencing drastic changes as the depth of the network increases. During derivation, considering that the weights w and inputs x are typically initialized to a distribution with a mean of 0 and are independent, the variance of the weighted input z for a certain neuron during forward propagation can be expressed as Var(z) = Var(w) * Var(x). To stabilize the signal, the ideal condition is Var(z) = 1, but considering that the signal must also remain stable during backpropagation, a comprehensive balance leads to the variance calculation formula for Xavier initialization being Var(w) = 2 / (n_in + n_out).

When using a normal distribution for initialization, weights w can be sampled from N(0, Var(w)); if using a uniform distribution, the sampling interval is [-limit, limit]. In C++ code, using the Eigen library, the code snippet for implementing Xavier uniform distribution initialization is as follows:

#include <Eigen/Dense>

#include <random>

#include <iostream>

Eigen::MatrixXd xavierUniformInitialize(int rows, int cols) {

std::random_device rd;

std::mt19937 gen(rd());

double limit = std::sqrt(6.0 / (rows + cols));

std::uniform_real_distribution

Eigen::MatrixXd matrix(rows, cols);

for (int i = 0; i < rows; ++i) {

for (int j = 0; j < cols; ++j) {

matrix(i, j) = dis(gen);

}

}

return matrix;

}

This initialization ensures that the range of weight values is just right, allowing signals to traverse smoothly between layers during forward propagation, avoiding saturation of the activation function due to excessively large weights, which can lead to gradient vanishing; and also preventing the signal from becoming too weak due to excessively small weights, thus eliminating the risk of gradient explosion, laying a solid foundation for efficient model training.

(2) He Initialization: The “Best Partner” for ReLU Activation Functions

He initialization serves as a “dedicated guard” tailored for ReLU activation functions, deeply adapting to their unique output characteristics. The ReLU function outputs linearly when the input is greater than 0 and outputs 0 when the input is less than or equal to 0, which results in the variance of neuron outputs being different from traditional activation functions (like Sigmoid and Tanh).

Based on this, He initialization calculates the weight variance considering only the number of input neurons n_in, with its variance formula being Var(w) = 2 / n_in. This is due to the “half-linear” nature of the ReLU activation function, which means the variance of the output signal is primarily influenced by the number of input connections. Compared to Xavier initialization, it is precisely optimized for ReLU. In the scenario of normal distribution initialization, weights w are sampled from N(0, std_dev). Below is an example code for implementing He initialization in C++ using the Eigen library:

#include <Eigen/Dense>

#include <random>

#include <iostream>

Eigen::MatrixXd heInitialize(int rows, int cols) {

std::random_device rd;

std::mt19937 gen(rd());

double std_dev = std::sqrt(2.0 / rows);

std::normal_distribution

Eigen::MatrixXd matrix(rows, cols);

for (int i = 0; i < rows; ++i) {

for (int j = 0; j < cols; ++j) {

matrix(i, j) = dis(gen);

}

}

return matrix;

}

When a neural network extensively uses ReLU activation functions, He initialization shines. It cleverly maintains a reasonable distribution of activation values, effectively avoiding the gradient vanishing problem, allowing gradients to flow smoothly during backpropagation, enabling the model to quickly and robustly learn complex patterns within the data. It excels in various fields such as image recognition and speech processing, helping neural networks tackle complex tasks and uncover the deep value of data.