Introduction: The Rise of AI and Mixed Precision Computation

In recent years, AI has become one of the hottest topics in the tech field. From intelligent voice assistants to image recognition software, and from autonomous vehicles to precise medical diagnostics, AI is everywhere, profoundly changing how we live and work. Behind this boom is the rapid development of neural network models, whose complexity and scale keep increasing, creating unprecedented demand for computational resources.

To train more accurate and powerful neural network models, researchers and engineers need to invest massive computational resources. Take the GPT series as an example: the parameter count grew from 117 million in GPT-1 to 175 billion in GPT-3 (OpenAI has not published GPT-4's parameter count), and the compute required for training rose steeply alongside it. This enormous demand has made traditional computing methods increasingly inadequate and has become a bottleneck for AI development.

In this context, mixed precision computation has emerged as a magical key, opening a new door to breaking through computational bottlenecks and accelerating the training of neural network models. Today, we will delve into mixed precision computation of neural network models in C++ and explore its charm.

1. Understanding Neural Network Models in C++

In the vast realm of AI, C++ is a powerful “veteran.” With its outstanding execution efficiency, excellent low-level operation capabilities, and fine control over hardware resources, C++ has firmly established itself in many AI applications that demand high performance, such as real-time decision-making for intelligent NPCs in game development, precise path planning in robotic navigation systems, and the rapid processing of changing road conditions in autonomous driving technology—all of which rely on the strong support of C++.

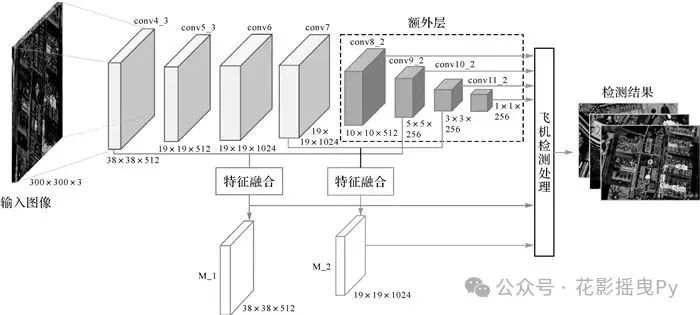

So what does a neural network model look like? In simple terms, it is a highly complex network of interconnected artificial neurons, loosely inspired by how the human brain processes information. Structurally, one common family is the feedforward neural network, in which information flows in one direction, from the input layer through the hidden layers to the output layer, without ever looping back. A typical example is the convolutional neural network (CNN) used for image recognition, which can quickly extract image features. Another family is the feedback (recurrent) neural network, whose neurons have a kind of “memory” that lets information circulate. Recurrent neural networks (RNNs) and their variant, long short-term memory networks (LSTMs), belong to this family; they excel at sequential data such as text and speech, capturing the relationships between earlier and later parts of a sequence.
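To make the one-directional flow of a feedforward network concrete, here is a minimal C++ sketch of a forward pass through fully connected layers. The `DenseLayer` struct, the `forward` function, and the use of ReLU on hidden layers are illustrative assumptions, not taken from any particular library. A CNN would replace the dense loops with convolutions, but the signal would still move strictly from input to output.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// One fully connected layer: out[j] = activation(sum_i weights[j][i] * in[i] + bias[j]).
struct DenseLayer {
    std::vector<std::vector<float>> weights;  // weights[output][input]
    std::vector<float> bias;                  // one bias per output neuron
};

// ReLU activation: max(0, x).
inline float relu(float x) { return x > 0.0f ? x : 0.0f; }

// Forward pass: activations flow from the input layer through each layer in
// order to the output; nothing is ever fed back to an earlier layer.
std::vector<float> forward(const std::vector<DenseLayer>& layers,
                           std::vector<float> activations) {
    for (std::size_t l = 0; l < layers.size(); ++l) {
        const DenseLayer& layer = layers[l];
        std::vector<float> next(layer.bias.size());
        for (std::size_t j = 0; j < next.size(); ++j) {
            float sum = layer.bias[j];
            for (std::size_t i = 0; i < activations.size(); ++i) {
                sum += layer.weights[j][i] * activations[i];
            }
            // Hidden layers use ReLU; the final (output) layer stays linear.
            next[j] = (l + 1 < layers.size()) ? relu(sum) : sum;
        }
        activations = std::move(next);
    }
    return activations;
}
```

For example, with a hidden layer whose weights are `{{1, -1}, {0.5, 0.5}}` (zero bias) and an output layer with weights `{{2, 2}}` and bias 0.5, the input `{2, 1}` produces the single output 5.5.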

When training neural network models, traditional approaches mostly use single precision (32-bit floating point, FP32) to represent the model’s parameters, activations, and gradients. This precision keeps computations accurate, but as models grow and data volumes explode, its drawbacks become more pronounced. On the one hand, storing and moving so much 32-bit data puts heavy pressure on memory capacity and bandwidth, like a narrow road trying to carry a massive influx of vehicles: “traffic jams” slow down data transfer and therefore training. On the other hand, the computation itself consumes enormous resources, like a “power-hungry monster,” driving up energy costs and putting large-scale training out of reach for ordinary computing devices, which limits both the scale and the efficiency of model training.

2. The Principles of Mixed Precision Computation Revealed

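Before diving into the fascinating world of mixed precision computation, we first need to understand the floating-point types involved: single precision (FP32), double precision (FP64), and half precision (FP16). On IEEE 754 platforms, which covers virtually all mainstream compilers and hardware, C++’s `float` and `double` are FP32 and FP64. Standard C++ had no half-precision type until C++23 added the optional `std::float16_t` in `<stdfloat>`, so in practice FP16 usually comes from compiler extensions such as `_Float16` or from libraries such as CUDA’s `__half`.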

FP32 is our “old friend,” widely used in traditional neural network training. It uses 32 bits per number (1 sign bit, 8 exponent bits, and 23 mantissa bits), giving about 7 significant decimal digits and a wide numerical range that meets most conventional computational needs. FP64 is the high-precision “representative”: its 64 bits (1 sign bit, 11 exponent bits, and 52 mantissa bits) provide about 15 to 16 significant decimal digits, so it shines in scientific computing where precision is crucial, but at the cost of twice the storage of FP32 and, on most hardware, slower computation.
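The precision figures above can be checked directly with `std::numeric_limits`. The sketch below assumes an IEEE 754 platform and enforces that with `static_assert`; the `FloatInfo` struct and the `describe` helper are hypothetical names chosen for this example.

```cpp
#include <limits>

// Fail to compile on platforms where float/double are not IEEE 754 binary32/binary64.
static_assert(std::numeric_limits<float>::is_iec559, "float is not IEEE 754 binary32");
static_assert(std::numeric_limits<double>::is_iec559, "double is not IEEE 754 binary64");

struct FloatInfo {
    int size_bytes;       // storage per value
    int mantissa_digits;  // significand bits, including the implicit leading 1
    int decimal_digits;   // decimal digits that survive a decimal -> binary -> decimal round trip
    double epsilon;       // gap between 1.0 and the next representable value
    double max_value;     // largest finite value
};

// Collect the key properties of a floating-point type T.
template <typename T>
constexpr FloatInfo describe() {
    return {static_cast<int>(sizeof(T)),
            std::numeric_limits<T>::digits,
            std::numeric_limits<T>::digits10,
            static_cast<double>(std::numeric_limits<T>::epsilon()),
            static_cast<double>(std::numeric_limits<T>::max())};
}
```

With this, `describe<float>()` reports 4 bytes, 24 significand bits (23 stored plus the implicit leading 1), and 6 guaranteed decimal digits, while `describe<double>()` reports 8 bytes, 53 bits, and 15 digits.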

One of today’s protagonists, FP16, is quite impressive: it uses only 16 bits (1 sign bit, 5 exponent bits, and 10 mantissa bits). From a storage perspective, it needs half the space of FP32, which is particularly valuable for large models and massive datasets because it significantly reduces memory usage and memory traffic. Moreover, on AI accelerators with dedicated FP16 units, such as the Tensor Cores in NVIDIA’s Volta architecture GPUs, FP16 matrix operations can run several times faster than FP32, like equipping model training with a “super engine.”
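Because standard C++ before C++23 has no portable FP16 type, one way to see the 1-5-10 bit layout is to decode raw 16-bit patterns by hand. This is a minimal sketch; the function name `fp16_bits_to_double` is hypothetical, and real code would normally rely on hardware conversion instructions or a library type instead.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Decode an IEEE 754 binary16 bit pattern into a double.
// Layout: 1 sign bit | 5 exponent bits (bias 15) | 10 mantissa bits.
double fp16_bits_to_double(std::uint16_t bits) {
    const int sign = (bits >> 15) & 0x1;
    const int exponent = (bits >> 10) & 0x1F;
    const int mantissa = bits & 0x3FF;
    double value;
    if (exponent == 0) {
        // Zero or subnormal: no implicit leading 1, value = mantissa * 2^-10 * 2^-14.
        value = std::ldexp(static_cast<double>(mantissa), -24);
    } else if (exponent == 0x1F) {
        // All-ones exponent: infinity if the mantissa is zero, otherwise NaN.
        value = (mantissa == 0) ? std::numeric_limits<double>::infinity()
                                : std::numeric_limits<double>::quiet_NaN();
    } else {
        // Normal: (1 + mantissa / 2^10) * 2^(exponent - 15) = (1024 + mantissa) * 2^(exponent - 25).
        value = std::ldexp(static_cast<double>(mantissa | 0x400), exponent - 25);
    }
    return sign ? -value : value;
}
```

For example, `0x3C00` decodes to 1.0, `0x7BFF` to 65504 (the largest finite FP16 value), `0x0400` to about 6.10×10⁻⁵ (the smallest normal value), and `0x0001` to about 5.96×10⁻⁸ (the smallest subnormal value).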

However, this “little guy” also has its “quirks.” With so few bits, FP16 has a narrow numerical range and only about 3 significant decimal digits, which can cause numerical stability problems. Its positive values range from about 5.96×10⁻⁸ (the smallest subnormal) to 65504, whereas FP32 covers roughly 1.4×10⁻⁴⁵ to 3.4×10³⁸, a significant gap. In deep learning, gradient values are often very small, so in FP16 they are prone to underflow: values below the representable range become zero, which can cause substantial deviations in the results. At the other end, values above 65504 overflow to infinity. Even within the range, converting an FP32 value to FP16 discards most of its mantissa bits, so rounding errors reduce precision.
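The underflow, overflow, and rounding effects described above can be reproduced without FP16 hardware by rounding values to the nearest FP16 value in software. The sketch below is a simplified simulation that assumes round-to-nearest-even (the IEEE 754 default) and the default floating-point environment. `round_to_fp16` is a hypothetical helper, and it returns the rounded value widened to `double` rather than a real 16-bit type.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>

// Round x to the nearest FP16 value (ties to even) and return it as a double.
double round_to_fp16(double x) {
    if (!std::isfinite(x) || x == 0.0) return x;
    int e = 0;
    std::frexp(x, &e);  // x = m * 2^e with 0.5 <= |m| < 1
    // FP16 keeps 11 significant bits, so the spacing in this binade is 2^(e - 11).
    // Below the smallest normal value (2^-14) the spacing stays at 2^-24 (subnormals).
    const int quantum_exp = std::max(e - 11, -24);
    // nearbyint rounds to nearest, ties to even, under the default rounding mode.
    const double rounded =
        std::ldexp(std::nearbyint(std::ldexp(x, -quantum_exp)), quantum_exp);
    // Anything that rounds past the largest finite FP16 value overflows to infinity.
    if (std::fabs(rounded) > 65504.0) {
        return std::copysign(std::numeric_limits<double>::infinity(), x);
    }
    return rounded;
}
```

Under this simulation, `round_to_fp16(1e-8)` underflows to 0, `round_to_fp16(70000.0)` overflows to infinity, and `round_to_fp16(0.1)` returns 0.0999755859375, which is the rounding error introduced when that value is stored in FP16.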

The core idea of mixed precision computation is to combine the advantages of FP32 and FP16, taking the best of both worlds. Compute-heavy operations that can tolerate lower precision, such as the large matrix multiplications in the forward and backward passes, run in FP16 to accelerate computation and reduce memory usage. At the critical points that determine the stability and accuracy of the model, such as parameter updates and gradient accumulation, FP32 is used to keep the computation numerically stable so that the model converges in the right direction. Through this division of labor, we get the speed and low memory usage of FP16 while FP32 preserves the precision and stability of training, opening a new fast track for neural network model training.
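A small numeric experiment shows why parameter updates and gradient accumulation are kept in FP32. The sketch below uses a software FP16 rounding helper (round to nearest, ties to even) and repeatedly adds a fixed update of 1e-4, once to a value stored only in FP16 and once to an FP32 copy. The update size, step counts, and the names `round_to_fp16`, `AccumulateResult`, and `accumulate` are hypothetical choices for illustration; this is not the training loop of any particular framework.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>

// Round to the nearest FP16 value (ties to even), returned as a double.
// Software simulation; assumes the default rounding mode.
double round_to_fp16(double x) {
    if (!std::isfinite(x) || x == 0.0) return x;
    int e = 0;
    std::frexp(x, &e);                              // |x| in [2^(e-1), 2^e)
    const int quantum_exp = std::max(e - 11, -24);  // FP16 spacing, subnormals included
    const double r = std::ldexp(std::nearbyint(std::ldexp(x, -quantum_exp)), quantum_exp);
    return std::fabs(r) > 65504.0 ? std::copysign(std::numeric_limits<double>::infinity(), x) : r;
}

struct AccumulateResult {
    double fp16_only;  // running value rounded to FP16 after every addition
    double fp32;       // running value kept in FP32
};

// Add the same small update `steps` times to `start`, in FP16 and in FP32.
// The update is rounded to FP16 first, as if it came from an FP16 backward pass.
AccumulateResult accumulate(float start, float update, int steps) {
    const double update16 = round_to_fp16(update);
    double value16 = round_to_fp16(start);
    float value32 = start;
    for (int i = 0; i < steps; ++i) {
        // The exact sum of two FP16 values fits in a double, so rounding once
        // here matches a correctly rounded FP16 addition.
        value16 = round_to_fp16(value16 + update16);
        // The FP32 copy keeps the small update instead of rounding it away.
        value32 += static_cast<float>(update16);
    }
    return {value16, static_cast<double>(value32)};
}
```

Starting from a weight of 1.0, `accumulate(1.0f, 1e-4f, 1000)` leaves the FP16-only value stuck at exactly 1.0, because 1e-4 is smaller than half the FP16 spacing near 1.0 (2⁻¹⁰ ≈ 9.8×10⁻⁴), while the FP32 copy reaches about 1.1. Summing 10,000 such gradients from zero with `accumulate(0.0f, 1e-4f, 10000)` stalls at 0.25 in FP16 but reaches about 1.0 in FP32. This is the motivation for keeping an FP32 copy of the weights and accumulating in FP32 even when the bulk of the arithmetic runs in FP16.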