Machine Heart Editorial Team

ICLR 2020, a top AI conference, will be held on April 26 in Addis Ababa, Ethiopia. Of the 2594 final submissions, 687 were accepted, an acceptance rate of 26.5%. This article introduces an accepted paper from Zhang Quanshi's team at Shanghai Jiao Tong University, “Interpretable Complex-Valued Neural Networks for Privacy Protection.” In this paper, the researchers propose a novel privacy protection mechanism that targets mid-level features.

The training and inference processes of deep neural networks often leak information about the dataset. With the release and promotion of various machine learning services, users typically only need to preprocess data locally, extract shallow features, and then send them to third-party platforms or the cloud for further learning. During this process, however, users' private data face significant leakage risks: attackers can target the mid-level features collected by third-party platforms to recover users' input data, such as facial information and fingerprint data. The researchers therefore aim to propose a new privacy protection mechanism which ensures that, even if attackers know the internal parameters of the neural network, they cannot recover input data from mid-level features.

The core of this work is to study a neural network whose mid-level features can be encrypted. Mid-level features of neural networks often contain rich information and play a crucial role in bridging the upper and lower layers. Encrypting mid-level features requires that the encrypted features do not leak sensitive user information (such as input data), while also allowing the encrypted features to continue through the neural network to complete downstream tasks. This necessitates finding a balance between data privacy and the accuracy of the network model.

In this paper, researchers from Shanghai Jiao Tong University propose using complex-valued neural networks for privacy protection, hiding the private information to be protected in a specific phase of the complex-valued features. Overall, the proposed algorithm is similar to homomorphic encryption. Traditional homomorphic encryption algorithms, however, have extremely high computational complexity, whereas this complex-valued neural network computes very efficiently: it only raises the computational cost to twice that of a traditional network, which enhances the application potential of the encryption algorithm. Generally, even if attackers obtain the internal parameters and mid-level features of the neural network, they cannot decipher the private information.

Core Algorithm

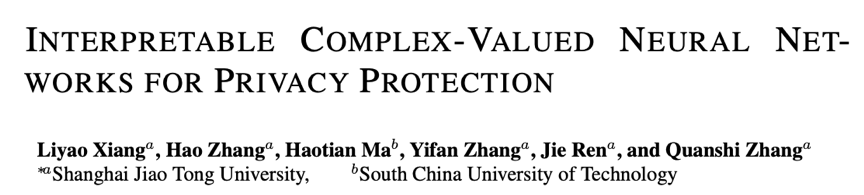

The researchers propose using complex-valued neural networks for privacy protection and tested the security of the network against a series of possible attacks. Specifically, they transformed the original real-valued mid-level features of the neural network into complex-valued features, building a complex-valued neural network, as shown in the figure below (a).

The complex neural network can be divided into three modules:

-

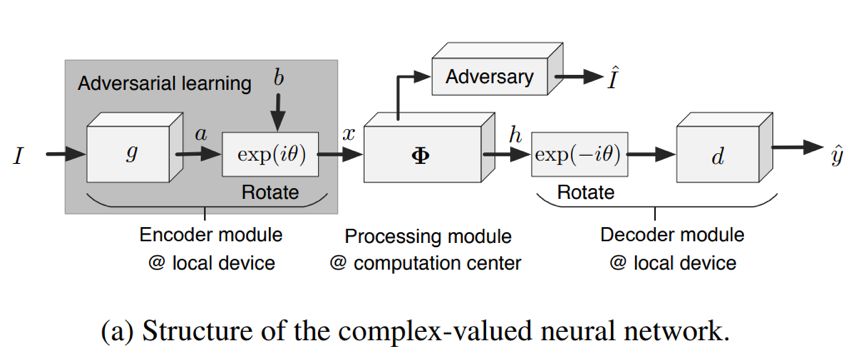

Local encoding module.The input image I is first processed by the encoder g to extract shallow features a=g(I);then rotated by a certain angle for encryption, thus hiding the real features in the specific phase of the complex-valued features:

where θ is the random rotation angle, and b is the disturbance term that does not contain any information related to a;finally, the encrypted result x is sent to the cloud.

where θ is the random rotation angle, and b is the disturbance term that does not contain any information related to a;finally, the encrypted result x is sent to the cloud. -

Cloud processing module.The processor Φ processes the encrypted data x sent by the user, obtains deep information h=Φ(x), and sends the processing result back to the local user.

-

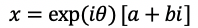

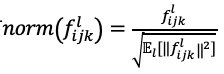

Local decoding module.After receiving the processing result h from the cloud, it first performs inverse rotation decryption on h, and then decodes the decrypted information using decoder d to obtain the final output:

,where R(⋅) represents the real part of the complex number.

,where R(⋅) represents the real part of the complex number.
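The three modules above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the encoder g and decoder d are omitted, the cloud module Φ is assumed to be a single bias-free linear map with hypothetical real weights `W`, and all function names are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode(a, rng):
    """Hide the real feature a in a random phase: x = e^(iθ)(a + bi)."""
    theta = rng.uniform(0.0, 2.0 * np.pi)   # secret rotation angle, kept locally
    b = rng.standard_normal(a.shape)        # disturbance term, independent of a
    x = np.exp(1j * theta) * (a + 1j * b)
    return x, theta

def process(x, W):
    """Toy cloud module Φ (assumption): a bias-free linear map with real weights."""
    return W @ x

def decrypt(h, theta):
    """Undo the rotation and keep the real part: R(h e^(-iθ))."""
    return np.real(h * np.exp(-1j * theta))

a = rng.standard_normal(8)          # stands in for a = g(I)
W = rng.standard_normal((4, 8))     # hypothetical processing weights

x, theta = encode(a, rng)
recovered = decrypt(process(x, W), theta)
plain = W @ a                       # what an unencrypted network would compute
print(np.allclose(recovered, plain))
```

With real weights, the map acts on the real and imaginary parts separately (W(a + bi) = Wa + i·Wb), which is one way to read the article's statement that the cost is about twice that of a real-valued network.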

Note that if the feature a + bi is rotated by angle θ, all corresponding features in the processing module Φ should be rotated by the same angle, so that h can be decrypted by rotation and then decoded to obtain the correct result. Thus, the operations of the processing module must satisfy the following equation, where Φ_j represents the j-th layer of the processing module and f_j is the output of the j-th layer.

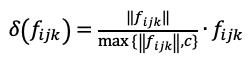

To ensure this equation holds, the implementation of each layer in the neural network needs to be adjusted. For convolution layers, the bias term is removed; for ReLU layers, a replacement nonlinearity is used; for batch-normalization layers, a replacement normalization is used; for max-pooling layers, the feature with the largest magnitude, computed from its real and imaginary parts, is selected; for dropout layers, some features are randomly selected and discarded.
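The layer adjustments above can be checked numerically. The sketch below is an illustration under stated assumptions, not the paper's code: the exact ReLU and batch-normalization replacements are not reproduced in this article, so `mag_relu` and `mag_norm` assume magnitude-based forms (with a hypothetical threshold `c`), chosen only because they leave the phase untouched.

```python
import numpy as np

def conv_like(f, W):
    """Bias-free linear layer: a bias would not rotate with the feature."""
    return W @ f

def mag_relu(f, c=1.0):
    """Assumed ReLU replacement: scale by |f| / max(|f|, c), phase unchanged."""
    mag = np.abs(f)
    return f * mag / np.maximum(mag, c)

def mag_norm(f, eps=1e-8):
    """Assumed batch-norm replacement: divide by the root mean squared magnitude."""
    return f / np.sqrt(np.mean(np.abs(f) ** 2) + eps)

def mag_max_pool(f, size=2):
    """Keep, in each window, the complex feature with the largest magnitude."""
    windows = f.reshape(-1, size)
    idx = np.argmax(np.abs(windows), axis=1)
    return windows[np.arange(windows.shape[0]), idx]

def phi(f, W):
    return mag_max_pool(mag_norm(mag_relu(conv_like(f, W))))

def naive_relu(f):
    """Standard ReLU applied to real and imaginary parts separately."""
    return np.maximum(f.real, 0.0) + 1j * np.maximum(f.imag, 0.0)

rng = np.random.default_rng(1)
f = np.array([1 + 2j, -1 + 0.5j, 0.3 - 2j, -2 - 1j, 0.5 + 0.1j, -0.2 + 1.5j])
W = rng.standard_normal((8, 6))     # hypothetical real weights
rot = np.exp(1j * 0.7)              # rotation by θ = 0.7 rad

# Rotating the input rotates the output by the same angle.
equivariant = np.allclose(phi(rot * f, W), rot * phi(f, W))
# The naive ReLU does not commute with the rotation.
naive_ok = np.allclose(naive_relu(rot * f), rot * naive_relu(f))
print(equivariant, naive_ok)
```

Every operation here depends on the feature only through its magnitude or through a linear map without bias, which is why the rotation passes through unchanged.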

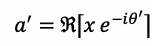

To further strengthen privacy protection and prevent attackers from guessing the real feature a, the researchers used the idea of a GAN to train the encoder g. Let a' denote the feature obtained by decrypting with a random angle θ', that is, a' = R(x e^(-iθ')). Ideally, a and a' should follow the same distribution, making it difficult for attackers to tell which one is the real feature. The researchers therefore introduce a discriminator D and train the encoder so that D cannot distinguish a from a'. The loss function for training the encoder, based on WGAN, is as follows:
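To make the role of a' concrete, the sketch below decrypts with a wrong angle and compares the result with the closed form a' = a cos(θ - θ') - b sin(θ - θ'), which follows from expanding the rotation. The critic gap at the end is the generic WGAN form, used here as an assumption because the article's exact loss is not reproduced; the linear `critic` with weights `w` is a hypothetical stand-in for a trained discriminator D.

```python
import numpy as np

rng = np.random.default_rng(2)

def decrypt(x, angle):
    """R(x e^(-i*angle)): the real feature if angle equals θ, a decoy otherwise."""
    return np.real(x * np.exp(-1j * angle))

def critic(feat, w):
    """Hypothetical linear critic D; a real one would be a trained network."""
    return feat @ w

n, dim = 256, 16
a = rng.standard_normal((n, dim))                   # stands in for a = g(I)
b = rng.standard_normal((n, dim))                   # disturbance term
theta = rng.uniform(0, 2 * np.pi, size=(n, 1))      # true angle per sample
x = np.exp(1j * theta) * (a + 1j * b)

theta_fake = rng.uniform(0, 2 * np.pi, size=(n, 1)) # attacker's random guess θ'
a_fake = decrypt(x, theta_fake)

# Closed form of the decoy feature.
delta = theta - theta_fake
closed_form = a * np.cos(delta) - b * np.sin(delta)

w = rng.standard_normal(dim)
# WGAN-style gap (assumed form): the critic tries to enlarge it, the encoder to shrink it.
gap = np.mean(critic(a, w)) - np.mean(critic(a_fake, w))
print(np.allclose(a_fake, closed_form), float(gap))
```

The closed form shows why the disturbance term b matters: a wrong angle mixes a with b, so the decoy is a blend of the real feature and unrelated content.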

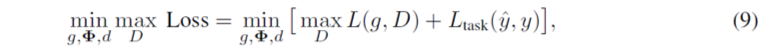

The total loss of the complex neural network can be expressed as:

Experimental Design

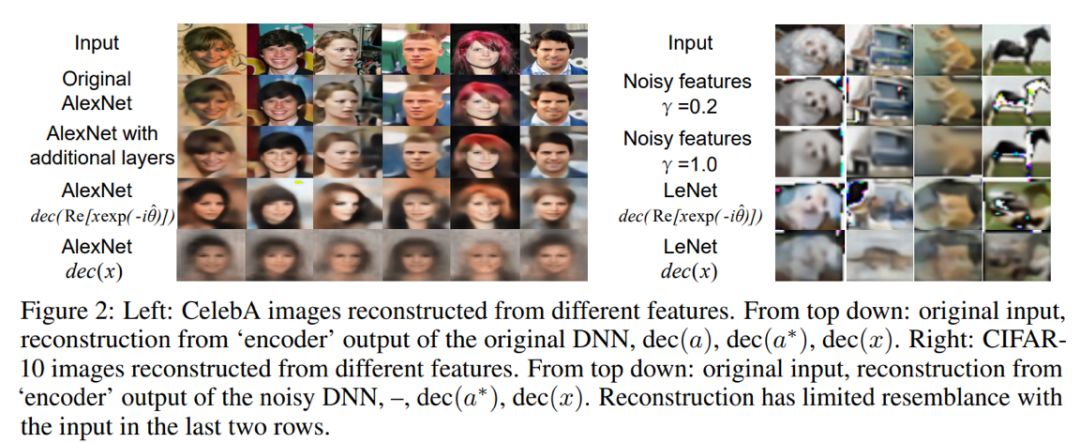

The researchers ran experiments with network structures such as ResNet-20/32/44/56/110, LeNet, VGG-16, and AlexNet on datasets such as CelebA, CIFAR-10, and CUB-200, testing against different attack methods. The results indicate that the proposed complex-valued neural network effectively resists these attacks, protecting user data without compromising the network's classification ability.

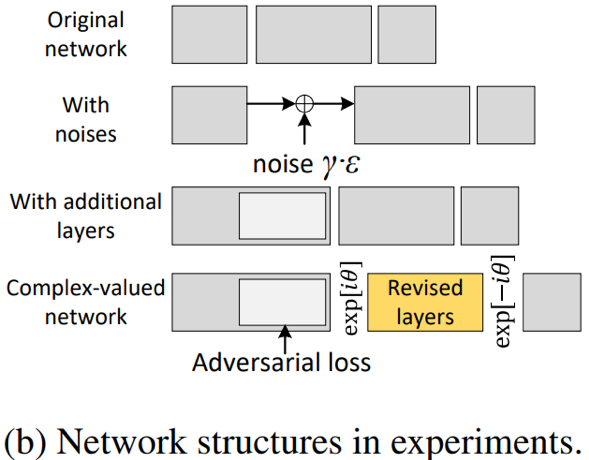

They designed three network structures as baselines for comparative experiments, as shown in the figure above (b). “Original” refers to a standard deep neural network. “With noises” refers to adding noise directly to the real feature a in the original network to achieve encryption, where γ controls the noise amplitude. “With additional layers” refers to adding to the original network the extra layers that the GAN introduces: because the GAN structure was introduced in the encoding part of the complex-valued neural network, the same structural modifications were made to this baseline for a fair comparison, but without adversarial training.

Researchers designed experiments against the following attack methods:

Reconstruction-Based Image Attacks

Such attacks may reconstruct the image directly from the encrypted features, or may first use the discriminator to find the optimal angle θ̂ and then reconstruct the image as dec(a^*) from the decrypted feature a^* = R(x e^(-iθ̂)). Some reconstruction results are shown in the figure below.

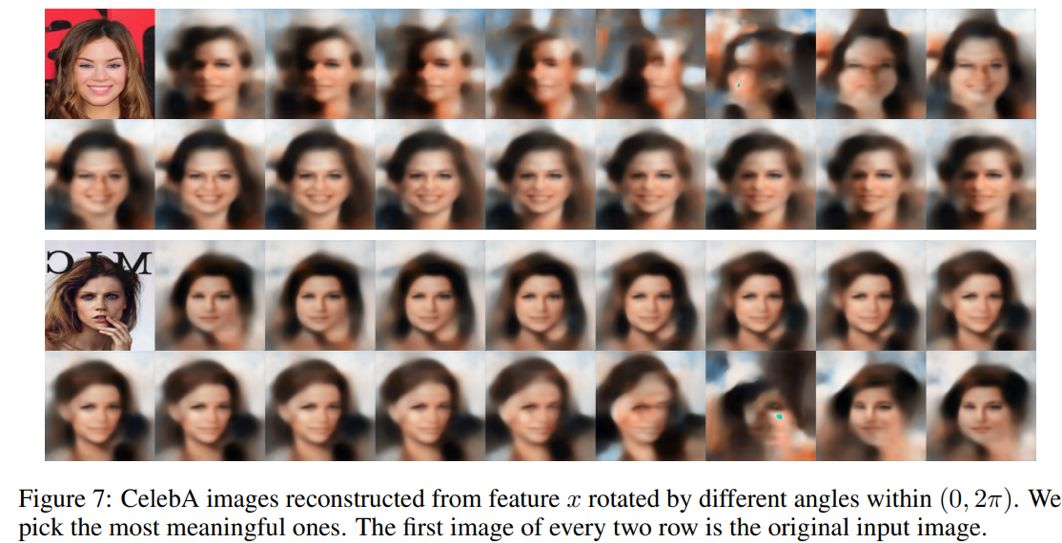

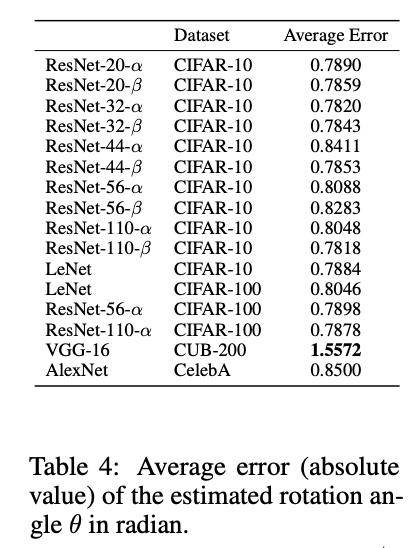

For the attack that uses the discriminator to find the optimal angle θ̂, the figure below shows images reconstructed on the CelebA dataset from features decrypted with different angles. The researchers also recorded the difference between the optimal angle found with the discriminator and the real angle, as shown in the table below. The results indicate that the discriminator cannot accurately identify the correct rotation angle.
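The angle-search attack described above can be sketched as a grid search. This is an assumption about how such a search might be run, not the authors' procedure: `search_angle`, `angle_error`, and the linear scoring function standing in for a trained discriminator are all hypothetical.

```python
import numpy as np

def search_angle(x, score, num_angles=360):
    """Grid search for the angle whose decrypted feature the attacker's score prefers."""
    candidates = np.linspace(0.0, 2.0 * np.pi, num_angles, endpoint=False)
    scores = [score(np.real(x * np.exp(-1j * t))) for t in candidates]
    return candidates[int(np.argmax(scores))]

def angle_error(estimate, truth):
    """Smallest absolute difference between two angles, in [0, π]."""
    diff = (estimate - truth) % (2.0 * np.pi)
    return min(diff, 2.0 * np.pi - diff)

rng = np.random.default_rng(3)
a = rng.standard_normal(32)             # real feature
b = rng.standard_normal(32)             # disturbance term
theta = 1.234                           # true rotation angle, unknown to the attacker
x = np.exp(1j * theta) * (a + 1j * b)

w = rng.standard_normal(32)             # hypothetical stand-in for a trained discriminator
theta_hat = search_angle(x, lambda feat: float(feat @ w))
err = angle_error(theta_hat, theta)
print(theta_hat, err)
```

The error is measured on the circle, so an estimate just below 2π and a true angle just above 0 count as close. A large `err` corresponds to the article's finding that the discriminator does not pin down the correct angle.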

Sensitive Information-Based Attacks

Some features in the input image may contain sensitive information (such as gender or skin color). Traditional neural networks cannot effectively hide such sensitive features, which allows attackers to train a new classifier on mid-level features to obtain sensitive information. The researchers designed a series of experiments to evaluate how well complex-valued neural networks protect sensitive information. Such attacks can be categorized into the following types:

- Train a classifier for sensitive information using the original images from the user dataset, then reconstruct the input image using dec(a^*) and extract sensitive information via the classifier;

- Directly use a^* to train a classifier and extract sensitive information;

- Train a classifier using images reconstructed from dec(a^*) and extract sensitive information;

- Use the k-NN algorithm to find the k nearest a^* in the dataset and extract the corresponding sensitive information.
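The fourth attack type can be sketched with a plain k-NN majority vote. The example is a toy under stated assumptions: `knn_predict` is a hypothetical helper, the two-dimensional features stand in for a^*, and the labels stand in for a binary sensitive attribute; none of the values come from the paper.

```python
import numpy as np

def knn_predict(train_feats, train_labels, query, k=3):
    """Majority vote over the k training features closest to the query."""
    dists = np.linalg.norm(train_feats - query, axis=1)
    nearest = np.argsort(dists)[:k]
    return int(np.bincount(train_labels[nearest]).argmax())

# Toy data: two well-separated clusters standing in for a^* features
# labeled with a binary sensitive attribute (all values are made up).
train_feats = np.array([[0.0, 0.1], [0.2, -0.1], [-0.1, 0.0],
                        [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]])
train_labels = np.array([0, 0, 0, 1, 1, 1])

pred_low = knn_predict(train_feats, train_labels, np.array([0.1, 0.0]))
pred_high = knn_predict(train_feats, train_labels, np.array([5.0, 5.0]))
print(pred_low, pred_high)
```

The attack succeeds here only because the toy features cluster by attribute. The protection described in the article aims at the opposite situation, where features decrypted with a wrong angle no longer sit near samples that share the sensitive attribute.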

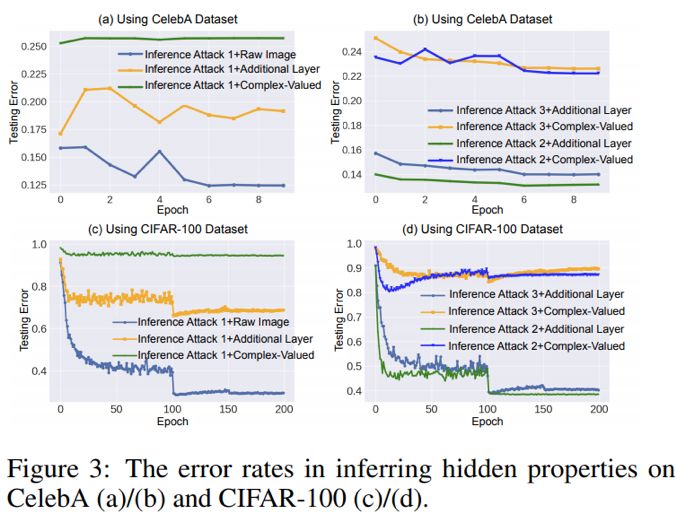

The experimental curves for the first three types of attacks are shown in the figure below.

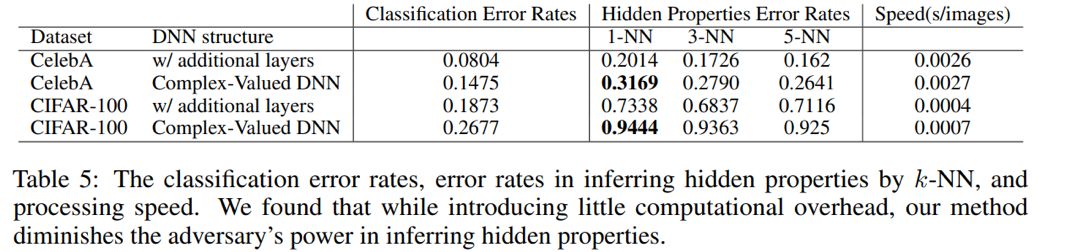

The results of the fourth type of attack are shown in the table below, which also gives the classification error rate and image processing speed of each network. Despite a small amount of additional computation, the complex-valued neural network effectively resists attacks that aim to infer sensitive information.

Author contact: [email protected], qszhang.com