Introduction

In this age of information explosion, data has become one of the most valuable resources. Python web scraping, as an efficient technique for acquiring web data, is becoming increasingly important. Today, I will guide you to learn Python web scraping from scratch, making it easy for even programming novices to get started!

1. Basic Concepts of Web Scraping

1. What is a Web Crawler?

A web crawler, also known as a web spider, is a program that automatically fetches information from the internet. In simple terms, a crawler simulates human browsing behavior to automatically gather the data we need from the web.

2. What Can a Crawler Do?

-

Data collection (news, product information, social media content, etc.)

-

Search engine data collection

-

Automated testing

-

Price monitoring

-

Sentiment analysis

3. Basic Workflow of a Crawler

-

Send an HTTP request to fetch the webpage content

-

Parse the webpage content to extract useful data

-

Store the extracted data

-

(Optional) Follow links to continue crawling

2. Setting Up the Python Scraping Environment

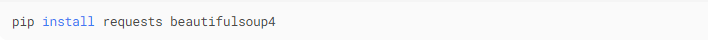

Before we begin, we need to prepare the Python environment:

-

Install Python (recommended version 3.6+)

-

Install necessary libraries:

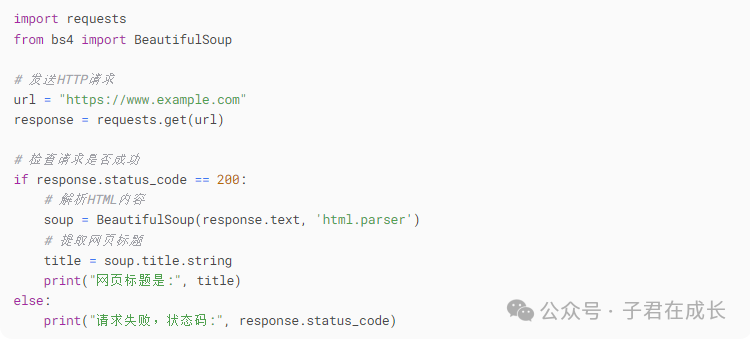

3. Your First Scraping Example: Fetching a Webpage Title

Let’s start with the simplest example:

Run this code, and you will see the title of the specified webpage printed out. This is the simplest crawler!

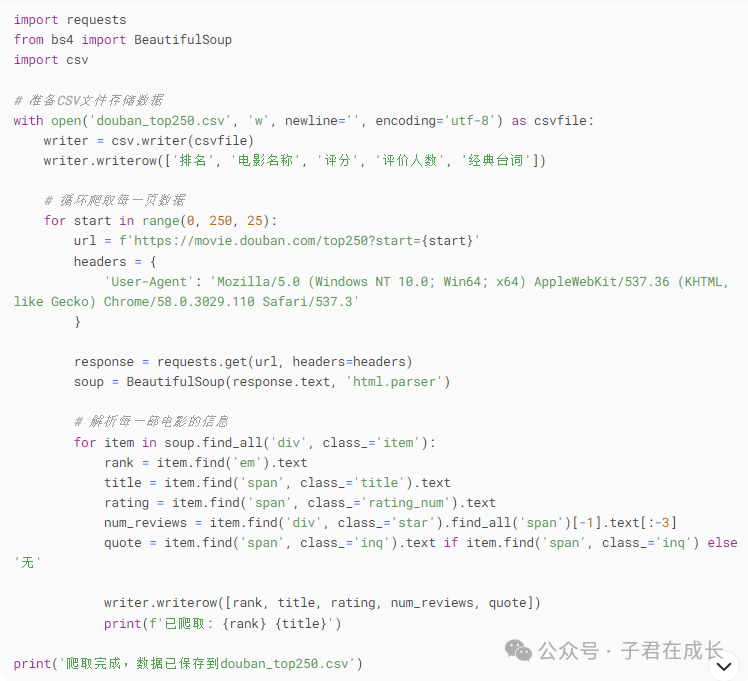

4. Advanced Example: Scraping Douban Movie Top 250

Let’s try a more interesting example: scraping information from Douban Movie Top 250.

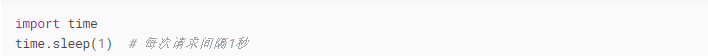

5. Considerations for Web Scraping

-

Follow the robots.txt protocol: Check the target website’s robots.txt file (e.g., https://www.example.com/robots.txt)

-

Set request intervals: Avoid putting too much pressure on the server

-

Use User-Agent: Simulate browser behavior

-

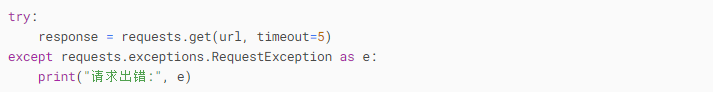

Handle exceptions: Network requests may fail, so exception handling is necessary

6. Anti-Scraping Strategies and Countermeasures

Websites often implement anti-scraping mechanisms to prevent being crawled:

-

User-Agent detection: The solution is to set a legitimate User-Agent

-

IP restrictions: Use a proxy IP pool

-

CAPTCHA: You can use a captcha solving platform or machine learning recognition

-

Dynamic loading: Use Selenium or analyze API interfaces

7. More Powerful Scraping Frameworks

When you need to scrape large-scale data, consider using professional scraping frameworks:

-

Scrapy: A powerful scraping framework

Selenium: Used for handling JavaScript-rendered pages

Conclusion

Through this tutorial, you have mastered the basic knowledge of Python web scraping and can complete simple data scraping tasks. Remember, scraping technology is a double-edged sword; when using it, you must comply with laws and regulations and the rules of the website, and avoid putting too much burden on the target site.

Disclaimer: This tutorial is for learning and communication purposes only. Please do not use it for illegal purposes. When scraping data, you should comply with the terms of use of the relevant websites and respect data copyright and privacy.