Container Technology

- File Isolation: chroot

- Access Isolation: namespaces

- Resource Isolation: cgroups

- LXC: Linux Containers

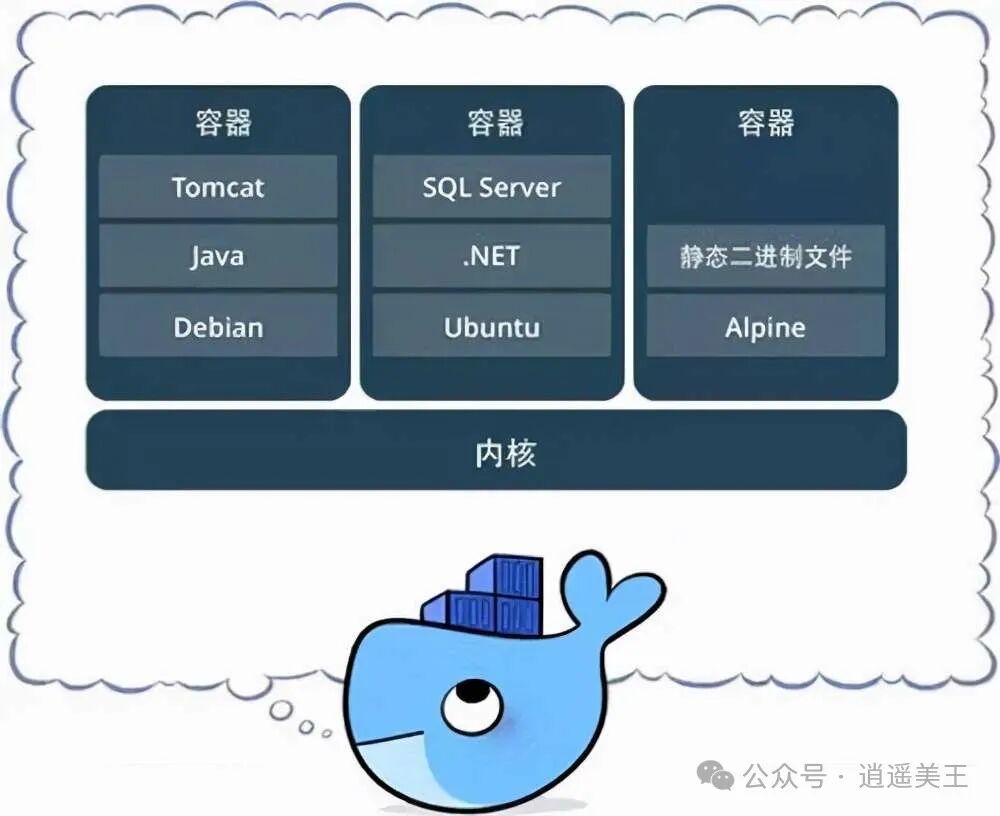

What is a Container

A container is a way of packaging and deploying software that bundles an application together with its dependencies (code, runtime, system tools, system libraries, etc.) into a single unit.

Differences Between Docker and VM

- Docker is an application-layer abstraction: containers are isolated from each other through Linux namespaces (with cgroups limiting their resources) and share the host's operating system kernel.

- A VM is an abstraction of the physical hardware layer: each VM runs its own complete operating system, so it starts slowly.

- VMs are primarily for providing system environments, while containers are mainly for providing application environments.

What Advantages Does Containerization Offer Compared to Virtualization

Extremely lightweight; deployment and startup in seconds; build once, deploy anywhere; elastic scaling.

Docker

Why Use Docker Instead of LXC

- Defines a way to package applications and their environment dependencies.

- Supports multiple image versions (tags).

- Image repository for distribution.

Differences Between Containers and Images

- An image is a read-only template containing everything needed to run a container; its content does not change once built, and any number of new containers can be created from it.

- An image consists of multiple read-only layers, while a container has an additional read-write layer on top of the read-only layers.
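To make the layering concrete, here is a minimal Python sketch of how a union filesystem resolves paths across layers. It is a hypothetical toy model, not Docker's overlay2 driver: each layer is a plain dict, the container's read-write layer sits on top, and a deletion is recorded with a hypothetical WHITEOUT marker (overlayfs uses special whiteout files for the same purpose).

```python
from typing import Optional

# Hypothetical marker meaning "this path was deleted in an upper layer".
WHITEOUT = object()


class UnionFS:
    """Hypothetical toy model: read-only image layers plus one read-write container layer."""

    def __init__(self, image_layers: list[dict]):
        # image_layers[0] is the bottom (base) layer; later entries sit above it.
        self.image_layers = image_layers
        self.rw_layer: dict = {}  # per-container writable layer, starts empty

    def read(self, path: str) -> Optional[str]:
        # Search from the top layer down; the first layer that knows the path wins.
        for layer in [self.rw_layer, *reversed(self.image_layers)]:
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, data: str) -> None:
        # Copy-on-write: changes only ever land in the container's own layer.
        self.rw_layer[path] = data

    def delete(self, path: str) -> None:
        # Read-only layers cannot be modified, so a deletion is recorded as a whiteout.
        self.rw_layer[path] = WHITEOUT
```

Two containers created from the same image would share the same image_layers list but each get their own rw_layer, which is why starting another container from an existing image is cheap.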

How to View Docker-Related Processes

- docker ps: lists the running Docker containers.

- docker top: shows the processes running inside a specified container.

- docker inspect: shows detailed information about a container, including process information such as the host PID of its main process.

What is the Purpose of a Dockerfile

Docker can automatically build images by reading instructions from a Dockerfile.

A Dockerfile is a text file containing all the commands a user could run on the command line to assemble an image. Instructions that change the filesystem (RUN, COPY, ADD) each create a new layer; the others only record metadata.

Most Common Instructions

FROM: Establishes a base image for subsequent instructions.

RUN: Executes a command on top of the current image and commits the result as a new layer; later instructions build on that layer.

CMD: Provides the default command (or default arguments) for a running container. If a Dockerfile contains multiple CMD instructions, only the last one takes effect.

ADD: Copies files into the image. Local tar archives (including gzip-, bzip2- or xz-compressed ones) are extracted automatically, and ADD can also fetch remote URLs; zip files are not extracted.

COPY: Copies files to the image.

WORKDIR: Sets the working directory.

EXPOSE: Declares the port(s) the container's process listens on. This is documentation only; the port is actually published with docker run -p (or -P).

ENTRYPOINT: Specifies the command to run when the container starts. If there are multiple ENTRYPOINT instructions, only the last one takes effect. If a Dockerfile has both CMD and an exec-form ENTRYPOINT, CMD, or the arguments given after the image name in docker run, are passed as arguments to ENTRYPOINT.

How to Ensure Container A Runs Before Container B When Using Docker Compose

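Declare depends_on on service B, listing A, so that Compose starts A first. By default this only waits for A's container to start, not for the application inside it to be ready; to wait for readiness, give A a healthcheck and use depends_on with condition: service_healthy.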
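Deriving a startup order from depends_on is, conceptually, a topological sort of the service graph. The sketch below uses Python's standard graphlib to compute such an order for hypothetical services (web, api, db, cache); it models ordering only, not Compose's health-check waiting.

```python
from graphlib import CycleError, TopologicalSorter


def startup_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return services ordered so that every dependency starts before its dependents."""
    # TopologicalSorter expects node -> predecessors, which is exactly what depends_on lists.
    sorter = TopologicalSorter({svc: set(deps) for svc, deps in depends_on.items()})
    try:
        return list(sorter.static_order())
    except CycleError as err:
        # The second element of err.args holds the nodes that form the cycle.
        raise ValueError(f"circular depends_on: {err.args[1]}") from err
```

For example, startup_order({"web": ["api"], "api": ["db", "cache"], "db": [], "cache": []}) places db and cache before api, and api before web.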

How to Run Multiple Processes in a Container

Use supervisord to manage multiple processes.

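dockershim

dockershim was the adapter built into the kubelet that let Kubernetes use Docker as its container runtime: Docker Engine does not implement the CRI, so dockershim translated the kubelet's CRI calls into Docker API calls. It was removed in Kubernetes 1.24.

The per-container process is a different shim: when Docker runs a container, containerd starts a containerd-shim process for it, which keeps the container running independently of the daemons.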

How to Limit Container CPU and Memory

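Use docker run --cpus (or --cpu-shares for a relative CPU weight) and --memory, for example: docker run --cpus=1.5 --memory=512m nginx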
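On a cgroup v2 host these flags end up as values in the container's cgroup files. The Python sketch below shows the arithmetic for --cpus (a quota/period pair in cpu.max, assuming Docker's default 100000 µs CFS period) and for --memory (bytes in memory.max, using the binary b/k/m/g suffixes). It deliberately leaves out --cpu-shares, whose conversion to cgroup v2 cpu.weight is runtime-specific, and it does not accept every input form Docker does.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period (100 ms)
_SUFFIXES = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cpu_max(cpus: float) -> str:
    """Translate --cpus into a cgroup v2 cpu.max line: '<quota_us> <period_us>'."""
    nano_cpus = round(cpus * 1_000_000_000)  # --cpus is stored as an integer count of nano-CPUs
    quota_us = nano_cpus * CFS_PERIOD_US // 1_000_000_000
    return f"{quota_us} {CFS_PERIOD_US}"


def memory_to_bytes(value: str) -> int:
    """Translate a --memory value such as '512m' or '2g' into bytes for memory.max."""
    value = value.strip().lower()
    if value and value[-1] in _SUFFIXES:
        return int(value[:-1]) * _SUFFIXES[value[-1]]
    return int(value)  # a bare number is already in bytes
```

Under these assumptions, --cpus=1.5 --memory=512m corresponds to cpu.max "150000 100000" (150 ms of CPU time per 100 ms period, i.e. one and a half CPUs) and memory.max 536870912.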

Docker Network Modes

bridge, host, overlay

The default is bridge mode. When the Docker daemon starts, it creates a virtual bridge named docker0, and each container started in bridge mode is attached to it through a veth pair.

Veth (Virtual Ethernet Devices)

A veth is a Linux virtual network device that emulates a hardware network card in software. Veth devices are always created in pairs, hence the name veth pair.

A veth pair acts as a point-to-point link: a packet sent into one end comes out of the other, so a veth pair can connect two network namespaces.
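A veth pair is usually set up with the ip command from iproute2. The Python sketch below only assembles the commands (printing them as a dry run by default) that create a network namespace, create a veth pair, move one end into the namespace, assign addresses and bring both ends up. The names ns1, veth0, veth1 and the 10.0.0.0/24 addresses are hypothetical, and actually executing the commands requires root on Linux.

```python
import subprocess


def veth_commands(ns: str, host_if: str, ns_if: str,
                  host_ip: str, ns_ip: str) -> list[list[str]]:
    """Build ip(8) commands connecting the host to namespace `ns` through a veth pair."""
    return [
        ["ip", "netns", "add", ns],  # create the network namespace
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ns_if],  # create the pair
        ["ip", "link", "set", ns_if, "netns", ns],  # move one end into the namespace
        ["ip", "addr", "add", host_ip, "dev", host_if],
        ["ip", "-n", ns, "addr", "add", ns_ip, "dev", ns_if],  # -n runs inside the namespace
        ["ip", "link", "set", host_if, "up"],
        ["ip", "-n", ns, "link", "set", ns_if, "up"],
    ]


def run_commands(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # needs root (CAP_NET_ADMIN)
```

Docker's bridge mode wires containers the same way, except that the host end of each pair is attached to the docker0 bridge rather than given its own address.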

VxLAN

Virtual Extensible Local Area Network, an extension protocol for VLAN.

VxLAN is essentially a tunneling (encapsulation) technology. It encapsulates L2 Ethernet frames inside L4 UDP datagrams that are carried across an L3 network, so the endpoints behave as if they were in the same L2 broadcast domain even though the traffic actually crosses L3 networks they are unaware of.
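The encapsulation step can be sketched in Python. Per RFC 7348, the VXLAN header is 8 bytes: a flags byte with the I bit (0x08) set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI) and 8 more reserved bits; the result travels as a UDP payload, by default to port 4789. The sketch only builds and parses the header; when the payload is sent over a UDP socket, the kernel adds the outer UDP, IP and Ethernet headers.

```python
import struct

VXLAN_PORT = 4789     # IANA-assigned VXLAN UDP port
VXLAN_FLAG_I = 0x08   # "VNI is valid" flag


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prepend a VXLAN header to an inner L2 Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # !B3xI: flags byte, 3 reserved bytes, then the VNI in the top 24 bits of a 32-bit word
    header = struct.pack("!B3xI", VXLAN_FLAG_I, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> tuple[int, bytes]:
    """Split a VXLAN UDP payload back into (vni, inner_frame)."""
    flags, word = struct.unpack("!B3xI", payload[:8])
    if not flags & VXLAN_FLAG_I:
        raise ValueError("VNI flag not set")
    return word >> 8, payload[8:]
```

The 24-bit VNI is what lets VXLAN address about 16 million isolated segments, compared with the 12-bit VLAN ID's 4094 usable values.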

Data Persistence

- volumes: Directories managed by Docker; you do not need to create them manually.

- bind mounts: A host directory specified by the user; note that file path formats differ between operating systems.

- tmpfs: Stored only in the host system's memory and never persisted to disk. Useful for temporary data that should not be persisted; unlike volumes and bind mounts, a tmpfs mount cannot be shared between containers.

Where Are Local Image Files Stored

Under /var/lib/docker/ by default: the containers directory stores container information, the image directory stores image metadata, and the storage-driver directory (overlay2 on current installations; aufs, together with graph, on older ones) stores the actual image layer files.

OCI

- Open Container Initiative: defines the runtime, image and distribution specifications.

- runC is the reference implementation of the runtime specification.

- containerd sits above runC and manages the complete container lifecycle: image pull and storage, container execution and supervision, storage and network attachments. Image building is handled elsewhere (e.g. by BuildKit).

K8s

A platform for automating deployment, scaling, and management of containerized applications.

CNCF

Cloud Native Computing Foundation.

CRI

Container Runtime Interface.

A specification (a gRPC API) defining how the kubelet communicates with the container runtime.

CNI

Container Network Interface standard.

CSI

Container Storage Interface standard.

Components

The control plane (master) node runs kube-apiserver, kube-controller-manager, kube-scheduler and etcd. Each worker node runs kubelet, kube-proxy and a container runtime.

What is the Port of kube-apiserver

kube-apiserver serves HTTPS on port 6443 by default. Older versions also served an insecure HTTP port, 8080, which has since been removed.

Common Controllers

Deployment Controller

ReplicaSet Controller

Deployment Update Strategy

1. Recreate: kills all running pods, then creates new ones;

2. RollingUpdate: replaces pods gradually, controlling the pace through the two parameters maxUnavailable and maxSurge.
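How the two parameters pace a rollout can be illustrated with a small simulation. This is a simplified, hypothetical model, not the Deployment controller's actual algorithm: it assumes every new pod becomes ready immediately and that maxSurge and maxUnavailable have already been resolved to absolute pod counts.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return the (old_pods, new_pods) counts after each step of a simulated rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    min_available = replicas - max_unavailable  # never drop below this many pods
    max_total = replicas + max_surge            # never run more than this many pods
    while old > 0 or new < replicas:
        # Scale up: create new pods while the total stays within the surge budget.
        new = min(replicas, new + max(0, max_total - (old + new)))
        # Scale down: remove old pods while enough pods stay available
        # (new pods count as available at once in this model).
        old -= min(old, max(0, old + new - min_available))
        steps.append((old, new))
    return steps
```

With replicas=3, maxSurge=1 and maxUnavailable=0 the model replaces one pod per step: (3, 0), (2, 1), (1, 2), (0, 3).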

What is the Role of Namespaces in K8s

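Namespaces partition a cluster's resources so that multiple environments or tenants can share one cluster in isolation: object names only need to be unique within a namespace, and resource quotas and RBAC permissions can be applied per namespace.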

Pod

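A pod plays the role of a process group: it is the smallest deployable unit and holds one or more tightly coupled containers that are scheduled together.

Namespaces shared by default: network, IPC (e.g. semaphores, shared memory) and UTS (hostname). The PID namespace is shared only when shareProcessNamespace is enabled.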

What is the Role of the Pause Container

Every pod runs a special container called the pause container (also known as the infra or root container), while the other containers are called business containers. The pause container exists mainly to create and hold the Linux namespaces (net, ipc, and pid when process-namespace sharing is enabled) that the business containers join. When the PID namespace is shared, pause runs as PID 1 (the init process) and reaps zombie processes.
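The zombie-reaping part of the pause container's job comes down to calling waitpid() on any child without blocking. The real pause is a tiny C program; the Python sketch below (POSIX only) shows the same loop under that assumption.

```python
import os


def reap_zombies() -> list[tuple[int, int]]:
    """Collect every child that has already exited; return (pid, exit_code) pairs."""
    reaped = []
    while True:
        try:
            # -1: wait for any child; WNOHANG: return immediately if none has exited
            pid, status = os.waitpid(-1, os.WNOHANG)
        except ChildProcessError:  # no children at all
            break
        if pid == 0:  # children exist, but none has exited yet
            break
        reaped.append((pid, os.waitstatus_to_exitcode(status)))
    return reaped
```

An init process would typically call this from a SIGCHLD handler, for example signal.signal(signal.SIGCHLD, lambda *_: reap_zombies()), and otherwise sleep forever in signal.pause(); that is essentially the structure of pause.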

Pod Lifecycle

- Pending: The pod has been accepted by the cluster, but one or more of its containers have not yet been created and started; this includes time spent waiting to be scheduled and downloading images;

- Running: All containers in the pod have been created, and at least one container is in a running state, starting, or restarting;

- Succeeded: All containers in the pod have terminated successfully and will not be restarted;

- Failed: All containers in the pod have exited, and at least one container has exited with a failure status;

- Unknown: For some reason the state of the pod cannot be obtained, typically because of an error communicating with the node hosting it;

What are the Pod Restart Policies

The pod restart policy applies to all containers within the pod, not to the pod itself, and is configured through the restartPolicy field.

- Always: Always restart the container after it terminates. This is the default policy.

- OnFailure: Restart the container only when it exits abnormally, with a non-zero exit status.

- Never: Never restart the container when it terminates, regardless of its exit status.
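The decision made when a container exits can be summarized as a small function. The sketch also models the restart back-off: Kubernetes documents an exponential delay of 10s, 20s, 40s, and so on, capped at five minutes (the CrashLoopBackOff state), and resets it after a container has run without problems for a while; that reset is left out here.

```python
BASE_DELAY_S = 10   # first back-off delay documented by Kubernetes
MAX_DELAY_S = 300   # back-off cap (5 minutes)


def should_restart(policy: str, exit_code: int) -> bool:
    """Decide whether a terminated container is restarted under restartPolicy."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy}")


def backoff_delay(restart_count: int) -> int:
    """Seconds to wait before the next restart: 10, 20, 40, ... capped at 300."""
    return min(BASE_DELAY_S * 2**restart_count, MAX_DELAY_S)
```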

What are the Pod Image Pull Policies

The pod image pull policy can be configured through the imagePullPolicy field.

- IfNotPresent: Pull the image only if it is not already present on the node. This is the default, except when the image tag is :latest or omitted, in which case the default is Always.

- Always: Contact the registry every time a container is started; if the image digest is unchanged, the locally cached image is reused.

- Never: Never pull the image; only local images are used, so the image must be placed on the node manually. If the node does not have it, the pod fails to start.
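Both the defaulting rule and the pull decision fit in a few lines. This Python sketch uses deliberately simplified image-reference parsing (a colon before the last slash is treated as a registry port, not a tag), and it assumes that an image pinned only by digest defaults to IfNotPresent.

```python
def default_pull_policy(image: str) -> str:
    """Policy filled in when imagePullPolicy is omitted (simplified reference parsing)."""
    name, _, digest = image.partition("@")
    last_segment = name.rsplit("/", 1)[-1]  # a ':' before the last '/' is a registry port
    tag = last_segment.partition(":")[2]
    if tag == "latest" or (not tag and not digest):
        return "Always"
    return "IfNotPresent"


def should_pull(policy: str, present_locally: bool) -> bool:
    """Decide whether the image is pulled before the container starts."""
    if policy == "Always":
        return True
    if policy == "IfNotPresent":
        return not present_locally
    if policy == "Never":
        if not present_locally:
            # The kubelet reports this situation as ErrImageNeverPull.
            raise RuntimeError("image not present and imagePullPolicy is Never")
        return False
    raise ValueError(f"unknown imagePullPolicy: {policy}")
```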

What are the Pod Liveness Probes

Checks whether the container is still running. A liveness probe can be defined for each container in the pod, and Kubernetes executes it periodically; if it fails (failureThreshold consecutive times, 3 by default), the kubelet kills the container and decides whether to restart it based on the restartPolicy.

- httpGet: Sends an HTTP GET request to the container's IP, port and path; a status code from 200 up to (but not including) 400 indicates success.

- exec: Executes a command inside the container and judges by its exit status: 0 means healthy, non-zero means unhealthy.

- tcpSocket: Tries to establish a TCP connection to the container's port; the check succeeds if the connection is established.
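The three handlers' success criteria can be expressed directly. This is a local Python sketch of the checks, not the kubelet's code: the 1-second default timeout mirrors the probes' timeoutSeconds default, and the HTTP request itself is omitted so that only the status-code rule is shown.

```python
import socket
import subprocess


def http_status_ok(status: int) -> bool:
    """httpGet succeeds for any status code >= 200 and < 400."""
    return 200 <= status < 400


def exec_probe(command: list[str], timeout_s: float = 1.0) -> bool:
    """exec succeeds when the command exits with status 0 within the timeout."""
    try:
        return subprocess.run(command, timeout=timeout_s).returncode == 0
    except (subprocess.TimeoutExpired, OSError):
        return False


def tcp_probe(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """tcpSocket succeeds if a TCP connection can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False
```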

What are the Pod Readiness Probes

Detects whether the container is ready to serve requests, for example whether its business process has finished initializing. A pod that is not ready is removed from the endpoints of matching Services, so it receives no Service traffic, but it is not restarted.

- exec: Executes a command in the container and checks its exit status; if the status is 0, the container is considered ready.

- httpGet: Sends an HTTP GET request to the container and judges readiness by the HTTP status code of the response.

- tcpSocket: Opens a TCP connection to the specified port of the container; if the connection is established, the container is considered ready.
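Whatever the handler, a single result does not flip the ready state: the container becomes not ready after failureThreshold consecutive failures (default 3) and ready again after successThreshold consecutive successes (default 1), starting out not ready. A minimal Python model of that bookkeeping, using a hypothetical ReadinessTracker class:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReadinessTracker:
    """Hypothetical model of readiness bookkeeping over consecutive probe results."""
    failure_threshold: int = 3   # consecutive failures before marking not ready
    success_threshold: int = 1   # consecutive successes before marking ready
    ready: bool = False          # readiness starts as "not ready"
    _streak: int = 0             # length of the current run of identical results
    _last: Optional[bool] = None

    def observe(self, probe_ok: bool) -> bool:
        """Record one probe result and return the (possibly updated) ready state."""
        self._streak = self._streak + 1 if probe_ok == self._last else 1
        self._last = probe_ok
        if probe_ok and self._streak >= self.success_threshold:
            self.ready = True
        elif not probe_ok and self._streak >= self.failure_threshold:
            self.ready = False
        return self.ready
```

With the defaults, one success marks the container ready, and it stays ready through two failures, becoming not ready only on the third.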

What is a Service

A Service exposes a set of pods (selected by labels) behind a stable virtual IP address and port, load-balancing traffic across them and providing service discovery (for example through cluster DNS).
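Conceptually, a Service selects pods by label and spreads connections across the ready ones. The sketch below models that with round robin, the default scheduler in kube-proxy's IPVS mode (the iptables mode picks backends randomly instead). The Pod and Service classes, pod names, labels and IPs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    ready: bool = True


class Service:
    """Hypothetical toy Service: a label selector plus round robin over ready pods."""

    def __init__(self, selector: dict, target_port: int):
        self.selector = selector
        self.target_port = target_port  # container port traffic is forwarded to
        self._next = 0

    def endpoints(self, pods: list[Pod]) -> list[str]:
        # A pod is an endpoint if it is ready and every selector label matches.
        return [f"{p.ip}:{self.target_port}" for p in pods
                if p.ready and all(p.labels.get(k) == v for k, v in self.selector.items())]

    def pick(self, pods: list[Pod]) -> str:
        eps = self.endpoints(pods)
        if not eps:
            raise RuntimeError("no ready endpoints")
        endpoint = eps[self._next % len(eps)]
        self._next += 1
        return endpoint
```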

PV Reclamation Policies

- Retain: This policy allows manual reclamation of resources; when deleting PVC, the PV still exists, and the PV is considered released, allowing the administrator to manually reclaim the volume.

- Delete: If the volume plugin supports it, deleting the PVC also deletes the PV together with the underlying storage asset. Dynamically provisioned volumes default to Delete; backends that support it include AWS EBS, GCE PD, Azure Disk, OpenStack Cinder, etc.

- Recycle: If the volume plugin supports it, performs a basic scrub (rm -rf on the volume's contents) and makes the PV available for a new PVC. This policy is deprecated and was only supported by NFS and HostPath; dynamic provisioning is recommended instead.

Helm

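The package manager for Kubernetes: it packages an application's manifests as a chart and manages installing, upgrading and rolling back releases.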

Operator

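A method of packaging, deploying and managing a K8s application.

It abstracts the application as a custom resource and uses a custom controller, which encodes operational knowledge, to keep the actual state in line with the desired state.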

Release Strategies

Rolling upgrade: instances are replaced gradually, which limits how much traffic reaches the new version at any time.

Blue-Green Deployment

Reduces downtime during releases, allowing for quick rollback.

The system currently serving traffic is marked green, and the system being prepared for release is marked blue. The blue system does not serve external traffic and is used for pre-release testing; any issues found can be fixed directly on it. Once it meets the release criteria, traffic is switched to blue. The green system is kept until blue is confirmed stable, which is what makes a quick rollback possible, and is then decommissioned.

Canary Release (Gray Release)

There is only one system (no full duplicate environment); best suited to stateless services.

Prepare a few servers with the new version and validate it; then route part of the production traffic to the validated version; if no anomalies appear, switch all traffic over.
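One common way to send part of the traffic to the canary is a weighted split. The hypothetical sketch below hashes a request key (for example a user ID) into 100 buckets, so the same user consistently lands on the same version and stays on the canary as its percentage is raised step by step. This is an illustrative technique, not something the text above prescribes.

```python
import hashlib


def route(request_key: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of keys to 'canary' and the rest to 'stable'."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in [0, 100)
    return "canary" if bucket < canary_percent else "stable"
```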

Implementation

K8s deployment rolling update strategy.

rollingUpdate.maxSurge: the maximum number of pods that can be created above the desired replica count, as a percentage (e.g. 10%) or an absolute number (e.g. 5).

rollingUpdate.maxUnavailable: the maximum number of pods that can be unavailable during the update, as a percentage or an absolute number.
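When the values are percentages, Kubernetes converts them to pod counts against the desired replica count, rounding maxSurge up and maxUnavailable down; both default to 25%. A short Python sketch of that conversion, including the safeguard that sets maxUnavailable to 1 when both would otherwise resolve to 0 (so the rollout can make progress):

```python
from typing import Union


def _to_count(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string such as '25%' into a pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    if round_up:
        return -(-percent * replicas // 100)  # ceiling division
    return percent * replicas // 100          # floor division


def resolve_fenceposts(replicas: int, max_surge: Union[int, str] = "25%",
                       max_unavailable: Union[int, str] = "25%") -> tuple[int, int]:
    """Return (surge, unavailable) as absolute pod counts."""
    surge = _to_count(max_surge, replicas, round_up=True)
    unavailable = _to_count(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # otherwise no pod could ever be replaced
    return surge, unavailable
```

For replicas: 10 with the defaults this yields (3, 2): the rollout may run up to 13 pods and keeps at least 8 available.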

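Ingress-NGINX for traffic splitting, using its canary annotations (nginx.ingress.kubernetes.io/canary: "true" together with canary-weight, canary-by-header or canary-by-cookie).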
