As artificial intelligence transforms one industry after another, robots are gradually becoming the “core workforce” of modern factories. A robot’s performance ceiling is set not by its mechanical arms or sensors but by its “brain”: the controller. The Xinchang Haihe Laboratory’s Unmanned Systems Research Center focuses on “autonomous control” and “high-reliability embodied intelligent systems,” and has developed a domestically produced autonomous mobile robot controller based on Xinchang technology. The controller achieves domestic substitution and technological innovation across the full stack, from the underlying hardware to the upper-level algorithms, creating a new paradigm for robot control.

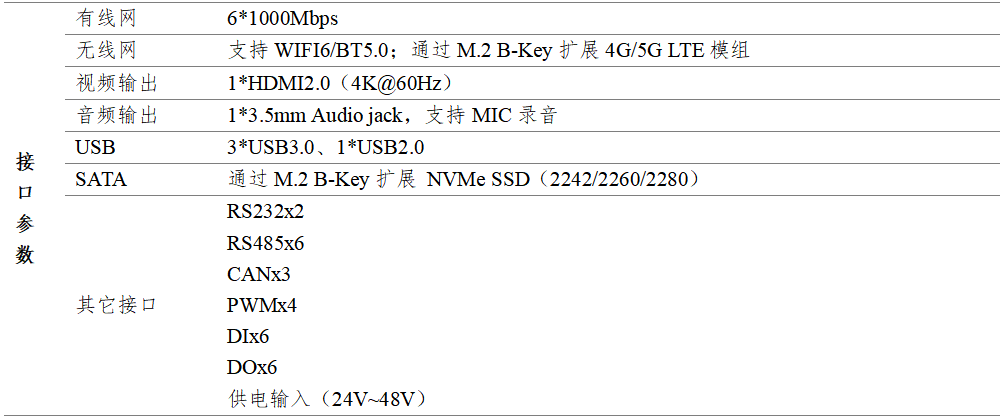

Rich Device Connectivity

The robot controller is equipped with a wide range of interfaces, from common digital I/O to high-speed Ethernet and USB. This lets robots connect easily to sensors (such as LiDAR, cameras, and inertial sensors), actuators (such as motor drivers and solenoid valves), and other auxiliary devices, which greatly improves the robot’s functional extensibility.

Multi-Operating System Support

To remain compatible with a broad range of software stacks, the controller currently supports multiple operating systems as well as deployment in virtualized containers, which isolates critical tasks from untrusted environments and prevents faults from propagating.

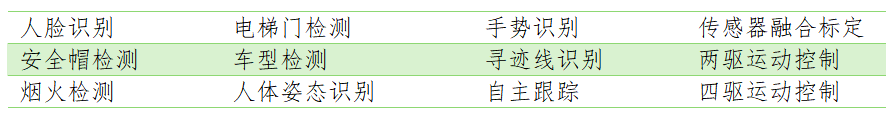

Powerful Data Processing Capabilities

The controller is built on a high-performance embedded platform that provides ample computing speed and data-processing capacity, whether for complex motion trajectory planning or for real-time sensor data processing. Its multi-core architecture keeps multi-task workloads stable and efficient. A built-in multimodal perception fusion engine that combines LiDAR, vision, and IMU data supports precise robot operation in highly dynamic environments.

With advanced motion control algorithms, the robot reaches millimeter-level motion accuracy in both linear and complex curved motion while maintaining high dynamic response. The controller can handle 4-axis coordinated control and achieves a 400% increase in motion trajectory planning speed compared with traditional PLC controllers, so the robot’s motion state can be adjusted in real time to suit different working environments and task requirements.

The built-in Neural Processing Unit (NPU) supports deploying and converting models from mainstream AI frameworks such as TensorFlow, PyTorch, Caffe, MXNet, and ONNX, and supports models such as DeepSeek R1:1.5B, LLaMA, Qwen, Qwen2, Phi-3, ChatGLM3, Gemma, InternLM2, and MiniCPM. The controller also integrates AI recognition algorithms and motion control algorithms with driving force, and its software ecosystem will continue to expand to accelerate intelligent transformation across application scenarios.
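The article does not say how the controller implements 4-axis coordinated control. As a rough illustration of what "coordinated" usually means, the Python sketch below uses one textbook approach, constant-speed linear interpolation: the axis that needs the most time sets the duration of the move, and every other axis is slowed down so that all axes start and stop together. The names `AxisMove`, `synchronize`, and `positions_at`, and all numbers, are hypothetical and are not taken from the controller's software.

```python
from dataclasses import dataclass


@dataclass
class AxisMove:
    distance: float   # signed travel for this axis (e.g. mm or degrees)
    max_speed: float  # speed limit for this axis, in the same units per second


def synchronize(moves):
    """Return (duration, speeds) so that every axis starts and stops together."""
    # The axis that needs the most time at its speed limit sets the duration.
    duration = max(abs(m.distance) / m.max_speed for m in moves)
    if duration == 0.0:
        return 0.0, [0.0 for _ in moves]
    # Every axis runs at the constant speed that covers its travel in `duration`.
    speeds = [m.distance / duration for m in moves]
    return duration, speeds


def positions_at(moves, t):
    """Displacement of each axis at time t, clamped to the span of the move."""
    duration, speeds = synchronize(moves)
    t = min(max(t, 0.0), duration)
    return [v * t for v in speeds]


if __name__ == "__main__":
    # Illustrative 4-axis move; the values are made up for the example.
    four_axes = [
        AxisMove(100.0, 50.0),
        AxisMove(-40.0, 40.0),
        AxisMove(10.0, 20.0),
        AxisMove(0.0, 30.0),
    ]
    print(synchronize(four_axes))
    print(positions_at(four_axes, 1.0))
```

A production motion planner would also respect acceleration and jerk limits (for example with trapezoidal or S-curve velocity profiles); this sketch leaves those out to keep the synchronization idea visible.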

Wide Application Fields

The robot controller can be applied in a wide range of fields, such as industrial manufacturing, logistics and warehousing, education and research, and special operations.

Industrial Manufacturing: From “Automation” to “Adaptive”

By integrating deeply with MES systems, the controller responds in real time to changes in production rhythm and reduces downtime caused by material shortages. It also improves the robot’s ability to pass through narrow aisles, so the robot can navigate accurately in small-batch, multi-variety production scenarios such as electronics SMT lines.

Logistics and Warehousing: Dynamically Optimized Smart Networks

The controller analyzes warehouse orders and path congestion in real time and dynamically plans AGV routes to improve efficiency. It also combines visual recognition with robotic arm control to grab and sort packages precisely.

Special Operations: Intelligent Survival in Extreme Environments

Nuclear power plant inspection: the AI controller drives the robot to adapt to high-radiation environments and to complete equipment inspection and fault diagnosis autonomously.

Reliable Teaching Equipment Base

With the robot controller, students can learn core topics such as motion control and sensor fusion, and can build and debug robotic systems hands-on, for example robotic arm trajectory planning and mobile robot navigation. The controller also makes it possible to combine automation, computer science, artificial intelligence, and other disciplines into comprehensive “Robots + AI” courses. For example, projects built on the controller in visual servoing, voice interaction, or multi-robot collaboration can help cultivate interdisciplinary talent.
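As an example of the kind of navigation exercise such a course might include, the Python sketch below illustrates the congestion-aware AGV routing mentioned under logistics and warehousing. It is a minimal sketch, not the controller's actual planner: it runs Dijkstra's shortest-path algorithm on a warehouse graph and inflates the cost of congested edges. The function name `plan_route`, the `penalty` factor, and the cost formula are assumptions made for illustration.

```python
import heapq


def plan_route(graph, congestion, start, goal, penalty=2.0):
    """Dijkstra's shortest path in which congested edges cost more.

    graph:      {node: {neighbor: base_travel_time}}
    congestion: {(node, neighbor): level in [0, 1]}; missing edges count as 0
    Returns (path, cost), or (None, inf) when the goal is unreachable.
    """
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for neighbor, base in graph.get(node, {}).items():
            level = congestion.get((node, neighbor), 0.0)
            # Hypothetical cost model: travel time grows linearly with congestion.
            new_cost = cost + base * (1.0 + penalty * level)
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if goal not in best:
        return None, float("inf")
    # Walk the predecessor links back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1], best[goal]


if __name__ == "__main__":
    # Made-up warehouse layout with two aisles between the dock and packing.
    warehouse = {
        "dock": {"aisle_a": 1.0, "aisle_b": 1.5},
        "aisle_a": {"packing": 1.0},
        "aisle_b": {"packing": 1.5},
        "packing": {},
    }
    print(plan_route(warehouse, {}, "dock", "packing"))
    print(plan_route(warehouse, {("aisle_a", "packing"): 1.0}, "dock", "packing"))
```

In this toy layout the planner prefers the shorter aisle when traffic is clear and switches to the longer aisle once the shorter one is marked fully congested. Re-running the planner whenever the congestion map changes is one simple way to make the routing "dynamic".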

Future Vision

As large models, edge computing, and 5G technologies become more deeply integrated, the Xinchang robot controller will advance toward higher levels of intelligence:

Cloud Collaborative Control: The controller evolves continuously through a model that pairs instant inference and real-time control at the edge with training in the cloud.

Human-Machine Interaction: The controller understands human needs through natural interaction methods (voice, gestures) in order to complete more complex collaborative tasks.

Open Ecosystem: Industry partners are invited to jointly build application ecosystems, accelerating intelligent upgrades across the industry.

Through this dual-driven approach of “technology empowerment + ecosystem construction,” the controller aims to redefine the productivity boundaries of robots.

The Xinchang robot controller uses domestically developed technology to secure a firm safety baseline, and uses artificial intelligence to help application scenarios make the leap from “manufacturing” to “intelligent manufacturing.” Choose Xinchang + AI, choose the future!

Written by: Wang Tianwei
Proofread by: Zhao Jiaqing
Reviewed by: Xu Zhiwei