The migration of AI, including generative AI, to edge devices has raised a widespread question: if cloud or data centers already offer AI inference capabilities that far exceed those of edge devices, why do we still need to deploy AI at the edge or on-device?

The answer becomes clear once we consider that automotive ADAS/AD decision-making must prioritize real-time performance: however accurate the decision, a one-second round trip from the cloud to the vehicle is enough to cause an accident. The same applies to operational safety in industrial manufacturing. AI decisions that are not delivered in real time will inevitably lead to production losses, and at that point there is little use in discussing how intelligent a predictive maintenance solution is.
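To put the one-second figure in perspective, here is a minimal sketch of the distance a vehicle covers while waiting for a decision. The 100 km/h speed and the 10 ms on-device latency are illustrative assumptions, not figures from the keynote or from any product.

```python
def distance_during_latency(speed_kmh: float, latency_s: float) -> float:
    """Metres travelled while waiting latency_s seconds for a decision."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then multiply by time


# Assumed values for illustration only.
cloud_m = distance_during_latency(100.0, 1.0)    # one-second cloud round trip
edge_m = distance_during_latency(100.0, 0.010)   # hypothetical 10 ms local inference

print(f"cloud: {cloud_m:.1f} m, edge: {edge_m:.2f} m")
```

Under these assumptions the vehicle travels nearly 28 m before a cloud decision arrives, against less than 0.3 m for the local one.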

Moreover, generative AI, which was once thought to have modest real-time requirements, has also turned out to have latency-critical applications as it is deployed. One example is the AI coaching assistant for PC gaming: these assistants guide and support players by capturing the screen and running computer vision (CV) recognition on it, presenting their output in a floating window. They have already been used in some competitive games and training events.

This is a typical generative AI application that requires real-time performance. In highly interactive gaming scenarios, it is almost impossible to complete the entire process through cloud AI. The problem becomes even more complex when “generative AI” covers not only text and image generation but also the generation of robotic arm movements and brainwaves.

Edge AI therefore has real value. At the IIC Shenzhen 2024 event, Amichai Ron, Senior Vice President of Embedded Processing at TI (Texas Instruments), stated in his keynote speech that edge AI brings computing power and data sources closer together, so that decisions are made at the device level rather than in the cloud.

“Bringing intelligence to the chip is about response speed, power consumption, and data privacy, making real-time decisions with low latency,” Amichai said. “The system needs to make decisions within microseconds to save lives.” He added: “Just as wireless connectivity has become a part of our daily lives, edge AI will continue to spread into more and more applications.”

This round of edge AI compared to the previous round of AIoT

Edge AI has been a hot topic in technology and market trends for the past two years. Amichai defined it as follows: “Edge AI refers to the ability to design and train models for specific functions in the cloud and then execute those models on embedded devices using neural network engines.”

Many other companies define edge AI more broadly, as extending local execution of AI models to every edge and endpoint outside the data center: mobile phones, PCs, cars, robots, home appliances, AIoT devices, embedded edge devices in industries such as industrial, medical, and retail, and the high-performance edge devices or clusters that enterprises build for manufacturing.

Market participants like Intel and NVIDIA may define “edge” differently from TI and Renesas, so I prefer to widen the scope of “edge” to cover all industry edges. Either way, edge AI is about performing AI model inference locally, with limited computing power and scalability.

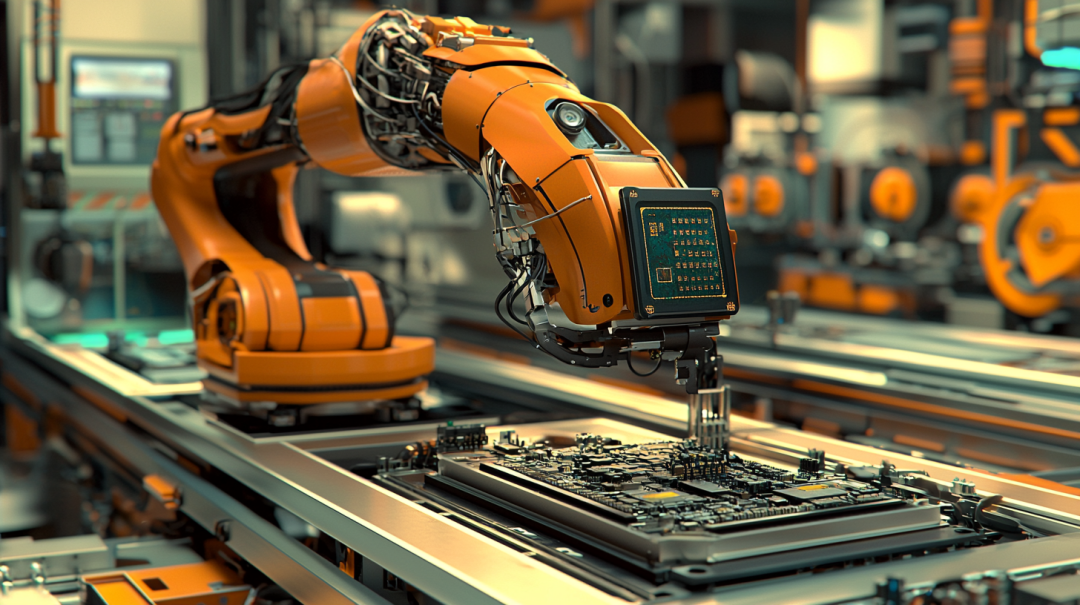

Figure 1: Robots are also typical industrial edge applications

By this definition, executing AI computations at the edge or endpoint is nothing new; it dates back to 2018 or even earlier. Back then, however, terms like “edge AI” and “TinyML” (machine learning on ultra-low-power, resource-constrained micro-devices) were not popular, and the practice was mostly called “edge computing” or “AIoT.” At that time, NVIDIA’s stock price fluctuated around $5, and generative AI had not yet exploded. So, is the recent rise of “edge AI” just a rehash of an old concept?

At the 2024 Import Expo, Lai Changqing, Global Sales and Marketing Vice President at Renesas Electronics and President of Renesas Electronics China, pointed to three compelling differences in an interview with International Electronic Business: (1) contemporary edge AI technology is significantly more mature than before; (2) the range of AI applications has become broader; (3) the edge development paradigm has shifted. None of these were under discussion in previous years.

In terms of technological maturity, “key technologies including perception, communication, motor control, human-machine interface, and security” are all different from before. Consequently, this wave of AI technology development has “spawned a larger market.” “Smart homes, autonomous driving, smart cities, etc., have become increasingly widespread,” and AI has achieved “depth and breadth of popularization” at the edge.

Regarding the change in embedded development paradigms, “traditional embedded engineers still design based on existing rules. However, artificial intelligence is designed based on data,” Lai Changqing noted. In other words, more and more embedded designs and algorithms are shifting from rule-driven to data-driven.

Another practical sign of the difference between this round of edge AI and the previous round of AIoT is the emergence of AI MCUs in the past two years, although MCUs are not the only type of chip for implementing edge AI. Companies including Renesas, Infineon, and TI are actively promoting their AI MCUs. These do not rely only on MCU + DSP/NPU acceleration; some also accelerate AI in the CPU itself through instruction set extensions, an approach already visible in AI MCUs that have launched. It would once have been hard to imagine that a microcontroller could, on its own and without an additional accelerator, process visual data captured by a camera, detect objects in the images, and do so at a respectable frame rate.

Considering a broader definition of “edge,” I believe that in addition to the three points explained by Lai Changqing, we can add a fourth: “(4) Generative AI may thrive at the edge, not only in resource-rich edges like PCs, mobile phones, and cars but also in embedded applications.” This point will be elaborated further below.
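To make the rule-driven versus data-driven distinction above concrete, the following minimal sketch contrasts a hard-coded alarm limit with one derived from recorded data. The temperature readings, the 80 °C limit, and both function names are hypothetical, chosen only for illustration; a real edge AI design would typically train a model rather than fit a single statistic.

```python
from statistics import mean, stdev


def rule_based_alarm(temp_c: float, limit_c: float = 80.0) -> bool:
    """Rule-driven: an engineer hard-codes the limit from experience."""
    return temp_c > limit_c


def fit_alarm_threshold(normal_samples: list[float], k: float = 3.0) -> float:
    """Data-driven: derive the limit from readings taken during normal operation."""
    return mean(normal_samples) + k * stdev(normal_samples)


# Hypothetical readings logged while the machine was known to be healthy.
normal = [61.0, 62.5, 60.8, 63.1, 61.7, 62.2, 60.9, 62.8]
learned_limit = fit_alarm_threshold(normal)

reading = 70.0
print("rule-based alarm:", rule_based_alarm(reading))
print("data-driven alarm:", reading > learned_limit)
```

With these made-up numbers, the fixed rule stays silent at 70 °C, while the limit fitted to the data (a little under 65 °C) flags the reading as abnormal for this particular machine.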


The Internal Logic of Industry Discussions on “Edge AI”

More specific application cases of edge AI can be found in earlier articles from International Electronic Business. For example, CITIC Pacific Special Steel Group has already adopted AI in its production processes, for instance to predict conditions inside blast furnaces and optimize process parameters in real time, increasing throughput by 15% and reducing energy consumption by 11%. This is a typical contemporary industrial edge AI application that AIoT could not have delivered a few years ago.

The above are concrete edge AI phenomena as seen from the semiconductor field. Next, we need to look into industries and applications to understand, from a more macro perspective, the essential differences between this round of “edge AI” and the previous round of “AIoT” trends.

Quoting a research institution’s market-size figures does little to convey the true market potential, so let’s look at some more specific data. In a market trend article from early 2024, McKinsey & Company described AI as the fourth industrial revolution. Of course, this description itself is not remarkable, as many technologies have been referred to as the “fourth industrial revolution” in recent years. McKinsey provided data showing that the U.S. manufacturing industry has declined by 1.4% over the past 20 years.

However, with the support of AI, digital technology, sustainable development trends, and higher skill levels, the market has regained vitality in the past five years. In these five years, U.S. industrial companies generated shareholder returns more than 400 basis points higher than in the previous 15 years.

Moreover, McKinsey’s Global Lighthouse Network has identified two key factors that have contributed to this “fourth industrial revolution.” The first is that machine intelligence technology has reached unprecedented maturity. Here, machine intelligence specifically refers to AI, which enables machines to produce in both virtual and physical worlds and endows them with specific intelligence to execute complex tasks. The second is that leading global enterprises no longer experiment with new technologies in isolated use cases but scale cutting-edge technologies across entire factories or factory clusters.

In other words, McKinsey’s research subjects have deployed AI technology at the factory level or even across factories, rather than remaining at initial small-scale trials and validations. Taking the more advanced generative AI as an example, these factories are each trialing at least one generative AI application in their processes, and some can implement, test, and iterate generative AI use cases within days or weeks. For the systematic advanced use cases of the fourth industrial revolution as McKinsey defines them, the vast majority of enterprises need less than six months, and 30% need less than three months.

New technologies can be deployed and iterated this quickly because the necessary foundations were built in the previous phase: essential data, technological infrastructure, talent reserves, and flexible operating models. This article does not intend to elaborate on what AI has specifically accomplished, and McKinsey’s research on AI in industrial manufacturing is not solely about edge AI. However, we must recognize the essential difference between the current market discussion of edge AI and the earlier AIoT topic: technological innovation, especially at the level of an “industrial revolution,” is often a long process.

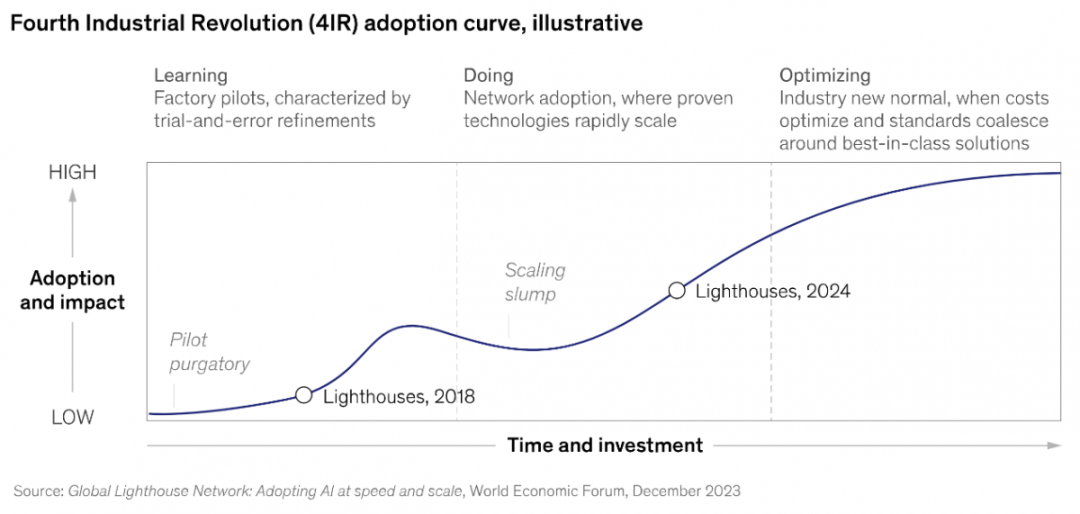

Figure 2: The S-Curve of the Fourth Industrial Revolution; Source: McKinsey & Company

Every major technological revolution follows an “S-curve” of progress. McKinsey believes that in the early stages of technological development, the market must first go through a “learning” phase of the curve. This period can be long, is full of trial and error, and requires pioneers to solve a range of practical problems.

The next stage is the “doing” part of the curve: building on the established foundational technology, enterprises begin to deploy it within their production networks. Finally comes the “optimization” phase, in which the industry starts to align on the technology through standardization, detailed protocols, and stable implementation costs.

McKinsey’s research on how the fourth industrial revolution spreads along the S-curve found that the pioneering enterprises in the Global Lighthouse Network show distinctive characteristics in their technological evolution. These enterprises were already in the “learning” phase of the AI-driven fourth industrial revolution in 2018, and this year they have entered the later stages of the “doing” phase, which allows them to build or deploy AI use cases in industrial production processes relatively quickly.

In our understanding, this is also the internal logic behind the market giving AI technology different names at different times. The vast majority of enterprises may still be far from the position of the Lighthouses: most will go through a period of regression and trial and error in the “learning” phase (the “Scaling Slump”), because expanding a new technology across an entire production network faces many challenges.

The market participants who get through this period will truly define the entire industry and even take the lead in setting technological standards, just as happened with Six Sigma in its day.

The Next Step for Generative AI at the Edge

In November 2024, at Intel’s New Productivity Technology Ecosystem Conference, Wallface Intelligence discussed the concept of “model knowledge density,” defined as model capability divided by model parameter count. According to this concept, with parameter count held constant, model capability doubles every eight months. It is said that a current model with 2 billion parameters can already reach the level of the earlier 175-billion-parameter GPT-3.

Combine this trend with Moore’s Law and its shrinking transistors: models need less computing power for a given capability while chips deliver more, and where these two converging trends meet, more complex functions can be accomplished under more limited computing power. This, in our view, is the theoretical basis for large models moving to the edge: not only do Moore’s Law and accelerated computing keep increasing computing power, but AI models and algorithms themselves also keep being optimized.

Ultimately, generative AI will run not only on AI PCs, AI mobile phones, AI robots, and more industry edges; low-power embedded applications will also benefit from it. There are already edge-oriented AI chips that can, in theory, run SLM (small language model) inference at low power. This opens up more possibilities for edge AI.
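The knowledge-density claim in this section can be checked with a little arithmetic. The sketch below assumes, as a simplification, that if capability per parameter doubles every eight months then the parameter count needed for a fixed capability halves on the same schedule. The function name is hypothetical, and the 175 billion figure is GPT-3's published parameter count.

```python
import math


def months_to_shrink(params_old: float, params_new: float,
                     doubling_months: float = 8.0) -> float:
    """Months until a model with params_new parameters matches one with params_old,
    assuming capability per parameter doubles every doubling_months."""
    return doubling_months * math.log2(params_old / params_new)


# 175 billion parameters (GPT-3) versus the 2 billion quoted in the talk.
months = months_to_shrink(175e9, 2e9)
print(f"{months:.0f} months (~{months / 12:.1f} years)")
```

Under this assumption the shrink takes roughly 52 months, a little over four years, which is broadly in line with the gap between GPT-3's release in 2020 and the November 2024 talk.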

Figure 3: The Evolution from AIoT to Edge AI

Finally, to conclude with the value of edge AI as described in Amichai’s keynote speech: “In industrial systems, edge AI can achieve predictive maintenance, making systems more stable, efficient, and cost-effective.” For solar energy systems, for example, “by using edge AI for fault detection, it can identify voltage surges and shut down the system before problems arise, enhancing the safety of solar panels. This is crucial for the widespread adoption of solar panels and indirectly increases the prevalence of sustainable energy technologies.”

As we defined it in 2023, edge AI is a reflection of the advanced stage of digital transformation across society. For that reason, I also covered edge AI as an important topic in International Electronic Business’s 2025 market trend summary: edge AI will remain a potential growth point for the entire semiconductor industry in the long term, even if it appears under yet another name as it enters a new phase of the S-curve in the next 5-10 years.
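As a rough illustration of the solar fault-detection example quoted above, the sketch below flags a voltage sample that jumps well above a slowly updated baseline, using constant memory as a small device would. It is a deliberately simple exponential-moving-average check standing in for the trained model a real edge AI product would use. The class name, the 20% surge ratio, and the voltage readings are all assumptions made for illustration.

```python
class SurgeDetector:
    """Flags a sample that jumps well above an exponential-moving-average baseline."""

    def __init__(self, alpha: float = 0.1, surge_ratio: float = 1.2):
        self.alpha = alpha              # smoothing factor for the baseline
        self.surge_ratio = surge_ratio  # trip when sample > ratio * baseline
        self.baseline = None            # set from the first sample

    def update(self, volts: float) -> bool:
        """Return True if this sample should trigger a shutdown."""
        if self.baseline is None:
            self.baseline = volts
            return False
        if volts > self.surge_ratio * self.baseline:
            return True  # leave the baseline untouched by the surge
        self.baseline += self.alpha * (volts - self.baseline)
        return False


# Hypothetical string-voltage readings with one surge.
detector = SurgeDetector()
readings = [48.0, 48.2, 47.9, 48.1, 61.0, 48.0]
trips = [detector.update(v) for v in readings]
print(trips)
```

Only the 61.0 V sample trips the detector here. Because the decision is made on the device itself, the shutdown does not depend on a network round trip.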
