
Electronic Enthusiasts report (by Li Wanwan): With the explosive growth of IoT devices, massive amounts of data are accumulating at the edge, and edge AI is entering a period of rapid growth. Gartner predicts that by 2023, more than 50% of large enterprises will have deployed at least six edge computing applications for IoT or immersive experiences, and that by 2025, 75% of data will be generated at the edge.

AI chips are the core of edge AI and the hardware foundation on which AI runs. In recent years, ADI, a global leader in high-performance analog technology, has been working to push artificial intelligence to the edge and to bring ML/DL into edge devices. On the product side, ADI has launched the MAX78000, a low-power microcontroller with an integrated neural network accelerator, which has become an important example of combining high-efficiency AI processing with an ultra-low-power microcontroller.

Performance Advantages and Applications of MAX78000

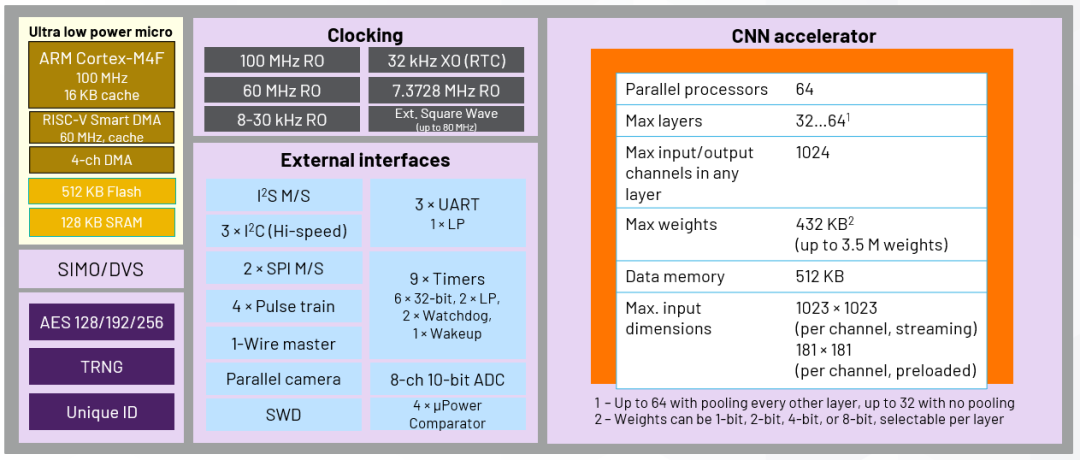

The MAX78000 is an ultra-low-power neural network microcontroller that integrates a hardware CNN accelerator, dual microcontroller cores, memory, a single-inductor multiple-output (SIMO) power supply, and multiple communication interfaces. Li Yong, senior business manager at ADI, told Electronic Enthusiasts in an interview that the product strikes a critical balance among computing power, power consumption, latency, and integration.

Specifically, the MAX78000 has a dedicated CNN accelerator with 64 CNN processors. It supports up to 64 convolutional layers and 1024 channels, and has 442KB of weight storage (up to 3.5 million weight bits). AI networks can be updated on the fly, and models can be trained with standard toolsets such as PyTorch and TensorFlow.
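The weight-storage figures can be sanity-checked with a little arithmetic. The sketch below assumes 1 KB = 1024 bytes and, as an illustration only, that weights are quantized to 1, 2, 4, or 8 bits each; the function names are hypothetical. Under those assumptions, 442KB works out to about 3.6 million bits (roughly 3.45 × 2^20), which is in line with the rounded 3.5 million figure quoted above.

```python
# Back-of-the-envelope check of the weight-memory figures quoted in the article.
# Assumptions (not from the article): 1 KB = 1024 bytes, and weights may be
# quantized to 1, 2, 4, or 8 bits each.

WEIGHT_MEMORY_KB = 442  # figure quoted in the article
BITS_PER_BYTE = 8


def total_weight_bits(memory_kb: int) -> int:
    """Return the number of bits available in the weight memory."""
    return memory_kb * 1024 * BITS_PER_BYTE


def max_weights(memory_kb: int, bits_per_weight: int) -> int:
    """Return how many weights of the given bit width fit in the memory."""
    if bits_per_weight <= 0:
        raise ValueError("bits_per_weight must be positive")
    return total_weight_bits(memory_kb) // bits_per_weight


if __name__ == "__main__":
    print(f"total weight bits: {total_weight_bits(WEIGHT_MEMORY_KB):,}")
    for width in (1, 2, 4, 8):
        count = max_weights(WEIGHT_MEMORY_KB, width)
        print(f"{width}-bit weights that fit: {count:,}")
```

The practical point is that the number of parameters a model can hold on chip scales inversely with the chosen weight precision, so a network that does not fit at 8 bits may fit at 4 bits or lower.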

The MAX78000 has two MCU cores for system control: an Arm Cortex-M4F and a 32-bit RISC-V. The Arm Cortex-M4F runs at 100MHz and lets customers write any system management code they need. The RISC-V core stands out for its ability to load data into the neural network accelerator quickly and at low power. Users can feed data into the convolutional neural network engine from either microcontroller core.

The MAX78000 also offers low power consumption, low latency, low cost, and high integration. On power, combining the hardware CNN accelerator with ultra-low-power dual microcontroller cores can cut power consumption by over 99% compared with MCU + DSP solutions. Compared with software solutions running on low-power microcontrollers, the MAX78000 runs AI inference 100 times faster once configuration and data loading are complete, while consuming less than 1% of the power.

On latency, the custom hardware accelerator for edge AI delivers higher data throughput than pure MCU solutions, for a speedup of more than 100 times. On cost, the MAX78000 is far cheaper than FPGA solutions and slightly more expensive than conventional MCUs, but it can handle more complex tasks. On integration, combining the neural network accelerator with a low-power microcontroller makes it possible to implement complex, real-time AI functions in battery-powered IoT devices.

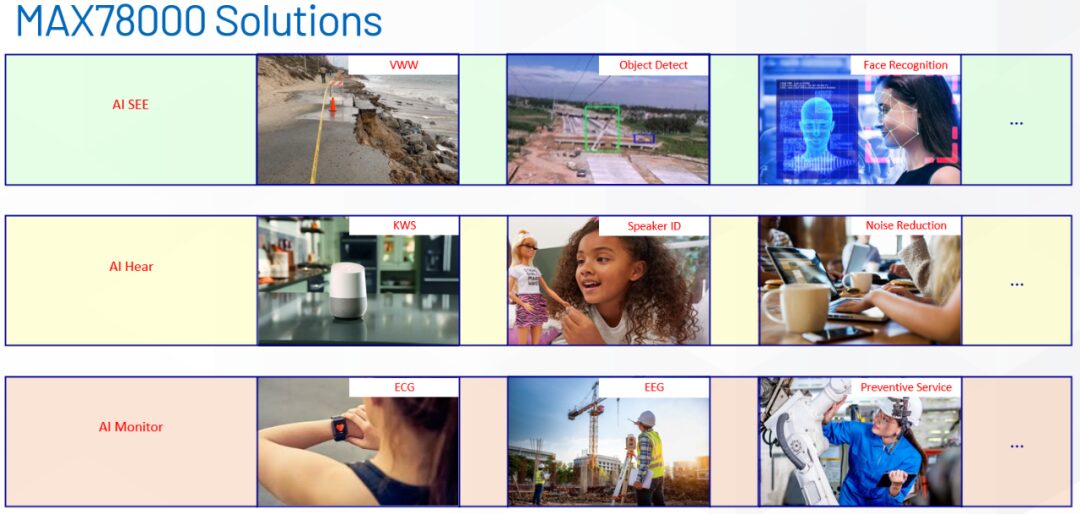

According to Li Yong, the main application areas of the MAX78000 focus on three aspects: visual recognition, sound recognition, and monitoring recognition. Visual recognition mainly includes VGA image analysis, object detection, and facial recognition; sound recognition mainly includes voice wake-up, voice authentication, and noise reduction; monitoring recognition mainly includes heart rate monitoring, EEG monitoring, and industrial prevention.

He further explained that AI is a powerful pattern recognition technology that requires large numbers of matrix calculations, along with substantial storage space and system power. Because edge AI executes locally on devices that typically run on batteries, it requires a balance among system power consumption, computational speed, and device cost.

ADI’s MAX78000 AI MCU meets these requirements through its unique architecture, making it an ideal choice for applications such as machine vision, facial recognition, target detection and classification, time-series data processing, and audio processing. The MAX78000 is already widely used in areas such as forest fire monitoring, geological disaster monitoring, and smart homes.

In Li Yong’s view, AI MCUs like the MAX78000 will go on to expand into emerging scenarios such as AIoT, sports and health, telemedicine, autonomous driving, robotics and automation, industrial manufacturing, smart buildings, parks, and cities, and safety monitoring across various industries. Examples include image recognition of various kinds, helmet cameras, voice control for hearing aids, and predictive maintenance and safety monitoring for wind power equipment.

How MAX78000 Solves the Deployment Challenges of Edge AI

From the chip perspective, the deployment challenges of edge AI largely come down to computing power, algorithms, power consumption, latency, integration, and cost. On computing power, edge nodes usually have far fewer AI computing resources than cloud servers, which is often not enough for edge AI applications. Li Yong stated that ADI’s MAX78000 AI MCU is equipped with a dedicated neural network accelerator, which significantly increases edge computing power at the hardware level.

On algorithms, heterogeneous hardware and software, interface limitations, and other factors often make algorithm adaptation difficult. For applications such as voice and facial recognition, the MAX78000 offers extensive support in the form of product solutions, interface configurations, optimized tools, and an ecosystem. Collecting recognition data and building and training mathematical models are crucial steps, so ADI provides demo programs for voice recognition and face ID that customers can download, study, and modify as a starting point. ADI also has highly experienced third-party ecosystem partners who can help with model training and data collection.

On power consumption, many edge AI applications drive device power consumption up. With its CNN accelerator and dual-core processor architecture, the MAX78000 can make intelligent decisions locally, without spending large amounts of power to upload data. It also supports efficient on-chip power management through an integrated single-inductor multiple-output (SIMO) switch-mode power supply, which reduces device power consumption and extends the battery life of IoT devices.

On latency, when the data monitored by edge devices has to be transmitted to the cloud, limited bandwidth can cause intolerable delays. The MAX78000 processes the data locally instead, and compared with conventional software solutions it speeds up AI inference by 100 times once configuration and data loading are complete.

On integration, edge AI applications such as wearable devices demand highly integrated, compact products, but solutions based on an MCU, GPU, or FPGA are often too large to meet these size requirements. These solutions also usually need external memory and PMICs, which creates significant challenges in cost, size, and power consumption. The MAX78000 manages to be small yet full-featured and efficient.

On cost, cloud-based intelligence may only suit large enterprises, where tens or hundreds of thousands of customers share a single server and so spread the cost of the cloud. That model is clearly unrealistic for many edge AI devices. The MAX78000, by contrast, can implement many basic control applications on its own, making autonomous intelligence at the edge more cost-effective.

Consider a few cases. Forest fires and disasters such as road, railway, or dam collapses often occur in remote locations where network bandwidth is limited, yet fast decision-making and early warning are crucial, so the determination has to be made quickly at the edge. Such applications are highly time-sensitive. Traditional cloud monitoring may require sending a set of images to the cloud, which demands a lot of network traffic. Edge computing sits closer to the data source, so it can provide low-latency, highly reliable service while processing data in real time.
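To get a feel for why sending images over a constrained link is costly, here is a rough sketch comparing the time needed to upload a set of raw VGA frames with the time needed to send a short alert after a local decision. Every number in it is a hypothetical assumption chosen for illustration (16 bits per pixel, five frames per event, a 50 kbit/s link, a 32-byte alert), and it ignores compression and protocol overhead.

```python
# Rough comparison: upload raw images to the cloud vs. send a short alert
# after deciding locally. All constants below are hypothetical assumptions.

VGA_WIDTH, VGA_HEIGHT = 640, 480
BYTES_PER_PIXEL = 2        # assumption: 16-bit pixels (e.g. RGB565)
IMAGES_PER_EVENT = 5       # assumption: frames sent per monitoring event
LINK_BITS_PER_SECOND = 50_000  # assumption: constrained remote link
ALERT_BYTES = 32           # assumption: size of a compact alarm message


def raw_image_bytes(width: int = VGA_WIDTH, height: int = VGA_HEIGHT,
                    bytes_per_pixel: int = BYTES_PER_PIXEL) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def upload_seconds(payload_bytes: int, link_bits_per_second: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and retransmissions."""
    if link_bits_per_second <= 0:
        raise ValueError("link speed must be positive")
    return payload_bytes * 8 / link_bits_per_second


if __name__ == "__main__":
    image_set = raw_image_bytes() * IMAGES_PER_EVENT
    cloud_time = upload_seconds(image_set, LINK_BITS_PER_SECOND)
    alert_time = upload_seconds(ALERT_BYTES, LINK_BITS_PER_SECOND)
    print(f"image set: {image_set:,} bytes, upload ~{cloud_time:.1f} s")
    print(f"alert: {ALERT_BYTES} bytes, upload ~{alert_time * 1000:.2f} ms")
```

Real deployments would compress images and use different links, so the absolute numbers will differ, but the gap between shipping raw data and shipping a decision stays large.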

Because the MAX78000 has a dual-core processor architecture and an independent CNN accelerator, it significantly increases AI computing power at the edge. With autonomous recognition at the monitoring end, the MAX78000 can quickly analyze an emergency, make a decision, and raise an alarm, helping to prevent more severe losses.

In addition, the MCU and FPGA solutions common on the market today struggle in low-power, battery-powered edge applications, where the operational cost of replacing batteries can exceed the cost of the device itself. Low power consumption has therefore become a key requirement for many edge intelligence scenarios.

The market urgently needs a new generation of ultra-low-power edge AI solutions such as the MAX78000. First, the MAX78000's ability to make decisions at the edge avoids the high power consumption caused by frequent data collection and transmission. Second, the MAX78000 provides efficient on-chip power management through an integrated single-inductor multiple-output (SIMO) switch-mode power supply, which maximizes the battery life of IoT devices and reduces costs.
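The effect of avoiding frequent transmissions on battery life can be estimated with a simple duty-cycle model. The sketch below is illustrative only: the `Phase` type, the currents, the durations, and the battery capacity are all hypothetical placeholders rather than MAX78000 measurements, and the model ignores battery self-discharge and regulator losses.

```python
# Duty-cycle battery-life estimate for a device that sleeps most of the time.
# All currents, durations, and capacities used here are hypothetical.
from dataclasses import dataclass
from typing import Sequence

SECONDS_PER_HOUR = 3600.0


@dataclass(frozen=True)
class Phase:
    """One active phase of the device's hourly schedule."""
    name: str
    current_ma: float        # average current drawn during the phase
    seconds_per_hour: float  # time spent in the phase each hour


def average_current_ma(phases: Sequence[Phase], sleep_current_ma: float) -> float:
    """Time-weighted average current over one hour, including sleep."""
    active_seconds = sum(p.seconds_per_hour for p in phases)
    if active_seconds > SECONDS_PER_HOUR:
        raise ValueError("phases exceed one hour")
    active_charge = sum(p.current_ma * p.seconds_per_hour for p in phases)
    sleep_charge = sleep_current_ma * (SECONDS_PER_HOUR - active_seconds)
    return (active_charge + sleep_charge) / SECONDS_PER_HOUR


def battery_life_days(capacity_mah: float, avg_current_ma: float) -> float:
    """Ideal battery life, ignoring self-discharge and conversion losses."""
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg_current_ma / 24.0


if __name__ == "__main__":
    sleep_ma = 0.01  # hypothetical sleep current
    upload_everything = [Phase("radio upload", 100.0, 60.0)]
    decide_locally = [Phase("local inference", 10.0, 6.0),
                      Phase("radio alert", 100.0, 0.6)]
    for label, phases in (("upload raw data", upload_everything),
                          ("decide at the edge", decide_locally)):
        avg = average_current_ma(phases, sleep_ma)
        days = battery_life_days(1000.0, avg)
        print(f"{label}: avg {avg:.4f} mA, ~{days:.0f} days on 1000 mAh")
```

Substituting measured currents and real duty cycles for the placeholder values turns this into a first-order estimate for a specific design.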

Future Trends of Edge AI Chips

Edge AI is currently rising fast, and 2022 may prove to be its breakout year. Market research firm Forrester predicts that investment in intelligent infrastructure will increase by 40% in 2022.

According to ADI, the explosive growth of terminal devices, combined with the opportunities created by the digital transformation of industries, means that more and more edge devices will need some ability to "learn", so that they can analyze, train, optimize, and update models locally based on newly collected data. This will place a series of new requirements on edge devices and on the AI implementation system as a whole. On the one hand, edge AI chips, as the underlying hardware for artificial intelligence, need to work in coordination with other components to maximize AI efficiency. On the other hand, software is just as central to AI as hardware. Future AI chips must therefore have one important characteristic: the ability to change their functions dynamically in real time to meet the ever-changing computational needs of software, an approach known as "software-defined chips."

Disclaimer: This article is an original work of Electronic Enthusiasts. Please cite the source when reprinting.
