My recent work has involved a lot of testing, although I am no expert in this specialized field. Faced with thousands of test cases, a wide range of test equipment and tools, and the complex curves and charts that engineers present, I found it overwhelming and hard to follow, which set me thinking…

01

Differences Between System and Software Testing

In ECU development, system testing and software testing are usually treated as distinct activities. They differ in the following respects:

- Test object: Software testing focuses on the software integrated into the chip; system testing targets the ECU as a whole, which includes software, hardware, and calibration.
- Test purpose: Software testing aims to find errors in the software and to show that the software meets its requirements; system testing aims to find errors in the ECU, which comprises software, hardware, calibration, and structural components, and to show that the system meets its requirements.
- Test environment: Software testing should be as independent of hardware as possible, using simulation tools such as CANoe to send signals; system testing should be as realistic as possible, with real wiring harnesses, real loads, etc.

02

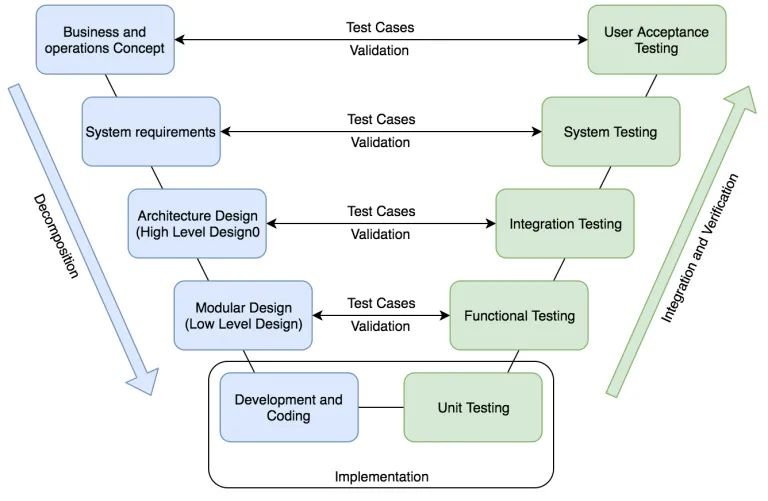

Order of Testing

Ideally, testing should start at the bottom layer of the V-model and proceed upwards in order. In practice this ideal is rarely achievable, because projects generally do not have enough time for such a strictly sequential, waterfall-style approach.

We therefore need to follow a few guiding principles and run test levels in parallel where appropriate.

- Unit-level testing, although not a typical test activity, should ideally be completed first. It can even be enforced through the toolchain and code generation, so that code cannot be generated unless certain conditions are met. Covering part of the code logic early greatly reduces painful rework later.
- Smoke testing, or basic functionality testing, has second priority, since basic usability is a fundamental requirement for developers.
- Software functional testing, system integration testing, and system testing can be run partly in parallel, taking into account architectural changes, historical interface issues, and other project realities.
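The toolchain gate mentioned in the first point could look roughly like the sketch below. It is only an illustration, not a real toolchain API: `UnitTestReport`, `blocking_reasons`, `may_generate_code`, and the 90% coverage threshold are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class UnitTestReport:
    """Unit-level test result for one module (hypothetical structure)."""
    module: str
    passed: int
    failed: int
    coverage: float  # assumed coverage metric in the range 0.0 to 1.0


def blocking_reasons(report, min_coverage=0.9):
    """Return the reasons why code generation should be blocked."""
    reasons = []
    if report.passed + report.failed == 0:
        reasons.append("no unit tests executed")
    if report.failed > 0:
        reasons.append(f"{report.failed} unit test(s) failed")
    if report.coverage < min_coverage:
        reasons.append(
            f"coverage {report.coverage:.0%} below {min_coverage:.0%}"
        )
    return reasons


def may_generate_code(report, min_coverage=0.9):
    """Code generation is allowed only when nothing blocks it."""
    return not blocking_reasons(report, min_coverage)
```

A build script could call `may_generate_code` before invoking the code generator and print the output of `blocking_reasons` when the gate fails.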

03

Testing Entry Criteria

Testing cannot be started at will. Certain conditions must be met before anything is handed over to the testing team; these conditions are the testing entry criteria. Such rules are crucial when large teams collaborate.

- Lower-level tests have been executed in the required order, and their results meet the requirements for entering higher-level testing.
- Hardware is in place, such as ECU engineering samples, wiring harnesses, peripheral sensors, and counterpart devices.
- The test bench is available and calibrated.
- Test input information is complete, such as software and hardware versions, configuration parameters, test plans, and delivery information.
- Calibration is in place.
- Documentation (requirements, test specifications, etc.) is complete, reviewed, and baselined.
- The software has been delivered according to the process and reviewed.
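The entry criteria above can be treated as an explicit checklist that is evaluated at handover. The following is a minimal sketch under that assumption; the class `EntryChecklist` and its field names are invented for this example and simply mirror the seven points in the list.

```python
from dataclasses import dataclass, fields


@dataclass
class EntryChecklist:
    """One flag per entry criterion (hypothetical field names)."""
    lower_level_tests_passed: bool
    hardware_in_place: bool
    test_bench_calibrated: bool
    test_information_complete: bool
    calibration_in_place: bool
    documents_baselined: bool
    software_delivery_reviewed: bool


def unmet_criteria(checklist):
    """Return the names of all criteria that are not yet fulfilled."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


def entry_allowed(checklist):
    """Testing may start only when every criterion is fulfilled."""
    return not unmet_criteria(checklist)
```

Returning the list of unmet criteria, rather than a bare yes or no, gives the delivering team something concrete to fix before the next handover attempt.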

04

Testing Exit Criteria

Testing cannot just start or stop at will; we also need exit criteria.

Exit actually has two meanings: the first is normal completion, and the second is abnormal termination.

4.1 Normal Completion

Testing is generally considered normally complete when the following conditions are met:

- All planned tests have been executed as scheduled.
- Abnormal items in the test results have been analyzed and reviewed.
- Discovered bugs have been entered into the corresponding ALM tool.

4.2 Abnormal Termination

Apart from terminations mandated by the process, most terminations are driven by cost and time: in some situations, continuing to test serves no purpose.

- The quality of the software or ECU is too poor to support testing.
- After testing has begun, it turns out that the entry criteria are not met.
- If discovered bugs affect the validity of certain tests, those tests must be halted.
- If certain bugs have to be fixed and retested, the affected cases should be deferred and rerun after the fix.
- If new hardware or software will be available soon (what counts as "soon" must be defined), all testing can be halted until it arrives.
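The two kinds of exit can be summarized in a small decision function. This is a simplified sketch: the names `ExitDecision` and `exit_decision` and all parameters are assumptions for illustration, and it models only a full stop of the test phase, not the halting of individual test cases.

```python
from enum import Enum


class ExitDecision(Enum):
    CONTINUE = "continue testing"
    COMPLETE = "normal completion"
    TERMINATE = "abnormal termination"


def exit_decision(
    *,
    all_planned_executed,
    anomalies_reviewed,
    bugs_logged,
    entry_criteria_violated=False,
    quality_blocks_testing=False,
    new_release_imminent=False,
):
    """Decide whether the test phase ends, and in which way."""
    # Abnormal termination takes precedence over everything else.
    if entry_criteria_violated or quality_blocks_testing or new_release_imminent:
        return ExitDecision.TERMINATE
    # Normal completion requires all three conditions from section 4.1.
    if all_planned_executed and anomalies_reviewed and bugs_logged:
        return ExitDecision.COMPLETE
    return ExitDecision.CONTINUE
```

Whether `new_release_imminent` is true depends on the project's own definition of "soon", which the function deliberately leaves to the caller.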

05

Selection of Test Cases

Before testing starts, we usually have a test case library. Fully testing every version is costly and slow, so for each version a subset of cases has to be selected; this is what we call Delta testing. The selection typically takes the following factors into account:

- Product risk level: for products with a higher ASIL level, certain critical functionality tests must always be executed.
- Implementation status of features.
- Known hardware and software changes.
- Workload assessment.
- Testing status of previous versions and related versions.
- Impact of changes on unchanged parts.
- Scheduling strategies between different project variants.

Because these decisions are subjective and experience-based, each test case that is not executed should ideally have a documented reason.
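A Delta selection that also records why each case was skipped might be sketched as follows. It covers only three of the factors above (safety relevance, known changes, and impact on unchanged parts); `LibraryCase`, `select_delta`, and the feature names in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LibraryCase:
    """One entry of the test case library (hypothetical structure)."""
    case_id: str
    feature: str
    safety_critical: bool = False  # e.g. linked to a high-ASIL requirement


def select_delta(cases, changed_features, impacted_features=frozenset()):
    """Split the library into selected cases and skipped cases with reasons."""
    selected = []
    skipped = {}  # case_id -> documented reason for not executing
    for case in cases:
        if (
            case.safety_critical
            or case.feature in changed_features
            or case.feature in impacted_features
        ):
            selected.append(case)
        else:
            skipped[case.case_id] = (
                f"feature '{case.feature}' unchanged and not impacted"
            )
    return selected, skipped
```

For example, with a library containing a wiper case, a lighting case, and a safety-critical braking case, `select_delta(cases, changed_features={"wiper"})` would select the wiper and braking cases and record a reason for skipping the lighting case.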

In addition to Delta testing, a strategy for full functional testing should also be defined, for example: at least once a year, once before SOP, once after a platform software upgrade, once after more than 5 releases, and once whenever the hardware changes…
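The example triggers for a full test run can be written down as explicit rules. The sketch below assumes that "once a year" means more than 365 days since the last full run; `ReleaseContext` and `full_test_triggers` are names invented for this illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ReleaseContext:
    """Facts about the upcoming release (hypothetical structure)."""
    today: date
    last_full_test: date
    releases_since_full_test: int
    before_sop: bool = False
    platform_software_upgraded: bool = False
    hardware_changed: bool = False


def full_test_triggers(ctx):
    """Return every rule that demands a full functional test run."""
    triggers = []
    if (ctx.today - ctx.last_full_test).days > 365:
        triggers.append("more than one year since the last full test")
    if ctx.before_sop:
        triggers.append("release precedes SOP")
    if ctx.platform_software_upgraded:
        triggers.append("platform software upgraded")
    if ctx.releases_since_full_test > 5:
        triggers.append("more than 5 releases since the last full test")
    if ctx.hardware_changed:
        triggers.append("hardware changed")
    return triggers
```

An empty result means that Delta testing is sufficient for this release under the assumed rules.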

06

Test Management

Testing is complex and time-consuming, so it has to be managed.

6.1 Test Management

The goal of test management is to obtain the test deliverables (test specifications, test execution, test reports, test reviews, defect submissions, etc.) according to the test plan, and to ensure that these deliverables meet the milestones defined in the project schedule.

6.2 Test Resources

A major prerequisite for obtaining the deliverables on time is that sufficient test resources are available, including personnel capabilities, testing equipment, test samples, etc.

6.3 Test Scheduling

To determine possible exit conditions as early as possible, tests with a higher probability of failure must be executed first, for example, in the following order.

- Bug retesting
- Testing new features
- Testing modified or optimized features
- Testing unchanged features (i.e., regression testing)
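This ordering can be expressed as a simple priority sort. The sketch below assumes each test is tagged with the kind of change it covers; `ChangeType` and `schedule` are names made up for this example.

```python
from enum import IntEnum


class ChangeType(IntEnum):
    """Lower value means earlier execution, following the order above."""
    BUG_RETEST = 0
    NEW_FEATURE = 1
    MODIFIED_FEATURE = 2
    UNCHANGED_FEATURE = 3  # regression testing


def schedule(tests):
    """Sort (name, ChangeType) pairs so the likeliest failures run first.

    sorted() is stable, so tests of the same type keep their original order.
    """
    return sorted(tests, key=lambda test: test[1])
```

A project with better failure data could replace the fixed enum order with an estimated failure probability per test and sort on that instead.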

6.4 Test Planning and Monitoring

Based on the project schedule and the results of “test assessment” and “test scheduling,” we can set a deadline for completing the testing phase and derive a detailed plan from it.

The level of detail required for the plan depends on the complexity of the project and the number of testing personnel involved.

07

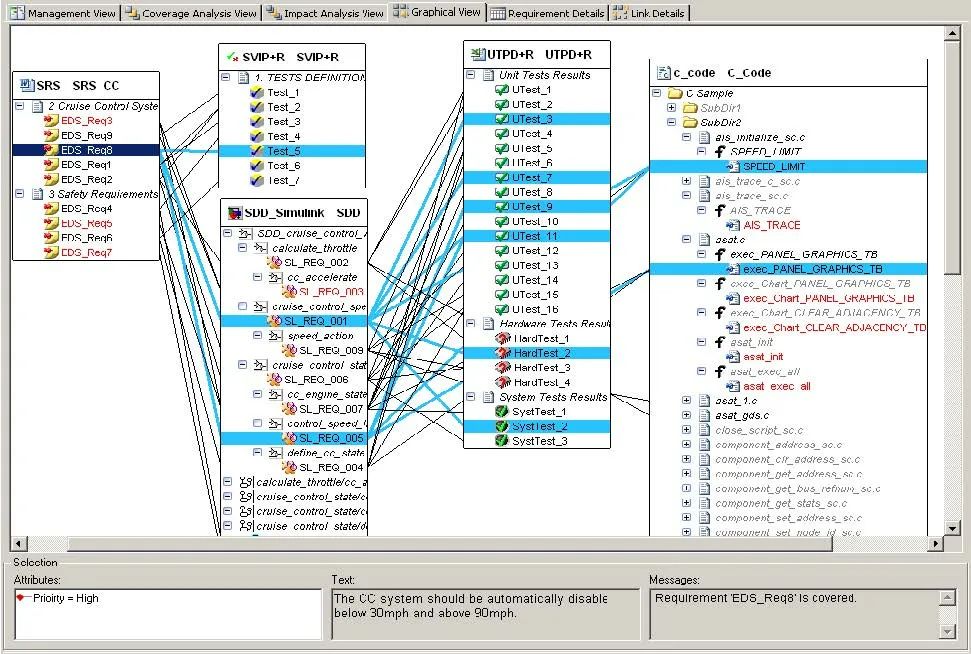

Bidirectional Traceability and Test Coverage

Every testable system or software requirement must be covered by at least one test case. To check test coverage, the traceability between requirements, test specifications, and test reports can be maintained with a suitable requirements coverage tool, such as Reqtify.

If test coverage is incomplete, the gap must be made visible at the project level, and a risk assessment and deviation approval must be completed.
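The coverage check itself is straightforward once the trace links are available as data. The sketch below is independent of any particular tool and does not reflect how Reqtify works internally; `check_traceability` and the identifier formats are assumptions for illustration.

```python
def check_traceability(requirement_ids, links):
    """Check both directions of traceability.

    requirement_ids: iterable of testable requirement identifiers.
    links: dict mapping each test case id to the requirement ids it covers.
    Returns (uncovered requirements, test cases without a valid requirement,
    coverage ratio).
    """
    requirement_ids = set(requirement_ids)
    covered = {req for reqs in links.values() for req in reqs}
    # Forward direction: requirements that no test case covers.
    uncovered = sorted(requirement_ids - covered)
    # Backward direction: test cases that trace to no known requirement.
    untraced = sorted(
        case for case, reqs in links.items()
        if not set(reqs) & requirement_ids
    )
    if requirement_ids:
        ratio = len(requirement_ids & covered) / len(requirement_ids)
    else:
        ratio = 1.0
    return uncovered, untraced, ratio
```

Any entry in the first list is a coverage gap that would need to be reported at project level; entries in the second list point to test cases whose purpose should be clarified.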

08

Final Thoughts

Testing is a very complex topic that deserves repeated study. With limited time and energy, this article offers only a brief summary of the key points.

The End