He Tengpeng, Zhang Rongfen, Liu Chao, Fang Lenan, Liu Yuhong

(Guizhou University, School of Big Data and Information Engineering, Guiyang, Guizhou 550025, China)

Abstract: This paper proposes a design for a smart seeing glasses system based on machine vision. The hardware platform is built around Samsung’s S5PV210 processor (ARM Cortex-A8 architecture) running Linux, and comprises six core functional modules: binocular image acquisition, GPS positioning, voice broadcasting, GSM messaging, voice calling, and wireless transmission. Deep learning algorithms running on a remote cloud server recognize the target scene, and the system guides the blind user in real time through voice prompts. Test results indicate that, in the test environment, the system navigates blind users correctly and also has a degree of target recognition capability, assisting them with simple object classification. The system additionally provides auxiliary functions such as GPS positioning, voice calling, and GSM messaging.

Keywords: machine vision; Cortex-A8; binocular image acquisition; intelligent recognition

Classification Number: TN929

Document Identification Code: A

DOI: 10.16157/j.issn.0258-7998.2017.04.015

Citation Format: He Tengpeng, Zhang Rongfen, Liu Chao, et al. Design of smart seeing glasses based on machine vision[J]. Application of Electronic Technique, 2017, 43(4): 58-61.

1 Introduction

According to World Health Organization statistics, there are approximately 78 million blind individuals worldwide, 90% of whom live in developing countries. China accounts for 18% of the global total, with as many as 14 million blind individuals. Blind people are a vulnerable group in society, and visual impairment and eye disease bring many inconveniences to their lives. Moreover, as the population has continued to age in recent years, the number of visually impaired individuals has risen sharply, so ensuring that blind and visually impaired people can travel safely and effectively has become particularly important. This paper therefore designs a smart seeing glasses system based on machine vision that helps blind individuals avoid obstacles on the road safely and effectively, improving their travel safety as far as possible. Compared with white canes, which offer limited assistance, and guide dogs, which are expensive, the machine vision-based smart seeing glasses system is more competitive.

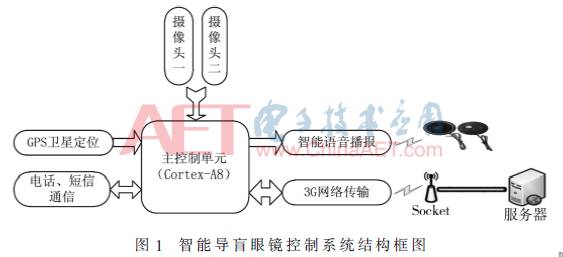

2 Overall Design of the Smart Seeing Glasses Control System

The smart seeing glasses control system consists of two main parts: the front-end embedded acquisition and transmission system and the remote cloud platform server. The embedded system is built around Samsung’s S5PV210 processor (ARM Cortex-A8 architecture) running a Linux kernel, and integrates the binocular image acquisition, GPS positioning, voice broadcasting, GSM messaging, voice calling, and wireless transmission modules that form the hardware platform of the glasses. It is mainly responsible for information acquisition, transmission, and intelligent guidance. The cloud platform server is the remote data processing center of the glasses. An Alibaba Cloud server is used, on which deep learning, binocular ranging, and related algorithms are deployed to perform image recognition, distance detection, and orientation judgment for the target scene in front of the glasses. The server also hosts a GPS satellite data matching platform, which allows the wearer to be located effectively in real time. The overall block diagram of the system is shown in Figure 1.

3 Hardware Design of the Smart Seeing Glasses Control System

3.1 Design of the Binocular Image Acquisition Module

The binocular image acquisition module uses two identical CMOS high-definition cameras to capture scene information in front of the smart seeing glasses, helping blind individuals obtain information about targets and corresponding scenes ahead.
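The paper does not give the ranging formula applied to the two camera images, so the following is only a minimal sketch of the standard rectified-stereo relation Z = f·B/d, which a binocular ranging algorithm on the server might use. The function names, the focal length, the baseline, and the 10° "ahead" tolerance are all illustrative assumptions, not values from the system.

```python
import math


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Distance Z = f * B / d (metres) for a rectified stereo pair.

    focal_px:     focal length in pixels (assumed known from calibration)
    baseline_m:   distance between the two camera centres in metres
    disparity_px: horizontal pixel shift of the target between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite distance")
    return focal_px * baseline_m / disparity_px


def bearing_deg(x_px, cx_px, focal_px):
    """Horizontal angle of the target relative to the optical axis.

    Negative values are to the left of the wearer, positive to the right.
    """
    return math.degrees(math.atan2(x_px - cx_px, focal_px))


def describe_direction(angle_deg, tolerance_deg=10.0):
    """Map an angle to a coarse spoken direction (tolerance is hypothetical)."""
    if abs(angle_deg) <= tolerance_deg:
        return "ahead"
    return "left" if angle_deg < 0 else "right"


if __name__ == "__main__":
    # Hypothetical calibration: 700 px focal length, 6 cm baseline.
    distance = depth_from_disparity(700.0, 0.06, 21.0)
    angle = bearing_deg(500.0, 320.0, 700.0)
    print(f"{distance:.2f} m, {describe_direction(angle)}")
```

Because the distance is inversely proportional to disparity, the estimate becomes coarser for far targets, which is one reason the two cameras need to be identical and rigidly mounted.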

3.2 Design of the GPS Positioning Module

The Global Positioning System (GPS) provides low-cost, high-precision three-dimensional positioning for users worldwide and supports all-weather, omnidirectional, real-time positioning. The system uses the NEO-6M module from the Swiss company u-blox as the core unit of the GPS module, primarily to acquire the geographical coordinates (latitude and longitude) of the blind individual’s location in real time.
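GPS receivers such as the NEO-6M typically report position as NMEA 0183 text sentences. The paper does not say which sentence the system reads, so the sketch below assumes the common GGA sentence and shows how its latitude and longitude fields could be converted to decimal degrees. The function names and the sample coordinates are hypothetical.

```python
def nmea_checksum(body):
    """XOR of every character between '$' and '*' in an NMEA sentence."""
    checksum = 0
    for ch in body:
        checksum ^= ord(ch)
    return checksum


def _to_decimal_degrees(value, hemisphere, degree_digits):
    """Convert NMEA (d)ddmm.mmmm text to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence):
    """Return (latitude, longitude) from a GGA sentence, or None if unusable."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, given = sentence[1:].partition("*")
    try:
        if int(given[:2], 16) != nmea_checksum(body):
            return None  # corrupted in transit
    except ValueError:
        return None
    fields = body.split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    if fields[6] in ("", "0") or not fields[2] or not fields[4]:
        return None  # fix quality 0 means the receiver has no position yet
    latitude = _to_decimal_degrees(fields[2], fields[3], 2)
    longitude = _to_decimal_degrees(fields[4], fields[5], 3)
    return latitude, longitude


if __name__ == "__main__":
    # Hypothetical sentence; the checksum is computed rather than hard-coded.
    body = "GPGGA,023042.00,2625.000,N,10640.500,E,1,08,1.0,1100.0,M,,M,,"
    print(parse_gga("$%s*%02X" % (body, nmea_checksum(body))))
```

Rejecting sentences with a bad checksum or no fix avoids sending a meaningless position to the server for location matching.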

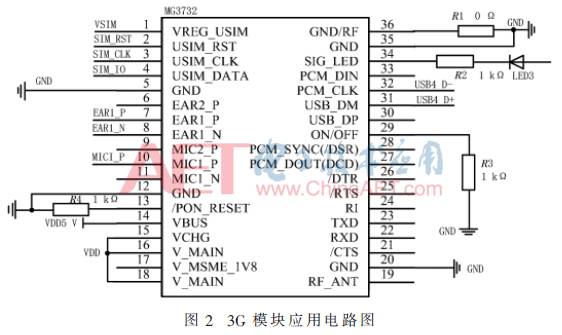

3.3 Design of the Wireless Communication Transmission Module

The wireless communication transmission module consists mainly of a 3G chip and its peripheral circuits, and uses 3G wireless technology to provide bidirectional communication between the smart seeing glasses and the remote cloud platform server. In the uplink direction, it transmits the images captured by the binocular camera module and the geographical coordinates obtained by the GPS module to the cloud server over the 3G network. In the downlink direction, it returns the image recognition and geographical location matching results from the server to the glasses for voice broadcasting, informing the blind individual of the results in real time. Using the GSM messaging function of the 3G module, the system also sends the results obtained from the cloud server to the blind individual’s family members as text messages. In special circumstances, the blind individual can use the 3G phone function to call relatives directly. Figure 2 shows the application circuit diagram of the wireless communication transmission module.

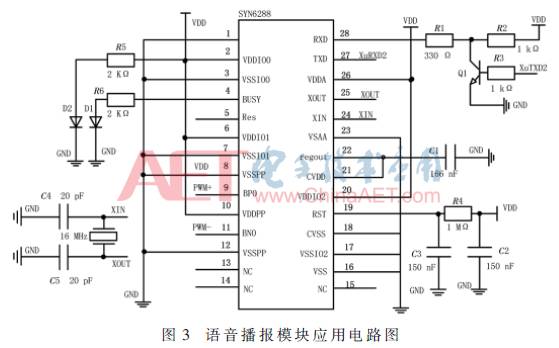

3.4 Design of the Voice Broadcasting Module

The voice broadcasting function of the smart seeing glasses control system is primarily used to play the image recognition results, distance, orientation information, and geographical location of the targets in front of the glasses through the voice module, promptly informing blind individuals of their surrounding environmental conditions. The system uses the SYN6288 Chinese speech synthesis chip as the main component of the voice broadcasting module to achieve text-to-speech conversion, with its peripheral circuit shown in Figure 3.
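The paper does not describe how text is handed to the SYN6288. As a hedged illustration, the sketch below builds a synthesis command frame in the layout commonly documented for this chip: a 0xFD header, a two-byte length, a command byte, a parameter byte selecting the text encoding, the encoded text, and an XOR checksum. This layout is reproduced from memory of the chip’s datasheet and should be checked against it before use; the function name and sample phrase are hypothetical.

```python
# Encoding codes for the low bits of the parameter byte (assumed from the datasheet).
ENCODING_CODES = {"gb2312": 0x00, "gbk": 0x01}

FRAME_HEADER = 0xFD       # start-of-frame marker
CMD_SYNTHESIZE = 0x01     # "synthesize and play" command


def build_syn6288_frame(text, encoding="gb2312"):
    """Return the byte frame that asks the chip to speak `text`.

    The length field counts the command byte, the parameter byte,
    the encoded text, and the trailing checksum byte.
    """
    payload = text.encode(encoding)
    length = len(payload) + 3
    frame = bytearray([
        FRAME_HEADER,
        (length >> 8) & 0xFF,
        length & 0xFF,
        CMD_SYNTHESIZE,
        ENCODING_CODES[encoding],  # no background music, chosen text encoding
    ])
    frame += payload
    checksum = 0
    for byte in frame:
        checksum ^= byte           # XOR over every byte sent so far
    frame.append(checksum)
    return bytes(frame)


if __name__ == "__main__":
    # "Chair ahead, two metres" as a hypothetical prompt.
    print(build_syn6288_frame("前方两米有椅子").hex(" "))
```

On the glasses, such a frame would be written to the serial port connected to the chip; the serial settings are not given in the paper and are omitted here.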

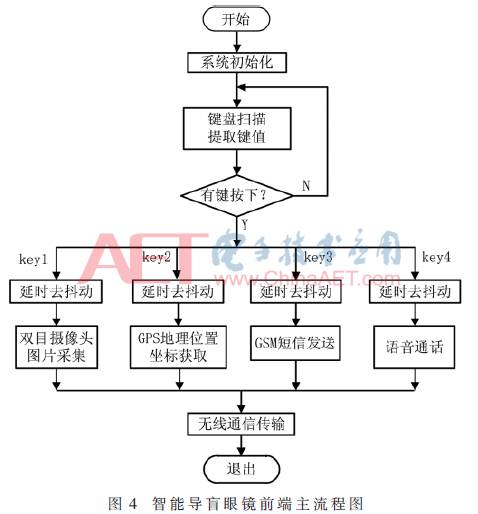

4 Software Design of the Smart Seeing Glasses Control System

The software design of this system is divided into two parts: the programs on the remote cloud platform server and the programs on the front end of the smart seeing glasses. The server-side software is written mainly in high-level languages such as C/C++ and implements the image recognition, distance measurement, and orientation detection algorithms, so that these functions run on the remote cloud server. The parsing of GPS latitude and longitude is also implemented in software on the cloud server. The front-end software of the glasses mainly comprises subprograms for image acquisition from the binocular camera, acquisition of GPS geographical coordinates, data transmission and reception through the wireless communication transmission module, scheduling of the voice broadcasting module, and configuration of the key interrupts. The main program flowchart is shown in Figure 4.

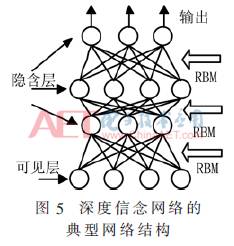

5 Natural Scene Recognition Based on Deep Belief Networks

Deep Belief Networks (DBN) are one of the most widely used algorithm models in deep learning, commonly applied in handwritten character recognition and natural scene recognition. In the smart seeing glasses control system, deep belief networks are mainly used for object recognition in common natural scenes, reflecting an application of deep learning in the field of machine vision. Figure 5 shows a typical network structure model of a deep belief network.

As shown in Figure 5, the deep belief network consists of multiple Restricted Boltzmann Machines (RBM). In this network, the training process of the DBN adopts a layer-wise training method, where each RBM is trained separately, and parameters are adjusted individually. After training one layer, the training results of that layer are used as input for the next RBM until all RBMs are trained, a process known as pre-training. Once all RBMs in the deep belief network are trained, the backpropagation algorithm is used to fine-tune the network based on the sample label values.
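The layer-wise procedure described above can be sketched as follows. The paper does not state how each RBM is trained, so one-step contrastive divergence (CD-1), the usual choice, is assumed here; the class and function names, the weight initialization, and the toy data are hypothetical, and the supervised fine-tuning stage is omitted.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Minimal binary RBM trained with CD-1 (an assumed training rule)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.w = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_vis = [0.0] * n_visible
        self.b_hid = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_hid[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.b_hid))]

    def visible_probs(self, h):
        return [sigmoid(self.b_vis[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.b_vis))]

    def cd1_update(self, v0, lr):
        h0 = self.hidden_probs(v0)
        h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h0_sample)   # one-step reconstruction
        h1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            for j in range(len(h0)):
                # positive phase minus negative phase
                self.w[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
        for i in range(len(v0)):
            self.b_vis[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.b_hid[j] += lr * (h0[j] - h1[j])


def pretrain_dbn(data, layer_sizes, epochs, lr, seed=0):
    """Train one RBM per adjacent layer pair; each feeds the next."""
    rng = random.Random(seed)
    rbms, layer_input = [], data
    for n_visible, n_hidden in zip(layer_sizes[:-1], layer_sizes[1:]):
        rbm = RBM(n_visible, n_hidden, rng)
        for _ in range(epochs):
            for v in layer_input:
                rbm.cd1_update(v, lr)
        rbms.append(rbm)
        # The trained layer's outputs become the next RBM's training data.
        layer_input = [rbm.hidden_probs(v) for v in layer_input]
    return rbms


if __name__ == "__main__":
    toy = [[1, 0, 1, 0, 1, 0], [0, 1, 0, 1, 0, 1]]
    stack = pretrain_dbn(toy, [6, 4, 2], epochs=5, lr=0.1)
    print(len(stack), "RBMs pre-trained")
```

After pre-training, the stacked weights would initialize a feed-forward network that is fine-tuned with backpropagation on the labeled samples.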

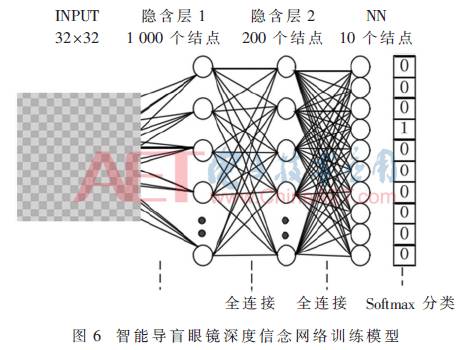

For image training in the smart seeing glasses system, an improved CIFAR-10 natural scene dataset is used as the source of training and test samples. The original CIFAR-10 dataset contains 60,000 32×32 color images divided into 10 categories: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. To make the glasses more practical for blind users, this paper adds common target scenes such as dining tables, chairs, people, trash cans, and trees to the images used for training, thereby improving on the original CIFAR-10 dataset. The improved dataset is then trained and recognized using the deep belief network model shown in Figure 6.

In this training model, each image in the improved CIFAR-10 natural scene dataset is a 32×32 color image, so the input layer has 3,072 nodes (3,072 = 32×32×3). The two hidden layers have 1,000 and 200 nodes, respectively. The output layer, which follows a multi-class Softmax classifier, consists of 10 units, giving a final model structure of 3072-1000-200-10. During image training for the smart seeing glasses system, each of the two RBMs is trained for 200 iterations with a learning rate of 0.1. After pre-training, the learned weights initialize a neural network whose parameters are then fine-tuned, with the Sigmoid function as the activation function. Because the samples are numerous and the data complex, the hidden layers need a relatively large number of nodes to learn good features, and the large amount of information in the images requires many iterations. The entire training process takes about 10 hours on average, which is significantly shorter than the training time of convolutional neural network and autoencoder models in deep learning, while still achieving a relatively good recognition rate. This is the main reason for selecting the deep belief network as the recognition model for this system.
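To make the size of the 3072-1000-200-10 structure concrete, the short sketch below counts its trainable parameters and shows the Softmax step that turns the 10 output activations into class probabilities. Counting one bias per hidden and output unit is an assumption, since the paper does not mention biases, and the function names are illustrative.

```python
import math

# Layer sizes taken from the text: input, two hidden layers, output.
LAYER_SIZES = [32 * 32 * 3, 1000, 200, 10]


def count_parameters(sizes):
    """Return (weights, biases) for a fully connected stack of layers."""
    weights = sum(a * b for a, b in zip(sizes[:-1], sizes[1:]))
    biases = sum(sizes[1:])  # assumed: one bias per non-input unit
    return weights, biases


def softmax(logits):
    """Numerically stable Softmax over a list of output activations."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    weights, biases = count_parameters(LAYER_SIZES)
    print("weights:", weights, "biases:", biases)
    probs = softmax([0.0] * 9 + [2.0])
    print("predicted class index:", probs.index(max(probs)))
```

Almost all of the weights sit between the input layer and the first hidden layer (3,072 × 1,000), which is why the width of the first hidden layer dominates both memory use and training time.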

6 System Testing Results and Analysis

The deep belief network model described above was first evaluated on 10,000 test images randomly selected from the improved CIFAR-10 dataset. Table 1 lists the recognition rate for each sample category together with the average recognition rate.

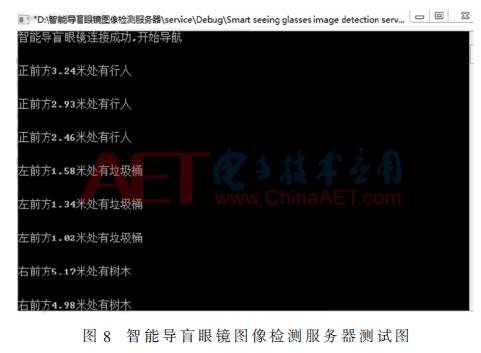

As shown in Table 1, after training with the deep belief network model of the smart seeing glasses system, the average recognition rate over the 10 categories of test samples in the improved CIFAR-10 dataset is 82.9%. This exceeds the average recognition rate of the support vector machine model by nearly 10% and lays a foundation for overall system testing of the smart seeing glasses.

Finally, taking into account the daily needs of blind individuals and the other functions of the glasses, the complete control system was debugged in real scenarios. The frame rate of the binocular camera was set to 3 frames/s, and a voice navigation prompt was issued every two seconds. The test results from the remote server are shown in Figures 7 and 8. Figure 7 shows that the GPS function of the glasses obtains the wearer’s latitude and longitude accurately and in real time, and transmits them to the remote server through the wireless communication transmission module for geographical location matching. Figure 8 shows that the glasses not only identify the category of the target ahead accurately but also measure the distance to the target, indicate the orientation of obstacles correctly, and provide real-time voice guidance that helps blind individuals avoid obstacles and travel safely.

7 Conclusion

This paper builds a smart seeing glasses system based on machine vision with the S5PV210 as the main controller. The system runs on a Linux kernel and integrates six core functional modules (binocular image acquisition, GPS positioning, voice broadcasting, GSM messaging, voice calling, and wireless transmission), and both the hardware circuit design and the software design have been completed. System testing shows that the glasses can provide real-time voice navigation for blind individuals traveling independently. In special circumstances, blind individuals can also use the trigger button on the glasses to access GPS, GSM messaging, voice calling, and other functions and seek help from friends and family in a timely manner. Furthermore, because the glasses have image recognition capabilities, they can assist blind individuals with simple object classification and thereby improve their self-care abilities to some extent, which is particularly important for a country such as China with a large blind population.
