After reading articles about embedded AI, many engineers ask me point-blank: what is the use of learning embedded AI, and what value can it actually create?

Honestly, that question stumped me!

The prospects for embedded + AI technology are broad, but whenever I chat with colleagues, doubts surface about how many AI products have truly entered ordinary people's lives. From an embedded perspective, whether in smart home, industrial IoT, smart healthcare, or other industries, there still seem to be few AI products or real-world deployments.

To find answers, I recently went to Shenzhen to attend the Embedded and Edge AI Technology Forum organized by FLYING. It was a Tuesday, a working day, and I assumed everyone would be busy at work, yet plenty of engineers showed up and every seat was taken. Clearly, many people care about where embedded + AI technology is heading.

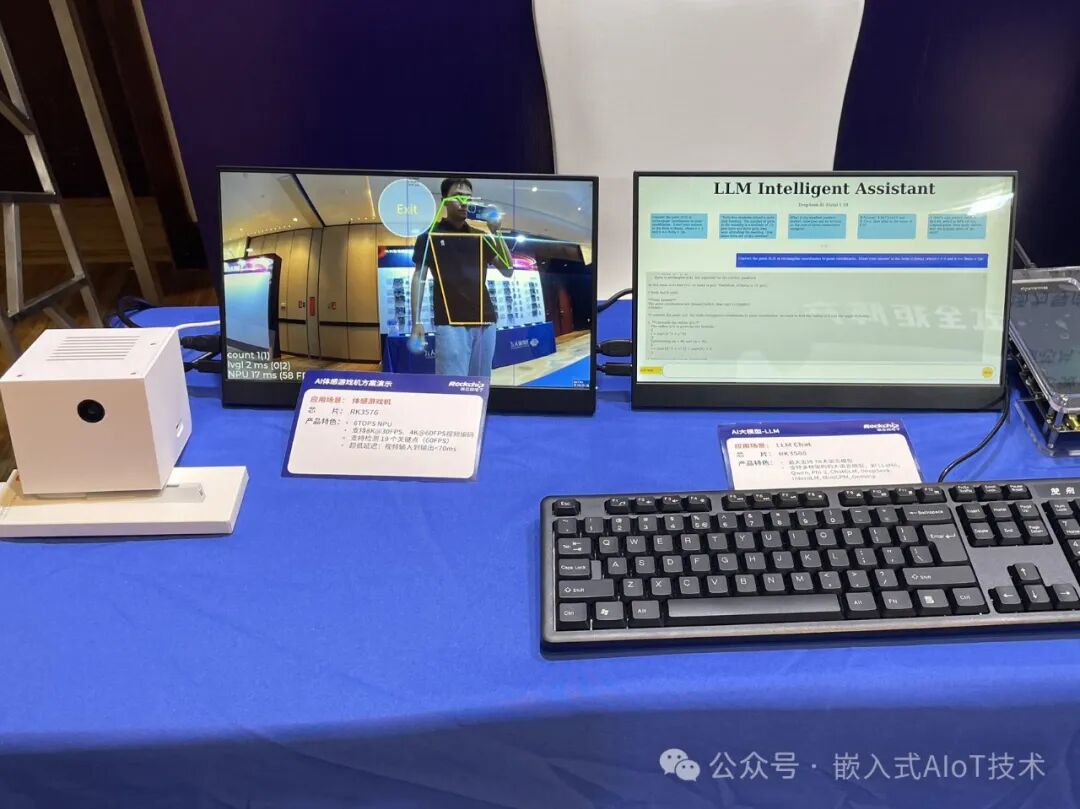

The main purpose of my visit was to explore application scenarios for embedded + AI technology. The first booth that caught my eye showed a human pose recognition solution running on Rockchip’s RK3576. The RK3576 is less powerful than the RK3588, but it costs less, which makes it a cost-effective option.

Rockchip’s product director presented the company's chip roadmap on stage. The current entry-level part is the RK3506J, with the RK3508J planned as its successor. For traditional industrial control scenarios, entry-level chips are generally sufficient.

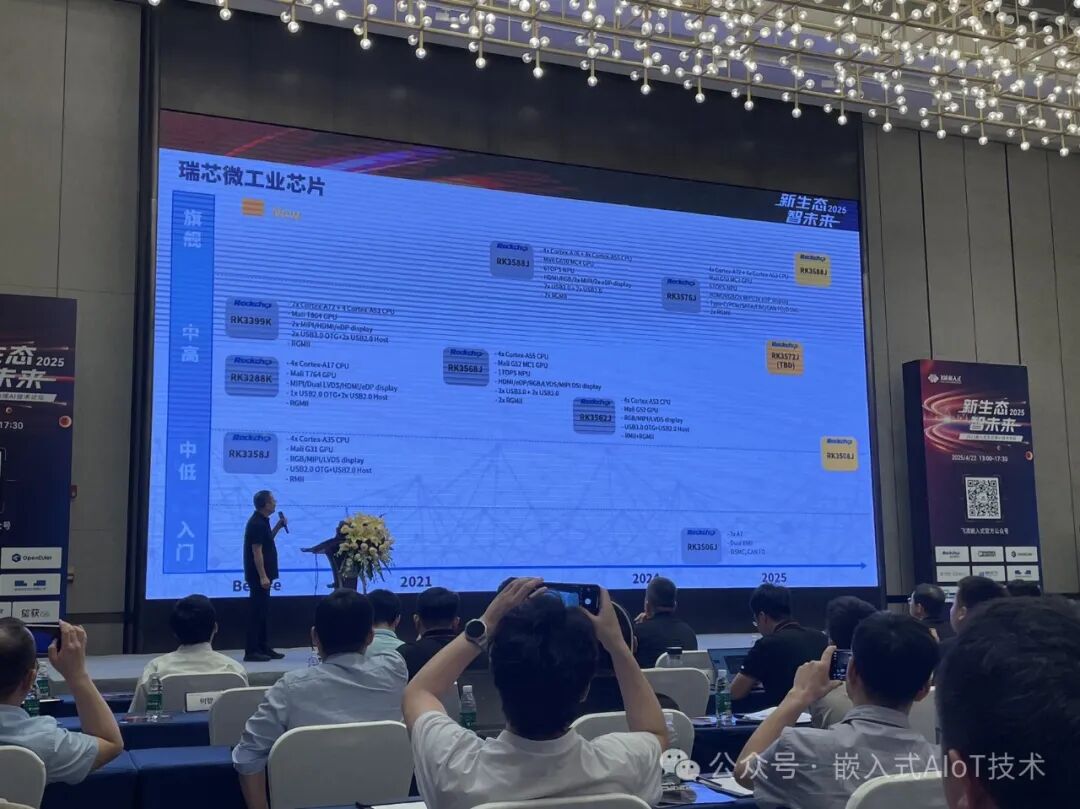

Rockchip’s flagship chips currently include the RK3588J and RK3576J. Both integrate a 6 TOPS NPU, but the RK3576J has the clearer cost advantage. The flagship line will later iterate to the RK3688J, which reportedly offers significantly better CPU and NPU performance than its predecessor. (I don't know what it will cost, though.)

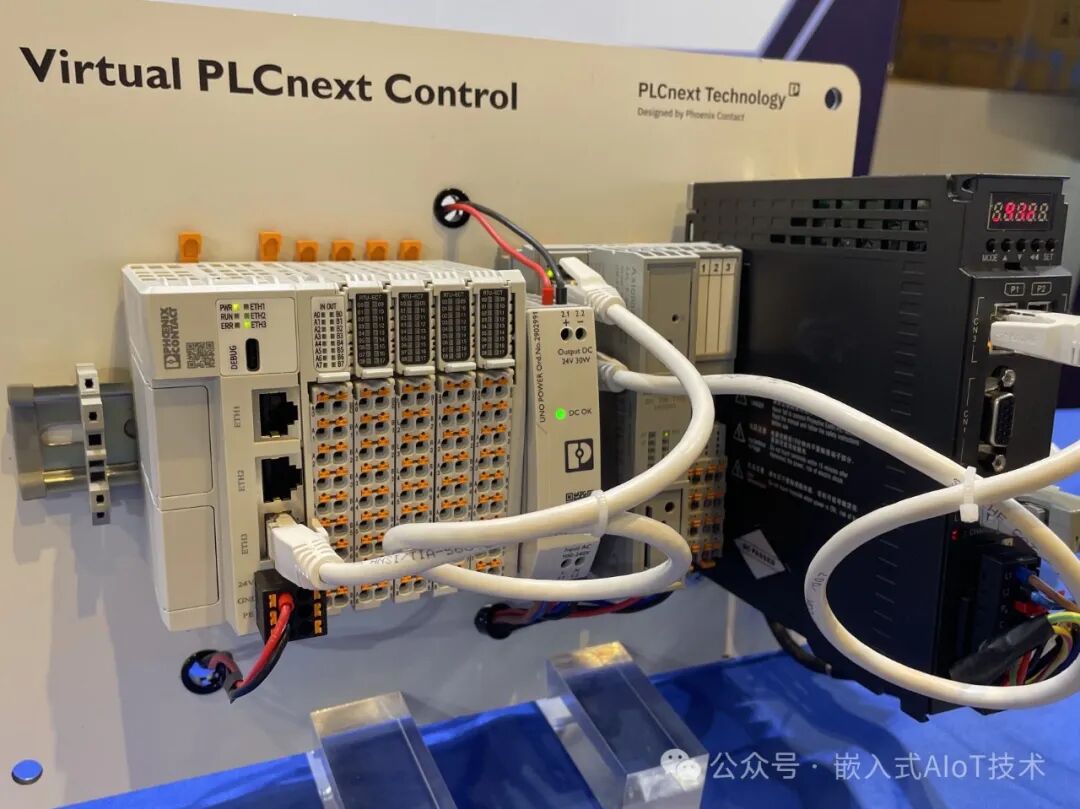

I also learned about an interesting product on-site: Phoenix Contact’s Virtual PLCnext Control.

Engineers in industrial control know that a traditional PLC integrates logic execution and I/O in a single hardware unit, which makes it hard to scale the PLC’s computing power. Virtual PLCnext Control decouples the two. The control logic becomes standalone software (with its own ecosystem) that can be deployed on more powerful hardware, while the device side needs only simple I/O hardware. Data passes between the logic and the I/O over reliable industrial Ethernet.

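To make the decoupling idea concrete, here is a minimal sketch of a PLC-style scan cycle in which the control logic and the I/O station are separate objects. Everything in it is an assumption for illustration: `RemoteIOStation`, `control_logic`, `scan_cycle`, and the signal names are hypothetical and are not part of any PLCnext API, and the `exchange` method is only an in-process stand-in for a cyclic industrial Ethernet data exchange.

```python
from dataclasses import dataclass, field


@dataclass
class RemoteIOStation:
    """Hypothetical device-side I/O: holds inputs and outputs, no control logic."""
    inputs: dict = field(default_factory=dict)
    outputs: dict = field(default_factory=dict)

    def exchange(self, new_outputs: dict) -> dict:
        # Stand-in for one cyclic data exchange over industrial Ethernet:
        # accept the controller's outputs, return a snapshot of the inputs.
        self.outputs.update(new_outputs)
        return dict(self.inputs)


def control_logic(inputs: dict) -> dict:
    """Controller-side logic: run the motor only when started and not stopped."""
    run = inputs.get("start_button", False) and not inputs.get("emergency_stop", False)
    return {"motor_on": run}


def scan_cycle(station: RemoteIOStation, last_outputs: dict) -> dict:
    """One scan: exchange I/O data with the station, then compute new outputs."""
    inputs = station.exchange(last_outputs)
    return control_logic(inputs)


if __name__ == "__main__":
    station = RemoteIOStation(inputs={"start_button": True, "emergency_stop": False})
    outputs = {"motor_on": False}
    for _ in range(2):
        outputs = scan_cycle(station, outputs)
    print(station.outputs)
```

Because `control_logic` never touches hardware directly, it could in principle run on any sufficiently powerful host, which is the point of the separation described above.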

The booth also showcased a vision tracking solution for multi-axis industrial robots in material stacking scenarios. The robot automatically classifies materials by their characteristics, which helps improve factory productivity.

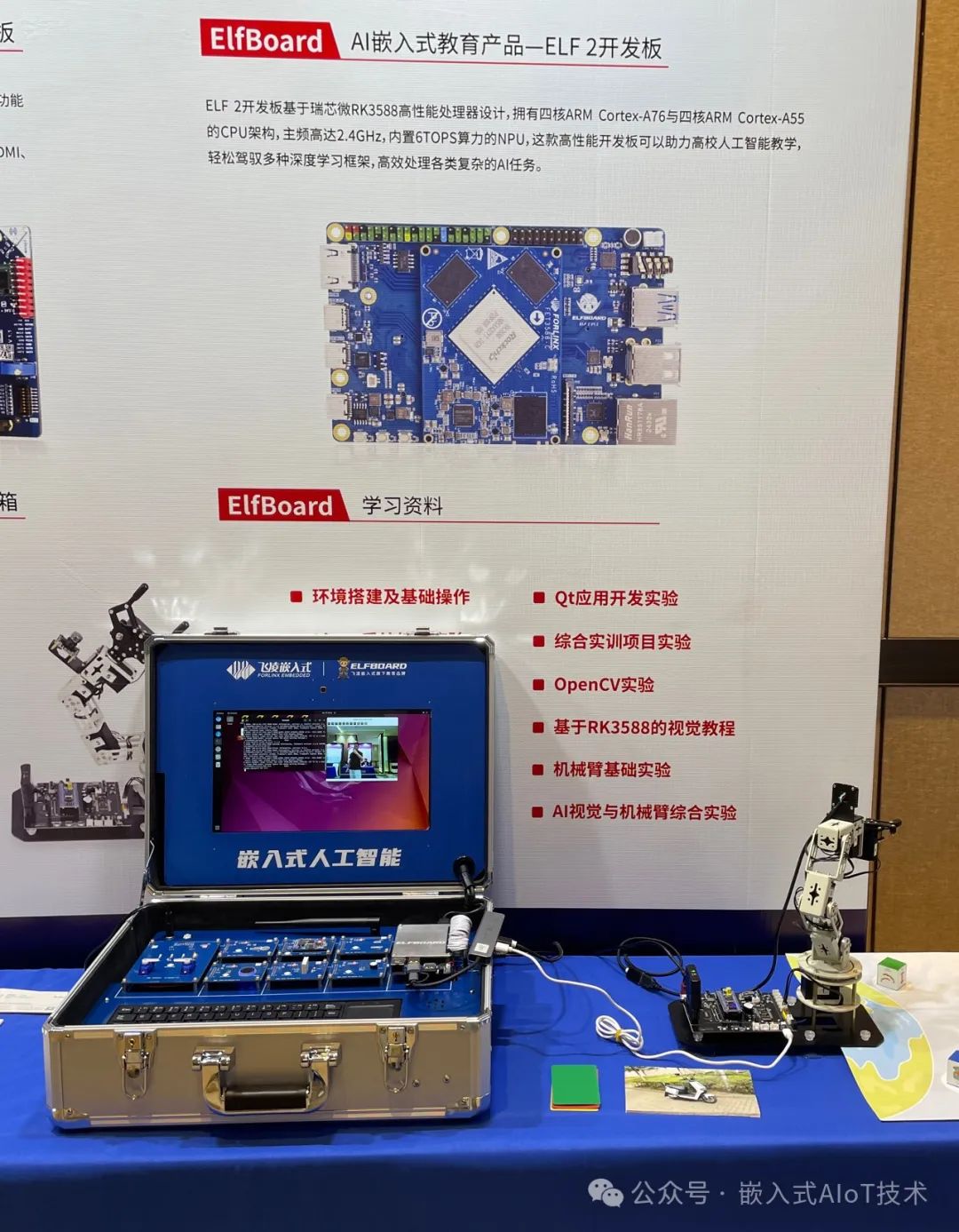

The site also had an embedded + AI education section. It featured a multi-axis robot model in which an AI model running on the ELF2 learning board tracks an object's color and the robot moves along the color's trajectory. The ELF2 learning board mainly handles inference and decision-making, while a dedicated microcontroller controls the multi-axis robot.
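The split between "decide on the board" and "move on the microcontroller" can be sketched roughly as follows. This is not the demo's code: it swaps the AI model for plain color thresholding, and the function names, the tolerance value, and the normalized offset format are all assumptions made for illustration. A frame here is just a list of rows of `(r, g, b)` tuples.

```python
def color_centroid(frame, target, tolerance=40):
    """Return the (x, y) centroid of pixels close to `target`, or None if none match."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            if (abs(r - target[0]) <= tolerance
                    and abs(g - target[1]) <= tolerance
                    and abs(b - target[2]) <= tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def steering_command(centroid, width, height):
    """Map a centroid to (dx, dy) offsets relative to the frame center.

    Offsets are normalized by half the frame size; this is the kind of
    small message a board could hand to a motion-control microcontroller.
    """
    cx, cy = centroid
    return ((cx - width / 2) / (width / 2),
            (cy - height / 2) / (height / 2))


if __name__ == "__main__":
    black, red = (0, 0, 0), (255, 0, 0)
    frame = [[black] * 4 for _ in range(4)]
    frame[1][2] = red
    frame[1][3] = red
    centroid = color_centroid(frame, target=red)
    if centroid is not None:
        print(steering_command(centroid, width=4, height=4))
```

The board-side code only produces a small offset pair; how those offsets become joint motions is left to the microcontroller, mirroring the division of labor described above.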

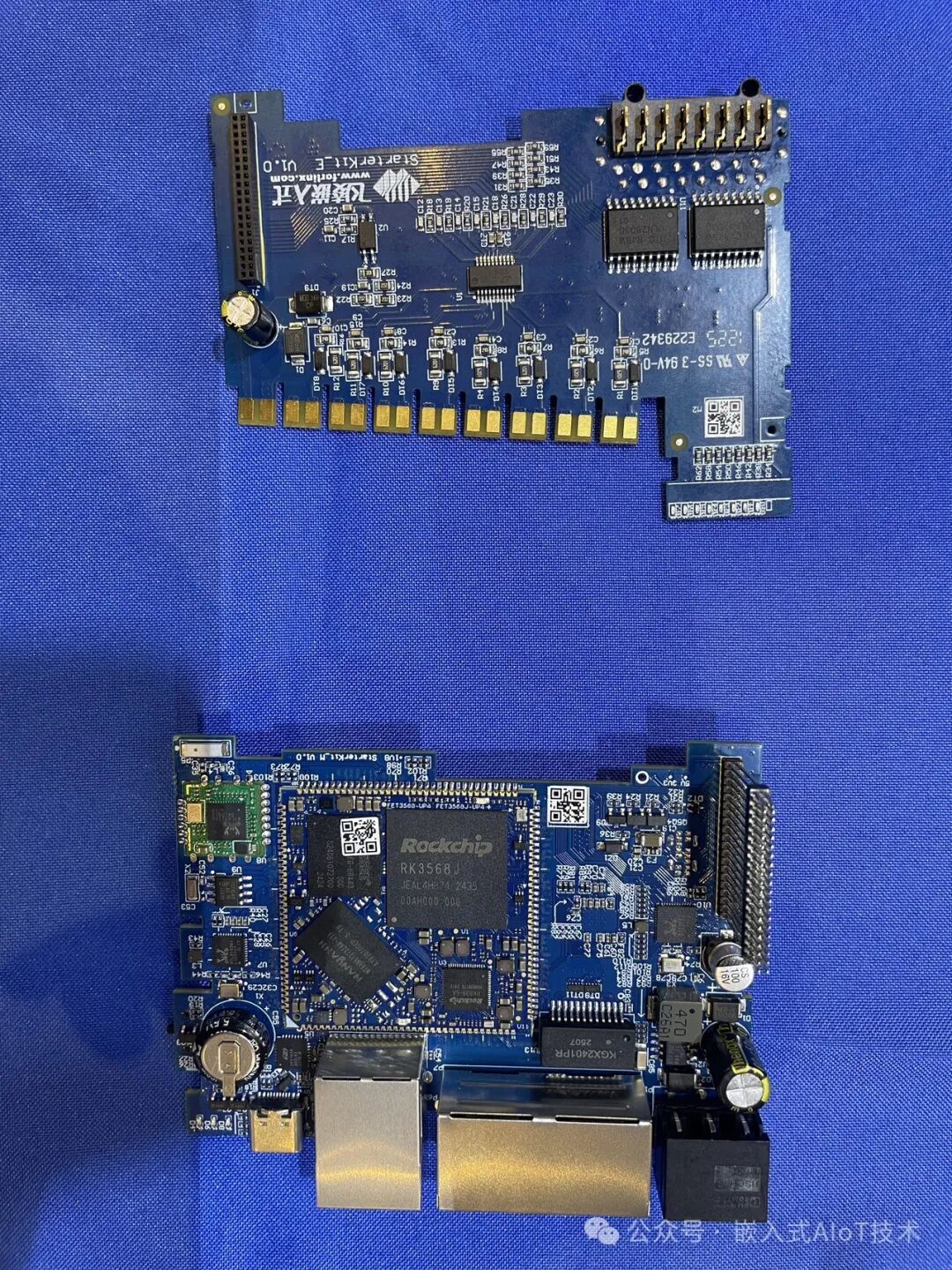

The ELF2 is built on the flagship RK3588 processor and makes full use of its performance. I also saw an “Electric Vehicle Elevator Alarm” demo running on-site, which is quite practical.

The education section also offers a comprehensive experiment kit with many modules, aimed mainly at university teaching and electronics design competitions. Universities are clearly placing more emphasis on introductory embedded + AI education!

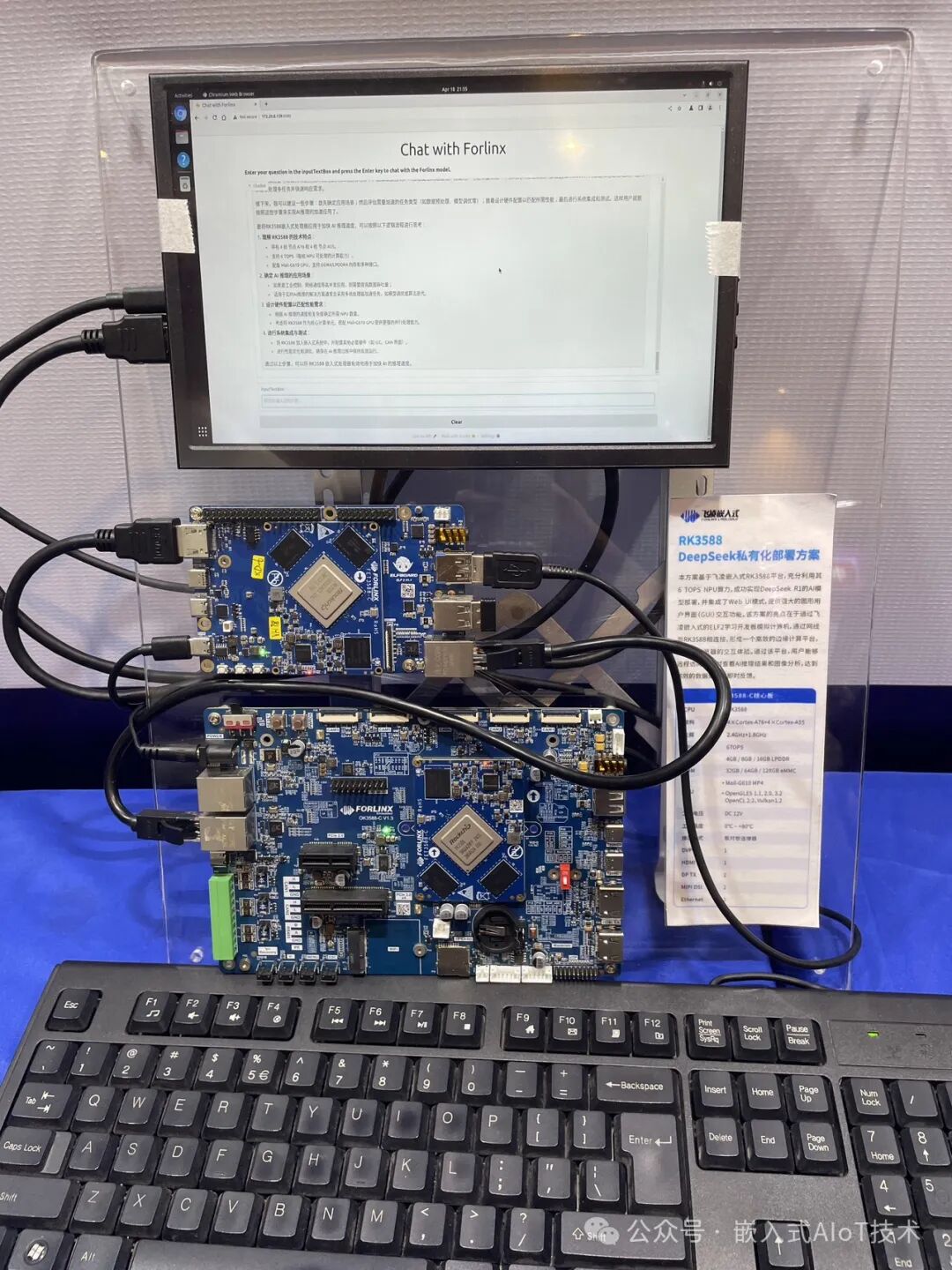

A DeepSeek private deployment solution was also on display: two RK3588s running the DeepSeek large language model simultaneously, paired with a chat UI, delivering decent human-computer interaction.

I have previously shared several articles on deploying large models in embedded AI. Here they are for review:

Step 1 to getting into embedded AI: Set up the development environment!

Step 2 to getting into embedded AI: Model conversion and deployment!

Step 3 to getting into embedded AI: Deploying the DeepSeek-R1 large model on the development board

Step 4 to getting into embedded AI: Hardware performance data after running the large model on the device.

In summary, the site showcased many visual recognition and tracking solutions. This area feels increasingly mature, with several commercial products already deployed.

Machine vision is the input and sensing stage of AI. If it can be combined with the currently trending large models, I believe there is even more room for imagination.

I look forward to attending more technical seminars and forums like this one to keep exploring how embedded + AI technology is being put into practice. Thank you for reading!

-END-
