1. Ethernet

-

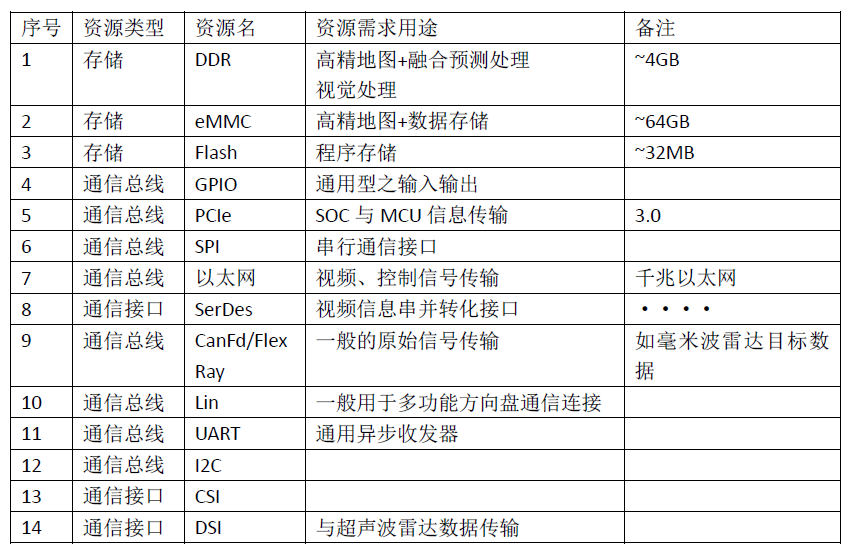

UART is used to control the central computing unit and serial devices, providing an RS-232C data terminal device interface, allowing the domain controller chip to communicate with modems or other serial devices using RS-232C interfaces;

-

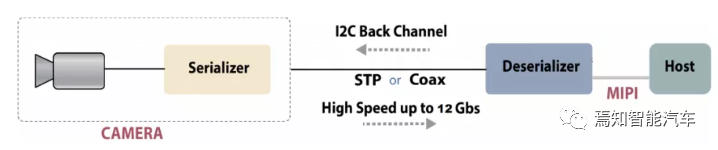

Parallel/serial conversion: converting parallel data transmitted by SOC into output serial data streams;

-

Serial byte conversion: converting incoming serial data from external CPU units into bytes for use by internal parallel data devices of MCU;

-

Parity check: adding parity bits and start/stop flags to the output serial data stream and performing parity checks on the data streams received from external sources;

-

Input/output buffer, managing data and synchronization issues between the domain controller and external serial devices (such as cameras);
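The four UART functions above can be illustrated with a toy software model of the transmit and receive paths. This is a minimal sketch, not a driver or a description of any particular chip. It assumes an "8E1" frame (8 data bits, even parity, 1 stop bit, LSB first) and models the serial line as a list of 0/1 values. The names `serialize`, `deserialize`, `SimpleUart`, and `fifo_depth` are hypothetical.

```python
from __future__ import annotations

from collections import deque

# Assumed frame format for this sketch: start bit (0), 8 data bits LSB first,
# even parity bit, stop bit (1). Real UARTs make these settings configurable.
DATA_BITS = 8
START_BIT = 0
STOP_BIT = 1
FRAME_LEN = 1 + DATA_BITS + 1 + 1  # start + data + parity + stop


class FrameError(Exception):
    """Raised when a received frame has a bad length, start/stop bit, or parity."""


def even_parity_bit(byte: int) -> int:
    """Return the bit that makes the total number of 1s (data + parity) even."""
    return bin(byte & 0xFF).count("1") % 2


def serialize(byte: int) -> list[int]:
    """Parallel-to-serial: turn one byte into a framed bit sequence."""
    data = [(byte >> i) & 1 for i in range(DATA_BITS)]  # LSB first
    return [START_BIT] + data + [even_parity_bit(byte), STOP_BIT]


def deserialize(frame: list[int]) -> int:
    """Serial-to-parallel: rebuild a byte from one frame, checking framing and parity."""
    if len(frame) != FRAME_LEN:
        raise FrameError("wrong frame length")
    if frame[0] != START_BIT or frame[-1] != STOP_BIT:
        raise FrameError("framing error")
    byte = sum(bit << i for i, bit in enumerate(frame[1:1 + DATA_BITS]))
    if frame[1 + DATA_BITS] != even_parity_bit(byte):
        raise FrameError("parity error")
    return byte


class SimpleUart:
    """Toy model: a TX FIFO feeds the serializer; the deserializer feeds an RX FIFO."""

    def __init__(self, fifo_depth: int = 16):
        self.fifo_depth = fifo_depth
        self.tx_fifo = deque()
        self.rx_fifo = deque()
        self.errors = 0    # frames rejected for framing/parity problems
        self.overruns = 0  # good bytes dropped because the RX FIFO was full

    def write(self, data: bytes) -> int:
        """Queue bytes for transmission; return how many fit in the TX FIFO."""
        accepted = 0
        for b in data:
            if len(self.tx_fifo) >= self.fifo_depth:
                break
            self.tx_fifo.append(b)
            accepted += 1
        return accepted

    def transmit(self) -> list[int]:
        """Drain the TX FIFO onto the 'line' as one continuous bit stream."""
        line: list[int] = []
        while self.tx_fifo:
            line.extend(serialize(self.tx_fifo.popleft()))
        return line

    def receive(self, line: list[int]) -> None:
        """Split the bit stream into frames and buffer valid bytes in the RX FIFO."""
        for i in range(0, len(line) - FRAME_LEN + 1, FRAME_LEN):
            try:
                byte = deserialize(line[i:i + FRAME_LEN])
            except FrameError:
                self.errors += 1
                continue
            if len(self.rx_fifo) < self.fifo_depth:
                self.rx_fifo.append(byte)
            else:
                self.overruns += 1

    def read(self) -> bytes:
        """Return and clear everything currently in the RX FIFO."""
        data = bytes(self.rx_fifo)
        self.rx_fifo.clear()
        return data


if __name__ == "__main__":
    uart = SimpleUart()
    uart.write(b"OK")
    uart.receive(uart.transmit())
    print(uart.read(), uart.errors)
```

In real hardware these steps happen in the UART peripheral itself, which samples the line at a multiple of the baud rate and exposes its FIFOs to the CPU through registers and interrupts. The model only shows the data transformations and the role of the buffers.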
