Source: Zhang Godan’s Technical Journey

After learning about the TCP congestion control algorithm last time, I increasingly wanted to dig into the underlying principles of TCP/IP. While searching online I found Tao Hui’s column on high-performance network programming, which I found very helpful. Today I will summarize it and add some thoughts of my own.

I am fairly familiar with Java, but my knowledge of Java network programming does not go much beyond using the `Netty` framework. Norman Maurer, one of `Netty`’s core developers, gives this advice for Netty-based network development: “Never block the event loop, reduce context-switching”. In other words, avoid blocking the IO thread as much as possible, and minimize thread switching.

Why must we not block the IO thread that reads network data? To understand this, we need to go back to the classic C10K problem: how can a single server support 10,000 concurrent connections? The root of C10K lies in the network IO model. The traditional approach on Linux uses synchronous blocking I/O and assigns one process or thread to each request. Supporting 10,000 concurrent connections would then require 10,000 threads, and the scheduling, context switching, and memory usage of that many threads quickly become bottlenecks. The common solution to C10K is I/O multiplexing, which is how `Netty` works.
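Here is a minimal Python sketch of the multiplexing idea. It uses the standard-library `selectors` module, which is backed by epoll on Linux, so that one thread serves several connections without a thread per connection. This is not how Netty is implemented. `serve`, `demo`, and the stop-after-N-messages rule are illustrative assumptions that keep the example finite.

```python
import selectors
import socket
import threading


def serve(listener, max_messages):
    """Single-threaded echo server multiplexing many connections with one selector.

    Stops after echoing `max_messages` messages so the demo terminates
    (a hypothetical rule for this sketch; a real server would loop forever).
    """
    sel = selectors.DefaultSelector()  # epoll on Linux
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data=None)
    echoed = 0
    while echoed < max_messages:
        for key, _events in sel.select(timeout=1.0):
            if key.data is None:
                # Listener readable: a completed connection is waiting in the accept queue.
                conn, _addr = key.fileobj.accept()
                conn.setblocking(False)
                sel.register(conn, selectors.EVENT_READ, data="client")
            else:
                conn = key.fileobj
                chunk = conn.recv(4096)
                if chunk:
                    # Small payloads only; a real server would buffer partial writes.
                    conn.sendall(chunk)
                    echoed += 1
                else:
                    # An empty read means the peer closed its side.
                    sel.unregister(conn)
                    conn.close()
    for key in list(sel.get_map().values()):
        key.fileobj.close()
    sel.close()


def demo(n_clients=3):
    """Connect several clients to one server thread and collect the echoes."""
    listener = socket.create_server(("127.0.0.1", 0))
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve, args=(listener, n_clients))
    server.start()
    clients = [socket.create_connection(("127.0.0.1", port)) for _ in range(n_clients)]
    replies = []
    for i, client in enumerate(clients):
        client.sendall(f"hello-{i}".encode())
        replies.append(client.recv(4096))
    server.join()
    for client in clients:
        client.close()
    return replies
```

All three clients are served by the single `serve` thread. It only touches a socket when the selector reports that socket as readable.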

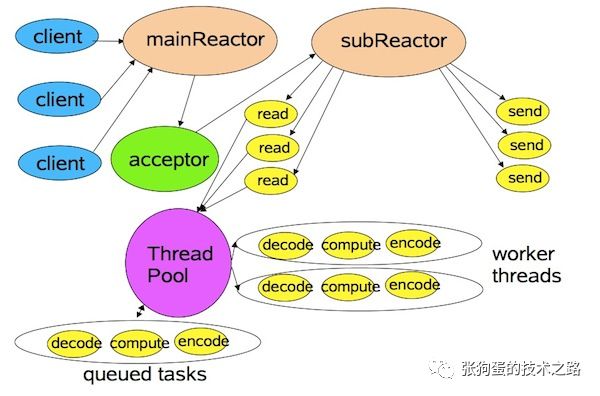

`Netty` has a mainReactor thread group that listens for and establishes connections, and a subReactor thread group that handles read and write operations. There can also be a Worker thread group (ThreadPool) dedicated to business logic.

The three groups work independently, which brings several advantages. First, a dedicated thread group for listening and establishing connections keeps the TCP half-connection queue (SYN queue) and full-connection queue (accept queue) from filling up. Second, separating the IO thread group from the Worker threads lets network I/O and business logic proceed in parallel. IO threads are therefore not blocked, and the TCP receive queue does not overflow. Of course, if the business logic is trivial (the workload is IO-intensive with light computation), it can be handled directly in the IO thread to avoid thread switching. That is the second half of Norman Maurer’s advice.
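Netty expresses this split through separate thread groups. The Python sketch below does not use Netty’s API and only mirrors the idea with `asyncio`:

- Socket I/O stays on the event loop.
- Light work runs inline.
- Blocking business logic is handed to a thread pool with `loop.run_in_executor`.

The `light:` prefix convention and `slow_business_logic` are hypothetical stand-ins.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

# Separate pool for blocking business logic, playing the role of the Worker thread group.
WORKERS = ThreadPoolExecutor(max_workers=4)


def slow_business_logic(payload):
    """Hypothetical blocking work (for example a database call), simulated with sleep."""
    time.sleep(0.2)
    return payload.upper()


async def handle(reader, writer):
    data = await reader.read(1024)          # socket I/O stays on the event loop
    if data.startswith(b"light:"):
        reply = data[len(b"light:"):]       # trivial work: run inline, no thread switch
    else:
        loop = asyncio.get_running_loop()
        # Blocking work goes to the pool so the loop keeps serving other connections.
        reply = await loop.run_in_executor(WORKERS, slow_business_logic, data)
    writer.write(reply)
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def request(port, payload):
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(payload)
    await writer.drain()
    reply = await reader.read(1024)
    writer.close()
    await writer.wait_closed()
    return reply


async def demo():
    server = await asyncio.start_server(handle, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    start = time.monotonic()
    payloads = [b"job0", b"job1", b"job2", b"job3", b"light:ping"]
    replies = await asyncio.gather(*(request(port, p) for p in payloads))
    elapsed = time.monotonic() - start
    server.close()
    await server.wait_closed()
    return replies, elapsed
```

With four pool threads, the four 0.2-second jobs overlap, so `asyncio.run(demo())` should finish in roughly 0.2 seconds instead of 0.8. Calling `slow_business_logic` directly inside `handle` would block the loop and serialize them.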

Why does TCP/IP have so many queues? Today we take a closer look at several of them. When establishing connections, TCP uses the half-connection queue (SYN queue) and the full-connection queue (accept queue). When receiving messages, it uses the receive, out_of_order, prequeue, and backlog queues.

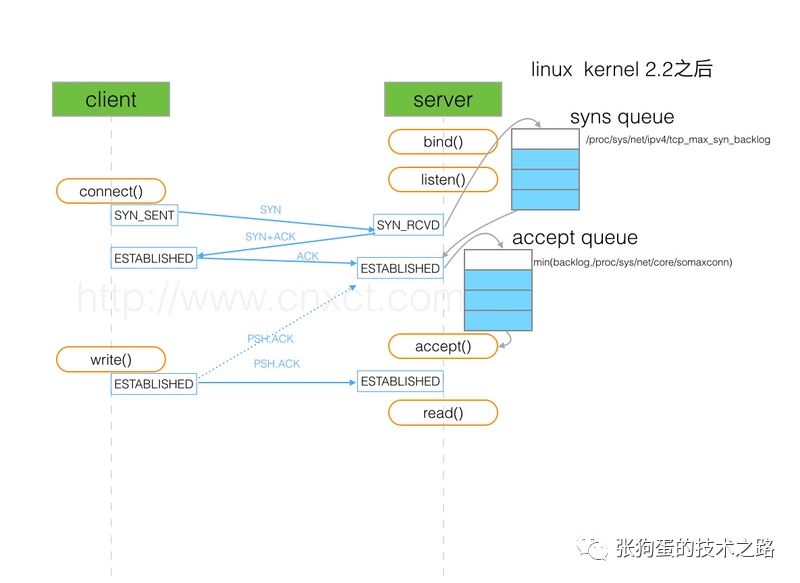

Queues When Establishing Connections

As shown in the figure above, there are two queues here: the SYN queue (half-connection queue) and the accept queue (full-connection queue). The three-way handshake uses them as follows:

- When the server receives the client’s SYN, it places the connection’s information in the SYN queue and replies with a SYN+ACK.
- In the third step, when the server receives the client’s ACK, it moves the connection from the SYN queue to the accept queue, provided the accept queue is not full.
- If the accept queue is full, the server acts according to `tcp_abort_on_overflow`. If it is 1, the server resets the connection by sending an RST to the client. If it is 0 (the default), the server drops the ACK and keeps the half-open connection. It later retransmits the SYN+ACK, so the handshake can still complete once space becomes available.
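The handshake path can be summarized as a small state model. The Python class below is a hypothetical simplification, not kernel code:

- `abort_on_overflow` stands in for the `net.ipv4.tcp_abort_on_overflow` sysctl.
- The queue limits are plain numbers. In Linux, the accept queue limit comes from the `listen()` backlog, capped by `net.core.somaxconn`.
- It models one detail not mentioned in the text: Linux also drops new SYNs while the accept queue is full.

```python
class ListenSocketModel:
    """Toy model of a listening socket's SYN queue and accept queue.

    Illustration only: the real kernel also has SYN cookies, SYN+ACK
    retransmission limits, and other details omitted here.
    """

    def __init__(self, syn_max, accept_max, abort_on_overflow=False):
        self.syn_queue = {}              # half-open connections (SYN received)
        self.accept_queue = []           # completed handshakes awaiting accept()
        self.syn_max = syn_max
        self.accept_max = accept_max
        self.abort_on_overflow = abort_on_overflow  # models tcp_abort_on_overflow

    def _accept_full(self):
        return len(self.accept_queue) >= self.accept_max

    def on_syn(self, client):
        # New SYNs are dropped if the SYN queue is full or the accept queue is full.
        if len(self.syn_queue) >= self.syn_max or self._accept_full():
            return "drop SYN"
        self.syn_queue[client] = "SYN_RECV"
        return "send SYN+ACK"

    def on_ack(self, client):
        if client not in self.syn_queue:
            return "ignore"
        if self._accept_full():
            if self.abort_on_overflow:
                del self.syn_queue[client]
                return "send RST"
            # Keep the half-open entry; the server retransmits SYN+ACK later.
            return "drop ACK"
        del self.syn_queue[client]
        self.accept_queue.append(client)
        return "established"

    def accept(self):
        """What the application's accept() does: take one completed connection."""
        return self.accept_queue.pop(0) if self.accept_queue else None
```

With `accept_max=1`, here is what happens once client "a" completes its handshake:

- Client "b"’s ACK is dropped, and "b" stays half-open.
- After the application calls `accept()` for "a", a repeated ACK from "b" succeeds.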

Queues When Receiving Messages

Compared to establishing connections, the processing logic of TCP when receiving messages is more complex, with more related queues and configuration parameters involved.

The application reading TCP messages and the operating system receiving TCP messages from the network are two independent flows of execution. Both manipulate the same socket instance and compete for its lock to decide who controls it at any given moment, which leads to many different scenarios. For example, how should a message arriving at the network card be handled while the application is in the middle of reading? And if the application has not yet called read or recv, what does the operating system do with the messages it receives?

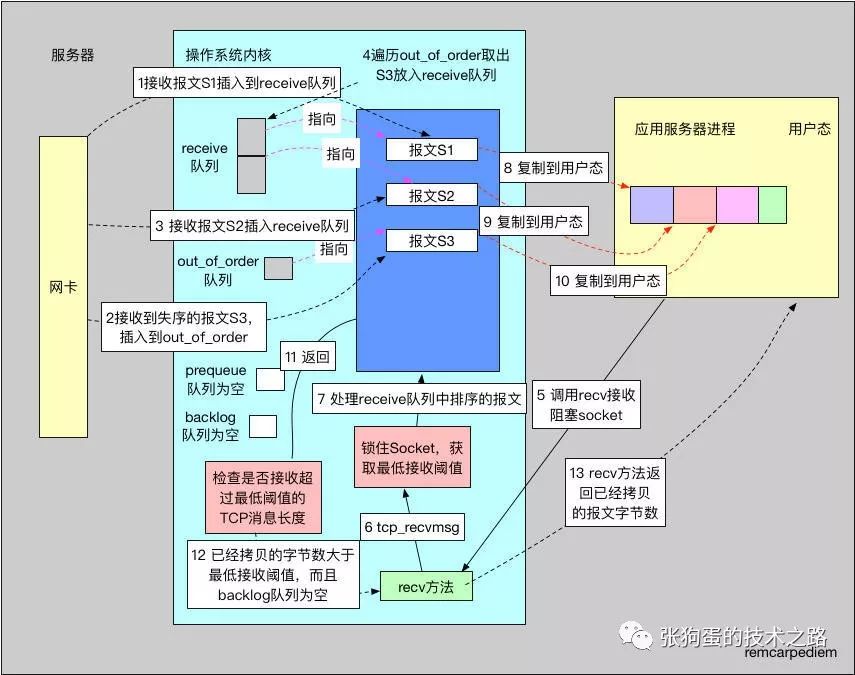

Next, we will introduce three scenarios of TCP message reception through three images and discuss four receiving-related queues.

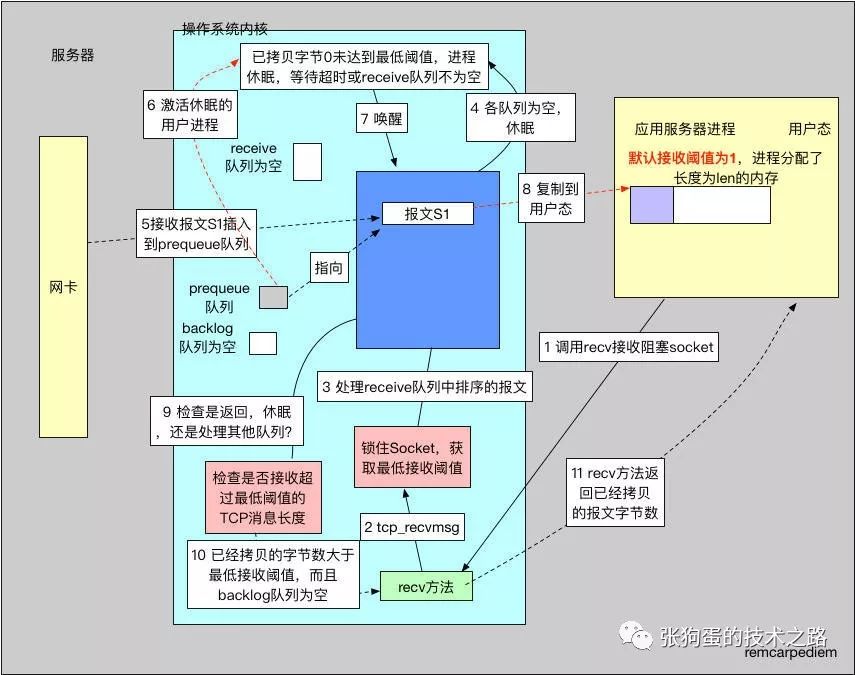

Message Reception Scenario One

The above image is a diagram of TCP reception scenario one. The operating system first receives the message and stores it in the socket’s receive queue, and then the user process calls recv to read it.

1) When the network card receives a message and it is identified as TCP, a chain of calls eventually reaches the kernel’s `tcp_v4_rcv` method. Since the next message TCP expects is S1, `tcp_v4_rcv` adds it directly to the `receive` queue. The `receive` queue holds TCP messages that have already been received, stripped of their TCP headers, and put in order, ready for the user process to read sequentially. The socket is not in the user process context (i.e., no user process is currently reading the socket), and S1 has exactly the sequence number we need, so it enters the `receive` queue.

2) When S3 arrives, the next expected message is still S2, so S3 is added to the `out_of_order` queue, where all out-of-order messages are kept.

3) Next, the expected S2 message arrives and goes directly into the `receive` queue. Because the `out_of_order` queue is not empty at this point, it must be checked.

4) Each time a message is inserted into the `receive` queue, the `out_of_order` queue is checked. Now that S2 has been received, the expected sequence number is S3, so the S3 message in the `out_of_order` queue is moved to the `receive` queue.

5) The user process starts reading the socket: it first allocates a block of memory, then calls `read` or `recv`. The socket has a series of default properties. For example, it is blocking by default, and its `SO_RCVLOWAT` attribute defaults to 1. The `recv` method also accepts a flags parameter, which can be set to `MSG_WAITALL`, `MSG_PEEK`, `MSG_TRUNC`, and so on. Here we assume the most common value, 0. The process calls `recv`.

6) The `tcp_recvmsg` method is called.

7) `tcp_recvmsg` first locks the socket. A socket can be used by multiple threads and by the operating system itself, so concurrent access must be handled: the lock must be acquired before the socket can be manipulated.

8) The `receive` queue now holds three messages. The first one is copied to user-space memory. The `recv` call in step 5 did not pass the `MSG_PEEK` flag, so the first message is removed from the queue and freed in kernel space. With `MSG_PEEK`, by contrast, the message would stay in the `receive` queue. `MSG_PEEK` is therefore useful when data must be inspected without being consumed, for example when multiple processes read the same socket.

9) The second message is copied. Before copying, the kernel checks whether the remaining user-space buffer is large enough to hold the current message. If it is not, the kernel returns immediately with the number of bytes already copied.

10) The third message is copied.

11) The `receive` queue is now empty. The kernel checks the `SO_RCVLOWAT` threshold: if fewer bytes than this have been copied, the process sleeps and waits for more messages. The default `SO_RCVLOWAT` is 1, so the call can return as soon as at least one byte has been read.

12) The `backlog` queue is checked. Messages that the network card receives while the user process is copying data (and holding the socket lock) go into the `backlog` queue. If it contains data now, that data is processed as well. Here the `backlog` queue is empty, so the lock is released and the call prepares to return to user space.

13) The user process resumes execution. The value returned by `recv` (or a similar method) is the number of bytes copied from kernel space.
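Two pieces of scenario one can be modeled in a few lines: the reassembly in steps 1 to 4 and the copy loop in steps 8 to 10. This Python sketch is an illustration under simplifying assumptions, not the kernel’s implementation:

- Sequence numbers count bytes.
- `TcpReceiveModel` is a hypothetical name.
- Following the article’s step 9, only whole messages are copied. The real kernel can also copy part of a segment.

```python
from dataclasses import dataclass, field
from socket import MSG_PEEK  # reused here only as a flag bit for the model


@dataclass
class TcpReceiveModel:
    """Toy model of the receive and out_of_order queues and the recv copy loop."""
    rcv_nxt: int = 0                                   # next expected sequence number
    receive: list = field(default_factory=list)        # in-order payloads, ready to read
    out_of_order: dict = field(default_factory=dict)   # seq -> payload waiting for a gap

    def on_segment(self, seq, payload):
        if seq != self.rcv_nxt:
            self.out_of_order[seq] = payload           # step 2: S3 arrives before S2
            return
        self._append(payload)                          # steps 1 and 3: expected segment
        # Step 4: after each in-order insert, pull in segments that are now contiguous.
        while self.rcv_nxt in self.out_of_order:
            self._append(self.out_of_order.pop(self.rcv_nxt))

    def _append(self, payload):
        self.receive.append(payload)
        self.rcv_nxt += len(payload)

    def recvmsg(self, length, flags=0):
        """Steps 8 to 10: copy whole messages while they fit into `length` bytes."""
        copied, total, i = [], 0, 0
        while i < len(self.receive) and total + len(self.receive[i]) <= length:
            copied.append(self.receive[i])
            total += len(self.receive[i])
            i += 1
        if not flags & MSG_PEEK:
            del self.receive[:i]                       # consumed messages are freed
        return b"".join(copied)
```

Feeding S1, S3, S2 in that order leaves all three messages in `receive`. A `MSG_PEEK` read leaves them queued. `recvmsg(7)` consumes S1 and S2 and stops before S3, which no longer fits.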

Message Reception Scenario Two

The second image shows the second scenario, which involves the `prequeue`. When the user process calls `recv`, there are no messages in any of the socket’s queues, and because the socket is blocking, the process sleeps. The operating system then receives a message, and this is where the `prequeue` comes into play. In this scenario, `tcp_low_latency` keeps its default value of 0, the socket’s `SO_RCVLOWAT` keeps its default of 1, and the socket is still blocking, as shown in the figure below.

Here, steps 1, 2, and 3 are handled the same as before. We will start from step four.

4) The `receive`, `prequeue`, and `backlog` queues are all empty, so no bytes have been copied to user memory. However, the socket’s `SO_RCVLOWAT` setting requires at least one byte to be copied, so the process enters the blocking wait. The maximum wait time is given by `SO_RCVTIMEO`. Before waiting, the process releases the socket lock, so newly arriving messages no longer have to go into the `backlog` queue.

5) S1 arrives and is added to the `prequeue`.

6) Inserting S1 into the `prequeue` wakes the process sleeping on the socket.

7) The awakened user process re-acquires the socket lock. From now on, any incoming messages can only enter the `backlog` queue.

8) The process first checks the `receive` queue, which is still empty, and then the `prequeue`, where it finds S1. S1 has exactly the expected sequence number, so it is copied from the `prequeue` to user memory and freed in kernel space.

9) S1 has now been copied to user memory. The process checks whether the number of copied bytes reaches the minimum threshold, which is the smaller of len and `SO_RCVLOWAT`.

10) Since `SO_RCVLOWAT` is at its default of 1, the number of copied bytes meets the threshold, and the process prepares to return to user space. Before that, it checks whether the `backlog` queue holds any data. It does not, so the socket lock is released.

11) It returns the number of bytes already copied to the user.
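From user space the `prequeue` is invisible. All a program can observe is that a blocking `recv` sleeps until data arrives (steps 4 to 11) or until `SO_RCVTIMEO` expires. The Python sketch below shows both outcomes on a loopback connection. The helper name is hypothetical, and packing `SO_RCVTIMEO` as two native longs assumes the 64-bit Linux `struct timeval` layout. Note also that mainline Linux removed prequeue support in version 4.14, and `tcp_low_latency` no longer has any effect there, so this scenario describes older kernels.

```python
import errno
import socket
import struct
import threading
import time


def blocking_recv_with_timeout(send_after, timeout_s):
    """Blocking recv() on a loopback TCP connection with SO_RCVTIMEO set.

    send_after: seconds before the peer sends b"S1", or None to never send.
    Returns the received bytes, or None if SO_RCVTIMEO expired first.
    """
    listener = socket.create_server(("127.0.0.1", 0))
    client = socket.create_connection(listener.getsockname())
    server, _addr = listener.accept()
    # Assumption: Linux 64-bit layout of struct timeval (two native longs).
    sec = int(timeout_s)
    usec = int((timeout_s - sec) * 1_000_000)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_RCVTIMEO, struct.pack("ll", sec, usec))
    if send_after is not None:
        threading.Timer(send_after, client.sendall, args=(b"S1",)).start()
    try:
        # Nothing queued yet: the process sleeps in the kernel until data arrives.
        return server.recv(1024)
    except OSError as exc:
        if exc.errno in (errno.EAGAIN, errno.EWOULDBLOCK):
            return None  # SO_RCVTIMEO expired with nothing received
        raise
    finally:
        for s in (server, client, listener):
            s.close()
```

`blocking_recv_with_timeout(0.1, 1.0)` should wake up and return `b"S1"`. `blocking_recv_with_timeout(None, 0.3)` should give up after roughly 0.3 seconds and return `None`.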

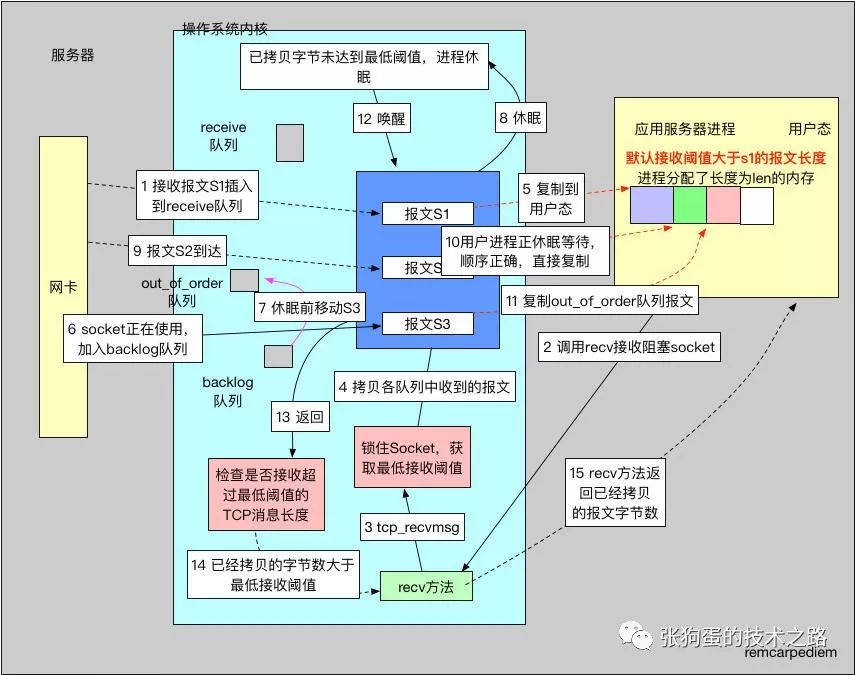

Message Reception Scenario Three

In the third scenario, the system parameter `tcp_low_latency` is set to 1, and the socket’s `SO_RCVLOWAT` attribute is set to a value larger than the first message. The sequence of events is:

- The server first receives message S1, whose length is less than `SO_RCVLOWAT`. The user process calls `recv` and reads some data, but does not reach the minimum threshold, so it sleeps.
- Meanwhile, the out-of-order message S3, which arrives before the process goes to sleep, goes into the `backlog` queue.
- Then S2 arrives. Because `tcp_low_latency` is set, the `prequeue` is not used. S2 begins at the next sequence number to be copied, so it is copied directly to user memory, and the total number of copied bytes now satisfies `SO_RCVLOWAT`.
- Finally, before returning to the user, the kernel also copies S3, which has meanwhile moved to the `out_of_order` queue, to the user.

1) S1 arrives with the expected sequence number and is added directly to the ordered `receive` queue.

2) The system property `tcp_low_latency` is set to 1, indicating that the server wants the program to receive TCP messages promptly. The user calls `recv` on a blocking socket whose `SO_RCVLOWAT` value is larger than the first message, and has allocated a buffer large enough for len.

3) `tcp_recvmsg` is called to do the receiving work, and it first locks the socket.

4) It prepares to process the messages in the kernel’s various receive queues.

5) The `receive` queue holds a message that can be copied directly. Its size is less than len, so it is copied to user memory.

6) Meanwhile, while the socket is locked, the kernel receives message S3, which goes into the `backlog` queue. This message is out of order.

7) In step 5, S1 was copied to user memory, but its size is less than `SO_RCVLOWAT`. Because the socket is blocking, the user process must sleep. Before sleeping, it processes the messages in the `backlog` queue. S3 is out of order, so it is moved to the `out_of_order` queue. Only then does the user process go to sleep.

8) The process sleeps until it times out or the `receive` queue becomes non-empty.

9) The kernel receives message S2. Because the `tcp_low_latency` flag is set, the message does not go into the `prequeue` to wait for the process to handle it.

10) S2 is the next message to be received, and a user process is sleeping while waiting for it, so it is copied directly to user memory.

11) After each in-order message is processed, whether it was added to the `receive` queue or copied directly to user memory, the `out_of_order` queue is checked for messages that can now be delivered. S3 is copied to user memory.

12) The user process is awakened.

13) The process checks whether the number of copied bytes reaches `SO_RCVLOWAT` and whether the `backlog` queue is empty. If both conditions hold, it prepares to return.
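The key behavior of scenario three can be observed from Python on Linux: a blocking `recv` does not return until `SO_RCVLOWAT` bytes are available. In this hypothetical helper, the receiver is already blocked before S1 is sent. That differs slightly from step 5 above, where S1 is copied before the process sleeps. With `SO_RCVLOWAT` = 10, the single call should return both chunks together, while the default of 1 returns S1 alone. Exact behavior is platform-dependent, so treat this as a sketch.

```python
import socket
import threading
import time


def first_recv(lowat, chunks, gap=0.2):
    """Return what one blocking recv() sees when `chunks` arrive `gap` seconds apart.

    The receiver sets SO_RCVLOWAT = lowat and is already blocked in recv()
    before the first chunk is sent.
    """
    listener = socket.create_server(("127.0.0.1", 0))
    client = socket.create_connection(listener.getsockname())
    server, _addr = listener.accept()
    server.setsockopt(socket.SOL_SOCKET, socket.SO_RCVLOWAT, lowat)

    def sender():
        for chunk in chunks:
            time.sleep(gap)        # give the receiver time to block first
            client.sendall(chunk)

    t = threading.Thread(target=sender)
    t.start()
    try:
        return server.recv(1024)
    finally:
        t.join()
        for s in (server, client, listener):
            s.close()
```

With `lowat=1`, the call returns as soon as the 4-byte S1 arrives. With `lowat=10`, it keeps sleeping until the 6-byte S2 brings the total to 10 bytes.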

To summarize the functions of the four queues:

- The `receive` queue is the actual reception queue. TCP packets received by the operating system are stored in this queue after inspection and processing.
- The `backlog` queue is the “backup queue”. When the socket is in the user process context (i.e., the user is making a system call on the socket, such as recv), the operating system saves received packets in the `backlog` queue and returns immediately.
- The `prequeue` queue is the “pre-storage queue”. When the socket is not being used by the user process (the user process has called read or recv and gone to sleep), the operating system saves received messages directly in the `prequeue` and returns.
- The `out_of_order` queue is the “out-of-order queue”. It stores out-of-order messages: if the operating system receives a message whose sequence number is not the next one TCP expects, it places the message in the `out_of_order` queue for later processing.
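The four rules can be condensed into one decision function. This Python model follows the receive path as this article describes it, for kernels that still had a prequeue. `route_segment` and its arguments are hypothetical names, not kernel identifiers, and the direct-copy outcome follows step 10 of scenario three.

```python
from enum import Enum


class Dest(Enum):
    BACKLOG = "backlog"
    PREQUEUE = "prequeue"
    OUT_OF_ORDER = "out_of_order"
    RECEIVE = "receive"
    USER_MEMORY = "copied straight to the waiting reader"


def route_segment(seq, rcv_nxt, locked_by_user, reader_sleeping, tcp_low_latency):
    """Where an arriving segment goes, per the four rules summarized in the text.

    A simplified model of the older receive path this article describes;
    the function and argument names are hypothetical, not kernel identifiers.
    """
    if locked_by_user:
        return Dest.BACKLOG          # user is inside a socket call: defer the work
    if reader_sleeping and not tcp_low_latency:
        return Dest.PREQUEUE         # scenario two: the woken reader processes it
    if seq != rcv_nxt:
        return Dest.OUT_OF_ORDER     # a gap exists: hold it until the gap is filled
    if reader_sleeping:
        return Dest.USER_MEMORY      # scenario three: tcp_low_latency = 1
    return Dest.RECEIVE              # scenario one: the next expected bytes
```

The checks are ordered from the socket’s state to the segment’s sequence number. Whether the lock is held, or a reader is asleep, decides the destination before ordering is even considered.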

References

- http://www.voidcn.com/article/p-gzmjmmna-dn.html
- https://blog.csdn.net/russell_tao/article/details/9950615
- https://ylgrgyq.github.io/2017/08/01/linux-receive-packet-3/
