Click “Read the original text” to view Liang Xu’s original video.

Drawing on operating-system principles and hands-on code, this article explains the following:

What are processes, threads, and coroutines?

What is their relationship?

Why is multi-threading in Python considered pseudo-multi-threading?

How should you choose among them for different application scenarios?

…

What is a Process

A process is an abstraction provided by the operating system and is the basic unit of resource allocation and scheduling. It is the foundation of the operating system's structure. A program is a description of instructions, data, and how they are organized, while a process is a running instance of a program. A program by itself has no lifecycle; it exists only as instructions on disk. Once a program runs, it becomes a process.
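You can see the program/process distinction from Python itself. The interpreter binary on disk is one program, but every launch of it produces a new process with its own process ID (PID). The following is a minimal sketch that uses only the standard `os`, `subprocess`, and `sys` modules; the `CHILD_CMD`, `children`, and `child_pids` names are illustrative.

```python
import os
import subprocess
import sys

# One program on disk: the Python interpreter itself (sys.executable).
# Each child just reports the PID the operating system assigned to it.
CHILD_CMD = [sys.executable, "-c", "import os; print(os.getpid())"]

# Start both runs of the same program before collecting either result, so both
# PIDs are still held (running or not yet reaped) and cannot be reused.
children = [subprocess.Popen(CHILD_CMD, stdout=subprocess.PIPE, text=True) for _ in range(2)]
child_pids = [int(child.communicate()[0].strip()) for child in children]

parent_pid = os.getpid()
print("parent process:", parent_pid)
print("child processes:", child_pids)
```

Both children are the same program, yet each runs as a separate process with a distinct PID.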

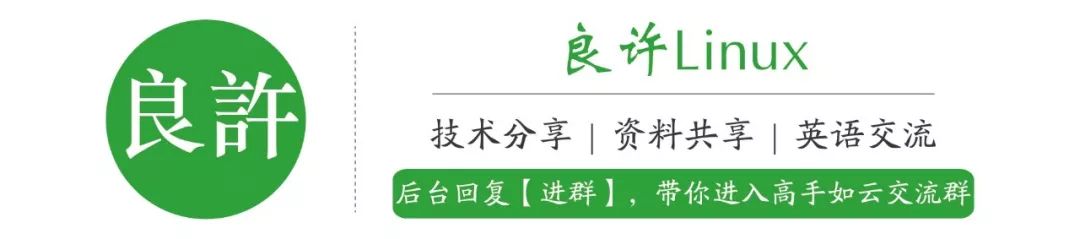

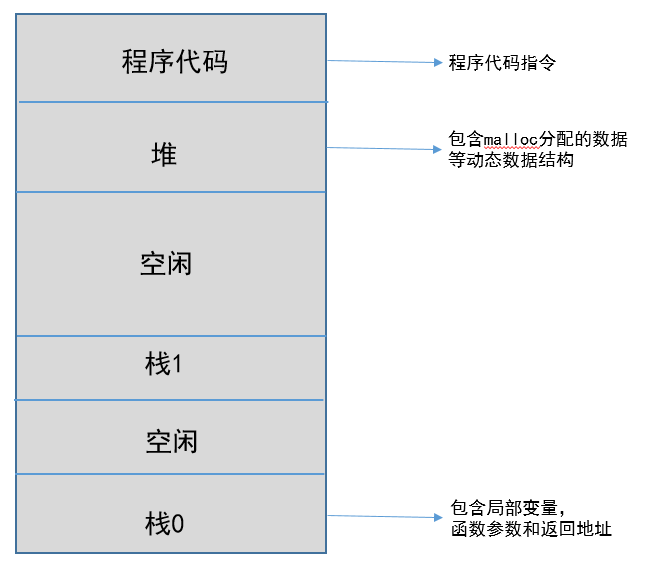

When a program needs to run, the operating system loads its code and all static data into memory, placing them in the address space of the new process (each process has its own address space, as shown in the figure). It then creates and initializes the stack (which holds local variables, function parameters, and return addresses), allocates heap memory, and performs I/O-related setup. When this preparation is complete, the operating system transfers control of the CPU to the newly created process, and the process begins to run.

The operating system controls and manages processes through the PCB (Process Control Block). A PCB is usually a contiguous region of system memory. It stores everything the operating system needs to describe a process and control its execution: the process ID, process state, priority, file-system pointers, the saved contents of the CPU registers, and so on. A process's PCB is the only entity through which the system perceives that the process exists.
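The following toy model shows the role a PCB plays. It is an illustration, not the layout of any real kernel. The `PCB` fields, the `process_table` dictionary, and the `create_process`/`destroy_process` helpers are all hypothetical. The point is that the "kernel" knows about a process only through its PCB entry.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class PCB:
    """Toy Process Control Block; the fields mirror the list in the text."""
    pid: int                                                 # process ID
    state: str = "initial"                                   # process state
    priority: int = 0                                        # process priority
    open_files: List[str] = field(default_factory=list)      # stand-in for file-system pointers
    registers: Dict[str, int] = field(default_factory=dict)  # saved register contents

# The hypothetical "kernel" tracks every process through a PID -> PCB table.
process_table: Dict[int, PCB] = {}

def create_process(pid: int, priority: int = 0) -> PCB:
    pcb = PCB(pid=pid, priority=priority)
    process_table[pid] = pcb
    return pcb

def destroy_process(pid: int) -> None:
    # Once the PCB is gone, the system no longer perceives the process at all.
    del process_table[pid]

pcb = create_process(42, priority=5)
pcb.registers["pc"] = 0x400000   # e.g. a saved program counter
print(process_table[42])
destroy_process(42)
print(42 in process_table)       # False
```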

A process has at least five basic states: initial state, executing state, waiting (blocked) state, ready state, and terminated state.

- Initial state: The process has just been created and cannot execute yet because other processes are occupying the CPU.
- Executing state: The process is running on the CPU. At any moment, at most one process can be executing on each CPU core.
- Ready state: The process is ready to run and is waiting for the CPU. Only processes in the ready state can be scheduled into the executing state.
- Waiting state: The process is waiting for an event to complete, such as an I/O operation.
- Terminated state: The process has ended.
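These five states and the legal moves between them form a small state machine. The sketch below encodes one common textbook arrangement. The transition table is an assumption consistent with the list above; in particular, it enforces that only a ready process can move to executing. The `ProcState` enum and the `move` helper are hypothetical names.

```python
from enum import Enum, auto

class ProcState(Enum):
    INITIAL = auto()
    READY = auto()
    EXECUTING = auto()
    WAITING = auto()
    TERMINATED = auto()

# Legal moves in the five-state model (a common textbook arrangement).
TRANSITIONS = {
    ProcState.INITIAL: {ProcState.READY},                 # admitted by the OS
    ProcState.READY: {ProcState.EXECUTING},               # picked by the scheduler
    ProcState.EXECUTING: {ProcState.READY,                # time slice used up
                          ProcState.WAITING,              # waits for an event such as I/O
                          ProcState.TERMINATED},          # finished
    ProcState.WAITING: {ProcState.READY},                 # the awaited event completed
    ProcState.TERMINATED: set(),
}

def move(current: ProcState, target: ProcState) -> ProcState:
    """Return the new state, or raise if the model forbids the move."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {target.name}")
    return target

# A typical lifecycle: created, scheduled, blocks on I/O, rescheduled, exits.
state = ProcState.INITIAL
for nxt in (ProcState.READY, ProcState.EXECUTING, ProcState.WAITING,
            ProcState.READY, ProcState.EXECUTING, ProcState.TERMINATED):
    state = move(state, nxt)
print(state.name)  # TERMINATED
```

A waiting process cannot jump straight back onto the CPU. `move(ProcState.WAITING, ProcState.EXECUTING)` raises, because the process must first become ready.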

Process Switching

In both single-core and multi-core systems, a CPU appears to execute multiple processes concurrently. This illusion is created by switching between processes.

The mechanism by which the operating system passes control of the CPU from one process to another is called context switching. It saves the context of the current process, restores the context of the new process, and then transfers control of the CPU to the new process, which resumes from where it last stopped. In this way, processes take turns using the shared CPU, and a scheduling algorithm decides when to stop one process and serve another.
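The toy simulation below shows what "taking turns" means on a single CPU. Round-robin is used here only as one example of a scheduling algorithm, and the `Task` class and `round_robin` function are hypothetical. Each task's `pc` field stands in for its saved context. When a task's time slice (`quantum`) runs out, it goes to the back of the ready queue and later resumes where it stopped.

```python
from collections import deque
from dataclasses import dataclass
from typing import List

@dataclass
class Task:
    name: str
    remaining: int   # units of CPU work still needed
    pc: int = 0      # stand-in for the saved context: how far the task has progressed

def round_robin(tasks: List[Task], quantum: int) -> List[str]:
    """Simulate one CPU shared by several tasks and return the execution timeline."""
    ready = deque(tasks)
    timeline: List[str] = []
    while ready:
        task = ready.popleft()               # "restore" this task's context
        run = min(quantum, task.remaining)
        for _ in range(run):
            timeline.append(task.name)
            task.pc += 1                     # continues from where it last stopped
        task.remaining -= run
        if task.remaining > 0:
            ready.append(task)               # "save" context; back of the ready queue
    return timeline

tasks = [Task("A", 3), Task("B", 2)]
timeline = round_robin(tasks, quantum=2)
print("".join(timeline))  # AABBA
```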

-

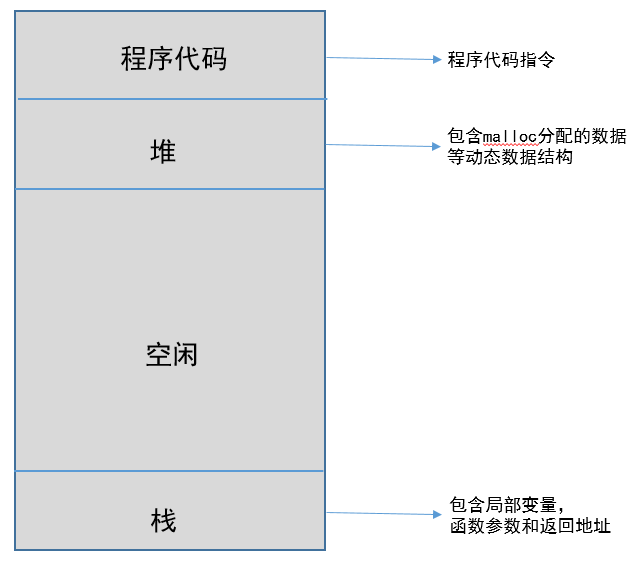

Single-core CPU with two processes

Processes directly switch mechanisms and handle I/O interruptions, taking turns using CPU resources.

-

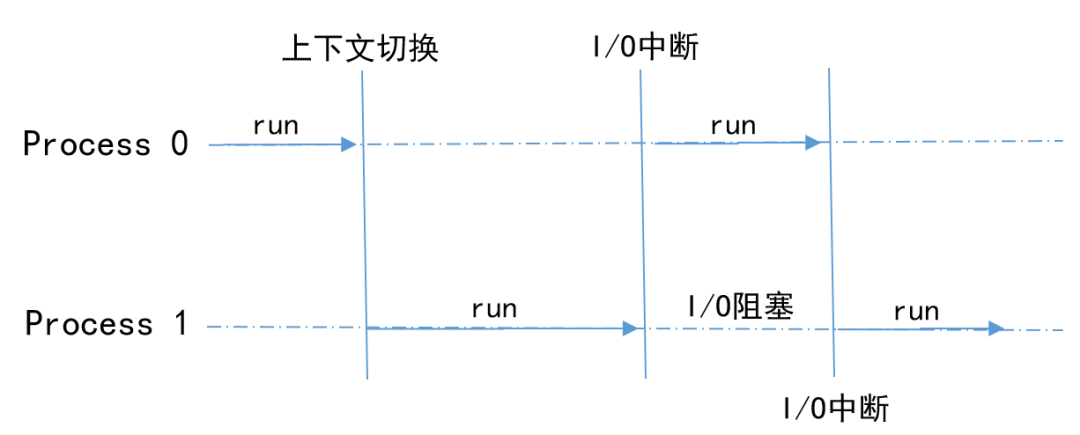

Dual-core CPU with two processes

Each process occupies a CPU core resource, while the CPU is in a blocked state when processing I/O requests.

Data Sharing Between Processes

Processes in the system share the CPU and main memory. To manage main memory better, the system provides an abstraction of it called virtual memory (VM), which gives each process the illusion that it has exclusive use of main memory.

Virtual memory mainly provides three capabilities:

- It treats main memory as a cache for an address space stored on disk. Only the active areas are kept in main memory, and data is transferred between disk and main memory as needed, so main memory is used more efficiently.
- It provides each process with a uniform address space, which simplifies memory management.
- It protects each process's address space from being corrupted by other processes.

Each process has its own exclusive virtual address space, and the CPU translates virtual addresses into physical addresses. Because a process can access only its own address space, processes cannot share data without an additional mechanism such as inter-process communication (IPC).

For example, consider the following test using multiprocessing in Python:

```python
import multiprocessing
import threading
import time

n = 0


def count(num):
    global n
    for i in range(100000):
        n += i
    print("Process {0}:n={1},id(n)={2}".format(num, n, id(n)))


if __name__ == '__main__':
    start_time = time.time()
    process = list()
    for i in range(5):
        p = multiprocessing.Process(target=count, args=(i,))  # Test multi-process usage
        # p = threading.Thread(target=count, args=(i,))  # Test multi-thread usage
        process.append(p)
    for p in process:
        p.start()
    for p in process:
        p.join()
    print("Main:n={0},id(n)={1}".format(n, id(n)))
    end_time = time.time()
    print("Total time:{0}".format(end_time - start_time))
```

Results

```
Process 1:n=4999950000,id(n)=139854202072440
Process 0:n=4999950000,id(n)=139854329146064
Process 2:n=4999950000,id(n)=139854202072400
Process 4:n=4999950000,id(n)=139854201618960
Process 3:n=4999950000,id(n)=139854202069320
Main:n=0,id(n)=9462720
Total time:0.03138256072998047
```

Each of the child processes p{0,1,2,3,4} and the main process (main) has its own copy of the variable n in its own address space. Every child ends with n=4999950000, while n in the main process is still 0.
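To get a child's result back into the main process, you need an IPC mechanism. The sketch below uses a pipe, specifically the child's standard output captured by `subprocess.run`, as one simple option. The child repeats the loop from `count()` in its own address space and writes the result to the pipe. `CHILD_CODE` and `sum_in_child` are hypothetical names, and a pipe is only one of several IPC choices.

```python
import subprocess
import sys

# Child program: the same loop as count(), but it writes the result to stdout.
CHILD_CODE = """
import sys
n = 0
for i in range(100000):
    n += i
sys.stdout.write(str(n))
"""

def sum_in_child() -> int:
    # The child runs in its own address space; its result reaches us only
    # through the pipe connected to its standard output.
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout)

n = 0
n = sum_in_child()
print("Main:n={0}".format(n))  # Main:n=4999950000
```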

What is a Thread

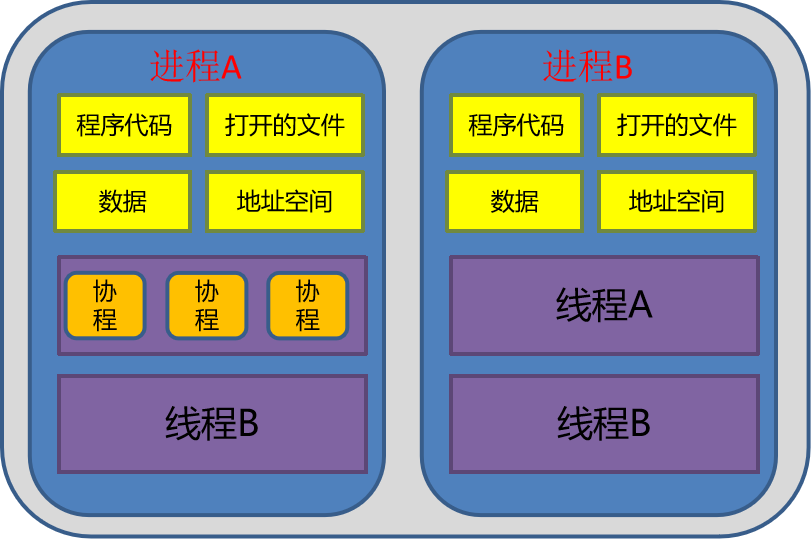

A thread is also an abstraction provided by the operating system. It is a single sequential flow of control within a program, the smallest unit of program execution, and the basic unit of processor scheduling and dispatch. A process can have one or more threads, and all threads in the same process share that process's system resources, such as its virtual address space, file descriptors, and signal handlers. However, each thread in a process has its own call stack and thread-local storage (as shown in the figure).
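In Python, both halves of that picture can be seen directly. A module-level list lives in the process's address space and is visible to every thread. A `threading.local()` object gives each thread its own independent attributes, which is Python's form of thread-local storage. The `shared`, `local`, and `worker` names below are illustrative.

```python
import threading

shared = []                  # in the process's address space: visible to all threads
local = threading.local()    # thread-local storage: each thread has its own attributes

def worker(tag: str) -> None:
    local.tag = tag              # only this thread can see this value
    shared.append(local.tag)     # every thread appends to the same list

threads = [threading.Thread(target=worker, args=(t,)) for t in ("a", "b", "c")]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared))           # ['a', 'b', 'c']
print(hasattr(local, "tag"))    # False: the main thread never set its own 'tag'
```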

Just as the system uses the PCB to control and manage processes, it allocates a thread control block (TCB) for each thread and records in it all the information needed to control and manage that thread. A TCB usually includes:

- Thread identifier
- A set of registers
- Thread execution state
- Priority
- Thread-specific storage
- Signal mask
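The thread identifier from the list above is visible from Python. `threading.get_ident()` returns Python's identifier for the current thread, and `threading.get_native_id()` (Python 3.8+) returns the ID the operating system assigned to it. In the sketch below, each worker records both values. The `ids` dictionary and the `record_ids` helper are hypothetical. A barrier keeps all workers alive at the same time, because identifiers may be recycled after a thread exits.

```python
import threading

ids = {}                         # thread name -> (Python ident, OS-level native ID)
ids_lock = threading.Lock()
barrier = threading.Barrier(3)   # keeps all three workers alive at the same time

def record_ids() -> None:
    name = threading.current_thread().name
    with ids_lock:
        ids[name] = (threading.get_ident(), threading.get_native_id())
    # Identifiers may be recycled once a thread exits, so wait until every
    # worker has recorded its own before any of them finishes.
    barrier.wait()

workers = [threading.Thread(target=record_ids, name=f"worker-{i}") for i in range(3)]
for w in workers:
    w.start()
for w in workers:
    w.join()

for name, (ident, native_id) in sorted(ids.items()):
    print(name, ident, native_id)
```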

Like processes, threads have five states: initial, executing, waiting (blocked), ready, and terminated. Switching between threads also requires a context switch, as with processes, so we will not repeat the details here.

There are many similarities between processes and threads, so what are the differences?

Process VS Thread

- A process is an independent unit of resource allocation and scheduling with its own complete virtual address space, so switching between processes also means switching between different virtual address spaces. Multiple threads in the same process, by contrast, share a single address space.
- A thread is the basic unit of CPU scheduling, and a process contains one or more threads.
- Threads are lighter-weight than processes and generally do not own system resources of their own. Creating and destroying a thread takes much less time than creating and destroying a process.
- Because threads share an address space, synchronization and mutual exclusion must be taken into account.
- An unexpected termination of a thread can disrupt the entire process, whereas an unexpected termination of a process does not affect other processes. Multi-process programs are therefore safer.
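The synchronization point above can be made concrete. `counter += 1` is a read-modify-write on memory that all threads share, so the sketch below guards it with a `threading.Lock` to make it a critical section. Without the lock, the final value is not guaranteed. The `counter`, `counter_lock`, and `add` names are illustrative.

```python
import threading

counter = 0                    # shared: lives in the process's address space
counter_lock = threading.Lock()

def add(times: int) -> None:
    global counter
    for _ in range(times):
        # The lock makes this read-modify-write a critical section:
        # only one thread at a time can update the counter.
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=add, args=(100_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 400000
```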

In summary, multi-process programs are safer, but process switching has high overhead and low efficiency; multi-thread programs have high maintenance costs, but thread switching has low overhead and high efficiency. (Python’s multi-threading is pseudo-multi-threading, which will be detailed later.)

What is a Coroutine

A coroutine (also known as a micro-thread) is even lighter-weight than a thread. Coroutines are not managed by the operating system kernel; they are controlled entirely by the program. The relationship between coroutines, threads, and processes is shown in the figure below.

- A coroutine resembles a subroutine, except that it can be suspended partway through execution so that another coroutine can run, and later resumed from where it left off. Switching between coroutines requires no system calls or blocking calls.
- Coroutines run within a single thread, and switching between them happens in user mode. Blocking a thread, by contrast, is handled by the operating system kernel in kernel mode. Coroutines therefore avoid the overhead of creating and switching threads.
- Because only one coroutine runs at a time within a thread, coroutines cannot conflict by writing shared variables simultaneously. Synchronization primitives such as mutexes and semaphores are therefore not needed to guard critical sections, and no operating-system support is required.
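A plain Python generator behaves as a simple coroutine. It suspends at each `yield` and resumes when `send()` is called, all within one thread and without any lock. The producer/consumer sketch below shows control passing back and forth. `consumer`, `producer`, and `log` are hypothetical names.

```python
log = []   # written by both sides; no lock needed since only one runs at a time

def consumer():
    """Generator-based coroutine that keeps a running total of received items."""
    total = 0
    while True:
        item = yield total          # suspend here until the producer sends an item
        log.append(f"consume {item}")
        total += item

def producer(c, items):
    total = 0
    next(c)                         # prime: run the consumer up to its first yield
    for item in items:
        log.append(f"produce {item}")
        total = c.send(item)        # switch to the consumer; resume at its next yield
    c.close()                       # finish the coroutine
    return total

result = producer(consumer(), [1, 2, 3])
print(log)
print(result)  # 6
```

The log alternates `produce`/`consume` entries, which shows the two routines handing control to each other in user code rather than being switched by the kernel.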

Coroutines suit scenarios that involve blocking I/O and require high concurrency. When a coroutine hits a blocking I/O operation, the coroutine scheduler suspends it by yielding control and saving its current stack state, and then runs another coroutine. Once the I/O completes, the scheduler restores the saved stack on the thread and resumes the coroutine with the result.
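Python's `asyncio` event loop plays the scheduler role described above. In the sketch below, `asyncio.sleep` stands in for real blocking I/O, which is an assumption made for illustration. At each `await`, the current coroutine is suspended and the loop runs the others. Three 0.2-second waits therefore finish in roughly 0.2 seconds in total rather than 0.6. The `fetch` and `main` names are hypothetical.

```python
import asyncio
import time

async def fetch(name: str, delay: float) -> str:
    # asyncio.sleep simulates waiting on I/O; at this await the coroutine is
    # suspended and the event loop switches to another ready coroutine.
    await asyncio.sleep(delay)
    return f"{name} done"

async def main():
    start = time.perf_counter()
    results = await asyncio.gather(fetch("a", 0.2), fetch("b", 0.2), fetch("c", 0.2))
    elapsed = time.perf_counter() - start
    return results, elapsed

results, elapsed = asyncio.run(main())
print(results)            # ['a done', 'b done', 'c done']
print(f"{elapsed:.2f}s")  # roughly 0.2s, not 0.6s
```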

Next, we will analyze how to choose between processes, threads, and coroutines in Python for different application scenarios.

How to Choose?

Before comparing the differences between the three for different scenarios, we first need to introduce Python’s multi-threading (which has been criticized by programmers as