When discussing the philosophical significance of AI Agents, one cannot overlook the two core paths of organism development: unit enhancement and organizational enhancement. These two paths not only trace the evolution of organisms from simple to complex in nature but also offer a useful lens for understanding the development of artificial intelligence, especially AI Agents.

Unit enhancement refers to the ability of organisms to adapt to their environment by enhancing individual capabilities, such as animals gradually evolving sharper senses, greater strength, or higher intelligence. Organizational enhancement, on the other hand, involves the collaboration among individuals to form more complex social structures and collective intelligence, thereby achieving an overall effectiveness that transcends the capabilities of individual members.

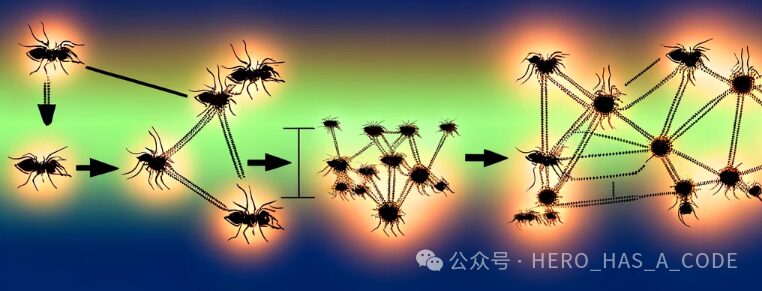

Ant society is a typical example of organizational enhancement. Although the intelligence of a single ant is limited, the colony as a whole can accomplish extremely complex tasks, such as building nests, foraging, and nurturing offspring, through division of labor and cooperation. A larger brain may help an ant perform well in a large society; conversely, a more complex social system and a finer division of labor might lead to the atrophy of individual brains. This could be because cognitive abilities are distributed among many members of the colony, each playing a different role, so individuals do not need to be highly intelligent when they can draw on collective wisdom. An ant’s brain has only about 250,000 neurons; the human brain, in contrast, has about 86 billion.

(https://www.bbc.com/zhongwen/simp/science-61648300)

Curiously, Jeremy DeSilva, an anthropologist at Dartmouth College in the United States, has reported that the human brain has also been shrinking over the past few thousand years, with the reduction in volume roughly equivalent to four ping pong balls. He and his colleagues published an analysis of skull fossils indicating that this shrinkage began about 3,000 years ago, roughly around the time of the invention of writing.

The development of human society benefits from the principles of organizational enhancement. Through language, culture, and technology, we have accumulated and transmitted knowledge and constructed complex social systems. As our collaborative capabilities have increased, we have come to rely more on “external brain” tools such as writing, language, and external knowledge bases to store and transmit knowledge. The shrinkage of the human brain over the past few thousand years may be related to this reliance. Writing allows humans to externalize knowledge, storing it in books, inscriptions, and networks rather than relying solely on the brain’s memory. The brain can then allocate its resources and energy to other cognitive and collaborative abilities.

Moreover, as the division of labor becomes more refined and specialized knowledge systems are established, individuals no longer need to master all knowledge but can rely on the knowledge and skills of other members of society or experts. This combination of division of labor and cooperation not only improves the overall efficiency of human society but also reduces the information-processing burden on each individual’s brain, which may lead to a gradual decrease in brain capacity. However, this does not mean that humans have become “stupid.” On the contrary, with the assistance of collective wisdom and “external brains,” humans can solve more complex problems and create unprecedented civilizational achievements. This phenomenon reflects the power of “organizational enhancement” in the evolution of life: through social cooperation and technological innovation, organisms can achieve higher cognition and civilizational progress more economically.

In the world of AI, previous large models like GPT, while demonstrating astonishing capabilities in text generation and language understanding, resemble a “brain in a jar,” lacking the ability to interact with the external world, and their expressions of intelligence are relatively limited. The emergence of AI Agents provides a new path for the evolution of AI. The core of AI Agents lies in their ability to perceive the environment and execute tasks accordingly, akin to equipping AI with “eyes, ears, and limbs.” This not only allows AI to be applied in a broader range of fields, such as autonomous driving, smart homes, and online customer service, but more importantly, it provides the possibility of organizational enhancement for AI. Through AI Agents, different AI systems can exchange information and coordinate tasks, forming a collective intelligence of AI groups. This group can not only tackle more complex problems but also adapt to the ever-changing environment, with an overall intelligence level and application scope far exceeding that of individual AI models.

From an evolutionary perspective, both natural organisms and artificial intelligence are evolving towards higher levels of intelligence. The philosophical significance of AI Agents is twofold: they signify the evolution of artificial intelligence towards a more advanced form, and they reflect a universal law of organism evolution, namely that intelligent agents achieve synergy and evolve through enhanced organizational capabilities.

As large models become skilled at using Agents as their external brains and tools, they no longer need to blindly pursue growth in parameter scale but can improve efficiency and accuracy by delegating specialized tasks to experts and tools. In this process, AI is no longer just a tool or assistant for humans but becomes an intelligent entity capable of autonomous learning, collaboration, and evolution. Learning how to use and collaborate with AI Agents will become one of the most important abilities for humans in the future.
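The idea of delegating specialized tasks to tools can be illustrated with a minimal sketch. It is not taken from any particular agent framework: the tool names (`add_numbers`, `lookup_capital`), the `TOOLS` registry, and the `model_answer` fallback are all hypothetical stand-ins, and a real system would let the model itself decide which tool to call.

```python
from typing import Callable

Tool = Callable[[str], str]

def add_numbers(payload: str) -> str:
    """Hypothetical arithmetic tool: sums whitespace-separated numbers."""
    return str(sum(float(x) for x in payload.split()))

def lookup_capital(payload: str) -> str:
    """Hypothetical knowledge-base tool backed by a tiny in-memory table."""
    capitals = {"France": "Paris", "Japan": "Tokyo"}
    return capitals.get(payload, "unknown")

def model_answer(payload: str) -> str:
    """Stand-in for the large model answering from its own parameters."""
    return f"[model] {payload}"

# Registry mapping a task kind to the specialized tool that handles it.
TOOLS: dict[str, Tool] = {"sum": add_numbers, "capital": lookup_capital}

def delegate(kind: str, payload: str, fallback: Tool = model_answer) -> str:
    """Route a task to a specialized tool, or to the model if none matches."""
    tool = TOOLS.get(kind, fallback)
    return tool(payload)

results = [
    delegate("sum", "1.5 2.5"),
    delegate("capital", "Japan"),
    delegate("chat", "hello"),
]
```

The point of the sketch is only the routing: the model handles what it must and hands everything else to a cheaper, more accurate specialist.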

The social roles of AI Agents, their interrelationships, and their interactions with humans have become important research topics. Here, we follow the classification and content of the paper “The Rise and Potential of Large Language Model Based Agents: A Survey.”

Paper address: https://arxiv.org/pdf/2309.07864.pdf

Positioning the Social Role of AI Agents

With the rapid development of artificial intelligence technology, the capabilities of intelligent agents are continually expanding and deepening. Modern AI Agents are no longer just cold computer programs; they are beginning to exhibit more “human-like” traits. These highly intelligent Agents can autonomously learn new knowledge, reason to solve problems, and even simulate human emotions and personalities, becoming more approachable and understanding of users.

This evolution is not only a technical breakthrough but also an important step towards AI Agents becoming all-around intelligent partners. For example, through deep learning and natural language processing technologies, AI Agents can understand and simulate human communication styles, engaging in natural and smooth conversations with human users. They can adjust the tone and content of communication based on users’ emotions and preferences, providing more personalized and thoughtful services.

Collaboration Among AI Agents

Division of labor can be adjusted based on the characteristics of the agents and the requirements of the tasks. For instance, in a job involving data analysis and image recognition, the data processing and pattern recognition subtasks can be assigned to agents specialized in these areas, while decision-making and planning subtasks can be assigned to agents with strong decision-making capabilities. This way, each agent can focus on the problems it excels at, improving overall efficiency and performance.
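A minimal sketch of such capability-based division of labor follows. The `Agent` class, the skill names, and the proficiency scores are illustrative assumptions rather than part of the survey; the rule shown (give each task to the agent with the highest proficiency in the required skill) is only one simple way to match tasks to agents.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    name: str
    skills: dict[str, float]  # skill name -> assumed proficiency in [0, 1]
    assigned: list[str] = field(default_factory=list)

def assign_tasks(tasks: dict[str, str], agents: list[Agent]) -> dict[str, str]:
    """Give each task to the agent most proficient in its required skill.

    `tasks` maps a task name to the skill it requires. Ties, including the
    case where no agent has the skill at all, go to the earliest agent.
    """
    assignment: dict[str, str] = {}
    for task, skill in tasks.items():
        best = max(agents, key=lambda a: a.skills.get(skill, 0.0))
        best.assigned.append(task)
        assignment[task] = best.name
    return assignment

agents = [
    Agent("analyst", {"data_processing": 0.9, "pattern_recognition": 0.6}),
    Agent("vision", {"pattern_recognition": 0.95}),
    Agent("planner", {"decision_making": 0.9}),
]
tasks = {
    "clean_dataset": "data_processing",
    "detect_objects": "pattern_recognition",
    "choose_next_step": "decision_making",
}
assignment = assign_tasks(tasks, agents)
```

A production system would also need to account for each agent’s current load and for tasks that require several skills at once; this sketch ignores both.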

The core of collaboration is not just about task allocation and execution but also involves the ability to seek consensus when conflicts arise. Conflicts may occur among agents due to inconsistent goals, limited resources, or differences in understanding; thus, mechanisms for seeking consensus become crucial. Agents can resolve differences through negotiation mechanisms, such as introducing neutral mediating agents, adopting voting systems, or implementing priority rules to ensure that decisions during the cooperation process are both fair and efficient.
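Two of the mechanisms mentioned above, voting and priority rules, can be combined in a small sketch. The function name `resolve_conflict`, the agent names, and the proposals are hypothetical; the sketch assumes each agent casts exactly one vote and that a fixed priority order among agents has been agreed on in advance.

```python
from collections import Counter

def resolve_conflict(votes: dict[str, str], priority: list[str]) -> str:
    """Pick the proposal with the most votes; break ties by agent priority.

    `votes` maps an agent name to the proposal it supports. `priority`
    lists agent names from highest to lowest priority.
    """
    if not votes:
        raise ValueError("no votes were cast")
    counts = Counter(votes.values())
    top = max(counts.values())
    tied = {proposal for proposal, n in counts.items() if n == top}
    if len(tied) == 1:
        return tied.pop()
    # Priority rule: the highest-priority agent backing a tied proposal decides.
    for agent in priority:
        if votes.get(agent) in tied:
            return votes[agent]
    raise ValueError("no prioritized agent voted for a tied proposal")

majority = resolve_conflict(
    {"planner": "route_a", "vision": "route_b", "analyst": "route_a"},
    priority=["vision", "planner", "analyst"],
)
tie_break = resolve_conflict(
    {"planner": "route_a", "vision": "route_b"},
    priority=["vision", "planner"],
)
```

In the first call the majority wins regardless of priority; in the second, the vote is split one to one, so the priority order decides in favor of `vision`.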

To establish trust and security mechanisms, performance-based evaluation systems and historical behavior assessments have been introduced into agent systems, along with encryption and access control technologies to ensure the security of data and interactions. Trust among agents is built on an understanding of each other’s capabilities and historical behaviors, which helps optimize task allocation and decision-making processes. Additionally, through continuous performance monitoring and feedback loops, the system can dynamically adjust collaboration strategies to respond to new challenges and environmental changes.
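As one possible illustration of a performance-based trust mechanism with a feedback loop, the sketch below keeps a trust score per agent as an exponential moving average of task outcomes. The `TrustLedger` class, the smoothing factor `alpha`, and the neutral starting score of 0.5 are assumptions chosen for the example, not a mechanism described in the survey.

```python
class TrustLedger:
    """Tracks a trust score in [0, 1] per agent from its task history."""

    def __init__(self, alpha: float = 0.3, initial: float = 0.5):
        self.alpha = alpha        # weight given to the most recent outcome
        self.initial = initial    # assumed neutral score for unknown agents
        self.scores: dict[str, float] = {}

    def record(self, agent: str, success: bool) -> float:
        """Update an agent's score after a task and return the new value."""
        previous = self.scores.get(agent, self.initial)
        outcome = 1.0 if success else 0.0
        updated = (1 - self.alpha) * previous + self.alpha * outcome
        self.scores[agent] = updated
        return updated

    def most_trusted(self, candidates: list[str]) -> str:
        """Return the candidate with the highest current trust score."""
        return max(candidates, key=lambda a: self.scores.get(a, self.initial))

ledger = TrustLedger(alpha=0.5)
ledger.record("analyst", True)
ledger.record("analyst", True)
ledger.record("vision", False)
preferred = ledger.most_trusted(["analyst", "vision"])
```

Because recent outcomes carry more weight than old ones, an agent that starts failing loses trust quickly, which lets the task allocator shift work away from it.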

In this new era of intelligent collaboration, designing, managing, and optimizing the cooperative relationships among intelligent agents, so that they can grow together in a safe, efficient, and harmonious environment, will be both a significant challenge and a significant opportunity.

The Relationship Between AI Agents and Humans

As individuals increasingly rely on AI Agents, these agents no longer appear merely as tools or assistants but gradually evolve into digital avatars of individuals. They begin to interact, make decisions, and learn on behalf of individuals in the virtual world, developing a deep understanding of users’ preferences, anticipating their needs, and executing specific tasks, bringing profound changes to human life and work. Over time, through continuous learning and interaction, these AI Agents become more and more like their owners, and thus become a digital extension of the individual. However, the accompanying challenges of privacy, ethics, and emotional connection also need to be addressed to ensure that technological development preserves humanistic values.

Agent Society: From Individuals to Society

The introduction of agent personalities adds diversity to society, but care must also be taken to avoid group bias or antagonism. Through reasonable regulation and supervision, agents can be guided to develop along rational and friendly paths. The environments in which agents operate range from abstract text-based scenarios, to sandbox environments closer to reality, and finally to the challenging real physical world, with each stage increasing the complexity and practical value of the simulated society. These environments are designed to teach agents to adapt and act in various situations while remaining safe and controllable.

By establishing strict regulatory mechanisms, agent society simulations not only reveal the importance of organization but also emphasize the role of checks and balances in maintaining social harmony. However, insights gained from these simulations are not always directly applicable to human society. We must carefully discern which lessons are transferable and which may lead to unintended negative effects. In exploring agent society, ethical and social risks cannot be overlooked if technological advancement is also to be a significant step forward for human civilization.