AI, including generative AI, is migrating to edge devices at an unprecedented pace, transitioning from the initial exploration of AIoT to a true era of edge AI. This shift is causing a seismic change in the embedded industry.

How hot is edge AI? At the embedded exhibition, all manufacturers introduced their edge AI-related products. Let’s take a look at the current status of industry leaders such as STMicroelectronics, Infineon, NXP, and Texas Instruments.

STMicroelectronics

Remi El-Ouazzane, Director of MCU, Digital IC, and RF Product Lines at STMicroelectronics, said that ST brought approximately 50 edge AI demos to the embedded exhibition to support in-depth conversations with developers. “In fact, over the two days, I had in-depth discussions with R&D managers and developers from companies of various sizes, some with only 10 people and others with tens of thousands, which was very fulfilling for me.”

El-Ouazzane emphasized that AI is no longer a passing trend; it has become the core of edge nodes. “Our customers can be divided into three categories: the first category wants to replace physical sensors with AI to reduce material costs; the second category has been using random models for a long time and is now improving inference accuracy through probabilistic models; the third category is exploring new AI application scenarios by leveraging the low power consumption and low cost of microcontrollers. These trends were just budding three years ago but have now become mainstream.”

At the embedded exhibition, El-Ouazzane attended dozens of meetings, and AI came up in every one. He cited the example of a company focused on energy-harvesting sensors that, despite a tight energy budget, is exploring how to optimize sensor performance using convolutional models. Such applications are already deployed in the industrial sector, with many cases available on ST’s official website.

Similar developments are under way in the automotive industry, where AI is gradually expanding from autonomous driving (which relies on high-performance computing) to zonal controllers, microcontrollers, and other edge devices. ST’s automotive AI scenarios focus on “non-sexy but critical” applications, such as predicting tire leaks, data center fan failures, license plate recognition, overheating warnings for autonomous vehicle motors, and thermal runaway risk monitoring in battery management systems. These applications may not be eye-catching, but they are crucial for customers.

In terms of market size, annual shipments of general-purpose microcontrollers are about 10 billion units. Although the value of each unit is far lower than that of a data center GPU, within five years deployed edge AI devices will form the largest group of smart terminals globally.

Asked how ST can win the AI race, El-Ouazzane said that the key lies in the quality of the developer ecosystem. ST not only optimizes development tools (such as IDE features and logging mechanisms) but also uses AI to improve developer efficiency. For example, ST trained a generative AI model on STM32 products that can quickly answer developer inquiries, reducing manual intervention. ST also assesses developer satisfaction monthly to continuously improve the toolchain and community services.

“AI is not only driving technological innovation but also reshaping business models. For instance, a company developed a fan for ultra-large-scale data centers that avoids downtime due to cooling issues through predictive maintenance, thereby changing the collaboration model with customers. In the industrial sector, traditional companies are achieving digital transformation by connecting smart devices to the cloud, a process that is challenging but full of potential,” El-Ouazzane said.

At the embedded exhibition, ST showcased a video capture and computation process for a foosball system using the STM32MP2 microprocessor.

Infineon

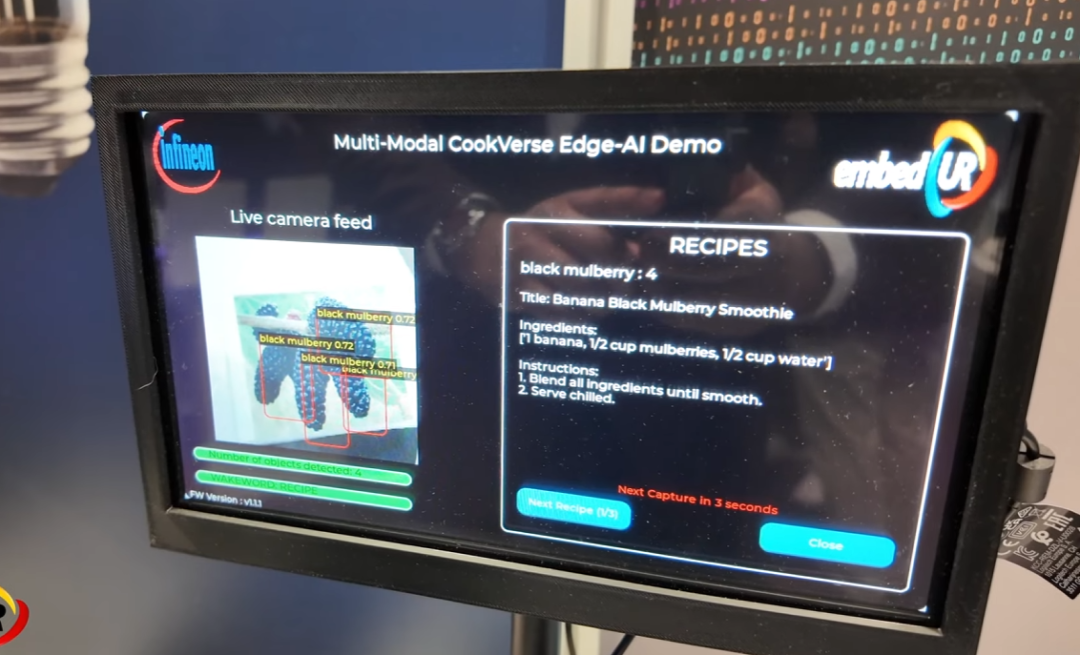

Recently, Sam Geha, Executive Vice President of IoT Computing and Wireless Business at Infineon, discussed Infineon’s opportunities in edge AI on an Infineon podcast.

Geha believes that the biggest challenge for edge AI today is ecosystem development. AIoT customers in particular often lack data scientists and model optimization experts. Infineon therefore developed the DEEPCRAFT Studio tool, which lets developers upload their own data, use pre-trained models, or build models from scratch. Hardware design also needs innovation: microcontrollers (MCUs) must support large-scale data collection, sensor fusion, and machine learning (ML) model execution. For example, Infineon’s latest generation of PSoC Edge MCUs integrates dedicated AI accelerators, achieving performance comparable to microprocessors (MPUs) at lower cost and power consumption.

Geha explained that Infineon is accelerating customer AI deployment on three levels: first, an end-to-end development platform, DEEPCRAFT Studio, that simplifies the AI toolchain; second, a library of pre-trained DEEPCRAFT models that customers can use directly or obtain through third parties instead of developing their own; and third, security enhancements that keep models and data from being stolen.

Infineon’s PSoC Edge is the world’s first AI microcontroller (MCU) to achieve PSA Level 4 security certification. To meet customer demand for different levels of AI performance, Infineon has also launched a range of products that integrate either Infineon’s self-developed NPUs or the Arm Ethos-U55, so they can be matched to different scenarios.

Geha highlighted several important edge AI use cases: industrial predictive maintenance (using AI to analyze motor vibration data to avoid downtime); smart home optimization (automatically adjusting drying time based on clothing humidity); secure authentication (replacing traditional access cards with facial recognition or voice verification); and wearable devices (real-time health monitoring, such as snoring detection).

“Our goal is to reduce the cost of AI solutions from $20 to $2 and support portable devices through low-power designs,” Geha said.

Geha predicts that three major trends in AI are forming: first, the popularization of edge AI, with low latency, high efficiency, and high security becoming standard for the next generation of IoT devices; second, an explosion of applications, as fields such as smart homes, healthcare, and industrial automation accelerate AI integration; and third, an open ecosystem, in which Infineon builds its own tools and platforms while also actively participating in third-party ecosystems to broaden the reach of and participation in AI.
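The predictive-maintenance use case Geha mentions (analyzing motor vibration data to avoid downtime) can be illustrated with a minimal sketch. This is not Infineon’s method or any DEEPCRAFT API: it assumes a simple statistical baseline (RMS amplitude with a z-score threshold) in place of a trained ML model, and the function names, threshold, and synthetic sine-wave data are all hypothetical.

```python
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def fit_baseline(healthy_windows):
    """Learn the mean and standard deviation of RMS from known-healthy data."""
    values = [rms(w) for w in healthy_windows]
    return statistics.mean(values), statistics.stdev(values)


def is_anomalous(window, baseline, z_threshold=3.0):
    """Flag a window whose RMS is more than z_threshold sigmas from baseline."""
    mean, std = baseline
    return abs(rms(window) - mean) > z_threshold * std


def sine_window(amplitude, n=256, cycles=8):
    """Synthetic stand-in for accelerometer samples (hypothetical data)."""
    return [amplitude * math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


# Ten healthy recordings with slightly varying amplitude (assumed values).
healthy = [sine_window(1.0 + 0.01 * k) for k in range(10)]
baseline = fit_baseline(healthy)

normal_flag = is_anomalous(sine_window(1.02), baseline)  # within healthy range
fault_flag = is_anomalous(sine_window(2.5), baseline)    # much stronger vibration
print(normal_flag, fault_flag)
```

A production system would typically replace the RMS feature with spectral features or a small neural network running on the MCU’s accelerator, but the structure (learn a healthy baseline, then flag deviations locally) stays the same.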

Using Infineon’s PSoC Edge to achieve edge AI detection in smart kitchens.

NXP

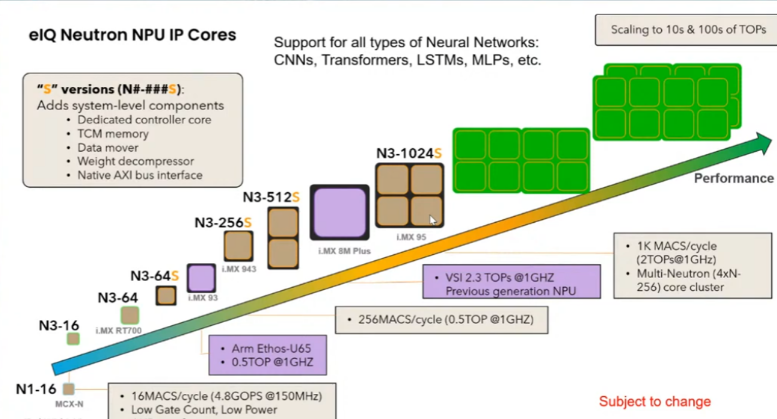

Davis Sawyer, AI Product Manager at NXP, presented NXP’s view of edge AI, particularly its software strategy, in a talk titled “AI Born at the Edge, Built on NXP.”

Hardware remains the foundation, so NXP has launched a scalable range of products to meet different AI needs.

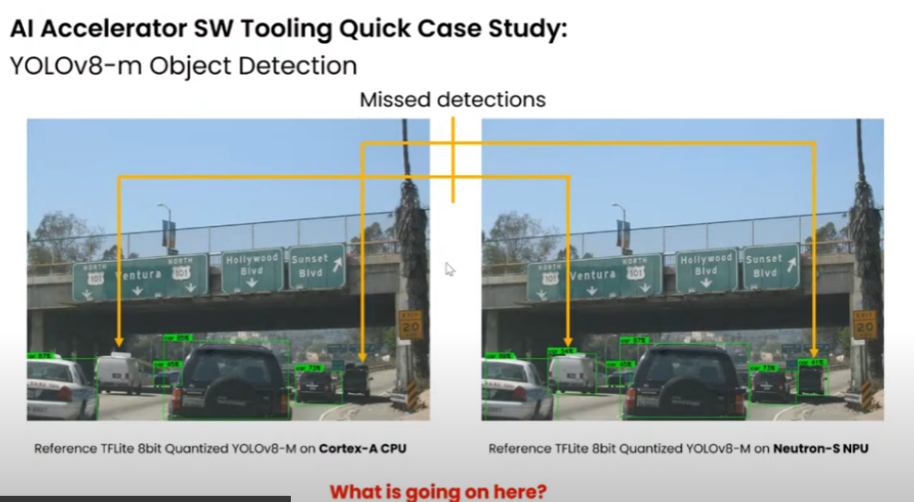

NXP’s self-developed eIQ Neutron NPU IP scales from GOPS-class to several TOPS of computing power, and NPUs with higher computing power are planned.

When running YOLOv8 object detection, NXP’s NPU identifies vehicles better than a Cortex-A core does, outperforming CPU-only computation.

Sawyer emphasized that edge AI is not a simple port of cloud models but requires native solutions tailored to device characteristics. First, edge devices are diverse, ranging from microcontrollers in home appliances to industrial gateways, so models must be ported carefully; NXP covers the full range from low-power MCUs to high-performance MPUs. Second, the AI lifecycle needs to be restructured, with centralized training and local inference evolving in tandem. As data improves, algorithms and models must keep changing, and the boundary between training and inference will blur further. Models themselves keep changing, from AlexNet (2012) to today’s LLMs, and edge-native innovations are evolving too, with TinyML and agentic workflows gaining popularity.

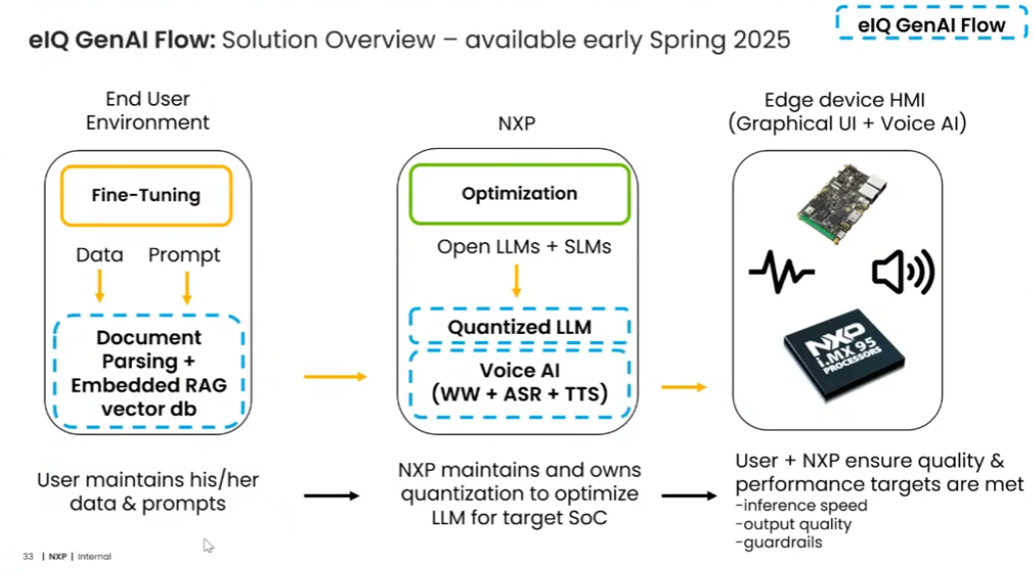

NXP supports the full edge AI workflow through its eIQ software stack, which enables hardware-software co-design. NXP provides a set of toolchains and ecosystem components, such as Time-Series Studio, model optimization, and security features (such as model IP protection). Development is modular, supporting custom model and data imports and compatibility with mainstream frameworks such as TensorFlow Lite and PyTorch.

eIQ Time-Series Studio is an NXP development tool for industrial monitoring, battery management, predictive maintenance, and similar applications. The entire workflow, from data collection through model training, optimization, and deployment (supporting the Kinetis, MCX, and i.MX RT series), runs on a unified software platform. Models can be generated and validated with one click, lowering the barrier to AI development. NXP’s software also supports custom data formats (such as unlabeled sensor data).

Sawyer also presented NXP’s work on edge generative AI, focusing on RAG (Retrieval-Augmented Generation). Compared with cloud-based generative AI, edge generative AI can draw on local knowledge bases and avoid data leakage, which helps with compliance with EU AI regulations. NXP’s eIQ GenAI Flow enables low-latency voice control (for example, in smart homes and medical devices), with response speeds three times faster than in 2024.
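The RAG idea described above (retrieve from a local knowledge base, then hand the retrieved text to a generative model) can be sketched in a few lines. This is an illustrative toy, not NXP’s eIQ GenAI Flow: it assumes bag-of-words cosine similarity in place of learned embeddings, stops at building the prompt rather than calling a model, and the knowledge-base sentences and function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents):
    """Assemble the prompt a local generative model would receive."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical on-device knowledge base; it never leaves the device.
KNOWLEDGE_BASE = [
    "The oven preheats to 180 degrees Celsius in about ten minutes.",
    "The air conditioner filter should be cleaned every two weeks.",
    "The dishwasher eco program uses less water and runs for three hours.",
]

query = "How often should I clean the air conditioner filter?"
top = retrieve(query, KNOWLEDGE_BASE)
prompt = build_prompt(query, KNOWLEDGE_BASE)
print(prompt)
```

Because both the knowledge base and the retrieval step stay on the device, only the locally assembled prompt reaches the model, which is the data-leakage argument made for edge deployment.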

Sawyer also introduced multimodal fusion technology, which combines voice, visual, and sensor data to create smarter human-computer interactions (such as contactless medical monitoring).

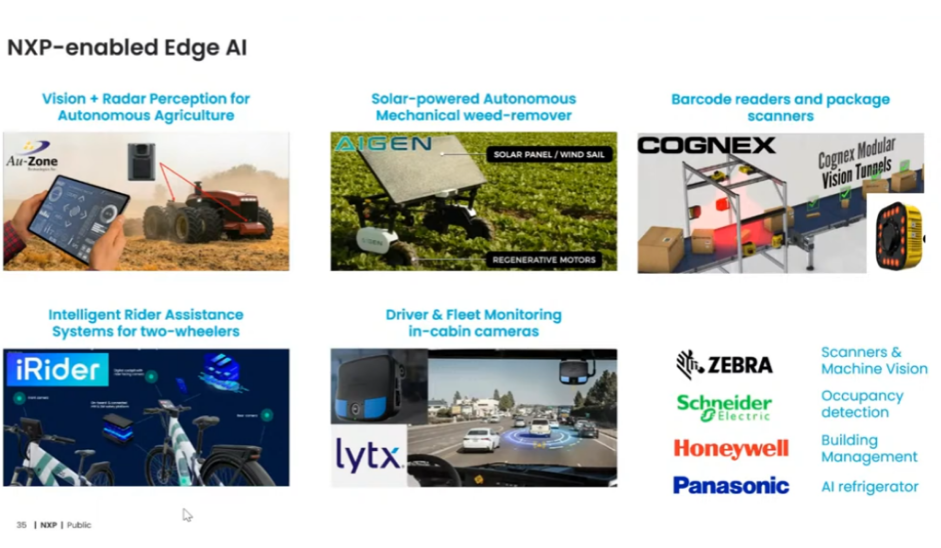

During the customer showcase session, Sawyer presented numerous applications of edge AI, such as sensor fusion for precision agriculture, AI health controllers enabling remote multimodal health monitoring, edge-based anomaly detection for industrial equipment, and voice-controlled smart appliances (such as air conditioners and ovens).

Texas Instruments

Texas Instruments is also embracing the future of smart edge with a new embedded product portfolio, focusing more on the industrial and automotive markets. By providing dedicated development tools and devices, they help customers optimize system performance using sensor data to achieve fault prediction, preventive maintenance, and enhance safety, energy efficiency, and sustainability.

Texas Instruments offers a full spectrum of edge AI products to cover different scenario needs:

High-end processors: suitable for vision systems (such as obstacle detection in robots or cars), supporting real-time image analysis and AI model deployment.

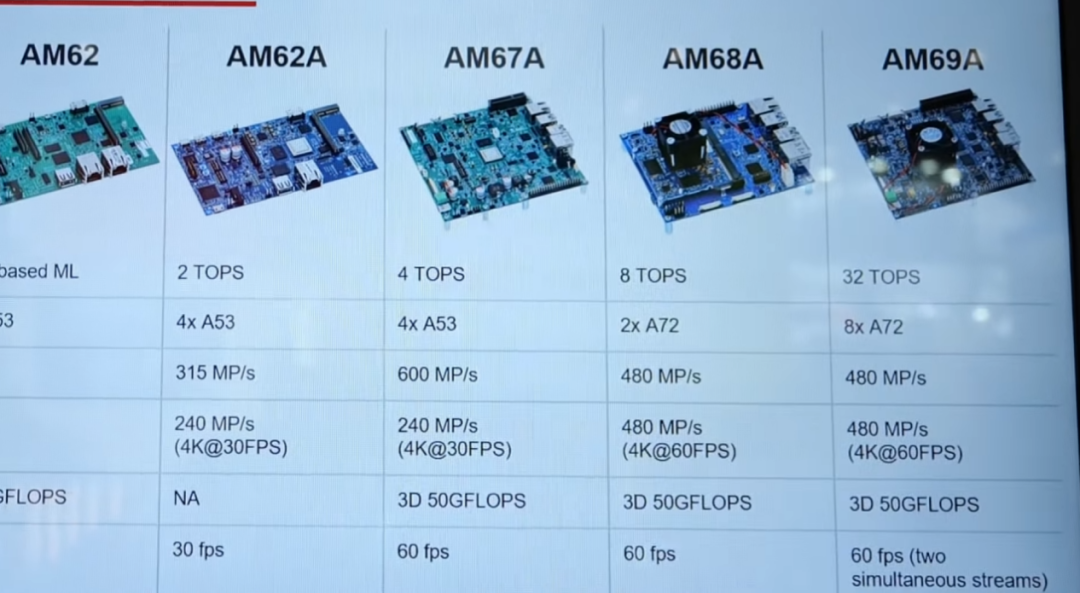

Mid-range devices: targeting time-domain analysis (such as vibration monitoring). For example, the C2000 F28P55x MCU in solar systems can detect cable short circuits with over 99% accuracy and respond quickly.

Low-power solutions: for resource-constrained embedded systems, ensuring efficient data processing while reducing energy consumption.

Amichai Ron, Senior Vice President of Embedded Processing at Texas Instruments, previously described some of TI’s innovations in edge intelligence, such as real-time monitoring of current and temperature in solar systems to detect faults (cable contact short circuits, arcing, and so on) and prevent fires or equipment damage. In vehicles, sensor data is analyzed to optimize driving safety and energy efficiency. In industrial equipment, mechanical failures are predicted to reduce downtime and improve production efficiency.

Ron pointed out that edge AI will push industries toward greater sustainability, safety, and ease of use.

At the 2025 embedded exhibition, Texas Instruments showcased its latest edge technology: a demonstration system based on a vision processor. The system performs real-time object classification and scales from a single camera to 12 cameras while keeping power consumption low.

TI’s edge AI solutions focus on two major industry pain points:

First, real-time responsiveness. In scenarios such as smart homes and smart cities, devices need to respond quickly (for example, video doorbells detecting visitors or industrial machinery issuing fault warnings). TI achieves millisecond-level decision-making by integrating local AI acceleration modules into its processors, eliminating reliance on the cloud.

Second, system scalability. Traditional vision systems hit performance bottlenecks when expanding to multiple cameras or higher resolutions. TI’s vision processors scale flexibly, from the low-power AM62A (less than 2W) for single-camera applications to the AM69A, which supports 12 cameras and can process 4K@60fps video streams simultaneously.

Technical Highlights of TI’s Vision Processors

Integrated AI accelerators: all processors come with built-in dedicated AI engines, supporting real-time object detection and classification.

Software compatibility: provides a unified SDK and toolchain, allowing users to smoothly transition from low-power devices (like AM62A) to high-end models (like AM69A).

Energy efficiency optimization: designed for battery-powered devices, such as video doorbells that can operate for extended periods in low-power mode while maintaining high recognition accuracy.
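To see why time spent in a low-power mode matters for battery-powered devices such as video doorbells, here is a rough duty-cycle estimate. Only the 2 W active figure comes from the article (the stated upper bound for the AM62A); the standby power, active fraction, and battery capacity are hypothetical values chosen for illustration, not TI specifications.

```python
def average_power_w(active_w, standby_w, active_fraction):
    """Time-weighted average power for a device that duty-cycles."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_w + (1.0 - active_fraction) * standby_w


def battery_life_hours(capacity_wh, avg_power_w):
    """Runtime in hours for a battery of the given capacity."""
    return capacity_wh / avg_power_w


# Assumed values: 2 W while running inference (upper bound from the article),
# 0.05 W in standby, active 2% of the time, and a 20 Wh battery.
avg = average_power_w(active_w=2.0, standby_w=0.05, active_fraction=0.02)
hours = battery_life_hours(capacity_wh=20.0, avg_power_w=avg)
always_on_hours = battery_life_hours(capacity_wh=20.0, avg_power_w=2.0)

print(f"average power: {avg:.3f} W")
print(f"duty-cycled runtime: {hours:.0f} h, always-on runtime: {always_on_hours:.0f} h")
```

Under these assumptions the average draw is dominated by standby power rather than inference power, which is why low-power modes, not just an efficient accelerator, determine battery life.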

Real Application Scenarios

- Smart Homes
  - Video doorbells: achieve human detection and classification through the AM62A processor, with power consumption below 2W, extending battery life.
  - Smart appliances: real-time analysis of user behavior to optimize device operation modes (such as energy-saving modes).
- Industrial Monitoring
  - Multi-camera systems: AM69A supports simultaneous processing of 12 video streams for defect detection on factory production lines or security monitoring.
- Smart Cities
  - Traffic management: real-time identification of vehicles and pedestrians through roadside cameras to optimize traffic light control.

Why Choose TI’s Edge AI Solutions?

- Flexible performance gradient: covers full scenario needs from single cameras to multiple devices.
- Low-power design: suitable for battery-powered or heat-constrained environments.
- Simplified development process: provides pre-trained models and development tools, lowering the AI deployment threshold.

TI’s embedded vision edge AI processor product portfolio.

Conclusion

The heavy investment of semiconductor giants in edge AI shows how hot the field has become. From development tools to hardware, and from new application scenarios to ecosystem building, these companies are pushing on every front, meeting today’s diverse market demands while laying the groundwork for the future development of edge AI.
