This article is based on a talk given at NVIDIA GTC 2024.

This talk is about the future. Even so, it focuses heavily on the practical challenges facing artificial intelligence and robotics, and on the emerging trends that we believe can help address them.

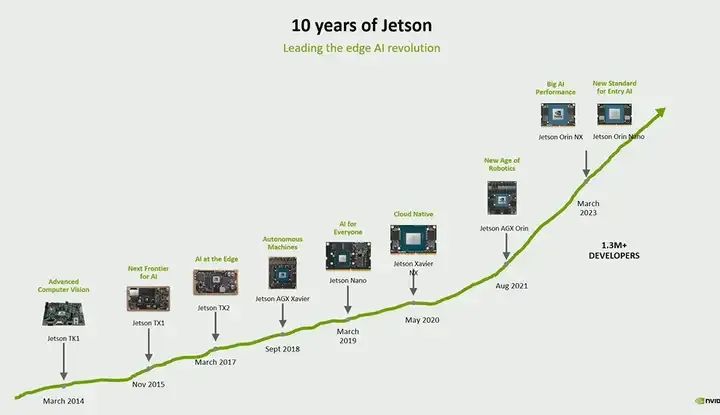

Before looking to the future, it is worth reflecting on the past and the present. We started working on robotics ten years ago. Our first product came out in March 2014, and at the time we did not know it would end up being used for robotics. Since then, we have been learning about the needs of the industry and of developers, and gradually building out our product portfolio. Over the past decade, we have launched some truly excellent products that help developers adopt AI at the edge. The latest Orin-based product line is one of the most significant platform updates we have delivered: for the first time, the same architecture spans both our entry-level products (such as Orin Nano) and our highest-performance products (such as AGX Orin). Many of you have been key members of this journey. We now have 1.3 million developers, and we are very proud of that. So thank you all for embracing this platform and for everything you have done to make it better.

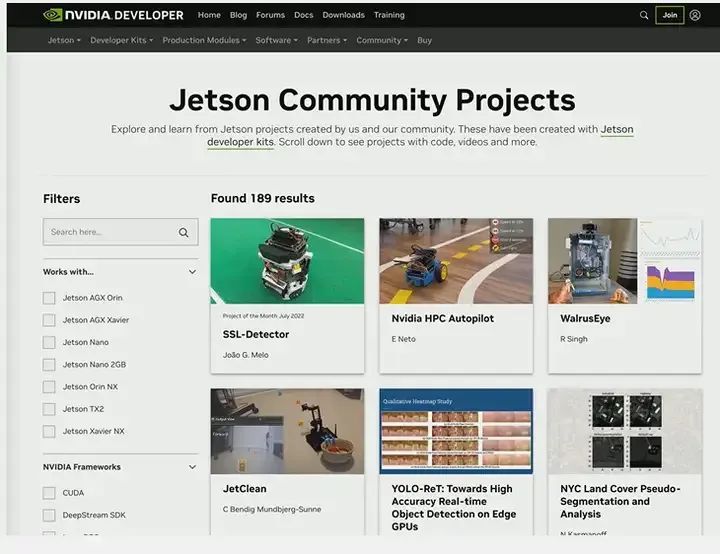

I also want to commend some of the work done by the Jetson community. We often celebrate successes with our large customers, but there is also a very large developer community that actively contributes to embedded, edge, and robotics technology. If you haven't visited this page yet, I strongly encourage you to take a look. Only 189 projects are listed here, but I know there are many more that their teams could add. So again, let's celebrate all the work developers have done on this platform; it is what keeps us investing in it and making it better.

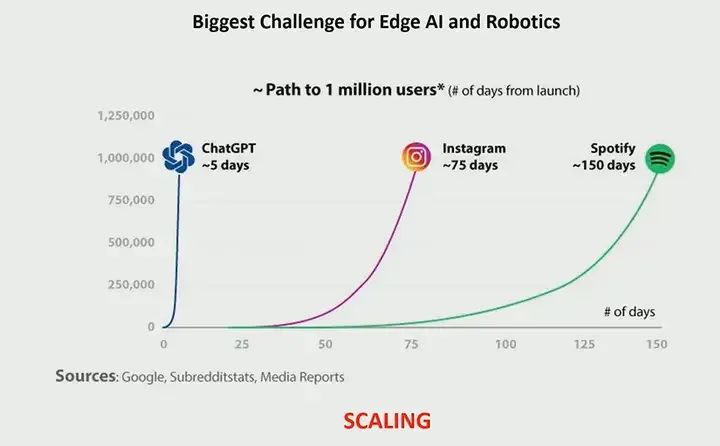

But what are the challenges facing edge AI and robotics? We have all seen ChatGPT attract a million users in just five days. I assume most of you here work in robotics and AI, and I understand that your reality is very different: in robotics and edge AI, we do not see that kind of "hockey stick" growth curve. Scaling is a big issue, and many factors make it difficult: technical factors, market factors, and engineering challenges. So as we think about the future of edge AI and robotics, our focus is on giving our developers and customers the ability to scale. How can we help people go from dozens or hundreds of devices to tens of millions? Today, I will share some of what we are doing and some of what is happening in the industry, which we firmly believe will help address the long-standing scaling challenges in edge AI and robotics.

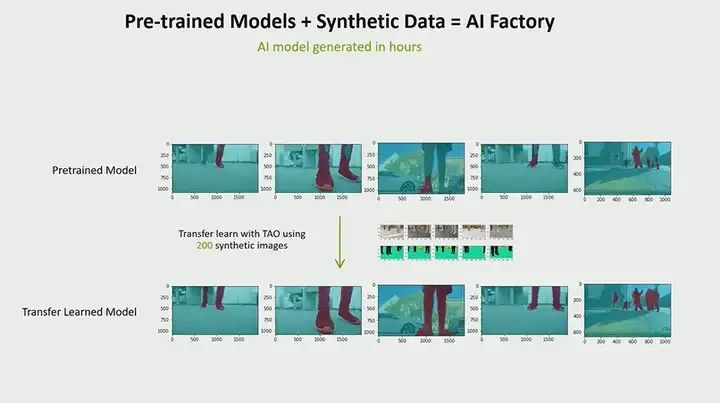

The first trend, which we are already seeing become more widespread, is the concept of the "AI factory." When you build applications for the edge, or deploy at many different customer sites, you run into a problem: you lack the data each customer requires. By combining synthetic data with pre-trained models, we are now seeing workflows emerge in which you can effectively run your own data factory. Here is an example from one of our projects. We start with a pre-trained model that was trained to segment people, but only from a top-down perspective (for example, from a CCTV camera). A robot, however, sees people from a few inches off the ground, so when we apply this pre-trained model from the robot's viewpoint, its segmentation performance is very poor. We then go back to Omniverse, our tool for creating synthetic data. With just 200 images, we were able to retrain this model through transfer learning, and you can see the output of the new model below. How does this help with scaling? As you move from the first use case to the second and the third, each one has its own nuances and customer data to deal with. One customer wants to recognize shelf labels, another wants to recognize the size of labels. Every new customer brings its own set of requirements, so you need your own data factory to train these AI models. This has now become possible thanks to synthetic data generation tools and pre-trained models that support transfer learning.
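The transfer-learning step can be illustrated with a toy sketch in plain Python. It assumes a hypothetical frozen feature extractor (`pretrained_features`, standing in for a real pretrained backbone) and a small labeled set standing in for the roughly 200 synthetic images; only a new logistic-regression head is trained. This is not the Omniverse workflow itself, only the underlying idea of reusing frozen features and fitting a small head on little data.

```python
import math
import random

def pretrained_features(x):
    """Hypothetical frozen backbone: maps a raw 2-D input to fixed features.
    Nothing in this function is updated during fine-tuning."""
    a, b = x
    return [a, b, a * b, 1.0]  # the last entry acts as a bias feature

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(samples, labels, lr=0.5, epochs=200):
    """Fit only a logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(x) for x in samples]  # computed once: backbone is frozen
    w = [0.0] * len(feats[0])
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Gradient of binary cross-entropy w.r.t. the head weights is (p - y) * f.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w

def predict(w, x):
    score = sum(wi * fi for wi, fi in zip(w, pretrained_features(x)))
    return 1 if sigmoid(score) >= 0.5 else 0

# Stand-in for ~200 auto-labeled synthetic samples from a new viewpoint.
# Hypothetical task: label is 1 when both coordinates share a sign.
rng = random.Random(0)
samples = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
labels = [1 if a * b > 0 else 0 for a, b in samples]

w = train_head(samples, labels)
acc = sum(predict(w, x) == y for x, y in zip(samples, labels)) / len(samples)
print(f"training accuracy of the new head: {acc:.2f}")
```

In a real pipeline the head (and sometimes the last few backbone layers) would be fine-tuned in a deep learning framework; freezing the backbone is the design choice that keeps the amount of new data needed small.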

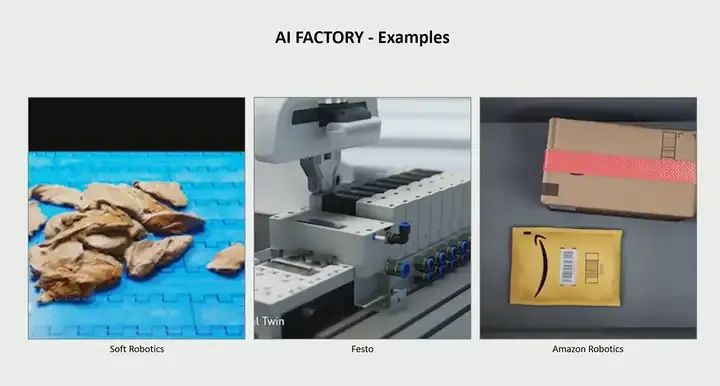

Here are a few examples. The first is a company called Soft Robotics. Their initial business was using soft grippers to pick up chicken, but they wanted to expand into other areas. They have the technology to pick up items using specialized end effectors and sensors, so they started using synthetic data. Going from the sales team spotting a new opportunity to demonstrating a working system used to take a long time; now it takes only a few hours. They create new models through their data factory, deploy them, and can use them immediately.

The second example, also in robotics, is a company called Festo, which is building products for robotic work cells where various objects appear and robots need to pick and stack them. Because the variety of objects keeps changing, the robots need to understand the characteristics of each object. Festo again used Omniverse for synthetic data generation, building a data pipeline that quickly takes in CAD models, generates synthetic data, trains models for object recognition and pose estimation, and deploys them.

The last example is Amazon Robotics. They have robots that pick packages, and the packages change often. How do you keep the system running smoothly while the packages change, for example when new holiday packaging arrives? They, too, built a data factory that lets them continuously create and update models. This has really helped them scale the number of robots they can deploy in their distribution centers.

That is one solution: having your own data factory so you can scale and address new demands.

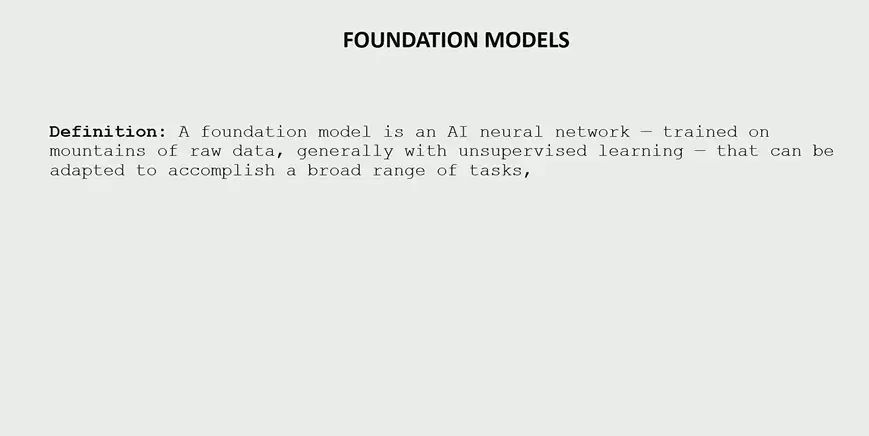

Another powerful emerging force that will help us address a key issue is foundation models. For those who have not heard the term, here is a definition that dates back to some of the earliest discussions of foundation models: a foundation model is a model trained on a large amount of raw data, typically using self-supervised learning, that can be adapted to perform a wide variety of tasks.

Two points in this definition are especially important: self-supervised learning, and the ability to perform a variety of tasks. Even the earlier synthetic data examples all rely on supervised learning: the generated data is labeled and then used for training. With foundation models, all you need to do is collect data; the models learn from the data itself without needing supervised labels.

The second point is the ability to perform a variety of tasks. As I mentioned earlier, switching from one customer to another, or from one use case to another, takes a lot of work today, and AI applications are no exception. Unlike a search engine, where most people simply want to search and get answers and a single solution serves them all, each edge AI customer needs something slightly different. That is why the ability of foundation models to generalize and perform various tasks is so helpful.
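The first point, learning from the data itself, can be illustrated with masked prediction, one common self-supervised objective: the training targets are cut out of unlabeled sequences, so no annotator is involved. A minimal sketch, assuming whitespace tokenization and a hypothetical `[MASK]` token; real foundation models use learned tokenizers, far more data, and, for images or video, masked patches instead of words.

```python
import random

MASK = "[MASK]"  # hypothetical mask token

def make_masked_example(sentence, mask_prob=0.15, seed=0):
    """Turn one unlabeled sentence into an (input, targets) training pair.

    The targets are the original tokens at the masked positions, so the
    'labels' come from the raw data itself rather than from a human."""
    rng = random.Random(seed)
    tokens = sentence.split()
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok  # position -> token the model must recover
        else:
            inputs.append(tok)
    return inputs, targets

inputs, targets = make_masked_example(
    "the robot picks the red box from the conveyor belt", mask_prob=0.3, seed=1)
print(inputs)
print(targets)
```

A model trained to fill in the masked positions across a large corpus learns general-purpose representations, which can later be adapted to many downstream tasks.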

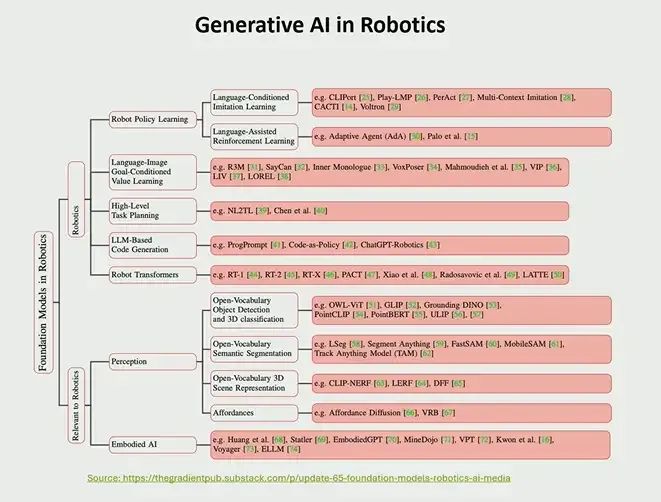

Emerging foundation models in robotics and computer vision applications:

- Foundation models in robotics: many robotics tasks can be handled by foundation models. They can perform perception functions such as detection, segmentation, scene representation, and affordance estimation.
- Diverse model applications: a variety of models are emerging for robot decision-making, including policy learning with language-conditioned and image-conditioned policies.
- Value learning: the model learns to estimate how useful a state or action is for achieving a given goal. This matters for robots because they need to decide how to act based on given instructions or goals.
- Code generation: foundation models excel at generating code. In robotics projects, LLM-based code generation can greatly simplify programming tasks.
- Robot transformers: one of the biggest applications is robot transformers, which map inputs such as sensor data and user text instructions directly to robot control actions.
- Integration of hardware and software: as foundation models are applied in robotics, the same hardware will be able to perform a wider range of tasks, turning the problem primarily into a software issue rather than a hardware limitation.

Foundation models have broad applications and great potential in robotics and computer vision, making work in these fields easier and more efficient.

Similar things are happening in computer vision. This chart comes from Andrew Ng.

The widespread application of foundation models in vision is reflected in several aspects:

- Development in computer vision: like robotics, computer vision is undergoing tremendous change. Foundation models are being applied to visual tasks such as segmentation, pose estimation, detection, and depth estimation.
- Availability of foundation models: these foundation models are now available for edge vision applications, so developers can use them to perform complex visual tasks without building models from scratch.
- Combining foundation models with robotics and edge computing: the speaker asks what the world will look like when we apply these foundation models to robotics and edge computing, suggesting that they will greatly change the landscape of both fields.

Foundation models are of great importance in computer vision, and combining them with robotics and edge computing could bring about significant transformations.

Edge AI is already widely used today, as the various robots Amazon deploys show: each one is responsible for a specific task such as sorting, packaging, or moving items. These robots use AI at the edge, but they are single-purpose machines. The future of edge AI is expected to shift toward more general-purpose robots. Imagine a robot that can perform multiple tasks, such as picking up socks from the floor and placing them in an empty box on a conveyor belt.

The challenge is that programming such tasks is complex, even with good detectors and robotic manipulators; it often requires an advanced degree in robotics or extensive experience. With foundation models that can generate code for robots and learn policies, even operators who are not robotics experts can instruct robots, and the robots will translate those instructions into their own control language and complete the tasks. Robot hardware has been general-purpose for some time, but the brain (the AI) has remained specialized. Shifting robotics toward more general-purpose AI will let the same robot perform multiple tasks, allowing the industry to scale more effectively.

Robots today can perform their tasks well, but they often cannot fully replace human workers, because humans also perform auxiliary tasks that are difficult for robots. By giving robots these additional skills, automation can become more complete, addressing the remaining 20% of problems that robots cannot solve today and enabling more effective scaling and automation across the industry.

This is a sketch of a video analytics use case in hospitals. Many companies are building edge applications, but each has a fixed set of use cases, and developing a new one takes three, four, or six months. For example, today you can deploy an edge device capable of fall detection. But if you want that device to also detect whether a person is awake, that is a huge undertaking. The point is that human observers do not just detect when a person falls; they also make sure patients finish their meals and do not do things the doctor told them not to do. All of these skills are things humans do today and that we expect AI to do. With the vision language models we are now seeing, we believe this is possible: you have sensors, edge computers, and a user interface for defining different tasks. I call this moving to an infinite, arbitrary application space. As edge AI developers, you design a system once, and it can be applied to a wide variety of use cases; it is all about scale. Take something as simple as counting how many times a patient coughs. Or, if we know a patient is at risk of falling, a system could tell you whether that person is standing, but what you really care about is whether they are standing on their own or someone is helping them. Today, a single foundation model, equipped with the right user interface and tools, can accomplish all of these tasks. That is the exciting part for AI and computer vision!

What are we doing for developers adopting this technology? First, we are building several foundation models. At GTC, we announced Isaac Manipulator, which comes with three foundation models. The first, SyntheticaDETR, is trained on synthetic data and is an interesting foundation model for object detection. The second, FoundationPose, is a foundation model for 6D pose estimation. Finally, the grasping foundation model takes an object and tells you the correct grasp: not just the grasp point but also the orientation of the end effector, leading to a successful grasp. These models matter because many companies we talk to can use AI to handle individual parts, but the parts change every few hours. With previous AI approaches, retraining a model for each new part took months, which is impractical; these foundation models can instead be applied to new objects right away.
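A 6D pose is a 3D rotation plus a 3D translation. To show how an estimated object pose and a grasp proposal could combine into a gripper target, here is a minimal sketch that composes rigid transforms. It assumes the grasp is expressed in the object's own frame and that the gripper approaches along its local +z axis (a common convention, not a universal one); the poses and function names are hypothetical and are not Isaac Manipulator APIs.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(a, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def compose(pose_a, pose_b):
    """Compose 6D poses given as (R, t): returns pose_a * pose_b."""
    ra, ta = pose_a
    rb, tb = pose_b
    r = matmul(ra, rb)
    t = [x + y for x, y in zip(matvec(ra, tb), ta)]
    return r, t

def approach_direction(pose):
    """Gripper approach axis, assumed to be the local +z axis (third column of R)."""
    r, _ = pose
    return [r[0][2], r[1][2], r[2][2]]

# Hypothetical output of a pose estimator: object rotated 90 degrees about z,
# 0.5 m in front of the robot base.
object_in_world = (rot_z(math.pi / 2), [0.5, 0.0, 0.0])
# Hypothetical grasp proposal in the object frame: 10 cm above the object origin,
# gripper flipped (rotation of pi about x) so its +z axis points down.
flip_x = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
grasp_in_object = (flip_x, [0.0, 0.0, 0.1])

grasp_in_world = compose(object_in_world, grasp_in_object)
print("grasp position:", [round(x, 3) for x in grasp_in_world[1]])
print("approach dir:  ", [round(x, 3) for x in approach_direction(grasp_in_world)])
```

Expressing the grasp relative to the object is what lets a fresh pose estimate for a new or moved part be turned into a gripper target without retraining the grasp model.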

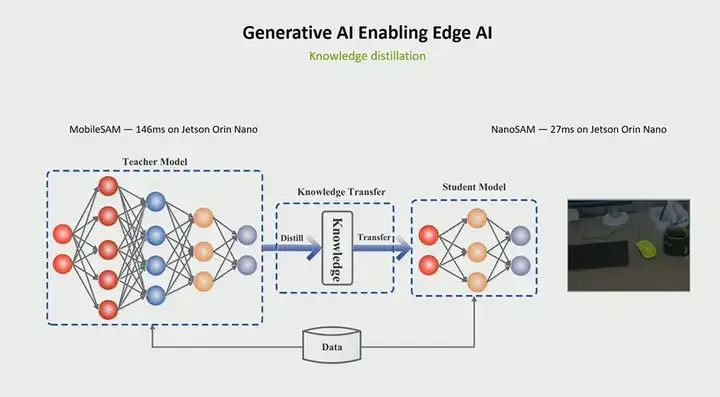

Our second effort is bringing these foundation models to the edge. We need to shrink them to fit edge environments. Many large models have already been created, with parameter counts reaching into the trillions. Given the compute, power, and cost constraints we face, it is not feasible to deploy all of these models at the edge. However, they are still very useful for edge applications. First, they are being actively used for data labeling, so we no longer need to label data manually. Second, they serve as teachers in teacher-student training: large models designed for global-scale intelligence can be distilled into models for specific use cases. A model serving 7 billion people needs to know far more than a model that only has to serve a hospital environment; the latter only needs to understand the relevant environment, personnel, and use cases. Through knowledge distillation, we can therefore extract domain-specific knowledge from a teacher model to create a new, compressed model that uses the same architecture. We have done a lot of work in this area. This example shows a model we created (note that it is not SAM itself), and many other models are in development.
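The teacher-student idea is commonly implemented by training the student to match the teacher's softened output distribution. Below is a minimal sketch of the classic soft-target loss (temperature-scaled softmax, KL divergence, scaled by T squared), assuming teacher and student produce logits over the same classes. It is the textbook formulation, not necessarily the recipe NVIDIA used for the models in the talk, and the logits are made-up numbers.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """Soft-target loss: the student matches the teacher's softened outputs.

    Multiplying by T^2 keeps gradient magnitudes comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)

teacher = [8.0, 2.0, 1.0]        # hypothetical teacher logits for one image
good_student = [7.5, 2.2, 0.9]   # close to the teacher
poor_student = [1.0, 6.0, 2.0]   # disagrees with the teacher
print(distillation_loss(good_student, teacher))
print(distillation_loss(poor_student, teacher))
```

In practice this soft-target term is usually combined with an ordinary loss on ground-truth or teacher-generated labels, and the temperature is a tuning choice.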

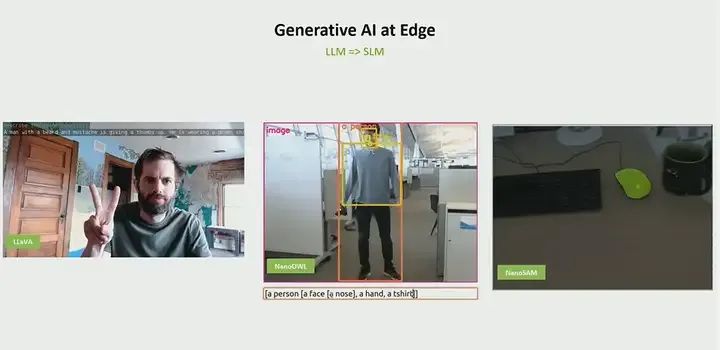

Here are some large models that can be deployed at the edge. The first is Llama; we are creating smaller language models from these large models. Being able to change what a model does simply by changing the prompt is very important. In the past, even if you had an AI model that could do fifty different things, switching a camera from detecting people to detecting people's actions still required a lot of coding, not just in the model but in the logic around it, and every use case needed its own changes. Now, with simple text prompts, you can change the tasks and functions of edge devices in real time. Besides Llama, we have NanoOWL and NanoSAM. We are also working on deep models and will continue to develop more models that can run at the edge.

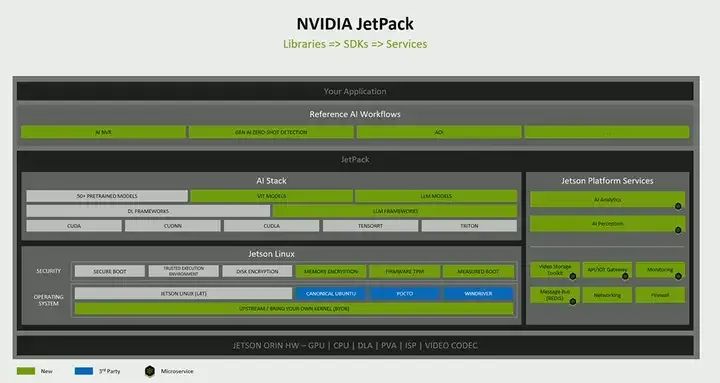

These foundation models need to be deployed on edge devices. As I mentioned earlier, your programming interface will change significantly: each new application no longer requires writing new code or new code paths. Initially, AI relied on libraries, then we had SDKs, and now we believe we are reaching a stage where these capabilities can become services. They are templated, so you only need to change configurations. Those configuration changes can be made through text prompts, which are converted into instructions that Jetson can understand and execute to update your microservice configurations. This is the direction we are investing in: you can leverage these models, and all available microservices and services can be parameterized, so you can develop applications very quickly. We introduced these capabilities in the latest JetPack release and will continue to enhance them.
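The templated, parameterized microservice idea can be sketched as follows. It assumes a hypothetical video-analytics service whose behavior is driven entirely by a configuration object, so a new task means new configuration rather than new code. The prompt-to-configuration step, which in the described system would be handled by a language model, is stubbed out with a trivial keyword parser; `AnalyticsConfig` and `prompt_to_update` are illustrative names, not JetPack APIs.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class AnalyticsConfig:
    """Hypothetical configuration for one templated video-analytics microservice."""
    camera: str
    prompts: tuple = ()           # open-vocabulary queries, e.g. "a person falling"
    alert_threshold: float = 0.5  # score above which an alert would be raised

def prompt_to_update(text):
    """Stand-in for the language-model step: turn an operator request into config fields.

    Understands lines like 'watch for: a person coughing; a person standing up'
    and 'threshold: 0.7'. Unrecognized lines are ignored."""
    update = {}
    for line in text.lower().splitlines():
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "watch for":
            update["prompts"] = tuple(p.strip() for p in value.split(";") if p.strip())
        elif key == "threshold":
            update["alert_threshold"] = float(value)
    return update

base = AnalyticsConfig(camera="ward-3", prompts=("a person falling",))
request = "Watch for: a person coughing; a person standing up unassisted\nThreshold: 0.7"
new_config = replace(base, **prompt_to_update(request))  # same service, new behavior
print(new_config)
```

Keeping the configuration immutable and producing a new copy per request makes each change easy to log, compare, and roll back, while the service code itself stays untouched.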

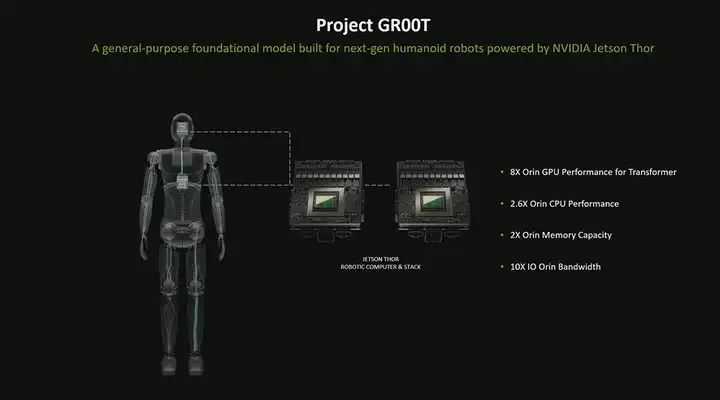

We also announced Project GR00T. To run these large models, especially very large multimodal models, we will need more specialized edge computers. Orin is excellent; it has the compute needed to run today's models, and we have showcased several examples on edge devices. But consider applications like humanoid robots with 30 to 40 degrees of freedom: with that jump in complexity, we will need larger models. So we announced that next year we will launch Jetson Thor, which will offer eight times the compute of existing products and is particularly well suited to transformer models, since we believe many edge devices will shift to transformer-based models. It is based on the Blackwell architecture and has a dedicated transformer engine. One of its highlights is support for FP8 (8-bit floating point). Many people run into trouble when quantizing models trained at high precision down to low precision for inference, but with FP8 you can train and deploy at the same precision and preserve accuracy in deployment. For the memory these large models require, we will double the supported memory capacity. And to handle more sensor input, we will increase I/O capability tenfold, because achieving true generality means consuming more sensor input to make decisions.
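To make FP8 concrete, here is a minimal sketch of the E4M3 format (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits, largest finite value 448) together with a per-tensor scale factor that maps a tensor's largest magnitude onto that narrow range. It is a pure-Python illustration that enumerates the representable values; it is not how the hardware or NVIDIA's Transformer Engine implements FP8, it breaks rounding ties toward the smaller magnitude instead of to nearest-even, and it ignores NaN and the E5M2 variant. `quantize_tensor` and `dequantize` are hypothetical helper names.

```python
def e4m3_values():
    """All non-negative finite FP8 E4M3 values (exponent bias 7, 3 mantissa bits)."""
    vals = []
    for e in range(16):
        for m in range(8):
            if e == 15 and m == 7:
                continue  # this bit pattern encodes NaN in E4M3
            if e == 0:
                vals.append(m * 2.0 ** -9)  # subnormals: (m / 8) * 2^(1 - 7)
            else:
                vals.append((1 + m / 8) * 2.0 ** (e - 7))
    return sorted(vals)

E4M3 = e4m3_values()
E4M3_MAX = E4M3[-1]  # 1.75 * 2^8 = 448.0

def to_e4m3(x):
    """Round x to the nearest E4M3 value, saturating at +/-448."""
    mag = min(abs(x), E4M3_MAX)
    nearest = min(E4M3, key=lambda v: abs(v - mag))  # first match = smaller magnitude on ties
    return -nearest if x < 0 else nearest

def quantize_tensor(values):
    """Per-tensor scaling: map the largest magnitude to E4M3_MAX, then round."""
    amax = max(abs(v) for v in values) or 1.0
    scale = E4M3_MAX / amax
    return [to_e4m3(v * scale) for v in values], scale

def dequantize(q, scale):
    """Undo the scale to recover approximate original values."""
    return [v / scale for v in q]

weights = [0.0123, -0.5, 0.25, 0.0007]  # made-up example tensor
q, s = quantize_tensor(weights)
print(q, s)
print(dequantize(q, s))
```

The scale factor is what makes an 8-bit format usable: without it, small values such as 0.0007 would sit near the bottom of E4M3's range and lose most of their precision.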

In short, edge AI and robotics are here. All of these products are supported by NVIDIA's edge platform, and as this new field develops, including foundation models, simulation, transformers, and edge hardware with dedicated transformer engines, we believe the opportunity will grow exponentially in the coming years.
