
“The table lamp at home suddenly understands human speech, factory machines proactively report faults, and children’s toys can assist with homework… These scenarios are no longer science fiction! Recently, a technological trend has been quietly brewing—connecting the traditionally ‘silent’ Microcontroller Units (MCUs) with large AI models. What possibilities lie behind this? What disruptions will it bring?”

MCU + LLM = ?

MCU

An MCU (Microcontroller Unit), commonly known as a “single-chip microcomputer”, is a fingernail-sized computer chip designed specifically for controlling hardware devices. It is small, specialized, low-cost, and low-power. It is far less powerful than a smartphone or computer, but it is the “silent cornerstone” of the IoT era.

Look at the MCUs around you:

- Air conditioner remote: Press the button → MCU decodes the signal → Adjusts the temperature.

- Smart bracelet: MCU reads heart rate sensor → Computes data → Displays results.

- Car wipers: MCU detects rainfall → Automatically adjusts the swinging speed.

LLM

ChatGPT, released at the end of 2022, opened a new era of AI. Large Language Models (LLMs) are deep-learning models trained on vast amounts of text that can understand and generate natural language, and they have changed how people think about AI.

Look at what we are doing with LLMs today:

- Text generation: When you write copy → LLM provides copy suggestions → Helps you optimize expression.

- Online translation: You input a foreign-language sentence → LLM translates in real time → Presents idiomatic Chinese.

- Code completion: Programmers write code → GitHub Copilot (LLM-driven) → Automatically completes code snippets.

- Smart speakers: You say, “What’s the weather like tomorrow?” → LLM understands the semantics → Returns the weather forecast.

MCU Meets LLM

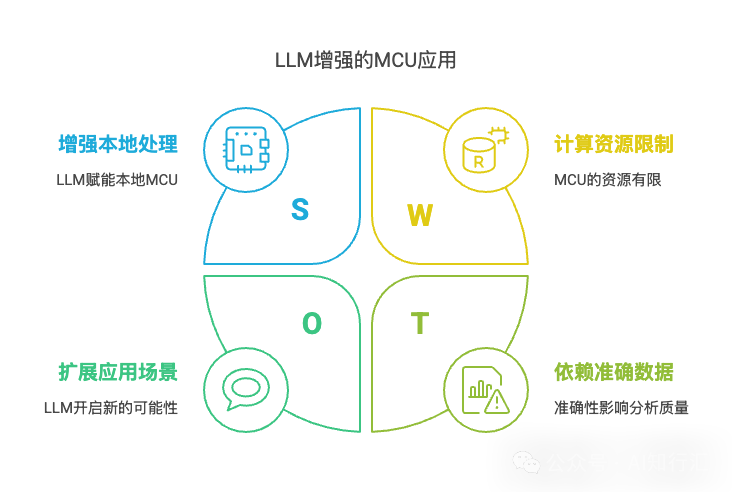

Traditional MCUs also have voice dialogue and image recognition capabilities, but they are like “elementary school students who can only memorize answers”: they can only recognize preset commands (like “on/off”), and more complex requests can cause them to “crash.” The reason is simple: MCUs have limited computing power and storage space. With the advent of LLMs, many scenarios may change, such as:

Smart Home:

- Past: “Xiao Ai, turn on the light” → “Okay, the living room light is on.” (Requires internet + cloud processing)

- Future: Directly say to the lamp: “Dim the light a bit, and add some warm yellow; I want to read before sleep.” → MCU parses the command locally and automatically adjusts the PWM parameters.
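To make the last step concrete, here is a minimal sketch of how a parsed command could become PWM settings. It assumes, purely for illustration, that the language model returns a small JSON object with a `brightness` value (0 to 1) and a `color_temp_k` value (in kelvin), and that the lamp has one warm-white and one cool-white LED channel with a 16-bit duty range. The function name `intent_to_pwm`, the JSON fields, and the 2700 K / 6500 K endpoints are all hypothetical.

```python
import json

PWM_MAX = 65535  # assumed 16-bit duty range


def intent_to_pwm(intent_json, warm_k=2700, cool_k=6500):
    """Convert a structured intent into (warm_duty, cool_duty)."""
    intent = json.loads(intent_json)
    # Clamp inputs so a malformed model reply cannot overdrive the LEDs.
    brightness = min(max(float(intent["brightness"]), 0.0), 1.0)
    kelvin = min(max(float(intent["color_temp_k"]), warm_k), cool_k)
    # Linear mix: 0.0 means all warm channel, 1.0 means all cool channel.
    cool_share = (kelvin - warm_k) / (cool_k - warm_k)
    warm_duty = round(PWM_MAX * brightness * (1.0 - cool_share))
    cool_duty = round(PWM_MAX * brightness * cool_share)
    return warm_duty, cool_duty


# Hypothetical model output for "dim the light and make it warm yellow".
reply = '{"brightness": 0.4, "color_temp_k": 2700}'
warm, cool = intent_to_pwm(reply)
print(warm, cool)
```

Keeping the model's output to a tiny structured object means the MCU only has to clamp and scale numbers, which fits its limited computing power.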

Industrial Site:

- Past: When equipment fails, a red light turns on, and the engineer checks the manual for code “E37” → Manual troubleshooting takes 1 hour.

- Future: The equipment proactively voices an alarm: “The spindle temperature has reached 85℃, it is recommended to stop immediately! Historical data shows that 80% of similar failures are caused by insufficient lubrication.”
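The facts behind such an alarm can be computed without any AI at all; the model's job is mainly to phrase them. The sketch below shows one possible way to assemble the message from a sensor reading and a list of past failure causes. The 85℃ limit, the function name `build_alarm`, and the sample history are assumptions made up for this example, not data from a real machine.

```python
from collections import Counter


def build_alarm(sensor, value_c, limit_c, history):
    """Return an alarm sentence, or None when the reading is below the limit."""
    if value_c < limit_c:
        return None
    message = (
        f"The {sensor} temperature has reached {value_c}℃, "
        "it is recommended to stop immediately!"
    )
    if history:
        # Find the most frequent past cause and its share of all failures.
        cause, count = Counter(history).most_common(1)[0]
        share = round(100 * count / len(history))
        message += (
            f" Historical data shows that {share}% of similar failures "
            f"are caused by {cause}."
        )
    return message


# Invented failure log: 4 of 5 past failures share one cause.
past_causes = ["insufficient lubrication"] * 4 + ["bearing wear"]
print(build_alarm("spindle", 85, 85, past_causes))
```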

AI Steward in the Fields:

- The farmer asks the sensor: “Will it rain soon? Should I delay fertilization?”

- MCU combines local weather data + LLM analysis: “There is a 70% chance of rain in the next 3 days, it is recommended to reduce the fertilization amount to 50% today.”
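One plausible division of labor here is that the MCU gathers the local readings and packs them, together with the farmer's question, into a short prompt for the language model. The sketch below shows only that packing step. The function name `build_prompt`, the reading names, and the soil-moisture value are hypothetical, and the call to an actual model is deliberately left out.

```python
def build_prompt(question, readings):
    """Pack local sensor readings and the user's question into one prompt."""
    # Sort by name so the prompt is stable from one call to the next.
    facts = "\n".join(
        f"- {name}: {value}" for name, value in sorted(readings.items())
    )
    return (
        "You are an assistant for a farm sensor node.\n"
        "Local readings:\n"
        f"{facts}\n"
        f"Question: {question}\n"
        "Answer in one sentence with a concrete recommendation."
    )


# Made-up readings for illustration.
readings = {"rain_probability_3d": "70%", "soil_moisture": "41%"}
prompt = build_prompt(
    "Will it rain soon? Should I delay fertilization?", readings
)
print(prompt)
```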

The Rise of AIoT

AIoT, or Artificial Intelligence of Things, combines the capabilities of the Internet of Things (IoT) with Artificial Intelligence (AI) to create intelligent systems that can analyze data, make decisions, and act autonomously. It essentially empowers IoT devices with the ability to process information and intelligently respond to their environment.

In AIoT devices, AI is embedded into infrastructure components such as programs and chipsets, and connected through IoT networks. Application Programming Interfaces (APIs) are then used to ensure that all hardware, software, and platform components can operate and communicate without user intervention.

AI (providing the brain) + Network (providing the nerves) + Edge Computing (providing intuition) = AIoT

A cloud-based AIoT system consists of four layers:

- Device Layer. This includes various hardware such as tags, beacons, sensors, vehicles, production equipment, embedded devices, and health and fitness devices.

- Connectivity Layer. This layer includes field and cloud gateways, consisting of hardware or software elements that connect cloud storage to controllers, sensors, and other smart devices.

- Cloud Layer. This includes data processing, data storage, data visualization, data analysis through AI engines, and data access via APIs.

- User Communication Layer. This layer consists of web portals and mobile applications.
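The four layers can be pictured as a simple pipeline in which each stage hands its output to the next. The toy sketch below models each layer as one function. It is an illustration under loose assumptions: the readings are hard-coded, the "AI engine" is replaced by a plain threshold rule, and all function names are invented for this example.

```python
import json
from statistics import mean


def device_layer():
    """Device layer: a sensor produces raw readings (hard-coded here)."""
    return [{"sensor": "temp-1", "celsius": c} for c in (21.5, 22.0, 35.0)]


def connectivity_layer(readings):
    """Connectivity layer: a gateway serializes readings for transport."""
    return json.dumps(readings)


def cloud_layer(payload, limit=30.0):
    """Cloud layer: decode, analyze, and expose a summary."""
    values = [r["celsius"] for r in json.loads(payload)]
    # A threshold rule stands in for the AI engine in this sketch.
    return {"average": mean(values), "alerts": [v for v in values if v > limit]}


def user_layer(summary):
    """User communication layer: format the result for a portal or app."""
    return f"Average {summary['average']:.1f} C, {len(summary['alerts'])} alert(s)"


print(user_layer(cloud_layer(connectivity_layer(device_layer()))))
```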

Conclusion

With the integration of AI, the Internet of Things creates a smarter system. There is potential to unlock unrealized customer value across multiple industry verticals such as edge analytics, autonomous vehicles, personalized fitness, telemedicine, precision agriculture, smart retail, predictive maintenance, and industrial automation.

Once MCUs meet LLMs, LLMs are no longer confined to computers and smartphones. They are built into devices that can perceive, think, and act, ultimately creating a “breathing” world of intelligent hardware and making LLMs more accessible!

What are your thoughts on the combination of MCU and LLM? Let’s discuss in the comments.

This is AI Knowledge Exchange: Know AI, Act AI, Gather AI, and embark on the AI journey together!
