Follow “Electronic Engineering Magazine” and add the editor’s WeChat

Regional groups are now open, please send messages 【Shenzhen】【Shanghai】【Beijing】【Chengdu】【Xi’an】 to the public account

Many students may have forgotten that Computex is the “Taipei International Computer Show”—even though its positioning has been adjusted with technological developments, it was originally aimed primarily at “computers” or “personal computers”. Now at Computex, in addition to traditional “computers”, you can also see many IoT, robotics, and data center products.

We feel that a representative event in this transition is that Jensen Huang (NVIDIA CEO) spent 2 minutes announcing the GeForce RTX 5060 during this year’s Computex keynote, while the remaining hour was spent discussing AI infrastructure, Omniverse, and robotics.

He also humorously acknowledged, “GeForce brought us here, although 90% of our keynote is no longer related to GeForce.” “Of course, the reason is not that we no longer love GeForce.” He then turned to discuss the industry ecosystem related to CUDA… (The GeForce launch event clearly only accounted for 1%…..)

After all, from a revenue scale perspective, we recently analyzed that the Gaming business currently accounts for a much smaller proportion of NVIDIA than before. However, from the perspective of AI infrastructure, under NVIDIA’s current landscape, PCs using RTX graphics cards are also part of it—this point we discussed during this year’s GTC.

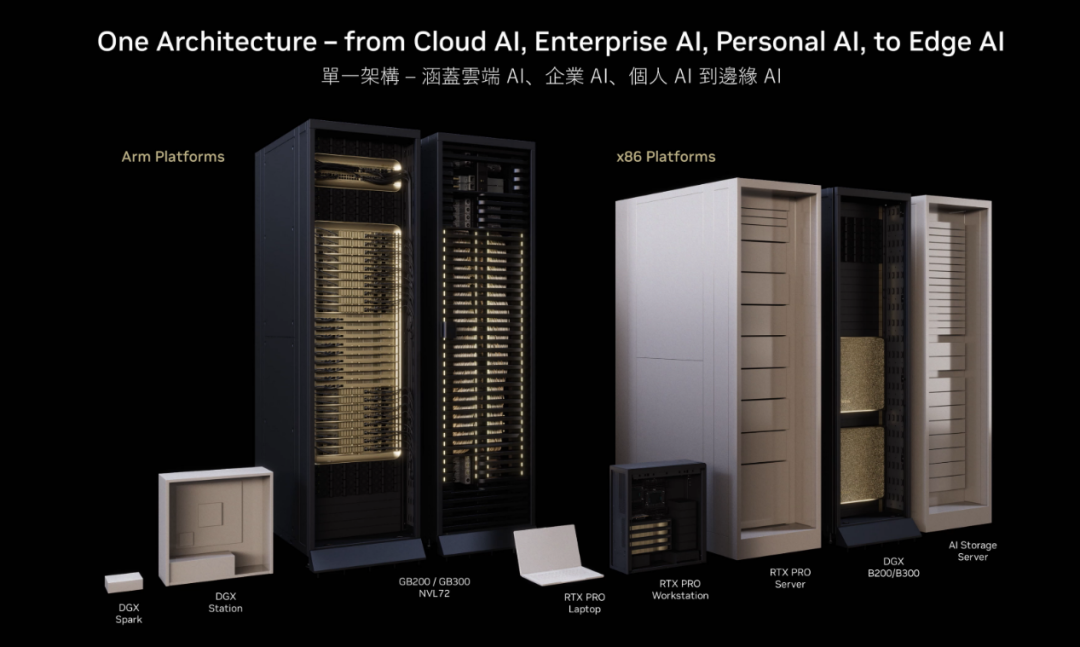

From the perspective of a proper “computer”, the following image shows AI infrastructure of different scales based on NVIDIA GPUs—though it cannot be called a family portrait: it includes the RTX Laptop as a personal computer (though it may not necessarily use consumer-grade GeForce graphics cards), the DGX Spark which was particularly popular in the first half of this year, and of course the nuclear bomb-level Grace Blackwell NVL72 system, which is equipped with 72 Blackwell data center GPUs…

This is the legendary “one architecture” covering various device forms and application scenarios. When Huang announced the AI computing platform RTX PRO Server aimed at enterprises and industries, he also specifically mentioned, “This can run ‘Crysis'” and casually asked, “Are there any GeForce gamers in the audience?” This can be seen as an official acknowledgment that “AI originated from GeForce”.

Moreover, Huang has always referred to devices like DGX as “AI Computers”; no matter how large they are, they are still “computers”—which ties back to the theme of Computex being about “computers”.

Taking advantage of NVIDIA’s release of multiple AI “computers” at Computex, this article will relatively unilaterally attempt to discuss NVIDIA’s layout of AI infrastructure at different scales; and help everyone, including ourselves, understand the logic and technology behind NVIDIA’s current system-level products in terms of performance scaling from edge, personal, to enterprise and cloud…

AI Personal Computers Comparable to Supercomputers

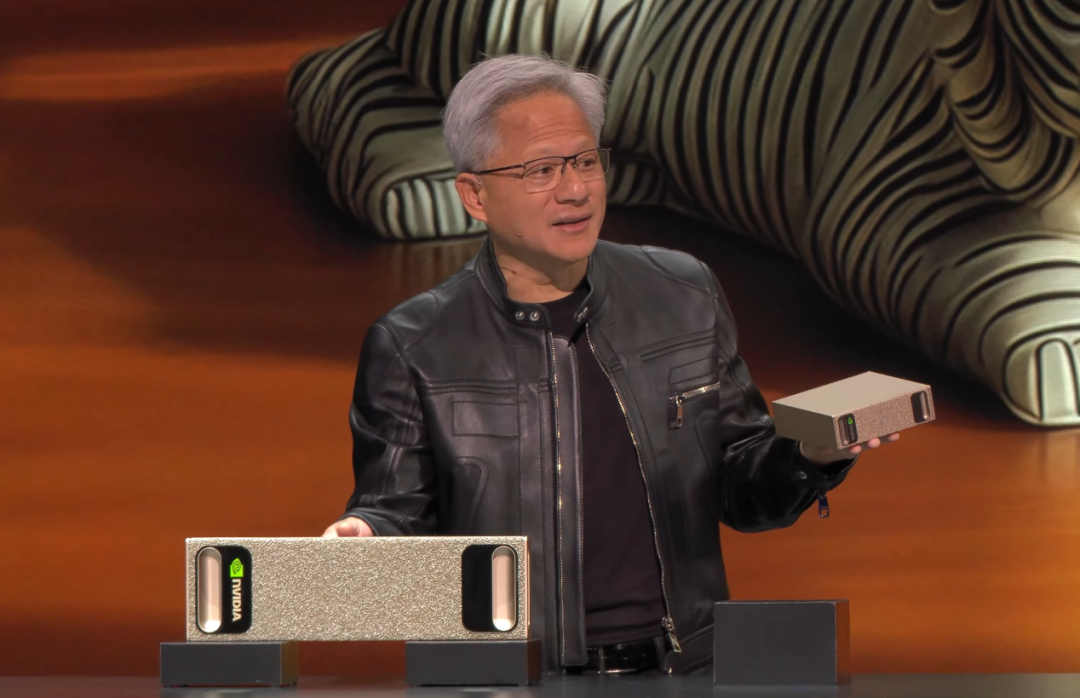

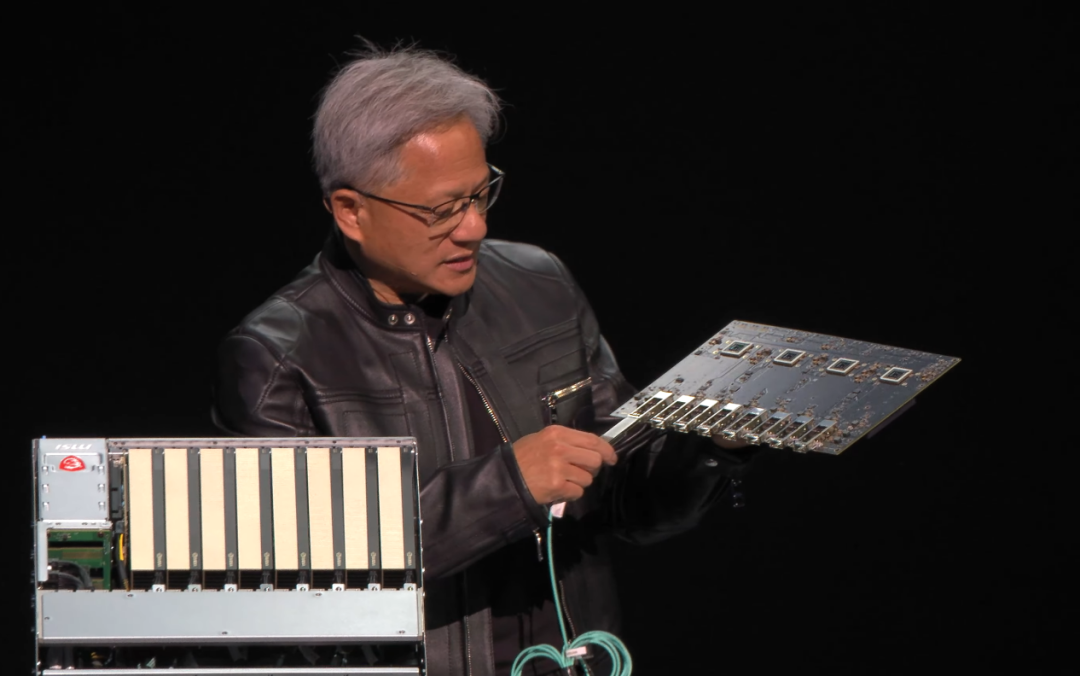

We won’t discuss the GeForce and professional visual cards aimed at personal computers; Electronic Engineering Magazine has already conducted a series of experiences on GeForce graphics card products. In the realm of “personal computers” or what can be called “AI supercomputers on the desktop”, a product that was particularly popular at this year’s CES is a small device that looks like a scaled-down DGX server, which Huang held in his hand—initially called Project DIGITS, and later officially named DGX Spark.

This year’s CES reports also specifically discussed this device: unlike Jetson and other edge-oriented computers, the specifications of DGX Spark are quite serious and abundant: the GB10 chip features a CPU with 10 Cortex-X925 cores + 10 Cortex-A725 cores (this CPU is also labeled as Grace CPU); the Blackwell GPU has an embedded 1000 TOPS computing power (Tensor core, presumably referring to FP4 computing power of 1 PetaFLOPS); the main memory is 128GB LPDDR5X, and it also has 1TB/4TB NVMe SSD.

From the leaked benchmark scores by foreign media (Notebookcheck), the GB10 CPU already performs comparably to high-end Arm/x86 processors in single-core performance. Coupled with support for WiFi 7/Bluetooth 5.3, and equipped with an RJ45 Ethernet interface, it seems quite similar to a PC; although the DGX OS operating system and the specially configured ConnectX-7 NIC (used to interconnect two DGX Sparks for computing power expansion) still differentiate it from traditional PCs.

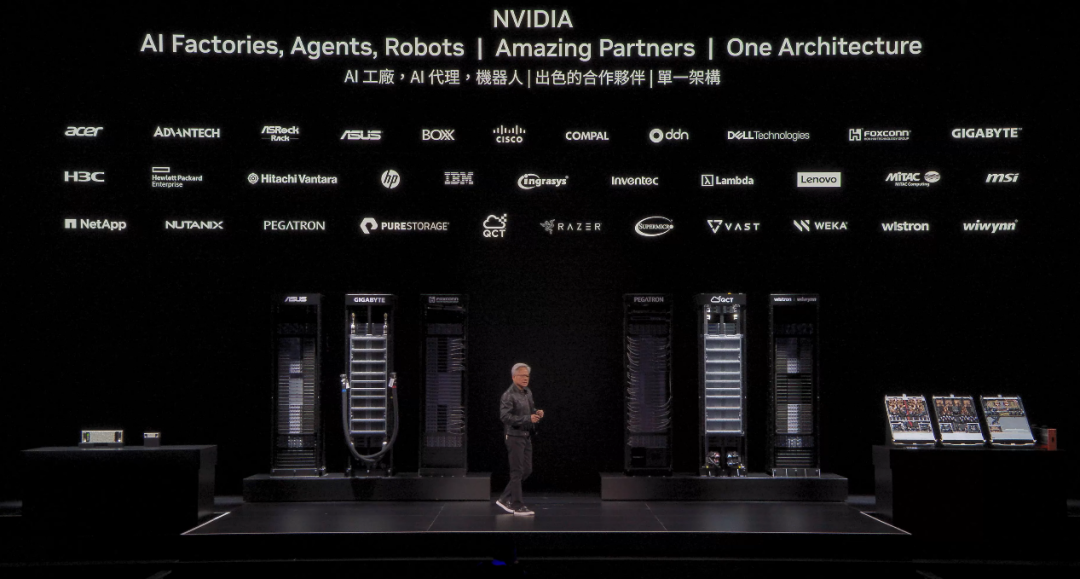

“This is specially prepared for AI native developers.” “For developers, students, and researchers, you might expect to have your own AI cloud right next to you; for prototyping, early development, etc.” At Computex, Huang announced that DGX Spark has already entered full production, and various OEM manufacturers including Dell, HPI, ASUS, MSI, Gigabyte, and Lenovo will launch different OEM devices.

Interestingly, Huang specifically compared the size of the DGX-1 with DGX Spark during the keynote. “The performance of both is quite similar. The same tasks can now be done on this device (DGX Spark).” “In just about 10 years, there has been such progress.” Since NVIDIA often refers to DGX as a Supercomputer, this moment can also be seen as a small desktop (DGX Spark) achieving AI performance comparable to supercomputers (DGX-1).

Many readers have previously wondered if DGX Spark can play games like GeForce devices. We still do not know how much graphical rendering power the GB10 chip has. However, some OEM manufacturers’ materials mention that the GB10 chip uses 6144 CUDA cores and is also equipped with RT cores.

It is roughly speculated that if the accompanying graphical rendering fixed function units are up to par, then its gaming performance is likely similar to that of GeForce RTX 5070. Of course, playing games would require solving the Linux + Arm ecosystem issues, just as we mentioned when we previously tried the Jetson Orin Nano.

DGX Station Internals—this product will likely be primarily pushed by OEM manufacturers

Additionally, at this year’s GTC, NVIDIA also released a DGX Station with specifications higher than DGX Spark and slightly larger in size, which was not mentioned in our March report. NVIDIA positions it as a personal AI device capable of running 10 trillion parameter models.

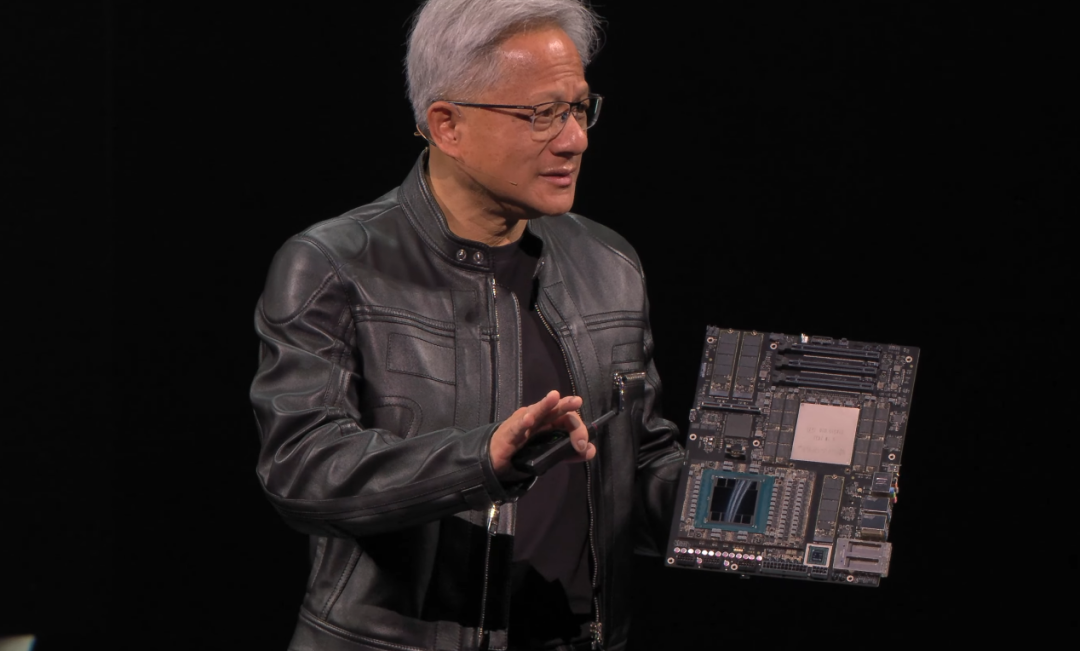

Although still a “personal device”, the configuration of DGX Station is clearly more aligned with server or enterprise products: whether it is based on the Neoverse V2 with a 72-core Grace CPU, or the 288GB HBM3e memory paired with the Blackwell Ultra GPU, and an additional level of 496GB LPDDR5X memory, as well as the interconnect between CPU and GPU utilizing NVLink-C2C.

Note that DGX Station uses the latest GB300—NVIDIA refers to it as the GB300 Grace Blackwell Ultra Desktop Superchip. Of course, based on ConnectX-8 networking, DGX Station also supports multi-device performance scaling. In our view, DGX Station is clearly more like a miniature version of an AI computing cluster.

Besides being an AI native computer, “this may be the most powerful computer on the market that can be powered directly from a wall socket.” “Its programming model is the same as that of our large systems.” Do you feel that, in terms of form and ecosystem, DGX Spark and DGX Station, as more personal AI native computers, are indeed much higher than the neighboring AI Max+? At least their approach is clearer.

Enterprise Servers Capable of Running ‘Crysis’

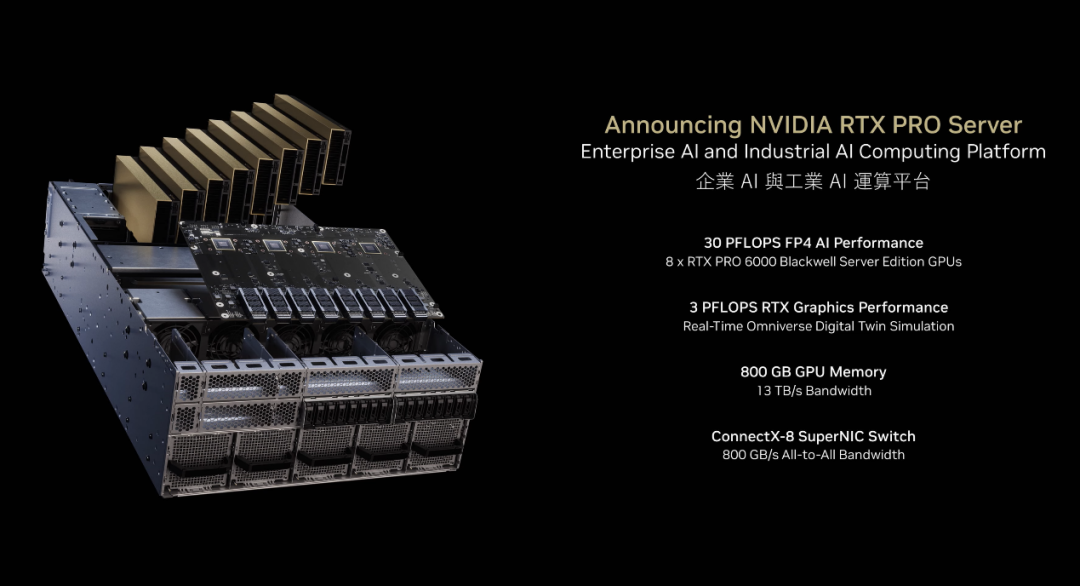

Theoretically, these two products are moving towards higher AI computing power levels, and the next should be DGX B200 (8x Blackwell GPU) and GB200. However, regarding the computing power expansion of the same architecture—whether scale-up or scale-out—we plan to discuss it in the section introducing the GB300 NVL72. The new device with higher AI computing power released at Computex is the NVIDIA RTX PRO Server.

Strictly speaking, the RTX PRO Server should not be compared with the DGX series. The reason Huang jokingly mentioned that it can run ‘Crysis’ is due to its high RTX graphical performance (and paired with x86 CPU), which can be used for real-time Omniverse digital twin simulations (so it is actually more like OVX?).

The RTX PRO series chips themselves have different products aimed at servers, workstations, and mobile workstations; thus, the computing power scaling of RTX-related products should not be below DGX Station/Spark, but rather at the RTX Blackwell professional visual workstations and GeForce gaming devices.

However, under the guiding principle of “one architecture”, in our view, NVIDIA’s different series of chips, systems, and devices are indeed just a matter of resource allocation between AI, HPC, and graphics. So even though OVX and RTX PRO Server are also computers aimed at Omniverse, they also emphasize AI performance.

The RTX PRO Server released this time is aimed at “computing platforms for enterprise AI and industrial AI”: a total of 8 RTX PRO 6000 server version graphics cards (each card has 24,064 CUDA cores, 752 Tensor cores, 188 RT cores, 96GB GDDR7 memory—without considering connections, the computing power and storage resources of a single card are clearly beyond RTX 5090, and gaming is naturally trivial);

Based on ConnectX-8 SuperNIC switching (presumably 4 chips), it can achieve bB/s full connection bandwidth (PCIe Gen 6!); combined to achieve a theoretical peak FP4 computing power of 30 PFLOPS; when used in graphics digital twin simulation scenarios like Omniverse, it provides 3 PFLOPS of graphical performance; 800GB of memory.

By the way, the single card GeForce RTX 5090 has this value of 104.9 TFLOPS. Not considering communication latency, programming compatibility, and other practical issues, if the RTX PRO Server were to play ‘Crysis Remastered Trilogy’, it would roughly estimate that running 10 parallel 4K+ highest quality @60fps should be no problem; with AI enhancements, it would be impossible to estimate…

Of course, no enterprise would use it this way: for enterprise users, it can run various traditional hypervisors, run virtual desktops, etc.; it can also run Omniverse, as well as enterprise AI—including the currently popular Agentic AI.

It is particularly worth mentioning that this server product, in addition to the 8 GPUs, also has a board specifically for data exchange (as shown above), based on ConnectX-8 NIC—”for exchange and networking (switch first, networking second)”, “also the most advanced networking chip in the world right now.” “Each GPU has its own networking interface, and the east-west traffic network achieves full connectivity of GPUs.”

In the GTC keynote, Huang discussed the response speed to individual users and the overall AI throughput capacity (tks/s) in enterprise generative AI applications—two variables that cannot be achieved simultaneously. The improvement of performance and efficiency in hardware and software is beneficial for both. Recently, an article from Electronic Engineering Magazine also explored this issue.

When these two variables are plotted as x and y axes on a graph, for specific hardware and AI models, based on different configurations, a parabolic curve can be obtained (as shown below). The larger the area formed by the curve and the coordinate axes, the greater the value that AI infrastructure creates for enterprises.

For the newly released RTX PRO Server, NVIDIA provided data indicating that when running the Llama 70B model (8K ISL / 256 OSL), under specific configurations (usually at the point of maximum balance on the curve), its performance is 1.7 times that of H200. If switched to DeepSeek R1 (128K ISL / 4K OSL), it leads H100 HGX servers by 4 times.

Scaling Up and Scaling Out of Computing Power

As computing power demands increase, it should reach the cabinet level. Before discussing the GB300 NVL72 system, here is an interesting topic: during GTC 2025, Huang emphasized two terms related to performance scaling: scale-up and scale-out. These two terms seem difficult to translate into Chinese, so we will use the original words to refer to the corresponding concepts.

Generally, our understanding of the direction of computing power scaling is: at the chip level, improving computing power through Moore’s Law, at the packaging level, further scaling through multi-die or chiplet solutions; then at the board level, using multi-chip (multi-package) solutions; and finally, inserting many cards on a single board to form a server; servers can also interconnect through networking to form cabinets, computing clusters, etc…

Why do we often hear that NVIDIA’s so-called “ecological” advantage in the AI field is not only in CUDA and various software but also in switching and networking technology? It is because NVIDIA has achieved almost the best in every link of the aforementioned computing power scaling/stretching, and competitors are unlikely to catch up anytime soon.

In essence, computing power scaling is about bringing a bunch of computers (or chips) together to work together, but how to efficiently “combine” them is key. Scaling computing power within a single system, in NVIDIA’s definition, is scale-up; when it involves inter-system networking interconnections to achieve computing power scaling, it is scale-out. Therefore, Huang said that before scale-out, scale-up must be done first. Of course, this also involves the question of what constitutes a “system”.

We understand Huang’s definition of these two terms as that scaling computing power within a complete NVLink domain is scale-up, such as the Grace Blackwell NVL72 system. Last year at GTC, NVIDIA released the GB200 NVL72—which is a complete system composed of 72 Blackwell GPUs, and the device form has already become a cabinet.

This article does not intend to discuss scale-out—recently popular NVIDIA CPO silicon photonic chips should be classified under the scale-out computing power expansion category, so it is clear that NVIDIA is also putting effort into the scale-out direction.

As for scale-up within the NVLINK domain, “doing scale-up is very difficult.” From this year’s GTC’s new product preview, NVIDIA is preparing to expand the scale-up range to 576 GPU dies (Rubin Ultra NVL576). It seems that NVIDIA is also keen on marketing a fully scaled-up system as “a giant GPU” to emphasize the efficiency of internal collaboration. Last year, Huang mentioned that GPUs have evolved from the early small chips to the current large ones (NVL72 cabinet)…

This year, Huang’s metaphor for scale-up is even more interesting; he views NVLINK as the upper-level technology of CoWoS packaging—doesn’t that sound quite vivid? Since the NVL72 cabinet is considered a whole virtual GPU, CoWoS, as TSMC’s advanced chip packaging technology, connects chiplets together; then NVLINK, as the technology connecting these GPUs, plays a similar role, just at a different level.

In Huang’s view, the GB NVL72 system is also a larger AI computer built to break through semiconductor physical limitations.

At Computex, NVIDIA released the updated Grace Blackwell Ultra (GB300, consisting of 1 Grace CPU + 2 Blackwell Ultra GPUs). Blackwell Ultra is an improved version of Blackwell; GB300 achieves a 1.5 times improvement in FP4 computing power level (40 PFLOPS), a 1.5 times improvement in HBM3e memory performance (567GB @16TB/s), and a 2 times improvement in ConnectX-8 bandwidth (800Gb/s).

An interesting number—recently mentioned by many media, the 40 PFLOPS figure has already surpassed the Sierra supercomputer, which used 18,000 Volta architecture GPUs in 2018. This means that a single computing node can now exceed the entire supercomputer of that time. Clearly, this 4000-fold performance improvement cannot be achieved solely through chip technology advancements.

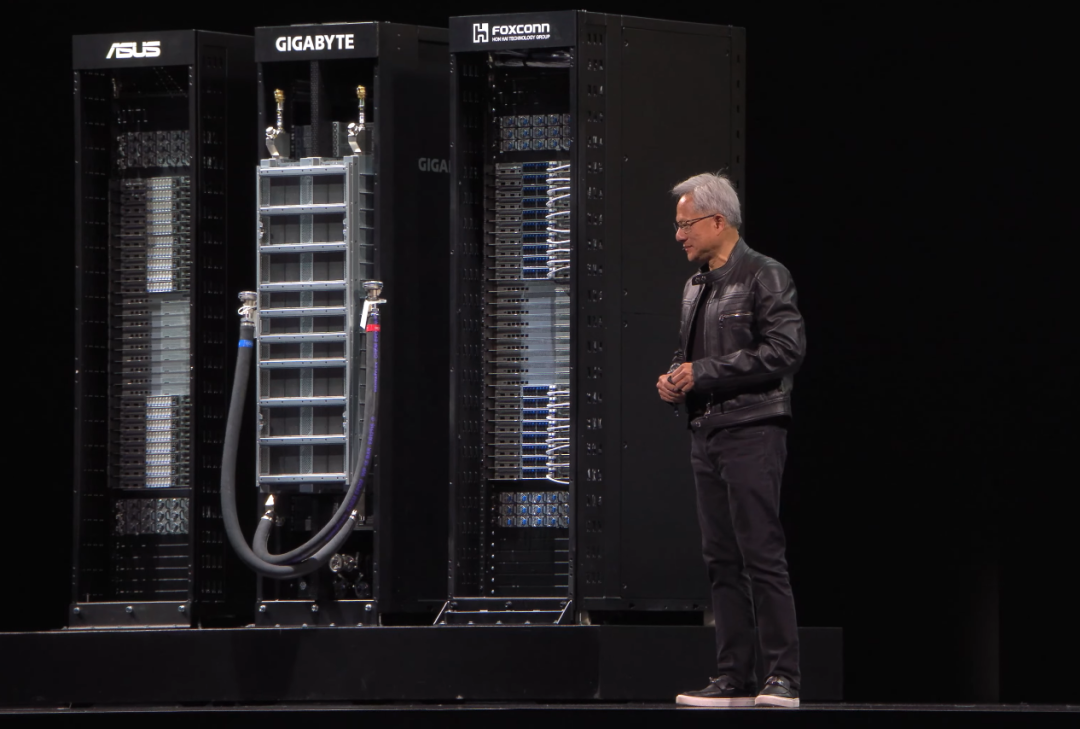

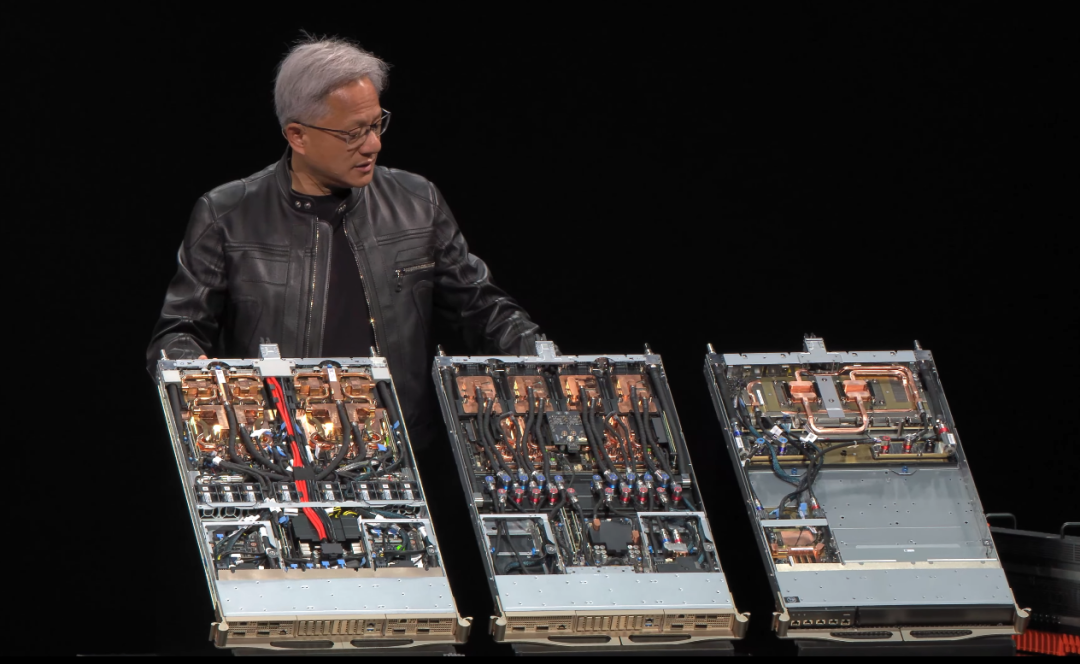

The GB300 (and B300 based on x86) servers will begin to adopt a 100% liquid cooling solution; the far right is the NVLINK switching device, which can achieve a switching rate of 7.2TB/s;

When the GB300 is further configured into the GB300 NVL72 system, the 72 GPUs once again construct a complete NVLINK domain after scaling up. Huang always likes to emphasize that the NVLINK backbone bandwidth is 130TB/s, surpassing the peak traffic rate of the entire internet; sometimes he says NVLINK turns the entire cabinet into a motherboard, and sometimes he says it makes the cabinet into a giant GPU… Overall, it is to highlight the excellence of their NVLINK technology.

However, to build a complete AI computer like the GB NVL72, NVIDIA’s introduction video also clearly stated the joint efforts of the entire industry chain. This “AI computer” cabinet is composed of 12 million components, 2 miles of copper cables, and 130 trillion transistors, weighing 1800kg (these descriptions mainly refer to the GB200 NVL72).

There is also a custom service for the “AI computer”…

Electronic Engineering Magazine now finds it rare to discuss hardware purely in articles related to NVIDIA’s products and technologies, as they repeatedly state that they are “a software company”, and that software and ecology are the driving forces behind the success of the aforementioned hardware.

Of course, during the Computex keynote, Huang inevitably devoted a large portion to the software middleware and tools built for enterprise AI, the efforts made in storage interconnect-related software; and various libraries based on CUDA: quantum computing, communication, meteorology, CAE, lithography, data science, and AI. These can be said to be the foundation for NVIDIA’s survival and development in various application fields (gaming: ???). We have discussed this quite a bit in previous articles.

However, discussing ecology does not necessarily mean discussing software: NVLINK is an ecosystem in itself and can create product differentiation. NVIDIA further advanced this differentiation at this year’s Computex—though it is not closely related to the AI computers discussed in this article—we will delve deeper into this part later.

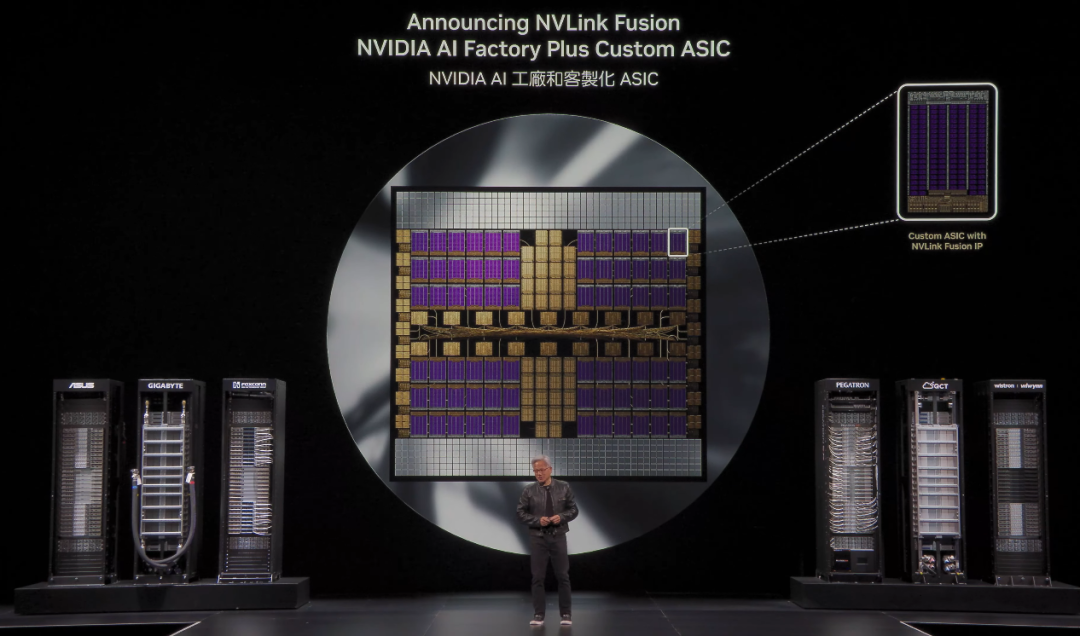

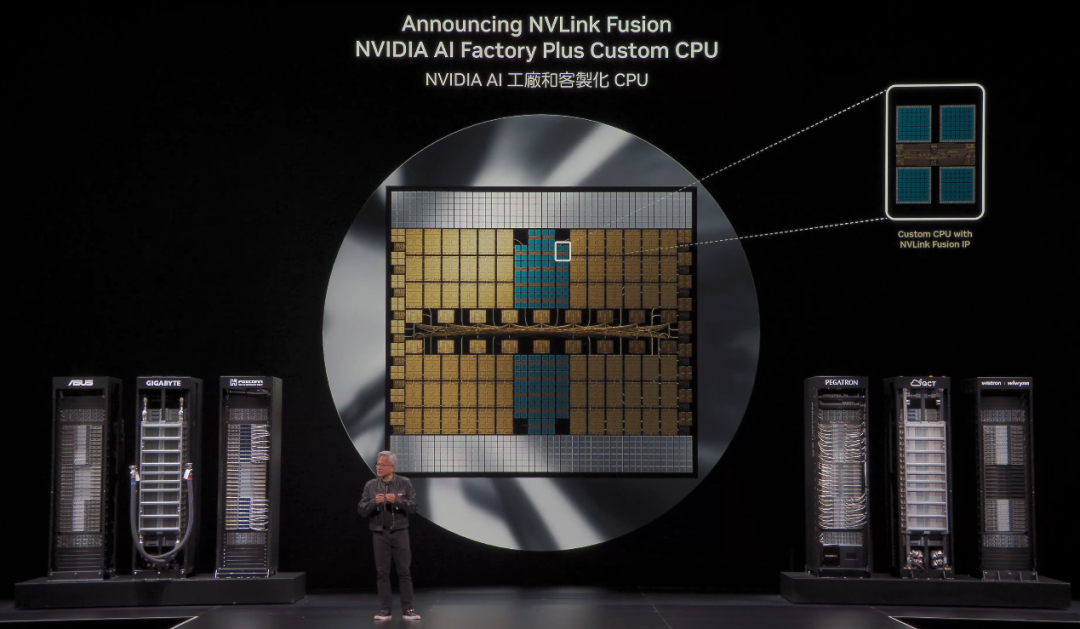

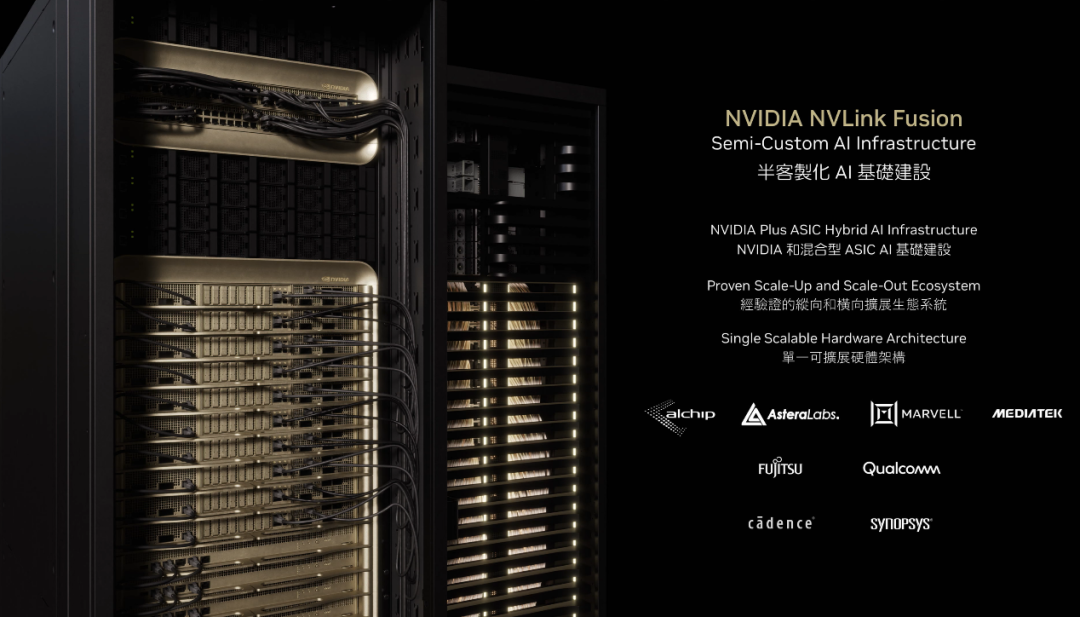

This time, NVIDIA released a custom ASIC support called “NVLink Fusion”. For the general understanding of NVIDIA AI, chips and system products are completely fixed. However, with the support of NVLink Fusion IP, customers can add their own ASICs—whether dedicated AI accelerators or other types of accelerators.

Since we currently do not have a clear understanding of the business model for NVLink Fusion practice, how it operates, and the customization costs, we will fully quote Huang’s words from the press conference:

“We have built NVLink chiplets for data exchange that are close to your (custom) chips; there will be semi-custom ASIC IP integrated. Once completed, it can be integrated into computing boards and the AI supercomputing ecosystem.” Huang explained, “Or you might want to use your own CPU—perhaps you have built a large ecosystem for your CPU and want to integrate NVIDIA into that ecosystem. Now we provide you with this possibility.”

“We will put the NVLink interface into your ASIC, connecting it to the NVLink chiplet—then directly adjacent to Blackwell or Rubin chips.” “Providing everyone with integration flexibility and openness.” In this way, “the AI infrastructure has your own components, as well as NVIDIA GPUs; and can also utilize NVLink infrastructure and ecology, such as connecting to Spectrum-X.”

To truly complete the NVLink Fusion collaboration, NVIDIA’s support alone is not enough, so many participants have joined this service, including Alchip, AsteraLabs, Marvell, MediaTek, Qualcomm, Cadence, Synopsys, Fujitsu, etc. “This includes semi-custom ASIC suppliers; companies that can build CPUs supporting NVLink; and companies like Cadence and Synopsys that provide our IP to them, and they then collaborate with customers to apply these IPs to their chips.”

Although we still know very little about the full picture of NVLink Fusion, and we do not know how difficult it is to operate or the customization costs; the release of NVLink Fusion still surprised us—NVIDIA is actually going to support different manufacturers’ CPUs and even other ASIC accelerators…

This may also be NVIDIA’s response strategy to the diversified application and personalized business needs of the AI era—and a specific action in the application-oriented chip design and software-defined era; but in any case, this is a step to deeply expand NVLink and the NVIDIA AI ecosystem, and a key to generating ecological dependence and stickiness after customer cooperation starts.

“If you buy everything from NVIDIA, nothing gives me more joy than that; but if you just buy something from NVIDIA, I will also be very happy.” (Original: Nothing gives me more joy than when you buy everything from NVIDIA. But it gives me tremendous joy if you just buy something from NVIDIA.) So the trends of NVLink Fusion are definitely worth our further attention.

Returning to the perspective of AI computers, this is a custom AI computer based on NVIDIA’s advanced technology for customers. In Huang’s words: “We are not building AI servers and data centers; we are building AI factories,” “We are not just building the next generation of IT, but a whole new industry.”

The AI factory, often compared by Huang to electrical infrastructure, is called a “factory” because NVIDIA believes that “intelligence” or tokens are the infrastructure on which humanity will rely in the future. “NVIDIA is not just a technology company; it is also a critical infrastructure company.” This is a high-level statement…

Every time we watch NVIDIA’s press conference, we feel a sense of awe from the origin of GeForce to the various scales of AI computers being unveiled to the world. “When I started the company in early 1993, I was still wondering how big our market opportunity could be. At that time, I estimated that NVIDIA’s business opportunity would be very large, probably around 300 million dollars, and we would become very wealthy.”

And now we know, from gaming graphics cards to an 1800kg giant GPU, aimed at data centers and even infrastructure, how can that still be compared to the initial estimate of 300 million?