In-depth study of Apple’s Darwin OS and XNU kernel architecture, tracing its evolution from its Mach and BSD roots to today’s macOS, iOS, and Apple Silicon. This article explores the design of the hybrid kernel, its adaptability to new hardware and security paradigms, and why XNU remains a uniquely resilient and scalable foundation for Apple platforms.

This article is the result of several weeks of research into XNU, prompted by Thomas Claburn’s article on Exclaves, which I will discuss later. I have tried to condense all the information into a single blog post. I also tried to keep sections independent so you can jump around using the table of contents; this requires some repetition in places, so thank you in advance for your patience. While I am confident in my understanding of the topic, some errors are inevitable with such dense material. If you find any mistakes, please assume they are mine and contact me so I can correct them. You can also email me or reach out on Mastodon with your thoughts. Thank you, and let’s get started!

Introduction

Apple’s Darwin operating system is a Unix-like core that underpins macOS, iOS, and all modern operating system platforms from Apple. At its core is the XNU kernel—a humorous acronym for “X is Not Unix.” XNU is a unique hybrid kernel that combines the Mach microkernel with components of BSD Unix. This design inherits the rich legacy of Mach (originating from microkernel research in the 1980s) and the robust stability and POSIX compliance of BSD. The result is a kernel architecture that balances modularity and performance by combining microkernel messaging techniques with a monolithic Unix kernel structure. We will explore the evolution of Darwin and XNU chronologically—from the origins of Mach and BSD to modern kernel features in macOS on Apple Silicon and iOS on the iPhone. Next, we will delve into architectural milestones, analyze the internal design of XNU (Mach-BSD interactions, IPC, scheduling, memory management, virtualization), and examine how the kernel and key user-space components have adapted over time to new devices and requirements.

Development History of Darwin and XNU

Origins of the Mach Microkernel (1985–1996)

The story of Darwin begins with Mach, a project started at Carnegie Mellon University in 1985 under Richard Rashid, with Avie Tevanian as one of its principal developers. Mach was envisioned as a next-generation microkernel that would address the growing complexity of UNIX kernels. Instead of a single large kernel binary, Mach provides only basic low-level functionality: memory management (virtual memory and address spaces), CPU scheduling (tasks and threads), and inter-process communication (IPC via message passing). Higher-level services (file systems, networking, device drivers, and so on) are intended to run as user-space servers on top of Mach. This separation was expected to improve reliability (a crashing driver would not bring down the entire system) and flexibility. In fact, Mach’s design allowed multiple operating system “personalities” to run side by side on a single microkernel, an idea loosely similar to modern virtualization.
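To make the microkernel idea concrete, here is a minimal Python sketch of a client reading a file through a user-space server. It is a toy model, not Mach or XNU code: the classes `Message`, `Port`, and `FileServer` and the function `client_read` are hypothetical. What it shares with Mach is only the shape of the interaction: the client never touches the server’s data directly; it sends a request to the server’s port and waits for a reply on a port of its own. Real Mach IPC uses `mach_msg()` with kernel-managed port rights, which this sketch does not model.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Message:
    """A toy request/reply: an operation, a payload, and an optional reply port."""
    op: str
    payload: dict
    reply_port: Optional["Port"] = None


@dataclass
class Port:
    """A toy kernel-managed message queue, loosely modeled on a Mach port."""
    name: str
    queue: deque = field(default_factory=deque)

    def send(self, msg: Message) -> None:
        self.queue.append(msg)

    def receive(self) -> Message:
        return self.queue.popleft()


class FileServer:
    """A hypothetical user-space file server that owns an in-memory 'disk'."""

    def __init__(self, port: Port):
        self.port = port
        self.files = {"/etc/motd": b"hello from a user-space server"}

    def handle_one(self) -> None:
        # Dequeue one request and answer it on the caller's reply port.
        msg = self.port.receive()
        if msg.op == "read":
            data = self.files.get(msg.payload["path"])
            reply = Message("reply", {"ok": data is not None, "data": data})
        else:
            reply = Message("reply", {"ok": False, "data": None})
        if msg.reply_port is not None:
            msg.reply_port.send(reply)


def client_read(server_port: Port, server: FileServer, path: str) -> dict:
    """Send a read request and wait for the reply (the toy runs the server inline)."""
    reply_port = Port("client-reply")
    server_port.send(Message("read", {"path": path}, reply_port))
    server.handle_one()  # a real kernel would schedule the server task instead
    return reply_port.receive().payload


service = Port("file-server")
server = FileServer(service)
print(client_read(service, server, "/etc/motd"))
```

Every request here costs at least two message transfers, and in a real kernel each transfer also implies context switches. That overhead is the price pure microkernels paid for their isolation.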

By 1990, Mach had evolved to Mach 2.5, which still co-located BSD kernel code in kernel space for performance. The true microkernel version, Mach 3.0, emerged between 1991 and 1994. Mach’s virtual memory (VM) system had an impact well beyond the project: it was adopted as the memory management subsystem of 4.4BSD and, through it, FreeBSD. Importantly, Mach introduced tasks (encapsulating an address space and resources, roughly equivalent to processes) and threads (units of CPU execution) as first-class kernel objects. It also implemented an efficient VM with copy-on-write and memory object abstractions, along with a message-based IPC mechanism built on Mach ports.
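Copy-on-write is easiest to see in a toy model. The Python sketch below uses hypothetical classes (not XNU’s `vm_map` or `vm_object` code) to share physical pages between a parent and a child address space after a fork, copying a page only when one side writes to it. In a real kernel the copy is triggered by a write fault on a page mapped read-only; the `refcount` check stands in for that fault here.

```python
class PhysicalPage:
    """A toy physical page with a count of address spaces mapping it."""

    def __init__(self, data: bytes):
        self.data = bytearray(data)
        self.refcount = 0


class AddressSpace:
    """A toy task address space: virtual page number -> physical page."""

    def __init__(self):
        self.pages = {}
        self.copies_made = 0

    def map_page(self, vpn: int, page: PhysicalPage) -> None:
        page.refcount += 1
        self.pages[vpn] = page

    def fork(self) -> "AddressSpace":
        # Share every page instead of copying it up front.
        child = AddressSpace()
        for vpn, page in self.pages.items():
            child.map_page(vpn, page)
        return child

    def read(self, vpn: int) -> bytes:
        return bytes(self.pages[vpn].data)

    def write(self, vpn: int, offset: int, value: int) -> None:
        page = self.pages[vpn]
        if page.refcount > 1:
            # Stand-in for the write fault: give this space a private copy.
            page.refcount -= 1
            page = PhysicalPage(page.data)
            page.refcount = 1
            self.pages[vpn] = page
            self.copies_made += 1
        page.data[offset] = value
```

After `child = parent.fork()`, both spaces point at the same page; the first `child.write(...)` gives the child its own copy and leaves the parent’s data untouched. Deferring the copy this way is what makes forking a large process cheap.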

Alongside the development of Mach, NeXT Computer (founded by Steve Jobs in 1985) needed a modern operating system for its workstations. NeXT adopted Mach early on: NeXTSTEP, released in 1989, was built on the Mach 2.5 kernel and added a 4.3BSD Unix subsystem on top. Crucially, the kernel of NeXTSTEP (later named XNU) was not a pure microkernel system with user-space servers; rather, it adopted Mach and integrated BSD code into the kernel address space for speed. In other words, NeXT utilized Mach’s abstractions (tasks, threads, IPC, VM) and ran the BSD kernel in kernel mode on top of Mach primitives. This hybrid approach sacrificed some of Mach’s extreme modularity for performance: it avoided the heavy context switching and messaging overhead that plagued pure microkernel systems at the time. The kernel of NeXTSTEP also included an object-oriented driver framework called DriverKit (written in Objective-C) for developing device drivers as objects, reflecting NeXT’s preference for high-level languages.

By the mid-1990s, Apple’s original Mac OS (Classic Mac OS) had become outdated, lacking modern operating system features such as preemptive multitasking and memory protection. In 1996, Apple went looking for an existing operating system to serve as the foundation for its future. It announced the acquisition of NeXT in December 1996 (completed in early 1997) and chose NeXTSTEP as the core of the new Mac OS. With this acquisition, NeXT’s Mach/BSD hybrid kernel came to Apple, along with Avie Tevanian (a key Mach developer), who became head of Apple’s software engineering. Apple named the new operating system project Rhapsody, which later became Mac OS X.

From Rhapsody to Mac OS X: Integrating Mach 3.0 and BSD (1997–2005)

After acquiring NeXT, Apple set out to extend the NeXTSTEP kernel with the features and hardware support the Mac required, and to update it with newer Mach and BSD technologies. Notably, Apple integrated code from OSFMK 7.3 (the Open Software Foundation’s Mach 3.0-based kernel) into XNU, so the Mach portion of XNU now derived from the true microkernel lineage of Mach 3.0 (including contributions from the University of Utah’s Mach 4 research). On the BSD side, the 4.3BSD subsystem of the NeXTSTEP kernel was upgraded with 4.4BSD and FreeBSD code, bringing a more modern BSD implementation with improved networking and a robust file system infrastructure. By combining Mach 3.0 and FreeBSD elements, Apple shaped XNU into a powerful hybrid: Mach provided the low-level kernel architecture and abstractions, while BSD supplied the Unix APIs and services.

Apple also replaced NeXT’s old DriverKit with a new driver framework called I/O Kit, written in a restricted subset of C++. I/O Kit introduced an object-oriented device driver model in the kernel, with built-in support for dynamic device matching and hot-plugging. The subset (based on Embedded C++, which omits exceptions, multiple inheritance, templates, and standard RTTI) likely helped performance and avoided the runtime overhead of Objective-C in the kernel. By the late 1990s, XNU consisted of three core components: the Mach microkernel layer (now based on OSFMK 7.3), the BSD layer (primarily sourced from FreeBSD), and I/O Kit for drivers.
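Dynamic matching can be sketched as a two-step filter. In the Python toy below, each driver advertises a “personality” (loosely echoing I/O Kit’s `IOProviderClass` and `IOProbeScore` Info.plist keys). When a device appears, drivers whose provider class and required properties match become candidates, and the highest probe score wins. Real I/O Kit matching has more stages (passive matching, active probing, and starting the driver), and the driver names and property values used with this sketch are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Personality:
    """A driver's matching criteria (hypothetical, simplified)."""
    driver: str
    provider_class: str
    required_props: dict = field(default_factory=dict)
    probe_score: int = 0


def match_driver(device_class: str, device_props: dict, personalities):
    """Return the best-matching driver name for a newly published device, or None."""
    candidates = [
        p for p in personalities
        if p.provider_class == device_class
        and all(device_props.get(k) == v for k, v in p.required_props.items())
    ]
    if not candidates:
        return None
    # Highest probe score wins; ties go to the first listed personality.
    return max(candidates, key=lambda p: p.probe_score).driver
```

Running a function like `match_driver` each time a device is published, rather than once at boot, is in spirit what lets a matching-based driver model support hot-plugging.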

Apple released the first developer previews of Mac OS X in 1999 and open-sourced Darwin 1.0 in 2000, showcasing the XNU kernel and basic Unix user space to developers. The commercial release of Mac OS X 10.0 (Cheetah) came in early 2001 (Darwin 1.3.1). Although the initial versions had poor performance, they solidified the architectural paradigm. Key early milestones included:

- Mac OS X 10.1 (Puma, 2001): Improved thread performance and added missing Unix features. Darwin 1.4.1, the core of 10.1, introduced faster thread management and real-time thread support.

- Mac OS X 10.2 (Jaguar, 2002): Darwin 6.0 synchronized the BSD layer with FreeBSD 4.4/5 and brought a host of new features: IPv6 and IPSec networking, the mDNSResponder service for zero-configuration discovery (Rendezvous, later renamed Bonjour), and journaling for the HFS+ file system. It also upgraded the toolchain to GCC 3 and added modern Unix utilities.

- Mac OS X 10.3 (Panther, 2003): Darwin 7.0/7.1 integrated improvements from the FreeBSD 5 kernel, including work toward finer-grained kernel locking (moving away from the earlier giant-lock “funnel” model) for better multiprocessor utilization. Panther’s kernel also integrated a basic firewall along with other performance tweaks, such as VM and I/O improvements.
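The difference between a giant lock and fine-grained locking can be shown with a toy table in Python (hypothetical code, not XNU’s). With `nbuckets=1`, every operation takes the same lock, as under a single kernel-wide lock; with more buckets, operations on different buckets take different locks and could proceed in parallel on a multiprocessor. Python’s global interpreter lock means this sketch demonstrates the locking structure and its correctness, not the speedup.

```python
import threading


class LockedTable:
    """A toy kernel table of counters.

    nbuckets=1 models a single giant lock: every operation serializes on it.
    nbuckets>1 models fine-grained locking: each bucket has its own lock.
    """

    def __init__(self, nbuckets: int = 1):
        self.nbuckets = nbuckets
        self.locks = [threading.Lock() for _ in range(nbuckets)]
        self.buckets = [{} for _ in range(nbuckets)]

    def _index(self, key: int) -> int:
        return key % self.nbuckets

    def incr(self, key: int) -> None:
        i = self._index(key)
        with self.locks[i]:  # only the bucket holding `key` is locked
            self.buckets[i][key] = self.buckets[i].get(key, 0) + 1

    def get(self, key: int) -> int:
        i = self._index(key)
        with self.locks[i]:
            return self.buckets[i].get(key, 0)


def hammer(table: LockedTable, keys, rounds: int) -> None:
    """Run one thread per entry in `keys`, each incrementing its key `rounds` times."""
    def worker(key: int) -> None:
        for _ in range(rounds):
            table.incr(key)

    threads = [threading.Thread(target=worker, args=(k,)) for k in keys]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Both configurations produce the same counts; the difference is how many unrelated operations a single lock forces to wait, which is what limited multiprocessor scaling under a giant lock.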

Throughout these versions, XNU remained a 32-bit kernel (limited 64-bit user process support arrived in 10.4). Apple initially shipped only for PowerPC, the CPU architecture Macs used at the time, while quietly keeping the source code compatible with Intel x86 (inherited from NeXTSTEP’s x86 support) in preparation for a future transition.

Mac OS X 10.4 (Tiger, 2005) brought significant architectural changes. Its mature BSD layer moved Darwin 8 close to conformance with the Single UNIX Specification, although formal UNIX 03 certification (which allows a system to legally use the UNIX name) was granted later, to Mac OS X 10.5 Leopard on Intel. Tiger also featured kernel facilities such as kqueue/kevent (adopted from FreeBSD for scalable event handling) and kept XNU cross-platform in preparation for Intel Macs. In 2005, Apple announced that Macs would transition to Intel x86 processors. XNU’s Mach foundation eased this move, because Mach hides many low-level hardware details behind a portability layer. In early 2006, Apple shipped Mac OS X 10.4.4 for Intel, running XNU on 32-bit x86 with most of the code shared with the PowerPC version.
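The scalability argument for kqueue can be illustrated with a toy model in Python. The class and function names are hypothetical; the real interface is the `kqueue()` and `kevent()` system calls with filters such as `EVFILT_READ`. Interest is registered once, and the kernel appends events to a ready list as they happen, so a wait returns only what is ready. A `poll()`/`select()`-style check, by contrast, scans every registered descriptor on every call.

```python
from collections import deque


class ToyKqueue:
    """Toy kqueue-style event delivery (not the real system-call interface)."""

    def __init__(self):
        self.interest = {}    # ident -> filter name, registered once
        self.ready = deque()  # (ident, filter) events waiting to be reported

    def register(self, ident: int, filt: str) -> None:
        self.interest[ident] = filt

    def kernel_signal(self, ident: int) -> None:
        """Called by the toy 'kernel' when activity occurs on `ident`."""
        if ident in self.interest:
            self.ready.append((ident, self.interest[ident]))

    def wait(self, max_events: int) -> list:
        """Return up to max_events ready events; cost tracks the ready count."""
        out = []
        while self.ready and len(out) < max_events:
            out.append(self.ready.popleft())
        return out


def poll_style(fds, readable):
    """select()/poll()-style check: every call scans every descriptor."""
    ready, scanned = [], 0
    for fd in fds:
        scanned += 1
        if fd in readable:
            ready.append(fd)
    return ready, scanned
```

With 10,000 registered descriptors and two active ones, the kqueue-style wait touches two events while the scanning approach touches all 10,000, which is why servers with many mostly idle connections benefit.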

Transition to 64-bit, Multicore, and iPhone OS (2005–2010)

By the mid-2000s, computing had shifted to multicore 64-bit architectures, and Apple’s operating systems had to evolve accordingly. Mac OS X 10.5 Leopard (2007), based on Darwin 9, was a milestone release for XNU. It greatly extended 64-bit support: whereas earlier versions could run only limited 64-bit command-line processes, Leopard allowed full 64-bit applications, including Cocoa GUI apps, on 64-bit hardware (x86-64 and PowerPC G5). The kernel itself still ran in 32-bit mode; a 64-bit kernel arrived with Snow Leopard. Leopard also dropped support for older hardware such as PowerPC G3 Macs and introduced security and diagnostics features: Address Space Layout Randomization (ASLR) to make exploitation harder, a sandboxing facility to restrict what processes can do, and the DTrace tracing framework from Solaris. Leopard was also the last version of Mac OS X to support PowerPC, as Apple moved its entire product line to Intel.
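The core of ASLR is a random, page-aligned “slide” added to an image’s link-time addresses at load time. The Python sketch below is a toy illustration under assumed parameters: the 4 KiB alignment and 256-page slide range are chosen for the example, not taken from any macOS release. It shows that absolute addresses change from run to run while the distances between symbols stay fixed, which is why position-independent code keeps working.

```python
import random

PAGE = 0x1000  # assumed alignment for the toy slide (4 KiB)


def choose_slide(rng: random.Random, max_pages: int) -> int:
    """Pick a random, page-aligned load offset (the 'slide')."""
    return rng.randrange(max_pages) * PAGE


def slide_symbols(symbols: dict, slide: int) -> dict:
    """Relocate link-time addresses by the slide; relative offsets are preserved."""
    return {name: addr + slide for name, addr in symbols.items()}


rng = random.Random()  # unseeded: a different layout on each run
linked = {"main": 0x100003F00, "helper": 0x100003F40}
loaded = slide_symbols(linked, choose_slide(rng, 256))
print({name: hex(addr) for name, addr in loaded.items()})
```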

In 2007, Apple also launched the iPhone, whose “iPhone OS” (later renamed iOS) was also built on Darwin. The first iPhone OS was based on Darwin 9, the same core as Leopard. This showcased the versatility of XNU: with the same kernel version, Apple could target PowerPC and x86 servers, consumer Intel laptops, and resource-constrained ARM mobile devices. The kernel gained support for the ARM architecture and was tailored for mobile devices. For instance, because early iPhones had very little memory and no swap, the kernel’s memory management had to handle memory pressure proactively. Apple introduced the Jetsam mechanism in iPhone OS, which monitors low-memory situations and terminates background applications to free memory, since swapping to the small, wear-sensitive flash storage was impractical. iPhone OS also sandboxed all third-party applications by design and required strict code signing for binaries. These security measures are enforced by XNU’s Mach and BSD layers (for example, Mach task ports and in-kernel code signing enforcement), with signature verification assisted by user-space daemons such as amfid.
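Jetsam’s basic policy can be sketched as a priority-ordered low-memory killer. In the Python toy below, each process has a priority band (higher means more important) and a resident size. While free memory is below a threshold, the least important process is terminated, largest first within a band, and processes at or above a protected band are never chosen. The band numbers, sizes, and tie-breaking rule are assumptions for illustration; XNU’s actual jetsam priorities and policies are more involved.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    name: str
    band: int     # priority band: higher means more important (assumed scale)
    rss_mb: int   # resident memory in MB


def jetsam(procs, free_mb, threshold_mb, protected_band=100):
    """Kill processes until free memory reaches the threshold.

    Returns (names killed in order, surviving processes, free memory afterwards).
    """
    candidates = sorted(
        (p for p in procs if p.band < protected_band),
        key=lambda p: (p.band, -p.rss_mb),  # least important first, largest first
    )
    survivors = list(procs)
    killed = []
    for victim in candidates:
        if free_mb >= threshold_mb:
            break
        survivors.remove(victim)
        killed.append(victim.name)
        free_mb += victim.rss_mb  # its memory is returned to the free pool
    return killed, survivors, free_mb
```

Because the foreground app sits in a higher band than background apps, it is killed only after everything less important is gone, which matches the behavior the text describes: background apps are sacrificed first.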

Mac OS X 10.6 Snow Leopard (2009) marked the maturity of XNU on 64-bit Intel. Snow Leopard (Darwin 10) dropped support for PowerPC entirely, leaving XNU as an Intel-only kernel built for both i386 and x86_64. It was also the first version of Mac OS X to offer an optional fully 64-bit kernel (most Macs defaulted to a 32-bit kernel with 64-bit user space; Xserve defaulted to the 64-bit kernel). Snow Leopard brought significant concurrency improvements: it introduced Grand Central Dispatch (libdispatch) for task parallelism in user space, backed by kernel support for thread workqueues. Although libdispatch is a user-space library, it works closely with the kernel, which manages the pool of worker threads that service the queues. Another new feature was OpenCL for GPU computing, which also required tight integration between user frameworks and kernel drivers. Snow Leopard’s focus on Intel and multicore optimization made XNU more efficient.

On the mobile front, iPhone OS 3 (2009) and iOS 4 (2010, the release that introduced the name “iOS”) followed, adding support for Apple’s A4 and A5 ARM chips and features like multitasking. In iOS 4, the XNU scheduler was adjusted to handle background applications with different priority bands (foreground, background, and so on) and to support emerging multicore ARM SoCs (for example, the dual-core Apple A5 in 2011). The iOS and macOS kernels remained largely unified, using conditional code to handle platform differences. Mac OS X 10.7 Lion (2011) required Macs with 64-bit CPUs, though it could still boot a 32-bit kernel on some models; the 32-bit kernel was dropped entirely in OS X 10.8 Mountain Lion, reflecting the industry’s move beyond 32-bit. Lion (Darwin 11) also improved sandboxing and added full support for features like Automatic Reference Counting (ARC) in Objective-C (compiler and runtime changes reflected in the system).

Evolution of Modern macOS and iOS (2011–2020)

From 2011 onward, Apple’s operating system versions have been released annually, with Darwin continuing to receive incremental but significant enhancements to support new hardware and features:

- OS X 10.8 Mountain Lion (2012) and 10.9 Mavericks (2013) (Darwin 12 and 13) introduced power and memory optimizations in the kernel. Mavericks added compressed memory, a kernel feature that compresses inactive pages in RAM instead of immediately swapping them to disk. This aligned with iOS’s techniques for coping with limited RAM and kept Macs more responsive under memory pressure. Mavericks also implemented timer coalescing, in which the kernel groups timer-driven wake-ups that fall within an acceptable tolerance so the CPU can stay idle longer and use less power. These changes show the kernel adapting to energy-efficiency demands influenced by mobile design. Mavericks also introduced App Nap, and OS X 10.10 and iOS 8 followed with thread Quality of Service (QoS) classes, which rely on the kernel scheduler to set thread priorities and resource limits from QoS hints (for example, background tasks versus user-initiated tasks). The XNU scheduler has continued to evolve to support these multiple priority bands and energy-efficient scheduling.

- OS X 10.10 Yosemite (2014) and 10.11 El Capitan (2015) (Darwin 14 and 15) continued this trend. A significant security addition in El Capitan was System Integrity Protection (SIP). SIP (also known as “rootless”) is enforced by the kernel and can prevent even root processes from tampering with critical system files and processes. It is implemented through the mandatory access control (MAC) framework in the BSD layer, hardening the operating system by placing more trust in the kernel than in user space. A similar “rootless” concept was applied to iOS (iOS 9 in 2015). Darwin 15 also saw Apple further unify the OS X and iOS codebases as watchOS and tvOS (both also based on Darwin) arrived: XNU had to run on Apple Watch hardware as small as the S1 chip as well as on powerful Mac Pros, with scalable scheduling, memory, and I/O. By this point, XNU supported ARM64 (64-bit ARMv8, first used in the iPhone 5s in 2013), and iOS 11 (2017) would later drop support for 32-bit ARM.

- macOS 10.12 Sierra (2016), 10.13 High Sierra (2017), and 10.14 Mojave (2018) (Darwin 16 to 18) brought file system changes and further security work. High Sierra made APFS (Apple File System) the new default file system on Macs, replacing HFS+. APFS relies on features such as snapshots, clones, and native encryption, and XNU’s VFS layer (in the BSD component) was extended to accommodate them and APFS’s performance characteristics. Kernel extension (kext) security was also tightened during this period: High Sierra required user approval to load third-party kexts, and Mojave introduced stricter code signing checks and the Hardened Runtime for user-space processes, which also affected how the kernel verifies and permits certain operations. Another adaptation was external device support: High Sierra’s support for eGPUs over Thunderbolt required improved hot-plug handling in I/O Kit and scheduling for external PCIe devices.

- macOS 10.15 Catalina (2019) (Darwin 19) was a significant step in modernizing XNU. Catalina was the first version to drop support for 32-bit applications entirely (only 64-bit applications can run, and the kernel had been 64-bit-only for years). More notably, Apple introduced a new approach to device drivers: DriverKit, which reuses the name of NeXT’s old driver framework but has a new design. DriverKit allows many drivers to run in user space as driver extensions (dexts) outside the kernel. This is a shift toward the microkernel idea for third-party code: by moving drivers (USB, networking, and others) into user-space processes, Apple improved system stability and security, because a faulty driver running outside the kernel cannot crash it. XNU was adjusted accordingly: instead of loading driver code as kexts, the kernel gives user-space drivers controlled access to hardware through IPC and shared memory. Catalina also split the operating system into a separate read-only system volume, strengthening SIP (the kernel now treats system files as immutable at runtime). These changes show that, decades after its inception, XNU’s architecture can safely move responsibilities into user space using Mach IPC.
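Timer coalescing is essentially an interval problem: each timer may fire anywhere between its deadline and its deadline plus an allowed tolerance (leeway), and the goal is to cover all timers with as few wake-ups as possible. The Python sketch below uses the classic greedy for this: sort by latest allowed time, wake at the earliest such time, and service every pending timer whose window contains it. It illustrates the idea under that model, not XNU’s timer-call implementation, and the timer values are invented.

```python
def coalesce(timers):
    """Group timers into wake-ups.

    timers: list of (deadline, leeway) pairs; timer i may fire at any time t
    with deadline <= t <= deadline + leeway.
    Returns a list of (wake_time, sorted timer indices serviced at that wake-up).
    """
    order = sorted(range(len(timers)), key=lambda i: timers[i][0] + timers[i][1])
    pending = set(range(len(timers)))
    wakeups = []
    for i in order:
        if i not in pending:
            continue
        # Wake as late as timer i allows. Every still-pending timer has a window
        # ending no earlier than this, so it can join the batch if its deadline
        # has already passed.
        wake = timers[i][0] + timers[i][1]
        batch = [j for j in order if j in pending and timers[j][0] <= wake]
        pending.difference_update(batch)
        wakeups.append((wake, sorted(batch)))
    return wakeups


timers = [(100, 10), (105, 10), (108, 5), (200, 0), (112, 20)]
print(coalesce(timers))  # [(110, [0, 1, 2]), (132, [4]), (200, [3])]
```

Five timers are serviced with three wake-ups. The larger the leeway a timer declares, the more often it can share a wake-up, which is why APIs that let callers state a tolerance help the kernel save power.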

The Era of Apple Silicon (2020 to Present)

In 2020, Apple underwent another significant transformation, moving the Mac product line from Intel CPUs to its own ARM64 systems-on-chip (Apple Silicon, starting with the M1). While Darwin had long supported ARM because of iOS, running macOS on ARM64 brought new challenges and opportunities. macOS 11 Big Sur (2020), corresponding to Darwin 20, was the first version for Apple Silicon Macs. XNU was already cross-platform, but on the Mac it now had to handle heterogeneous, big.LITTLE-style CPU configurations that combine high-performance cores with energy-efficient cores (a design Apple’s iPhone chips had used since the A10 Fusion). The scheduler is heterogeneity-aware, aiming to run high-priority, demanding threads on performance cores while background and low-QoS threads can be scheduled on efficiency cores to save power. Apple may use thread QoS classes (introduced in earlier macOS and iOS releases) to steer threads to the appropriate core type, extending the Mach scheduling model to asymmetric multiprocessing.
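A hypothetical QoS-aware placement policy can be sketched in a few lines. The Python toy below orders threads by QoS class (the class names mirror Darwin’s QoS classes, but the numeric ranks are arbitrary), lets `DEFAULT` and higher classes prefer performance cores and spill onto efficiency cores, and confines `UTILITY` and `BACKGROUND` work to efficiency cores. This is an assumed policy for illustration only; Apple does not document XNU’s heterogeneous scheduler at this level, and a real scheduler also time-slices and migrates threads rather than assigning them once.

```python
from enum import IntEnum


class QoS(IntEnum):
    """Darwin-style QoS classes; the numeric ranks are arbitrary."""
    BACKGROUND = 1
    UTILITY = 2
    DEFAULT = 3
    USER_INITIATED = 4
    USER_INTERACTIVE = 5


def place_threads(threads, p_cores, e_cores):
    """Assign each (name, qos) thread to 'P', 'E', or 'queued' (no core free)."""
    free = {"P": p_cores, "E": e_cores}
    placement = {}
    # Highest QoS first; sorted() is stable, so equal-QoS threads keep input order.
    for name, qos in sorted(threads, key=lambda t: t[1], reverse=True):
        prefs = ["P", "E"] if qos >= QoS.DEFAULT else ["E"]
        for core in prefs:
            if free[core] > 0:
                free[core] -= 1
                placement[name] = core
                break
        else:
            placement[name] = "queued"
    return placement
```

The design choice worth noticing is the asymmetry: important work may borrow efficiency cores when performance cores are busy, but background work is never promoted, so it cannot cost the power of a performance core.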

Another aspect of Apple Silicon is its unified memory architecture, in which the CPU and GPU share the same memory. Applications usually reach this through frameworks, but underneath, the kernel’s memory manager works with GPU drivers (now Apple’s own, integrated via I/O Kit) to share buffers without expensive copying. The Mach VM abstraction suits this well: memory objects can be shared between user space and the GPU by remapping pages instead of copying them. Apple Silicon also relies on hardware security features such as Pointer Authentication (PAC, introduced with Apple’s A12 chips and present on M-series chips). The ARM64 backend of XNU must support PAC, which mitigates ROP¹ attacks by cryptographically signing return addresses, function pointers, and saved thread state in exception frames, and potentially memory tagging (Arm’s Memory Tagging Extension, MTE) on chips that implement it, to detect memory errors. These are deep, architecture-specific kernel enhancements that improve security on new hardware.
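The idea behind pointer authentication can be shown with a software toy. The Python sketch below “signs” a pointer by computing a keyed MAC over the pointer and a context value (the modifier, for example a stack pointer) and storing a truncated MAC in the upper bits that a 48-bit virtual address leaves unused; authentication recomputes the MAC and rejects a pointer whose address or context has changed. Everything here is an assumption for illustration: real PAC is computed in hardware by instructions such as `PACIA` and `AUTIA` with keys held in system registers, uses a block cipher (QARMA in Arm’s reference design; Apple’s implementation may differ) rather than HMAC-SHA256, and the number of PAC bits depends on the virtual address size and tagging configuration.

```python
import hashlib
import hmac

VA_BITS = 48                          # assumed virtual-address width
VA_MASK = (1 << VA_BITS) - 1
PAC_MASK = (1 << (64 - VA_BITS)) - 1  # toy: all 16 upper bits hold the code


def _pac(key: bytes, ptr: int, modifier: int) -> int:
    """Keyed MAC over (pointer, modifier), truncated to the spare upper bits."""
    msg = ptr.to_bytes(8, "little") + modifier.to_bytes(8, "little")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "little") & PAC_MASK


def sign(key: bytes, ptr: int, modifier: int) -> int:
    """Toy analogue of a PAC* instruction: embed the code above the address bits."""
    ptr &= VA_MASK
    return ptr | (_pac(key, ptr, modifier) << VA_BITS)


def auth(key: bytes, signed: int, modifier: int) -> int:
    """Toy analogue of an AUT* instruction: verify and strip the code.

    Real hardware does not raise an exception here; a failed check yields an
    invalid pointer that faults when used (or traps directly on cores with FPAC).
    """
    ptr = signed & VA_MASK
    if (signed >> VA_BITS) != _pac(key, ptr, modifier):
        raise ValueError("pointer authentication failed")
    return ptr
```

An attacker who overwrites a saved return address must also forge the matching code without knowing the key, and a signed pointer replayed in a different context (a different modifier) fails too. That is what makes classic ROP chains built from overwritten return addresses much harder to assemble.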

Apple Silicon also prompted a rethink of virtualization. On Intel Macs, XNU had long supported virtualization through the Hypervisor framework (introduced in OS X 10.10 Yosemite), which lets user-space programs run virtual machines using Intel VT-x hardware support. With Apple Silicon, macOS 11 brought a hypervisor built on the ARM64 virtualization extensions, along with the new Virtualization framework. Notably, although the open-source XNU code does not include the Apple Silicon hypervisor, the shipping kernel initializes hypervisor support when running on suitable Apple chips. This lets macOS on M1/M2 run lightweight virtual machines (Linux or macOS guests, for example) entirely from user-space controllers, similar to Linux KVM. On iOS devices, Apple has long disabled or restricted hypervisors (there is no public API), although the hardware capability appeared with the A14 chip. Enthusiasts quickly discovered that hypervisor support could be enabled on jailbroken A14 devices to run Linux VMs.

Beyond CPUs and virtualization, Apple Silicon Macs also run many of the same daemons and services as iOS, a sign that the system architectures are converging. XNU now runs on everything from Mac desktops and servers to phones, watches, and TVs, and even in bridgeOS (a watchOS-derived Darwin variant that runs on the T2 coprocessor in Intel Macs for device management). Darwin’s flexibility and scalability stem from its Mach foundation: it abstracts hardware details at the platform layer, so adding a new CPU architecture (PowerPC → x86 → ARM64) or scaling down to limited hardware largely requires implementing Mach’s low-level interfaces (such as pmap for the MMU and thread context switching) while leaving the high-level kernel logic unchanged. This design has paid off in each of Apple’s transitions.
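The portability argument can be sketched with a toy pmap layer. In the Python model below, machine-independent code (`map_region`) is written once against an abstract `Pmap` interface, and each architecture supplies only its page size and page-table-entry encoding. The method names loosely echo XNU’s `pmap_enter` and `pmap_extract`, but the classes and signatures are hypothetical and the PTE formats heavily simplified: the x86-64 backend uses 4 KiB pages with present and writable bits in bits 0 and 1, and the ARM64 backend uses the 16 KiB pages found on Apple Silicon, with a valid-page marker in bits 0 and 1 and a read-only flag in bit 7.

```python
from abc import ABC, abstractmethod


class Pmap(ABC):
    """Machine-dependent layer: what a new architecture must implement (toy)."""
    PAGE_SIZE = 0

    def __init__(self):
        self.ptes = {}  # virtual page number -> encoded page-table entry

    @abstractmethod
    def make_pte(self, ppn: int, writable: bool) -> int:
        """Encode a PTE in this architecture's (simplified) format."""

    @abstractmethod
    def pte_to_ppn(self, pte: int) -> int:
        """Decode the physical page number from a PTE."""

    def enter(self, va: int, pa: int, writable: bool = True) -> None:
        self.ptes[va // self.PAGE_SIZE] = self.make_pte(pa // self.PAGE_SIZE, writable)

    def extract(self, va: int):
        pte = self.ptes.get(va // self.PAGE_SIZE)
        if pte is None:
            return None
        return self.pte_to_ppn(pte) * self.PAGE_SIZE + va % self.PAGE_SIZE


class X86_64Pmap(Pmap):
    PAGE_SIZE = 4096

    def make_pte(self, ppn, writable):
        return (ppn << 12) | 0b1 | (int(writable) << 1)  # present, R/W

    def pte_to_ppn(self, pte):
        return pte >> 12


class Arm64Pmap(Pmap):
    PAGE_SIZE = 16384

    def make_pte(self, ppn, writable):
        return (ppn << 14) | 0b11 | (int(not writable) << 7)  # valid page, read-only flag

    def pte_to_ppn(self, pte):
        return pte >> 14


def map_region(pmap: Pmap, va: int, pa: int, length: int) -> int:
    """Machine-independent code: identical for every backend. Returns pages mapped."""
    size = pmap.PAGE_SIZE
    npages = (length + size - 1) // size
    for i in range(npages):
        pmap.enter(va + i * size, pa + i * size)
    return npages
```

Porting the toy to a new architecture means writing one more `Pmap` subclass; `map_region` and anything built on it stays untouched, which mirrors the transitions described above.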

In summary, over the past two decades, XNU has undergone significant transformations while retaining its core identity. Table 1 highlights the timeline of milestones and architectural changes in Darwin/XNU:

| Year | Release (Darwin Version) | Key Kernel Developments |
| --- | --- | --- |
| 1989 | NeXTSTEP 1.0 (Mach 2.5 + 4.3BSD) | Launch of NeXT’s hybrid kernel: Mach with BSD co-located in kernel space for performance. Drivers via Objective-C DriverKit. |
| 1996 | NeXT acquired by Apple | Development of Rhapsody begins, based on OpenStep. XNU is to be upgraded with Mach 3 and FreeBSD code. |
| 1999 | Mac OS X Server 1.0 (Darwin 0.x) | First Darwin versions (0.1–0.3) released as Apple integrates OSFMK Mach 3.0 (OSF/1) and FreeBSD into XNU. |
| 2001 | Mac OS X 10.0 (Darwin 1.3) | Darwin 1.x: core of OS X launched with hybrid kernel, BSD user space, and Cocoa API. Early performance tweaks for Mach/BSD integration. |
| 2003 | Mac OS X 10.3 (Darwin 7) | XNU synchronized with FreeBSD 5, improving SMP scalability (finer-grained locking). |
| 2005 | Mac OS X 10.4 (Darwin 8) | Mature BSD layer (formal UNIX 03 certification followed with 10.5). Ready to support Intel x86 (using Mach’s portability layer). |
| 2006 | Intel-based Mac OS X (Darwin 8.x) | Apple transitions Macs to x86. XNU supports universal binary drivers and Rosetta translation (user-space emulation of PowerPC on x86). |
| 2007 | Mac OS X 10.5 (Darwin 9) | 64-bit user space, including GUI apps, on a still-32-bit kernel; last release for PowerPC; UNIX 03 certified on Intel. Security: NX support, ASLR, code signing, sandboxing introduced. iPhone OS 1 (Darwin 9) released on ARM, extending XNU to mobile devices (no swapping, sandbox always on). |
| 2009 | Mac OS X 10.6 (Darwin 10) | Intel-only (dropped PowerPC). Optional 64-bit kernel (default on Xserve); Grand Central Dispatch (kernel-managed thread workqueues); OpenCL support. iPhone OS 3 (Darwin 10) added improved power management. |
| 2011 | Mac OS X 10.7 (Darwin 11) | Requires 64-bit CPUs (the 32-bit kernel was dropped in 10.8). Expanded sandbox, FileVault 2 full-disk encryption. iOS 5 brings dual-core scheduling. |
| 2013 | OS X 10.9 (Darwin 13) | Power optimizations: compressed memory, timer coalescing in the kernel, App Nap. Thread QoS classes followed in OS X 10.10 and iOS 8. |
| 2015 | OS X 10.11 (Darwin 15) | System Integrity Protection (kernel-enforced security). Enhanced AMFI (Apple Mobile File Integrity) for code signing in the kernel and user helpers (amfid). iOS 9 / watchOS (Darwin 15) debut on new device categories, with the kernel running on Apple Watch (ARM Cortex-A7). |
| 2017 | macOS 10.13 (Darwin 17) | APFS becomes the default file system on Mac (already in iOS 10.3). Kernel changes for cloning and snapshots. Kext loading requires user approval. iOS 11 drops 32-bit ARM apps and devices; fully 64-bit. |
| 2019 | macOS 10.15 (Darwin 19) | Start of the transition away from in-kernel I/O Kit drivers: DriverKit introduced for user-space drivers. System extensions move networking and endpoint security features out of the kernel. macOS splits off a read-only system volume to strengthen kernel protection of OS files. |
| 2020 | macOS 11.0 (Darwin 20) | Apple Silicon support: XNU on ARM64 Macs (M1). Kernel adapts to heterogeneous cores and unified memory. Rosetta 2 translation layer (user-space ahead-of-time and JIT translation, kernel-enforced memory protection for translated code). iOS 14 (A14): hardware virtualization support present but not exposed through a public API. |
| 2022 | macOS 13 (Darwin 22) | Continued improvements for Apple Silicon (e.g., high power mode on M1 Max, kernel scheduling adjustments). iOS 16: XNU adds support for virtualized iOS/macOS guests (for Xcode simulator and developer mode features). |
| 2023 | macOS 14 (Darwin 23) | Ongoing improvements and tuning for M2/M3 chips. Darwin remains the universal core for visionOS (Apple Vision Pro AR headset). |

This timeline illustrates how the Mach/BSD core of XNU has become a stable foundation that Apple can incrementally enhance: adding 64-bit support, embracing multicore, strengthening security, and porting to new architectures while maintaining backward compatibility. Next, we will delve into the internal architecture of XNU—the hybrid kernel design that makes all this possible.

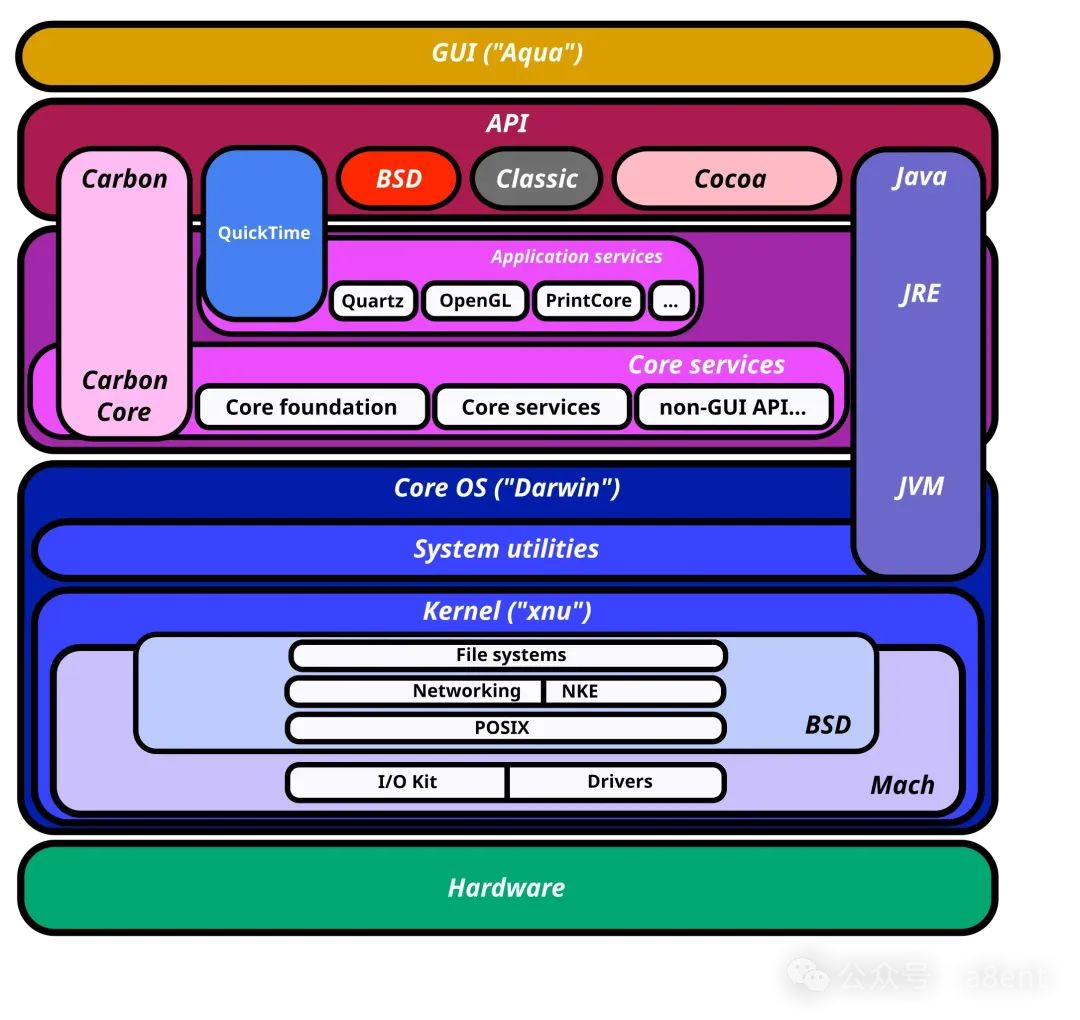

File: Mac OS X Architecture Diagram.svg. (December 29, 2024). Wikimedia Commons. Retrieved April 3, 2025, 22:59 from https://commons.wikimedia.org.

Conclusion

Darwin and XNU provide a compelling case study of an operating system that is neither a pure microkernel nor a monolithic kernel, but a deliberate combination of both. Its evolution illustrates the trade-offs in operating system design: performance versus modularity, generality versus specialization. XNU’s Mach foundation was once considered a performance burden, but it has proven adaptable to new architectures and capable of supporting system-wide capabilities such as hosting other operating systems in virtual machines on Apple Silicon and fine-grained sandboxing. Meanwhile, the BSD layer gives developers and applications a rich, POSIX-compliant environment with the expected UNIX tools and APIs, greatly simplifying platform adoption and software portability.

In modern times, as hardware trends shift towards dedicated processors and greater parallelism, XNU continues to adopt new technologies (such as scheduling queues, QoS scheduling, and direct support for machine learning accelerators through drivers) while maintaining robustness. The Darwin OS also provides researchers with a window into a commercial-grade hybrid kernel through its open-source version (albeit not a very good window), inspiring efforts in operating system architecture that blend ideas from both camps of the classic microkernel debate.

Thus, Apple’s Darwin has evolved from a niche NeXTSTEP OS into the core of millions of devices, tracing its lineage back to Mach and BSD. Each major transition—whether a new CPU architecture (PowerPC→Intel→ARM), new device categories, or new security paradigms—has seen XNU respond with architectural answers: extending (rather than rewriting) the kernel, tightly integrating components when necessary, and isolating where possible through Mach IPC. This balanced evolution of the Darwin kernel showcases successful long-term operating system design, remaining at the forefront of commercial operating systems built on decades of operating system research.

Tanuj Ravi Rao