1

Introduction

A year ago, while researching an embedded device, I found its acoustic configuration logic intriguing, but I shifted my focus to vulnerability exploitation and did not dig deeper.

Recently, while studying wireless communication such as sub-GHz, Bluetooth, and other RF links, I became interested again in transmitting information over sound. This article documents some of my findings and insights.

2

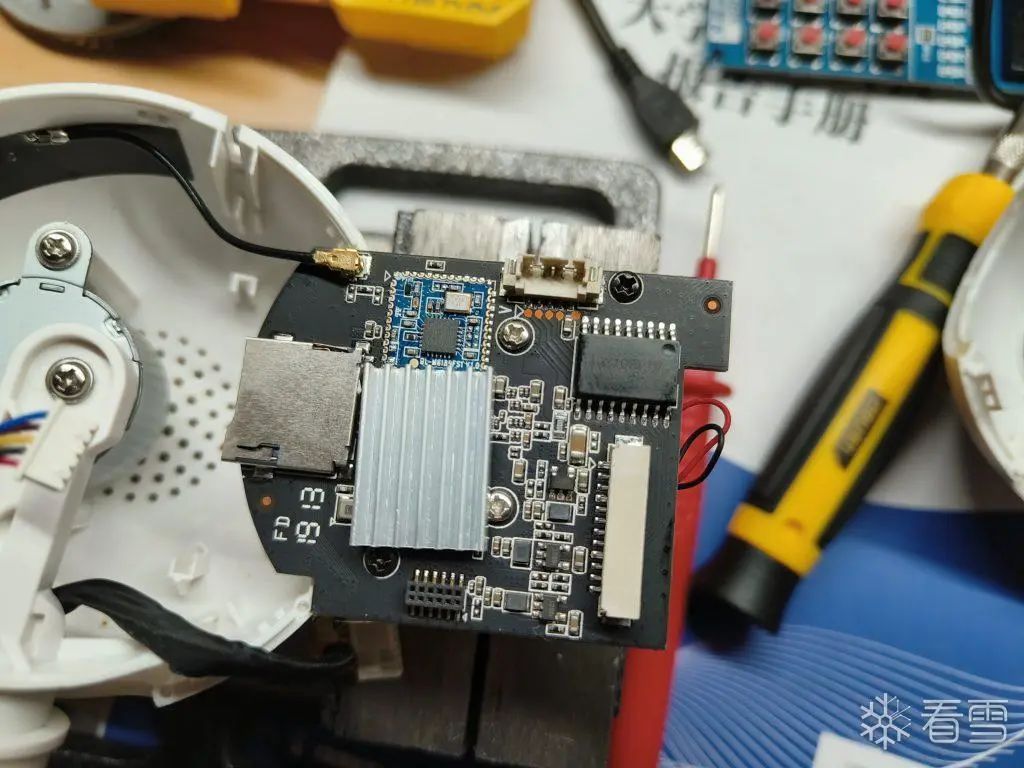

Hardware Analysis

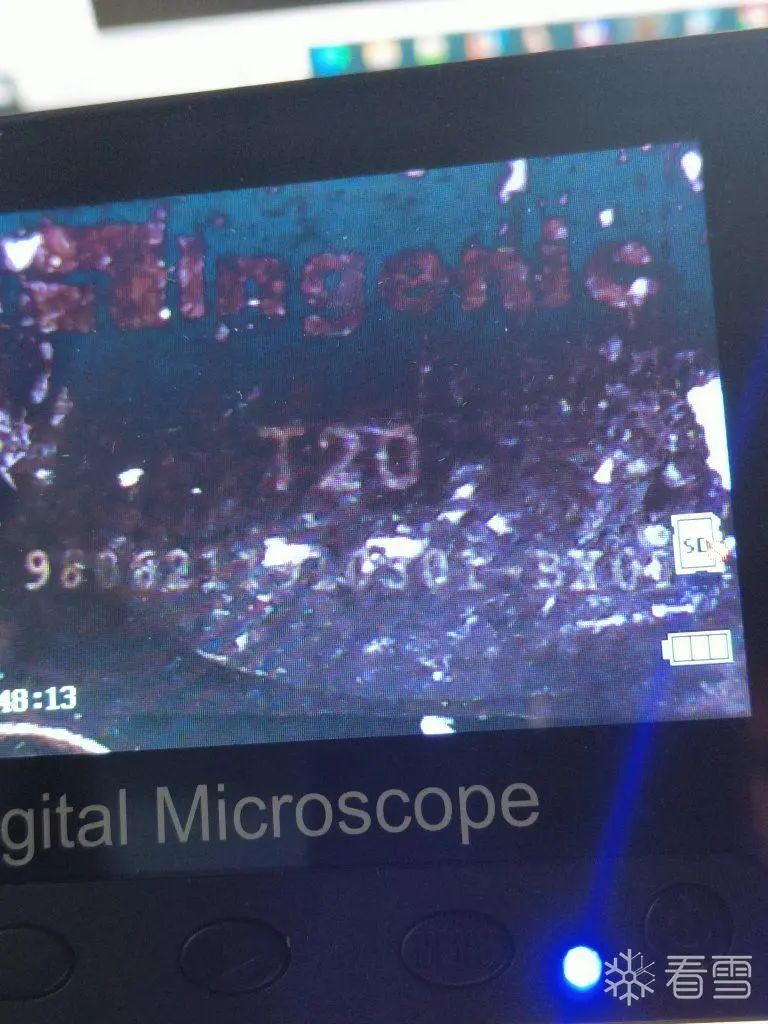

After removing the heat sink, the markings on the main control chip can be read with a magnifying glass:

The chip vendor's official website: Ingenic (Beijing Junzheng Integrated Circuit Co., Ltd.), http://www.ingenic.com.cn/

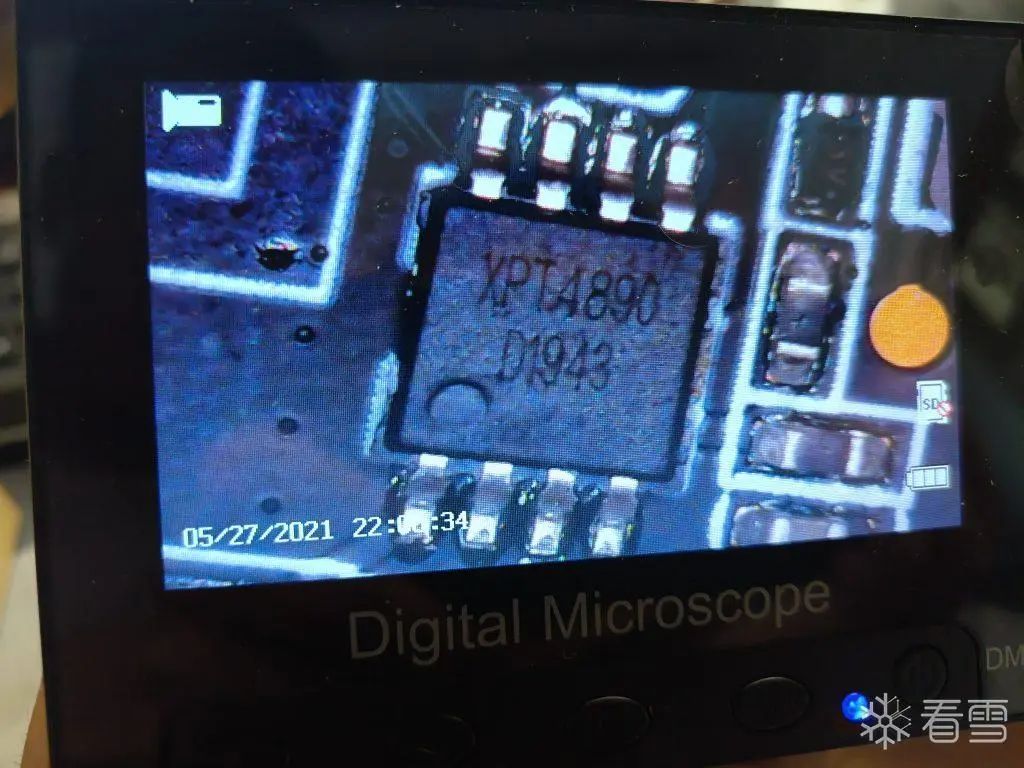

The audio amplifier is the XPT4890:

Audio is received through the on-board microphone, which converts the acoustic signal into an electrical signal that the main control chip then processes.

3

Audio Analysis

First, we need to collect a segment of audio during the configuration process for analysis.

Using a male-to-male AUX cable, the audio from the phone's headphone jack can be fed directly into the computer and recorded with either the built-in Windows recorder or OBS.

Audio Processing

Background research suggests that the acoustic configuration depends mainly on the frequency of the sound, so this step focuses on extracting the frequency characteristics of the signal with a script.

The analysis uses the librosa library. In this setup librosa could not load the m4a recording directly (its default soundfile backend does not decode m4a), so the recording is first converted from m4a to wav.
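To make "frequency characteristics" concrete, the sketch below synthesizes a short pure tone and recovers its frequency with a naive DFT. It is not part of the original analysis: the 1500 Hz tone, the 8000 Hz sample rate, and the function names are illustrative assumptions chosen so the tone falls exactly on a DFT bin.

```python
import cmath
import math

def synth_tone(freq_hz, sample_rate, n_samples):
    """Return n_samples of a unit-amplitude sine wave at freq_hz."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest DFT bin below Nyquist."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        # Naive DFT of bin k: correlate the signal with a complex sinusoid.
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                  for i, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n

# Hypothetical test signal: 256 samples of a 1500 Hz tone at 8000 Hz.
tone = synth_tone(1500, 8000, 256)
print(dominant_frequency(tone, 8000))
```

A real recording contains many tones over time, which is why the analysis in this section uses a short-time Fourier transform rather than a single DFT over the whole file.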

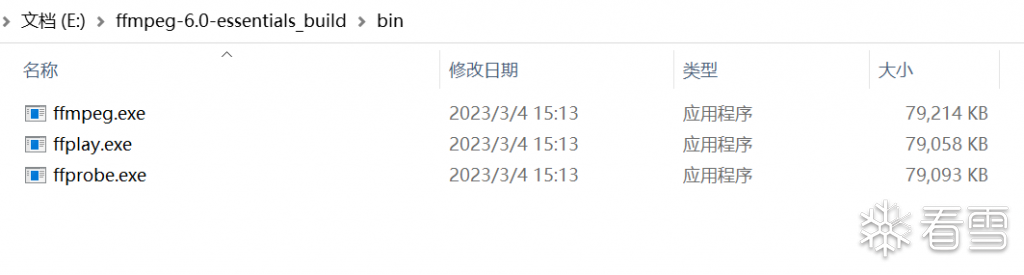

m4a to wav

The conversion uses the pydub library, which requires FFmpeg to be installed:

Tutorial for installing FFmpeg on Windows 10 (CSDN Blog): https://blog.csdn.net/qq_43803367/article/details/110308401

Download link: https://www.gyan.dev/ffmpeg/builds/ffmpeg-release-essentials.zip

Add the bin directory of the extracted FFmpeg build to the PATH environment variable so that pydub can find it.
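A quick way to confirm that the PATH change took effect is to look up the executable from Python before running the conversion. This is only a sanity-check sketch; the helper name `find_tool` is hypothetical and not part of pydub.

```python
import shutil

def find_tool(name):
    """Return the full path of an executable found on PATH, or None."""
    return shutil.which(name)

ffmpeg_path = find_tool("ffmpeg")
if ffmpeg_path is None:
    print("ffmpeg not found on PATH; pydub will not be able to decode m4a")
else:
    print(f"ffmpeg found at {ffmpeg_path}")
```

On Windows, a terminal or IDE that was already open before PATH was edited keeps the old value, so the check should be run from a newly started session.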

```python
import librosa
import matplotlib.pyplot as plt
import numpy as np
from pydub import AudioSegment

input_file = './acoustic_configuration_info.m4a'
output_file = './acoustic_configuration_info.wav'

# Convert the M4A recording to WAV (pydub calls FFmpeg to decode it)
audio = AudioSegment.from_file(input_file, format='m4a')
audio.export(output_file, format='wav')
```

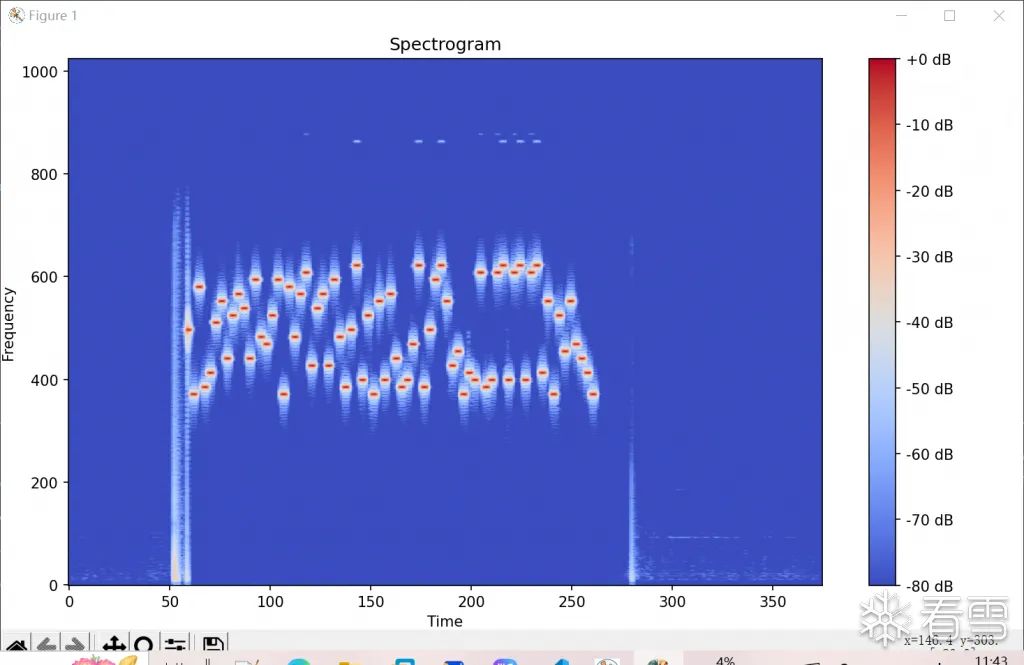

Spectrum Generation

```python
audio_path = './acoustic_configuration_info.wav'

# librosa.load resamples to 22050 Hz mono unless sr is given
audio_data, sample_rate = librosa.load(audio_path)

# Short-time Fourier transform: rows are frequency bins, columns are time frames
spectrogram = librosa.stft(audio_data)

plt.figure(figsize=(12, 8))
plt.title('Spectrogram')
plt.xlabel('Time (frame index)')
plt.ylabel('Frequency (FFT bin index)')
plt.imshow(librosa.amplitude_to_db(np.abs(spectrogram), ref=np.max),
           origin='lower', aspect='auto', cmap='coolwarm')
plt.colorbar(format='%+2.0f dB')
plt.tight_layout()
plt.show()
```

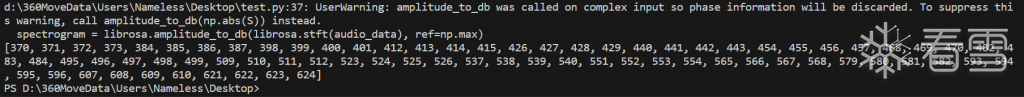
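Because the plot is drawn with `imshow`, its axes are STFT frame and bin indices rather than seconds and hertz. The sketch below shows the conversion, assuming the librosa defaults that the script relies on implicitly (22050 Hz sample rate, `n_fft=2048`, `hop_length=512`); the helper names are illustrative.

```python
SAMPLE_RATE = 22050   # librosa.load default when sr is not given
N_FFT = 2048          # librosa.stft default window size
HOP_LENGTH = 512      # librosa.stft default hop (n_fft // 4)

def bin_to_hz(bin_index, sample_rate=SAMPLE_RATE, n_fft=N_FFT):
    """Center frequency in Hz of an STFT bin."""
    return bin_index * sample_rate / n_fft

def frame_to_seconds(frame_index, sample_rate=SAMPLE_RATE,
                     hop_length=HOP_LENGTH):
    """Start time in seconds of an STFT frame."""
    return frame_index * hop_length / sample_rate

print(bin_to_hz(1))            # spacing between adjacent bins
print(bin_to_hz(N_FFT // 2))   # highest bin (Nyquist frequency)
print(frame_to_seconds(100))   # time offset of frame 100
```

librosa provides the same conversions as `librosa.fft_frequencies` and `librosa.frames_to_time`. With these defaults the bins are roughly 10.8 Hz apart, which bounds how closely spaced two tones can be and still be told apart.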

Extract Frequency Array

```python
# Compute the STFT magnitude and convert it to decibels relative to the peak
spectrogram = librosa.amplitude_to_db(np.abs(librosa.stft(audio_data)), ref=np.max)

# Threshold: keep only bins louder than -10 dB relative to the peak
threshold = -10

# Frequency bin indices that exceed the threshold in any frame
frequencies = []

# Iterate through each time frame (column)
for i in range(spectrogram.shape[1]):
    frame = spectrogram[:, i]
    freq_indices = np.where(frame > threshold)[0]
    frequencies.extend(freq_indices)

# Remove duplicates and sort ascending; values are STFT bin indices, not Hz
frequencies = sorted(set(frequencies))
print(frequencies)
```

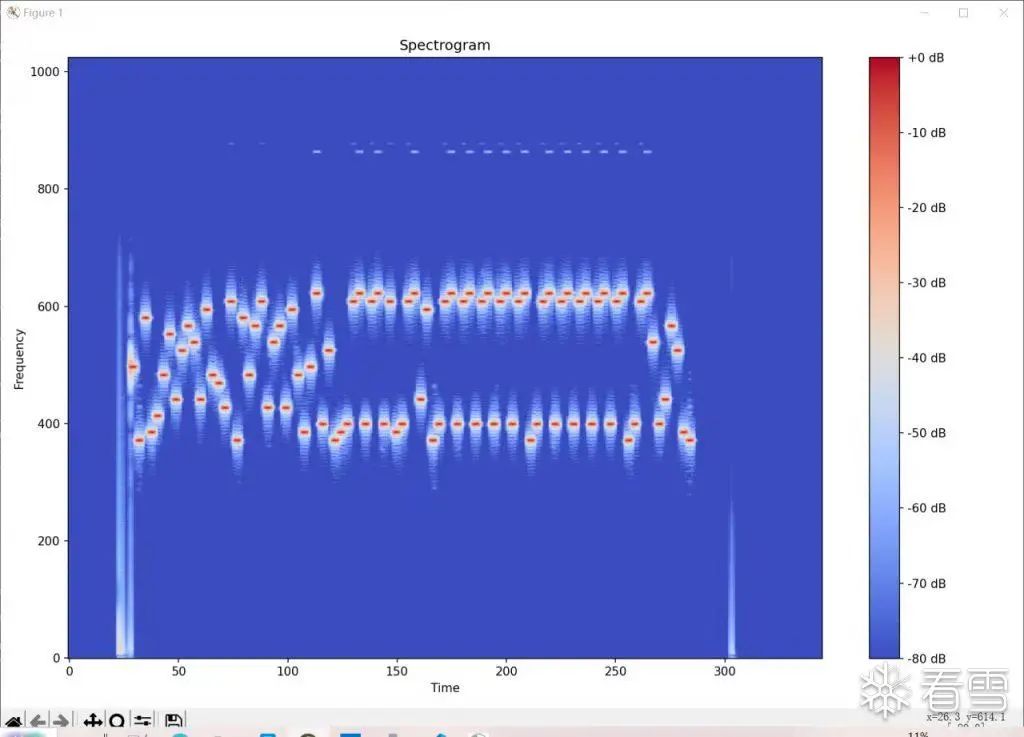
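The array above is a set, so it shows which frequency bins are used but not the order in which they occur. A possible next step, sketched below under the assumption that each frame carries one dominant tone, is to keep the strongest bin per frame and collapse consecutive repeats. The function name and the toy data are made up for illustration and are not taken from the device.

```python
def peak_bin_sequence(spectrogram_db, threshold_db=-10.0):
    """Return the strongest bin per frame, in time order.

    spectrogram_db is indexed [bin][frame], like the librosa output.
    Frames whose peak is at or below threshold_db are skipped, and
    consecutive identical bins are collapsed into one entry.
    """
    n_bins = len(spectrogram_db)
    n_frames = len(spectrogram_db[0]) if n_bins else 0
    sequence = []
    for t in range(n_frames):
        column = [spectrogram_db[b][t] for b in range(n_bins)]
        peak = max(range(n_bins), key=lambda b: column[b])
        if column[peak] <= threshold_db:
            continue  # frame is silent or too weak to trust
        if not sequence or sequence[-1] != peak:
            sequence.append(peak)
    return sequence

# Toy data (made up): 4 bins x 6 frames, values in dB relative to peak.
toy = [
    [-80, -80, -80, -80, -80, -80],
    [-3, -2, -60, -60, -60, -4],
    [-60, -60, -60, -1, 0, -60],
    [-70, -70, -70, -70, -70, -70],
]
print(peak_bin_sequence(toy))
```

Collapsing repeats also merges genuinely repeated symbols, so recovering data that contains runs of identical characters would additionally require knowing the duration of one symbol.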

Testing Special WiFi and Password

Next, the recording is repeated with a WiFi name of eight '1' characters and a password of thirty-two '1' characters.

Part of the resulting frequency distribution overlaps with the previous image, and the distribution is noticeably more uniform, which suggests that the transmitted data is encoded in frequency.

However, it is still unclear what is being transmitted; a more detailed reverse analysis of the communication protocol is needed.
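The overlap between two captures can be quantified rather than judged by eye. The sketch below compares two lists of frequency bins using set operations and a Jaccard index; the bin values are made-up stand-ins for the two printed arrays, and `overlap_report` is a hypothetical helper.

```python
def overlap_report(bins_a, bins_b):
    """Summarize which frequency bins two captures share."""
    a, b = set(bins_a), set(bins_b)
    shared = a & b
    union = a | b
    return {
        "shared": sorted(shared),
        "only_a": sorted(a - b),
        "only_b": sorted(b - a),
        # Jaccard index: 1.0 means identical sets, 0.0 means disjoint
        "jaccard": len(shared) / len(union) if union else 0.0,
    }

# Made-up bin lists standing in for the two captures.
first_capture = [140, 141, 150, 163, 170]
repeated_ones = [140, 141, 150]
report = overlap_report(first_capture, repeated_ones)
print(report)
```

Bins that appear in every capture regardless of the credentials are candidates for framing or synchronization tones, while bins that change with the input are more likely to carry data. This is a working hypothesis to test, not a conclusion from the recordings.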

4

Communication Detail Analysis

Communication Overview

The entire communication can be summarized as follows:

The companion app transmits the WiFi SSID and password to the device as an acoustic signal. After receiving them, the device connects to that WiFi network, contacts the operator's cloud server, and reports back to the user whether the connection succeeded or failed.

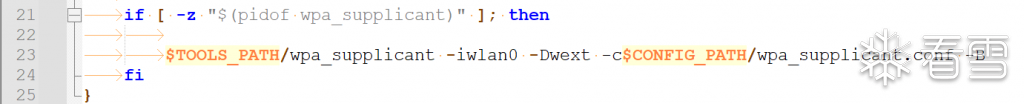

Receiver

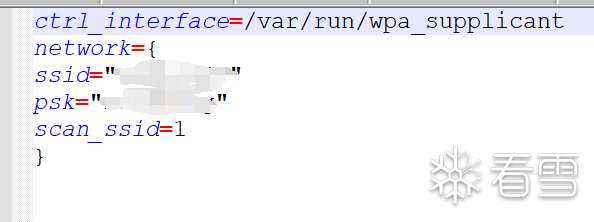

The firmware contains a script named connect_wifi.sh, whose main role is to connect to WiFi using the contents of wpa_supplicant.conf.

The format of wpa_supplicant.conf is as follows: