Edge computing may sound obscure, but you are certainly familiar with octopuses.

An octopus is an invertebrate packed with neurons, but only 40% of them are in its brain; the remaining 60% are in its eight arms. In effect, the octopus has “multiple small brains + one large brain,” and this distributed structure makes it very agile when hunting, because each arm can receive signals and react to them locally.

Image | Octopus (Source: IC photo)

The structure of edge computing is quite similar to that of an octopus; it is a form of distributed computing that allows for local processing of information without the need to upload large amounts of data to a remote management platform.

When discussing edge computing, one cannot overlook sensors. Currently, the number of nodes in sensor networks is growing rapidly, and there is a significant exchange of redundant data between sensor terminals and computing units. How to process large amounts of data while also reducing power consumption is a pressing challenge for edge computing.

In response to this, Dr. Chai Yang, an associate professor in the Department of Applied Physics at the Hong Kong Polytechnic University, recently published a paper titled “Near-Sensor and In-Sensor Computing” in Nature Electronics, creatively proposing methods for near-sensor computing and in-sensor computing.

Image | “Near-Sensor and In-Sensor Computing” (Source: Nature)

Dr. Chai told DeepTech that the methods of near-sensor computing and in-sensor computing can reduce the redundant data movement between sensor terminals and computing units. By partially transferring computing tasks to the sensor terminals, energy consumption and time delays can be reduced, while also saving communication bandwidth and enhancing data security and privacy.

Different Architectures, Different Levels

When discussing why data should be processed at the sensor end in certain situations, Dr. Chai explained that the data collected by IoT sensors is mostly unstructured, so it must be processed first. A complete sensing system requires both sensors and computing units. However, the manufacturing processes for the two are quite different. For example, image sensors are manufactured using a 65-nanometer node, which is already a very advanced process for sensors, whereas for computing, the most advanced semiconductor processes have reached 5-nanometer nodes.

Moreover, sensors and computing units are typically manufactured using different processes and then assembled into a complete system, resulting in a significant physical distance between them. More often than not, sensors collect data and upload it to the cloud for processing. So, in which situations is it better to process data at the sensor end rather than in the cloud?

Dr. Chai stated that this comes down to two hard requirements. The first is power consumption: sensors are generally powered by batteries, which are limited in capacity, so they cannot perform overly complex computations, and such computations usually need to be uploaded to the cloud for further processing. The second is time, meaning the need for real-time processing.

For instance, autonomous driving is very sensitive to latency; if data is sent to the cloud for processing and then sent back, it poses significant challenges for safe driving. Therefore, for relatively simple and time-sensitive data processing, it is better to perform it at the sensor end than in the cloud. Generally speaking, sensors and computing units are made of different materials, so their functions, structures, designs, and processing systems differ.
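The latency argument can be made concrete with a small calculation. The sketch below is only illustrative: the vehicle speed and both latency figures are hypothetical values chosen for the example, not measurements from the paper or from Dr. Chai.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance a vehicle covers while it waits for a processing result."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * (latency_ms / 1000.0)  # ms -> s


# Hypothetical figures, for illustration only.
SPEED_KMH = 108.0            # equals 30 m/s
CLOUD_ROUND_TRIP_MS = 100.0  # assumed upload + cloud processing + download
NEAR_SENSOR_MS = 5.0         # assumed processing next to the sensor

cloud_m = distance_travelled_m(SPEED_KMH, CLOUD_ROUND_TRIP_MS)
local_m = distance_travelled_m(SPEED_KMH, NEAR_SENSOR_MS)
print(f"cloud: {cloud_m:.2f} m, near-sensor: {local_m:.2f} m")
```

Under these assumed numbers the vehicle travels about 3 m before a cloud result arrives, versus about 0.15 m when the result is computed next to the sensor.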

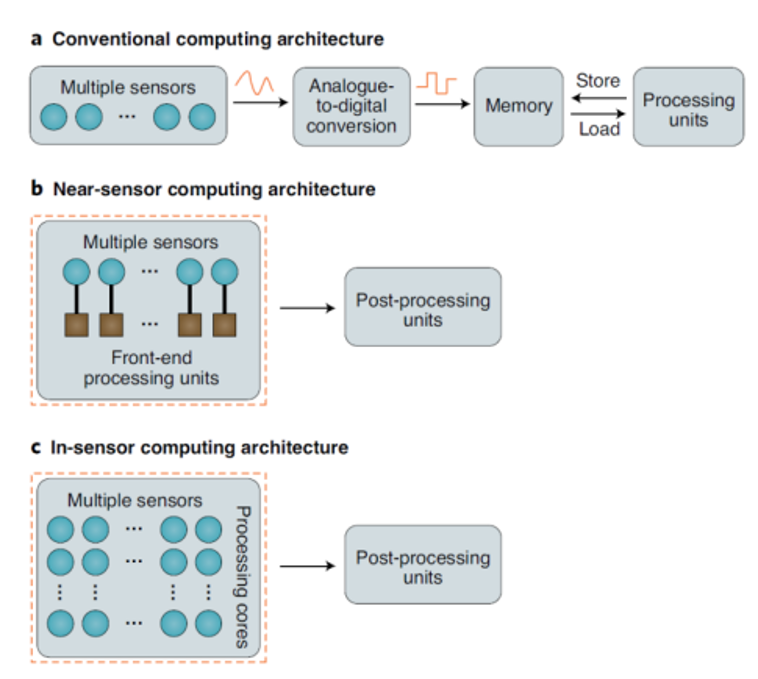

In traditional sensing computing architectures, sensors and computing units are physically separated, with a considerable physical distance between them. In contrast, in near-sensor computing and in-sensor computing architectures, the distance between sensors and computing units is typically significantly reduced or eliminated. For example, in a near-sensor computing architecture, the front-end processing unit is placed next to the sensor, which means that the processing unit can enhance the overall performance of the system and minimize redundant data transmission; in an in-sensor computing architecture, a single sensor or multiple connected sensors can directly process the collected information, allowing for the integration of sensing and computing functions into a single device.

Image | Different Computing Architectures

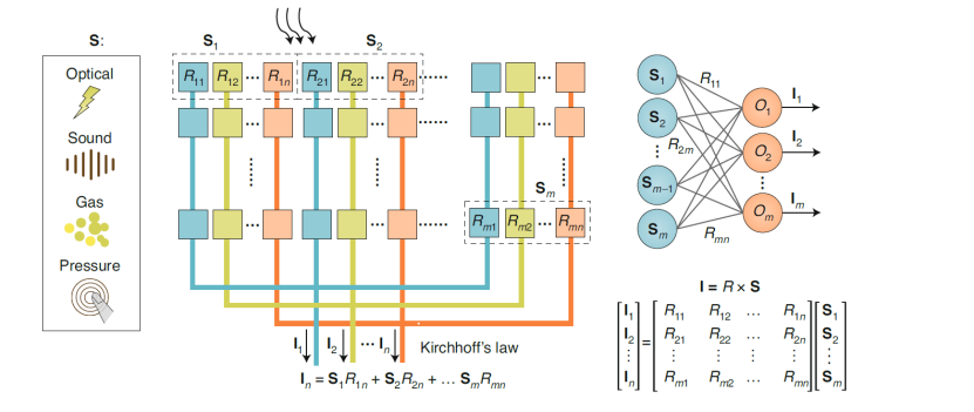

Image | Schematic diagram of in-sensor computing architecture with reconfigurable sensors for multiplication and accumulation operations in neural networks

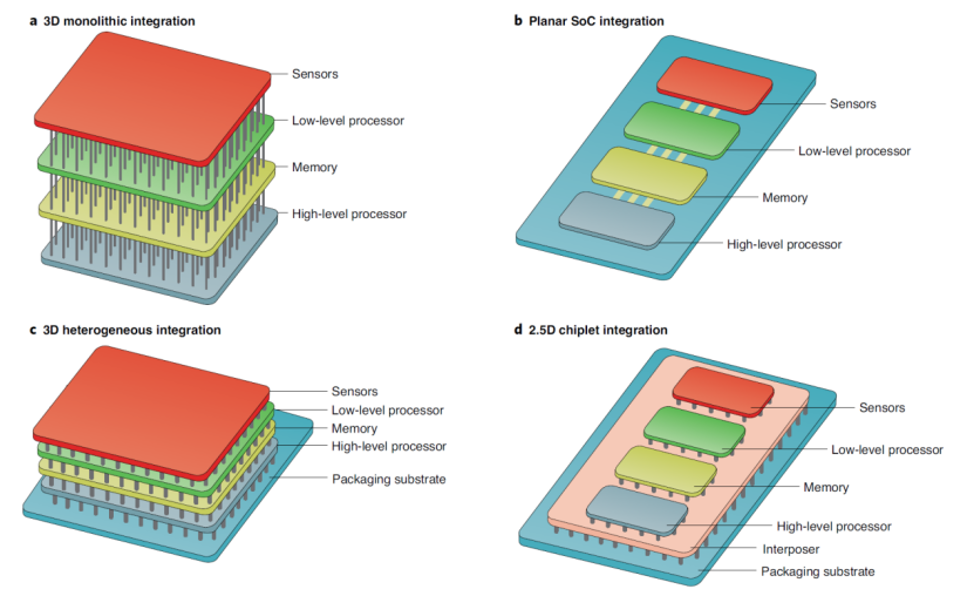
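The multiply-and-accumulate operation named in the schematic caption can be sketched in a few lines. This is a minimal model, not the team's implementation: it assumes each sensor's output current is its responsivity times the incident optical power, that the responsivity is a tunable and possibly negative weight, and that the currents of sensors sharing an output line simply add. The function name and all values are hypothetical.

```python
def in_sensor_mac(responsivity, optical_power):
    """Model in-sensor multiply-and-accumulate.

    responsivity: one row per output line, one weight per pixel
                  (assumed tunable; the sign is an assumption).
    optical_power: light intensity reaching each pixel.
    Returns the summed current on each output line.
    """
    outputs = []
    for row in responsivity:
        if len(row) != len(optical_power):
            raise ValueError("each weight row must have one entry per pixel")
        # Multiply in each sensor, accumulate on the shared output line.
        outputs.append(sum(r * p for r, p in zip(row, optical_power)))
    return outputs


# Hypothetical weights and light levels, for illustration only.
weights = [
    [0.5, -0.5, 0.0],  # output line 0: difference between pixels 0 and 1
    [1.0, 1.0, 1.0],   # output line 1: total brightness
]
pixels = [2.0, 4.0, 6.0]
currents = in_sensor_mac(weights, pixels)
print(currents)
```

In this model the weighted sum that a neural network layer would normally compute in a separate processor is already present in the sensor's output, so only the per-line results, not the raw pixel values, need to leave the sensor.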

Dr. Chai stated: “A major challenge facing near-sensor computing is the integration of sensing units and computing units. For example, computing units have adopted very advanced technology nodes, while most sensors can perform their functions well based on larger node technologies. Integration technologies for near-sensor computing include heterogeneous integration, 3D monolithic integration, system-on-chip integration, and 2.5D Chiplet technology, among which 3D monolithic integration provides a high-density, short-distance system integration method, but its complex fabrication processes and heat dissipation still face significant challenges.”

Image | Integration technologies for near-sensor and in-sensor computing

Although in-sensor computing architectures have been proven to be a method for combining computing and sensing capabilities, they are typically only applicable to specific scenarios. Furthermore, they can only be realized through new materials and device structures that are still in the early stages of development. “Near-sensor computing and in-sensor computing are interdisciplinary research fields that encompass materials, devices, circuits, architectures, algorithms, and integration technologies,” Dr. Chai said. “These architectures are complex because they need to process a large variety of different types of signals in different scenarios. The successful deployment of near-sensor computing and in-sensor computing requires the joint development and optimization of sensors, devices, integration technologies, and algorithms.”

In this research, the team provided clear definitions for near-sensor computing and in-sensor computing, categorizing information processing into low-level processing and high-level processing. Low-level processing involves selectively extracting useful data from large amounts of raw data by suppressing unnecessary noise or distortion or by enhancing features for further processing; high-level processing involves abstract representation, which relates to cognitive processes that can identify what the input signal is or where it is. Finally, in addition to providing reliable definitions for near-sensor computing and in-sensor computing, the researchers also proposed possible solutions for integrating sensing and processing units. In the future, their work could inspire further research aimed at leveraging advanced manufacturing technologies to realize these architectures or hardware components.
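The distinction between the two processing levels can be illustrated with a toy one-dimensional signal. This is a sketch under stated assumptions, not the paper's method: a sliding median stands in for low-level noise suppression, and a simple threshold rule stands in for high-level recognition of what the input is and where it is. The function names, the signal, and the threshold are all hypothetical.

```python
from statistics import median


def denoise(signal, window=3):
    """Low-level step: a sliding median suppresses isolated noise spikes."""
    half = window // 2
    cleaned = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        cleaned.append(median(signal[lo:hi]))
    return cleaned


def classify(signal, threshold):
    """High-level step: report what the input is and where it is.

    Returns a label and the first index of the strongest response.
    """
    peak_index = max(range(len(signal)), key=lambda i: signal[i])
    label = "object" if signal[peak_index] >= threshold else "background"
    return label, peak_index


# Hypothetical raw readings: a noise spike at index 2, a real feature at 5-7.
raw = [0, 0, 9, 0, 0, 5, 6, 5, 0, 0]
clean = denoise(raw)
label, where = classify(clean, threshold=4)
print(clean, label, where)
```

Without the low-level step, the spike at index 2 would be the strongest response and the high-level step would locate the object in the wrong place, which is why the two levels are treated as a pipeline.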

Practical Applications Are Still Some Way Off

Near-sensor and in-sensor computing are a potential pathway to efficient hardware for intelligent sensing and processing. Processing data directly at the sensor end can improve area, time, and energy efficiency, which is particularly beneficial in real-time and data-intensive applications.

However, implementing low-level and high-level processing functions near the sensor requires the development of advanced integration technologies and new computing algorithms; implementing computing within the sensor also requires the development of devices with new functionalities and mechanisms, as well as suitable algorithms.

Although in-sensor computing shows potential, most devices are still in the early stages of research and development, and their limited functionality confines them to specific application scenarios. Furthermore, there have so far been few demonstrations of complete processing and large-scale integration with peripheral control, which is crucial for the future of in-sensor processing architectures. At the same time, Dr. Chai told DeepTech that autonomous driving should be a good entry point; once the current “bottleneck” is broken, more new applications may emerge.

In Shanghai, a company called Chipron Technology has developed a Dynamic Vision Sensor (DVS) that is used in vehicles to capture images and perform some real-time analysis. The company is doing well in this field: its near-sensor computing approach is already very close to practical use and is among the leaders in the domestic market. Although Dr. Chai’s team currently focuses primarily on visual sensors, the methods of near-sensor and in-sensor computing can also be extended to other types of sensors, such as auditory, tactile, gustatory, chemical, and even biological signal sensors.
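To see why a dynamic vision sensor reduces data movement, consider the general event-based principle: a pixel reports only when its brightness changes by more than a threshold. The sketch below is a frame-differencing approximation of that idea, not a description of Chipron Technology's product; real DVS pixels respond asynchronously rather than by comparing frames, and the function name, threshold, and frames are hypothetical.

```python
import math


def dvs_events(prev_frame, next_frame, threshold=0.2):
    """Return (row, col, polarity) for pixels whose log-intensity changed.

    Intensities must be positive. Polarity is +1 for brighter, -1 for darker.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            delta = math.log(n) - math.log(p)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


# Hypothetical 2x3 frames: only two of the six pixels change.
prev = [[10, 10, 10],
        [10, 10, 10]]
nxt = [[10, 20, 10],
       [10, 10, 5]]
events = dvs_events(prev, nxt)
print(events)
```

In this example only two events are produced for six pixels, so a static scene generates no output at all, which is the kind of redundancy reduction the article attributes to processing at or near the sensor.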

Perseverance and Original Intention

Dr. Chai obtained his PhD in Electronic Engineering from the Hong Kong University of Science and Technology, then conducted postdoctoral research at Stanford University, and later continued his research on electronic devices at the Hong Kong Polytechnic University.

When discussing how research turns into results, Dr. Chai also mentioned that some professors at Hong Kong universities have been very successful in this respect: companies such as DJI Innovations, SenseTime, and Jingke Electronics were all incubated at Hong Kong universities. Dr. Chai also expressed his expectations for the future development of the Guangdong-Hong Kong-Macao Greater Bay Area, stating, “The country has proposed some new policies for the Greater Bay Area, and the Hong Kong government has also launched a series of supportive policies for scientific and technological innovation. The entire innovation ecosystem will definitely improve, although this process may be relatively long. I hope that the final research results can solve some existing scientific and engineering problems and produce some practical and usable outcomes.”

References:

https://www.nature.com/articles/s41928-020-00501-9#citeas

https://www.nature.com/articles/d41586-020-00592-6

https://www.nature.com/articles/s41565-019-0501-3#data-availability

https://www.mdpi.com/1424-8220/20/5/1400/htm

https://techxplore.com/news/2020-12-exploring-potential-near-sensor-in-sensor.html