Introduction: The Collision of C++ and Neural Networks

In today’s programming world, C++ is a low-key yet powerful “behind-the-scenes hero” that firmly holds its ground in high-performance computing. From strikingly realistic graphics rendering in game development to the precise handling of complex data in scientific computing, C++ builds efficient systems through its fine-grained control over hardware resources.

Neural network models, for their part, have long been “capable assistants” for solving complex problems across many cutting-edge fields. Whether it’s accurately identifying diseases in medical images or intelligently predicting risk in financial markets, neural networks demonstrate extraordinary capabilities. However, as the saying goes, “the higher you climb, the colder it gets”: as models grow more complex and data volumes grow rapidly, training efficiency becomes an increasingly pressing problem. A complex neural network model may take hours or even days to train, a significant delay for developers eager to get their models “up and running”.

This is where parallel computing and multithreading arrive like a “timely rain”! They decompose computing tasks so that multiple processor cores or threads can work together, significantly shortening training time and giving neural network development a powerful boost. Today, let’s explore how C++ neural network models use parallel computing and multithreading to “overtake on the curve” and open a new chapter in efficient training.

1. Initial Understanding of Parallel Computing and Multithreading

Let’s start by understanding the principle of parallel computing through a real-life scenario. Imagine you need to move a large pile of books to a new bookshelf. If you do it alone, moving them one by one takes a long time, much like traditional single-threaded computing, where tasks are executed sequentially. But if you call a few friends to help, with each person moving part of the pile at the same time, the job gets done much faster. This is the basic idea of parallel computing: decompose a large computing task into multiple smaller tasks so that multiple processor cores or threads can process them simultaneously, greatly reducing overall processing time.
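The book-moving idea can be sketched in a few lines of C++. This is a minimal sketch that assumes C++11’s standard `std::thread` rather than the pthread library discussed later; the helper name `parallelSum` is hypothetical. The vector is split into chunks, each thread sums only its own chunk into its own result slot, and the partial sums are combined at the end.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical helper: sums `data` by splitting it into `numThreads` chunks,
// one per thread, like friends each carrying part of the pile of books.
long long parallelSum(const std::vector<int>& data, unsigned numThreads) {
    assert(numThreads > 0);
    std::vector<long long> partial(numThreads, 0);  // one result slot per thread
    std::vector<std::thread> workers;
    const std::size_t chunk = (data.size() + numThreads - 1) / numThreads;

    for (unsigned t = 0; t < numThreads; ++t) {
        const std::size_t first = std::min(data.size(), t * chunk);
        const std::size_t last = std::min(data.size(), first + chunk);
        // Each thread writes only to partial[t], so no lock is needed.
        workers.emplace_back([&data, &partial, t, first, last] {
            partial[t] = std::accumulate(data.begin() + first,
                                         data.begin() + last, 0LL);
        });
    }
    for (auto& w : workers) w.join();  // wait for every "helper" to finish

    // Combine the partial results on the calling thread.
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

For example, `parallelSum(v, 4)` on a vector holding 1 through 1000 returns 500500, the same as a sequential sum. Giving each thread a private slot avoids any shared counter, so the threads never have to wait for one another until the final join.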

Multithreading is an important means of achieving parallel computing. In C++, threads act like diligent “little helpers”: each thread executes its own sequence of code independently, while sharing the program’s memory and resources with the other threads. For instance, in a graphics rendering program, one thread might handle the geometric calculations, another the color filling, and yet another the lighting effects, each performing its own role while cooperating to present the image quickly and beautifully.

Compared to traditional single-threaded programming, the advantages of multithreading are obvious. Single-threading is like a narrow one-lane road where all vehicles (tasks) must queue up sequentially. If the vehicle (task) in front slows down, the ones behind can only wait, easily causing congestion (performance bottlenecks). Multithreading, however, resembles a multi-lane highway, where vehicles (tasks) can move forward in parallel. As long as the lanes (thread task allocation) are planned reasonably, the overall traffic efficiency (program running efficiency) can be greatly improved.

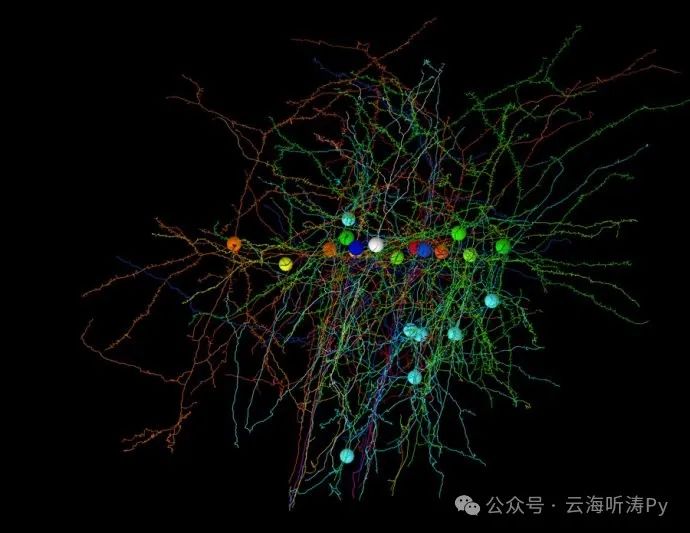

For neural network models, parallel computing and multithreading are a “super strong duo”. During training, large amounts of data repeatedly pass through heavy computations: forward propagation computes the output of each neuron, and backpropagation adjusts the weights based on the errors. Each of these steps involves a massive number of arithmetic operations. With only a single thread, training crawls along at a snail’s pace. Parallel computing and multithreading distribute these computations across multiple processor cores or threads so that they run simultaneously, like fitting the neural network model with a powerful “engine” that dramatically increases training speed.
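To make the forward-propagation case concrete, here is a hedged sketch of one fully connected layer whose output neurons are divided among threads. It assumes `std::thread`, a row-major weight matrix and a ReLU activation; `DenseLayer` and `forwardParallel` are hypothetical names, not part of any library. Each neuron’s output depends only on the input vector and its own row of weights, which is what makes the split safe.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical dense layer: weights stored row-major, one row per output neuron.
struct DenseLayer {
    std::size_t inputs;
    std::size_t outputs;
    std::vector<double> weights;  // outputs * inputs values
    std::vector<double> bias;     // one value per output neuron
};

// Forward pass with ReLU activation. Output neurons are split into contiguous
// blocks, one block per thread, so no two threads write the same element of y.
std::vector<double> forwardParallel(const DenseLayer& layer,
                                    const std::vector<double>& x,
                                    unsigned numThreads) {
    assert(numThreads > 0 && x.size() == layer.inputs);
    std::vector<double> y(layer.outputs);
    const std::size_t block = (layer.outputs + numThreads - 1) / numThreads;
    std::vector<std::thread> workers;

    for (unsigned t = 0; t < numThreads; ++t) {
        const std::size_t first = std::min(layer.outputs, t * block);
        const std::size_t last = std::min(layer.outputs, first + block);
        workers.emplace_back([&layer, &x, &y, first, last] {
            for (std::size_t i = first; i < last; ++i) {
                double sum = layer.bias[i];  // weighted sum for neuron i
                for (std::size_t j = 0; j < layer.inputs; ++j)
                    sum += layer.weights[i * layer.inputs + j] * x[j];
                y[i] = std::max(0.0, sum);   // ReLU
            }
        });
    }
    for (auto& w : workers) w.join();  // all neurons computed after this point
    return y;
}
```

With weights `{1, 2, -1, -1, 0.5, 0.5}`, biases `{0, 0, 1}` and input `{3, 4}`, the layer produces `{11, 0, 4.5}` whether it runs on one thread or several. Real libraries usually hand this matrix-vector work to optimized BLAS routines, but the partitioning idea is the same.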

2. The Path to Implementing Parallel Computing in C++

(1) The Wonderful World of Multithreading Libraries

Among C++’s many tool libraries, multithreading libraries are the “main force” for achieving parallel computing. Take the classic POSIX threads (pthread) library, available on Linux, macOS, and other Unix-like systems: it is like a fully equipped “thread toolbox”, providing a set of practical functions for managing threads. (Since C++11, the standard library also offers std::thread as a portable alternative.)

Creating a thread is like recruiting a little helper, and the pthread_create function makes it easy. This function acts like a “job advertisement” in which you provide a pointer to the new thread’s identifier (like the helper’s employee number), the thread attributes (such as stack size or scheduling policy; passing nullptr selects the defaults, which is usually fine), the function the thread will run (what the helper needs to do), and a single void* argument to pass to that function. pthread_create returns 0 on success and an error number otherwise. For example, the following code (built with the -pthread flag on GCC or Clang):

```cpp
#include <iostream>
#include <pthread.h>

// Function executed by the new thread; it must take and return void*.
void* threadFunction(void* arg) {
    (void)arg;  // unused in this example
    std::cout << "Hello from thread!" << std::endl;
    return nullptr;
}

int main() {
    pthread_t threadId;
    // Arguments: thread ID out-parameter, attributes (nullptr = defaults),
    // function to run, and the argument passed to that function.
    int result = pthread_create(&threadId, nullptr, threadFunction, nullptr);
    if (result == 0) {
        std::cout << "Thread created successfully!" << std::endl;
    } else {
        std::cerr << "Error creating thread!" << std::endl;
        return 1;
    }

    // Wait for the thread to finish; returning from main first would end the
    // whole process, possibly before the thread prints anything.
    pthread_join(threadId, nullptr);
    return 0;
}
```

A thread starts running as soon as pthread_create succeeds; no separate activation step is needed. The pthread_join function acts like a “supervisor”: it blocks the calling thread until the specified thread finishes, and it can also retrieve the value the thread function returned (or passed to pthread_exit), so you can see how well the little helper performed. If you don’t need that result and prefer the thread to run quietly in the background, call pthread_detach instead, letting the thread “fly solo”: its resources are released automatically when it terminates, but it can no longer be joined.
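A minimal sketch of how pthread_join can hand back a thread’s result, assuming a POSIX system and the -pthread build flag. The names `squareTask` and `squareInThread` are hypothetical, and returning a heap-allocated `int` is just one simple convention for passing a result through the `void*` return value.

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical task: reads an int through its argument pointer and returns a
// heap-allocated result through its void* return value.
void* squareTask(void* arg) {
    const int n = *static_cast<int*>(arg);
    return new int(n * n);  // outlives the thread; the joiner must delete it
}

// Starts one thread, then uses pthread_join to block until it finishes and to
// collect the value squareTask returned. Returns -1 if a pthread call fails.
int squareInThread(int n) {
    pthread_t tid;
    // Passing &n is safe because n stays alive until after the join below.
    if (pthread_create(&tid, nullptr, squareTask, &n) != 0) return -1;

    void* ret = nullptr;
    if (pthread_join(tid, &ret) != 0) return -1;  // ret receives the return value

    int* result = static_cast<int*>(ret);
    assert(result != nullptr);
    const int value = *result;
    delete result;  // free the memory allocated by the thread
    return value;
}
```

Here `squareInThread(7)` returns 49. When a thread’s result is not needed, calling `pthread_detach(tid)` instead of `pthread_join` lets it clean up after itself, at the cost of never being able to join it.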

However, when multiple threads work together, they can easily end up competing for the same toys (shared resources), which raises the problems of thread synchronization and mutual exclusion. For example, if several threads modify the same global variable without coordination, updates can be lost: an operation like count++ reads, modifies, and writes the value in separate steps, so two threads can read the same old value and one increment overwrites the other, much like children fighting over a toy and breaking it. This is where a mutex acts like a “protective lock”: whichever thread acquires the lock has exclusive use of the shared resource, while the other threads must wait until it releases the lock before they can compete for access.

Let’s look at an example where there is a global variable count that multiple threads need to increment:

```cpp
#include <iostream>
#include <pthread.h>

// A pthread mutex, matching the pthread threads used below.
pthread_mutex_t mutexCount = PTHREAD_MUTEX_INITIALIZER;

int count = 0;  // shared by all threads

void* incrementCount(void* arg) {
    (void)arg;  // unused
    for (int i = 0; i < 10000; ++i) {
        pthread_mutex_lock(&mutexCount);    // lock to protect the shared variable
        count++;
        pthread_mutex_unlock(&mutexCount);  // unlock to release it
    }
    return nullptr;
}

int main() {
    const int numThreads = 5;
    pthread_t threads[numThreads];

    for (int i = 0; i < numThreads; ++i) {
        pthread_create(&threads[i], nullptr, incrementCount, nullptr);
    }
    // Wait for every thread before reading the result.
    for (int i = 0; i < numThreads; ++i) {
        pthread_join(threads[i], nullptr);
    }

    std::cout << "Final count: " << count << std::endl;  // prints 50000
    return 0;
}
```

In this code, each thread acquires the mutex before incrementing count and releases it afterward, so no increment is lost and the program prints 50000 (5 threads × 10,000 increments). Without the lock, the result could come out lower and differ from run to run. Besides mutexes, there are other synchronization tools such as condition variables and semaphores, which act like traffic signals and crosswalks, coordinating the “traffic order” between threads so that multithreaded programs run stably and efficiently.
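Condition variables are easiest to see in a small handoff. The sketch below assumes POSIX threads and uses hypothetical names (`Mailbox`, `mailboxWorker`, `doubleViaWorker`): a worker thread waits at a “red light” until the main thread publishes an input value and signals it, then computes a result.

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical shared state: the worker may proceed only once `ready` is set.
struct Mailbox {
    pthread_mutex_t mutex;
    pthread_cond_t cond;
    bool ready = false;
    int value = 0;
    int result = 0;
};

void* mailboxWorker(void* arg) {
    Mailbox* box = static_cast<Mailbox*>(arg);
    assert(box != nullptr);
    pthread_mutex_lock(&box->mutex);
    // Check the flag in a loop: this handles spurious wakeups, and also the case
    // where the signal was sent before this thread started waiting.
    while (!box->ready) {
        pthread_cond_wait(&box->cond, &box->mutex);  // releases the mutex while asleep
    }
    box->result = box->value * 2;
    pthread_mutex_unlock(&box->mutex);
    return nullptr;
}

// Starts the worker, publishes `input` under the mutex, signals the condition
// variable, and returns the worker's result (or -1 if the thread can't start).
int doubleViaWorker(int input) {
    Mailbox box;
    pthread_mutex_init(&box.mutex, nullptr);
    pthread_cond_init(&box.cond, nullptr);

    pthread_t tid;
    int result = -1;
    if (pthread_create(&tid, nullptr, mailboxWorker, &box) == 0) {
        pthread_mutex_lock(&box.mutex);
        box.value = input;
        box.ready = true;                // change the condition first...
        pthread_cond_signal(&box.cond);  // ...then wake the waiting thread
        pthread_mutex_unlock(&box.mutex);

        pthread_join(tid, nullptr);      // after the join, box.result is safe to read
        result = box.result;
    }
    pthread_cond_destroy(&box.cond);
    pthread_mutex_destroy(&box.mutex);
    return result;
}
```

Calling `doubleViaWorker(21)` returns 42. The key design choice is that the flag, not the signal itself, carries the information: a signal sent while nobody is waiting is simply lost, but the flag stays set until the worker checks it.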

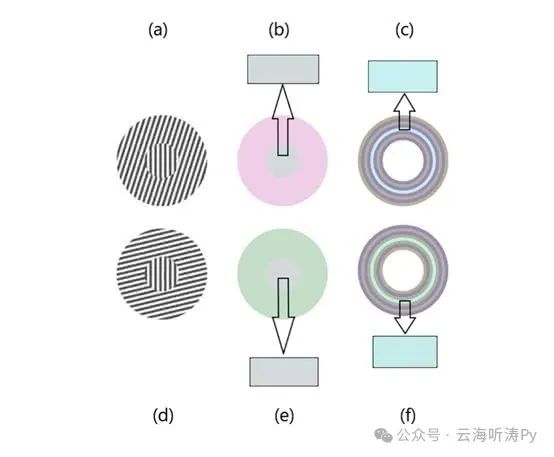

(2) The Efficient Magic of Data Parallelism

Data parallelism is a “sharp weapon” of parallel computing, and its principle can be understood through a toy factory. Suppose we need to produce a large batch of identical toys. Rather than having one workstation build all of them, we divide the raw materials (data) among several identical workstations (threads), and each workstation runs the complete production process on its own share. Because every workstation performs the same operations on a different portion of the data, they can all work at the same time, and the batch is finished much sooner. (Splitting the process into steps, with each worker handling only one step, is a different technique known as pipeline parallelism.)
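In neural network training, data parallelism usually means that every thread runs the same gradient computation on a different shard of a mini-batch. The sketch below assumes a deliberately tiny model (a single weight `w`, predictions `w * x`, mean squared error $L = \frac{1}{N}\sum_i (w x_i - y_i)^2$, so $\frac{\partial L}{\partial w} = \frac{2}{N}\sum_i (w x_i - y_i)\,x_i$) and uses `std::thread`; `gradientDataParallel` is a hypothetical name. Each thread sums its shard’s contribution to the gradient, and the partial sums are combined and scaled at the end.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical data-parallel gradient for the one-weight model y_hat = w * x
// with mean squared error. Every thread runs the SAME computation on a
// DIFFERENT shard of the batch; the partial sums are combined afterwards.
double gradientDataParallel(double w,
                            const std::vector<double>& xs,
                            const std::vector<double>& ys,
                            unsigned numThreads) {
    assert(numThreads > 0 && xs.size() == ys.size() && !xs.empty());
    const std::size_t n = xs.size();
    const std::size_t shard = (n + numThreads - 1) / numThreads;
    std::vector<double> partial(numThreads, 0.0);  // one slot per thread
    std::vector<std::thread> workers;

    for (unsigned t = 0; t < numThreads; ++t) {
        const std::size_t first = std::min(n, t * shard);
        const std::size_t last = std::min(n, first + shard);
        workers.emplace_back([&, t, first, last] {
            double sum = 0.0;
            for (std::size_t i = first; i < last; ++i)
                sum += (w * xs[i] - ys[i]) * xs[i];  // this shard's contribution
            partial[t] = sum;
        });
    }
    for (auto& worker : workers) worker.join();

    double total = 0.0;
    for (double p : partial) total += p;  // combine the shards' results
    return 2.0 * total / static_cast<double>(n);
}
```

With `xs = {1, 2, 3, 4}`, `ys = {2, 4, 6, 8}` and `w = 0`, the gradient is -30 regardless of the thread count; a training step would then apply `w -= learningRate * gradient`. Because every shard uses the same `w`, the combined result matches a single-threaded pass over the whole batch, up to floating-point rounding. Multi-GPU and multi-machine training follows the same pattern at a larger scale, typically averaging gradients across model replicas.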