4 Nginx Reverse Proxy

4.1 Basics of Proxy

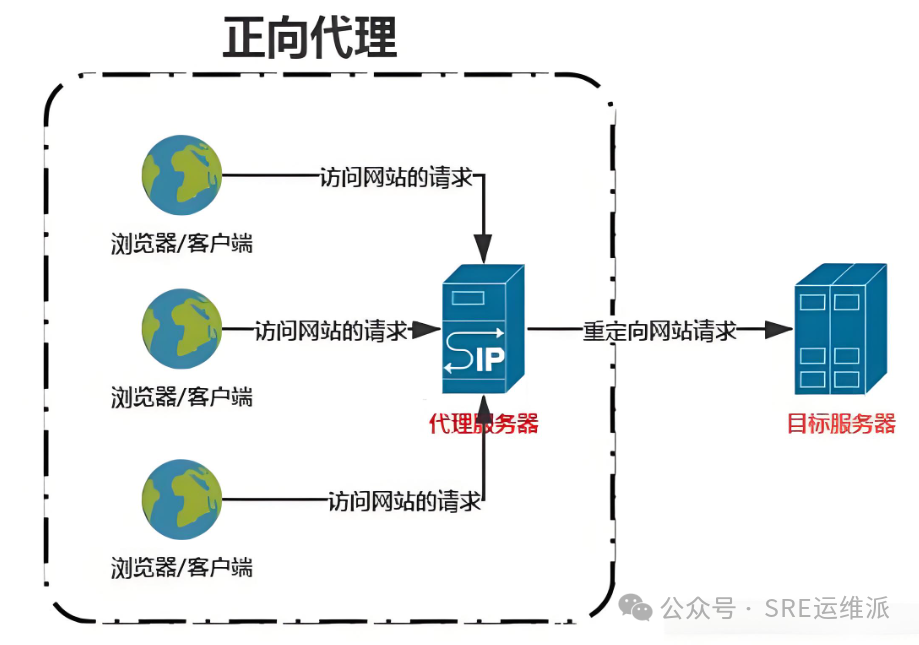

There are two types of proxy server: forward proxy and reverse proxy. They sit at different points in the communication path and serve different purposes.

Forward Proxy

Characteristics

- The proxy server is located between the client and the target server
- The client sends a request to the proxy server, which forwards the request to the target server and returns the response from the target server to the client
- The target server is unaware of the client's existence; it only knows that a proxy server is sending requests to it
- When the client accesses internet resources through a forward proxy, it usually needs to be configured to use the proxy

Uses

- Bypass access restrictions: the client reaches resources it cannot access directly by routing requests through the proxy
- Hide client identity: the client can hide its real IP address behind a forward proxy

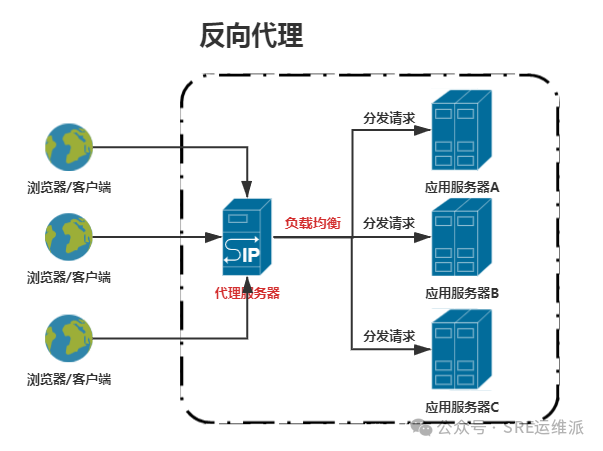

Reverse Proxy

Characteristics

- The proxy server is located between the target server and the client
- The client sends a request to the proxy server, which forwards the request to one or more target servers and returns the response from one of the target servers to the client
- The target server is unaware of the final client's identity; it only knows that a proxy server is sending requests to it
- Used to distribute client requests to multiple servers, achieving load balancing

Uses

- Load balancing: Ensures even distribution of server load by distributing traffic to multiple servers
- Caching and acceleration: A reverse proxy can cache static content, reducing the load on the target server and improving access speed
- Security: Hides the real server's information, enhancing security, and can perform SSL termination

Similarities and Differences

Similarities

- Intermediate layer: Both forward and reverse proxies are intermediate layers between the client and the target server
- Proxy function: They both act as proxies, handling requests and responses, making communication more flexible and secure

Differences

- Direction: A forward proxy acts on behalf of the client, while a reverse proxy acts on behalf of the server
- Purpose: A forward proxy is mainly used for access control and hiding client identity, while a reverse proxy is mainly used for load balancing, caching, and enhancing security
- Configuration: A forward proxy must be configured on the client, while a reverse proxy is transparent to the client, which needs no special configuration and is usually unaware of it

4.2 Nginx and LVS

Nginx and LVS (Linux Virtual Server) are both popular proxy and load balancing solutions, but they have different characteristics and application scenarios

The choice between Nginx and LVS depends on specific application requirements and complexity. Nginx is more suitable as a web server and application layer load balancer, while LVS is more suitable for transport layer load balancing

Similarities

- Load balancing: Both Nginx and LVS can act as load balancers, distributing traffic to multiple backend servers, improving system availability and performance
- Performance: Both Nginx and LVS have high-performance characteristics, capable of handling a large number of concurrent connections and requests

Differences

- Layer: Nginx performs load balancing and reverse proxy at the application layer, while LVS performs load balancing at the transport layer
- Functionality: In addition to load balancing, Nginx can also act as a reverse proxy and static file server; LVS mainly focuses on load balancing, achieving simple and efficient layer 4 distribution
- Configuration and management: Nginx configuration is relatively simple and easy to manage, suitable for applications of various scales; LVS requires in-depth knowledge of the Linux kernel and related configurations, suitable for large-scale scenarios with higher performance requirements

LVS does not listen on ports, does not process request data, and does not participate in the handshake process; it only forwards data packets at the kernel level

Nginx needs to receive requests at the application layer, and based on the client’s request parameters and the rules configured in Nginx, it then initiates requests to the backend server as a client

LVS typically does layer 4 proxying, while Nginx does layer 7 proxying

4.3 Implementing HTTP Protocol Reverse Proxy

4.3.1 Related Directives and Parameters

Nginx can provide HTTP protocol reverse proxy services based on the ngx_http_proxy_module module, which is the default module of Nginx

Reference: http://nginx.org/en/docs/http/ngx_http_proxy_module.html

    proxy_pass URL;
    # Address of the backend server to forward to; accepts a hostname, domain name,
    # or IP address, optionally followed by a port
    # Scope: location, if in location, limit_except

    proxy_hide_header field;
    # By default Nginx does not pass the Date, Server, X-Pad, and X-Accel-... response
    # headers from the backend server to the client; all other response headers are returned
    # Use proxy_hide_header to explicitly name further response headers that should not be returned
    # Scope: http, server, location

    proxy_pass_header field;
    # Explicitly allow a backend response header that is hidden by default to be returned to the client
    # Scope: http, server, location

    proxy_pass_request_body on|off;
    # Whether to send the body of the client's HTTP request to the backend server, default on
    # Scope: http, server, location

    proxy_pass_request_headers on|off;
    # Whether to send the client's HTTP request headers to the backend server, default on
    # Scope: http, server, location

    proxy_connect_timeout time;
    # Timeout for Nginx to establish a connection with the backend server, default 60s;
    # on timeout the client receives 504
    # Scope: http, server, location

    proxy_read_timeout time;
    # How long Nginx waits for the backend server to return data, default 60s;
    # on timeout the client receives 504
    # Scope: http, server, location

    proxy_send_timeout time;
    # Timeout for Nginx to send the request to the backend server, default 60s;
    # on timeout the connection to the backend is closed and the client receives 504
    # Scope: http, server, location

    proxy_set_body value;
    # Redefine the body of the request sent to the backend server; may contain text, variables, etc.
    # Scope: http, server, location

    proxy_set_header field value;
    # Change or add request header fields sent to the backend server
    # Scope: http, server, location

    proxy_http_version 1.0|1.1;
    # HTTP protocol version used for requests to the backend server, default 1.0
    # Scope: http, server, location

    proxy_ignore_client_abort on|off;
    # Whether Nginx keeps the backend connection going when the client closes its connection
    # Default off: if the client disconnects, Nginx also closes the backend connection
    # on: if the client disconnects, Nginx continues processing the backend connection
    # Scope: http, server, location

    proxy_headers_hash_bucket_size size;
    # Bucket size of the hash tables used by proxy_hide_header and proxy_set_header, default 64
    # Scope: http, server, location

    proxy_headers_hash_max_size size;
    # Maximum size of those hash tables, default 512
    # Scope: http, server, location

    proxy_next_upstream error|timeout|invalid_header|http_500|http_502|http_503|http_504|http_403|http_404|http_429|non_idempotent|off ...;
    # For which kinds of failure on the current backend server the request is passed to the next backend server
    # Default: error timeout, i.e. try another backend server when the current one fails with an error or a timeout
    # Scope: http, server, location

    proxy_cache zone|off;
    # Whether to enable proxy caching; default off (not enabled); zone is the cache name
    # Scope: http, server, location

    proxy_cache_path path [levels=levels] [use_temp_path=on|off] keys_zone=name:size
                     [inactive=time] [max_size=size] [min_free=size]
                     [manager_files=number] [manager_sleep=time] [manager_threshold=time]
                     [loader_files=number] [loader_sleep=time] [loader_threshold=time]
                     [purger=on|off] [purger_files=number] [purger_sleep=time] [purger_threshold=time];
    # Where cached data is stored once proxy caching is enabled; not set by default
    # Scope: http
    # path          directory for cached data; Nginx must have write permission
    # levels        directory hierarchy of the cache, named in hexadecimal; levels=1:2 means
    #               16 first-level directories (0-f), each with 16*16 subdirectories (00-ff)
    # keys_zone     name:size; name is the cache name referenced by proxy_cache and must be
    #               defined before use, size is the size of that zone
    # inactive      lifetime of cached data that is not accessed, default 10 minutes
    # max_size      maximum disk space occupied by the cache

    proxy_cache_key string;
    # Key for cached data; different keys correspond to different cache files
    # Default: $scheme$proxy_host$request_uri
    # Scope: http, server, location

    proxy_cache_valid [code ...] time;
    # Cache duration per response status code; can be given multiple times; not set by default
    # Scope: http, server, location

    proxy_cache_use_stale error|timeout|invalid_header|updating|http_500|http_502|http_503|http_504|http_403|http_404|http_429|off ...;
    # For which backend failures expired cached data is used to answer the client directly
    # Default off
    # Scope: http, server, location

    proxy_cache_methods GET|HEAD|POST ...;
    # Which request methods are cached, default GET HEAD
    # Scope: http, server, location

4.3.2 Basic Configuration

    # Forward to a specified IP
    server {
        listen 80;
        server_name www.m99-josedu.com;
        #root /var/www/html/www.m99-josedu.com;
        location / {
            # 10.0.0.210 must be running a web service; the request is
            # handled by its default_server configuration
            proxy_pass http://10.0.0.210;
        }
    }

    [root@ubuntu ~]# curl http://10.0.0.210
    hello world

    # The request is forwarded to backend 10.0.0.210
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    hello world

    # Forward to a specified IP and port
    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.210:8080;
        }
    }

    # Backend host configuration
    server {
        listen 8080;
        root /var/www/html/8080;
    }

    [root@ubuntu ~]# curl www.m99-josedu.com
    hello 8080

    # Forward to a specified domain
    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://www.node-1.com;
        }
    }

    [root@ubuntu ~]# cat /etc/hosts
    10.0.0.210 www.node-1.com

    # Backend host configuration
    server {
        listen 80;
        root /var/www/html/www.node-1.com;
        server_name www.node-1.com;
    }

    [root@ubuntu ~]# echo "node-1" > /var/www/html/www.node-1.com/index.html

    # Test
    [root@ubuntu ~]# curl www.m99-josedu.com
    node-1

Passing specified request headers through to the backend

In the request above, the client accesses http://www.m99-josedu.com; the intermediate host receives the request and then, acting as a client itself, requests http://www.node-1.com.

    # Client host configuration
    [root@ubuntu ~]# cat /etc/hosts
    10.0.0.206 www.m99-josedu.com

    # Intermediate host configuration
    [root@ubuntu ~]# cat /etc/hosts
    10.0.0.210 www.m99-josedu.com

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.210;
            # Pass the Host value from the client's request header to the backend server
            proxy_set_header Host $http_host;
        }
    }

    # Backend host configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
    }

    [root@ubuntu ~]# echo "m99" > /var/www/html/www.m99-josedu.com/index.html

    # Client test
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    m99

If the backend service is unavailable, the client receives 502.

    # Stop the backend nginx
    [root@ubuntu ~]# systemctl stop nginx.service

    # Client access to the intermediate Nginx
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    <html>
    <head><title>502 Bad Gateway</title></head>
    <body>
    <center><h1>502 Bad Gateway</h1></center>
    <hr><center>nginx</center>
    </body>
    </html>

    [root@ubuntu ~]# curl http://www.m99-josedu.com -I
    HTTP/1.1 502 Bad Gateway
    Server: nginx
    Date: Sun, 11 Feb 2025 12:41:53 GMT
    Content-Type: text/html; charset=utf8
    Content-Length: 150
    Connection: keep-alive

If the connection to the backend server times out, the client receives 504.

    # On the backend: start the Nginx service and add a firewall rule that
    # DROPs any request coming from the intermediate server
    [root@ubuntu ~]# systemctl start nginx.service
    [root@ubuntu ~]# iptables -A INPUT -s 10.0.0.206 -j DROP

    # Client test: times out after 60s and shows 504
    [root@ubuntu ~]# time curl http://www.m99-josedu.com
    <html>
    <head><title>504 Gateway Time-out</title></head>
    <body>
    <center><h1>504 Gateway Time-out</h1></center>
    <hr><center>nginx</center>
    </body>
    </html>

    real 1m0.057s
    user 0m0.009s
    sys 0m0.010s
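The 502 and 504 cases in this walkthrough differ only in how the connection attempt to the backend fails: an actively refused connection fails immediately, while silently dropped packets fail only when the connect timeout expires. The following Python sketch models that distinction. It is an illustration, not Nginx's implementation; the function names `gateway_status` and `probe` are hypothetical.

```python
import socket
from typing import Optional


def gateway_status(exc: Exception) -> int:
    """Map a failed backend connection attempt to a gateway status code.

    Assumption for this sketch: a timeout maps to 504 and every other
    connection failure (for example a refused connection) maps to 502.
    """
    if isinstance(exc, (socket.timeout, TimeoutError)):
        return 504
    return 502


def probe(host: str, port: int, connect_timeout: float) -> Optional[int]:
    """Try to connect to a backend; return None on success, else 502 or 504."""
    try:
        with socket.create_connection((host, port), timeout=connect_timeout):
            return None
    except OSError as exc:
        return gateway_status(exc)


if __name__ == "__main__":
    print(gateway_status(ConnectionRefusedError()))  # refused connection
    print(gateway_status(socket.timeout()))          # connect timeout
```

In this model, `connect_timeout` plays the role of `proxy_connect_timeout`: it only affects how long the timeout case takes, not the refused case.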

    # Change the connect timeout to 10s
    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.210;
            proxy_set_header Host $http_host;
            proxy_connect_timeout 10s;
        }
    }

    # Client test again
    [root@ubuntu ~]# time curl http://www.m99-josedu.com
    <html>
    <head><title>504 Gateway Time-out</title></head>
    <body>
    <center><h1>504 Gateway Time-out</h1></center>
    <hr><center>nginx</center>
    </body>
    </html>

    real 0m10.032s
    user 0m0.005s
    sys 0m0.014s

    # Change the backend server policy from DROP to REJECT
    [root@ubuntu ~]# iptables -F
    [root@ubuntu ~]# iptables -A INPUT -s 10.0.0.206 -j REJECT

    # Client test: 502 is returned immediately
    [root@ubuntu ~]# time curl http://www.m99-josedu.com
    <html>
    <head><title>502 Bad Gateway</title></head>
    <body>
    <center><h1>502 Bad Gateway</h1></center>
    <hr><center>nginx</center>
    </body>
    </html>

    real 0m0.013s
    user 0m0.005s
    sys 0m0.006s

4.3.3 Implementing Static and Dynamic Separation

Requests are routed by URI prefix so that dynamic (API) content and static content are served by different backend servers.

| Role | IP |
| --- | --- |
| Client | 10.0.0.208 |
| Proxy Server | 10.0.0.206 |
| API Server | 10.0.0.159 |
| Static Server | 10.0.0.210 |

    # Client configuration
    [root@ubuntu ~]# cat /etc/hosts
    10.0.0.206 www.m99-josedu.com

    # Proxy Server configuration
    server {
        listen 80;
        server_name www.m99-josedu.com;
        #root /var/www/html/www.m99-josedu.com;

        location /api {
            proxy_pass http://10.0.0.159;
            proxy_set_header Host "api.m99-josedu.com";
        }

        location /static {
            proxy_pass http://10.0.0.210;
            proxy_set_header Host "static.m99-josedu.com";
        }
    }
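The routing decision made by the two prefix locations in the proxy configuration can be sketched in Python as follows. This is a simplified model that covers only plain prefix locations (no regex or exact-match locations), and the names `ROUTES` and `pick_backend` are hypothetical.

```python
from typing import Dict, Optional

# Prefix -> backend, mirroring the two location blocks of the proxy server.
ROUTES: Dict[str, str] = {
    "/api": "http://10.0.0.159",
    "/static": "http://10.0.0.210",
}


def pick_backend(uri: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the backend of the longest prefix that matches the URI.

    None means no proxying location matched the request.
    """
    matches = [prefix for prefix in routes if uri.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


if __name__ == "__main__":
    for uri in ("/api/index.html", "/static/index.html", "/other"):
        print(uri, "->", pick_backend(uri))
```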

    # API Server configuration
    server {
        listen 80;
        root /var/www/html/api.m99-josedu.com/;
        server_name api.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/api.m99-josedu.com/api/index.html
    api

    # Static Server configuration
    server {
        listen 80;
        root /var/www/html/static.m99-josedu.com;
        server_name static.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/static.m99-josedu.com/static/index.html
    static

    # Client test
    [root@ubuntu ~]# curl http://www.m99-josedu.com/api/index.html
    api
    [root@ubuntu ~]# curl http://www.m99-josedu.com/static/index.html
    static

Difference between proxy_pass with and without a trailing slash

    # Without a trailing slash, the request URI is passed to the backend unchanged
    # http://www.m99-josedu.com/api/index.html ----> http://api.m99-josedu.com/api/index.html
    location /api {
        proxy_pass http://10.0.0.159;
        proxy_set_header Host "api.m99-josedu.com";
    }
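The rule that separates the two forms of proxy_pass (with and without a URI part such as a trailing slash) can be modeled in a few lines of Python. This is a simplified sketch for prefix locations only; `upstream_uri` is a hypothetical helper, not part of Nginx.

```python
from urllib.parse import urlsplit


def upstream_uri(location: str, proxy_pass: str, request_uri: str) -> str:
    """Model the URI sent to the backend for a prefix location.

    - proxy_pass without a URI part: the request URI is passed unchanged.
    - proxy_pass with a URI part (even just "/"): the part of the request
      URI that matched the location is replaced by that URI part.
    """
    uri_part = urlsplit(proxy_pass).path
    if uri_part == "":
        return request_uri
    return uri_part + request_uri[len(location):]


if __name__ == "__main__":
    print(upstream_uri("/api", "http://10.0.0.159", "/api/index.html"))
    print(upstream_uri("/api", "http://10.0.0.159/", "/api/index.html"))
    print(upstream_uri("/api/", "http://10.0.0.159/", "/api/index.html"))
```

Note that in this model `location /api` combined with `proxy_pass http://10.0.0.159/` yields `//index.html`. A backend Nginx merges the duplicate slash by default, so the request still resolves to `/index.html`; writing the location as `/api/` avoids the double slash altogether.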

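    # With a trailing slash, the part of the URI that matched the location (/api) is replaced by /
    # http://www.m99-josedu.com/api/index.html -----> http://api.m99-josedu.com/index.html
    # (strictly, the backend receives //index.html; a backend nginx merges the duplicate slash by default)
    location /api {
        proxy_pass http://10.0.0.159/;
        proxy_set_header Host "api.m99-josedu.com";
    }

Implementing a proxy for specific resources

    # The alternatives are grouped so that both the escaped dot and the $ anchor apply to every extension
    location ~ \.(jpe?g|png|bmp|gif)$ {
        proxy_pass http://10.0.0.159;
        proxy_set_header Host "api.m99-josedu.com";
    }

4.3.4 Proxy Server Implementing Data Caching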

Prerequisite: Ensure all servers have the same time and timezone for testing

    # Proxy Server configuration
    # Define the cache
    proxy_cache_path /tmp/proxycache levels=1:2 keys_zone=proxycache:20m inactive=60s max_size=1g;

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location /static {
            proxy_pass http://10.0.0.210;
            proxy_set_header Host "static.m99-josedu.com";
            proxy_cache proxycache;               # Use the cache
            proxy_cache_key $request_uri;
            proxy_cache_valid 200 302 301 90s;
            proxy_cache_valid any 2m;             # proxy_cache_valid must be set, otherwise caching does not take effect
        }
    }

    # Reload to generate the cache directory
    [root@ubuntu ~]# nginx -s reload
    [root@ubuntu ~]# ll /tmp/proxycache/
    total 8
    drwx------ 2 www-data root 4096 Feb 12 23:09 ./
    drwxrwxrwt 14 root root 4096 Feb 12 23:09 ../

    # Static Server configuration
    server {
        listen 80;
        root /var/www/html/static.m99-josedu.com;
        server_name static.m99-josedu.com;
    }

    [root@ubuntu ~]# ls -lh /var/www/html/static.m99-josedu.com/static/
    total 8.0K
    -rw-r--r-- 1 root root 657 Feb 12 15:14 fstab
    -rw-r--r-- 1 root root 7 Feb 12 04:42 index.html

    # Client test
    [root@ubuntu ~]# curl http://www.m99-josedu.com/static/fstab

    # View the cached data on the Proxy Server; the filename is the hash value of the key
    [root@ubuntu ~]# tree /tmp/proxycache/
    /tmp/proxycache/
    └── 3
        └── ab
            └── 2d291e4d45687e428f0215bec190aab3

    # The cache file is not a text file
    [root@ubuntu ~]# file /tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3
    /tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3: data
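The directory layout seen in the tree output follows from `levels=1:2`: the directory names are taken from the end of the hash, one character for the first level and the two characters before it for the second. The Python sketch below reproduces that layout for a given hash; `cache_file_path` and `key_digest` are hypothetical helper names, and `key_digest` assumes the filename is the MD5 hex digest of the cache key.

```python
import hashlib
from typing import Sequence


def key_digest(key: str) -> str:
    """MD5 hex digest of a cache key (assumed here to be the cache filename)."""
    return hashlib.md5(key.encode()).hexdigest()


def cache_file_path(cache_root: str, digest: str,
                    levels: Sequence[int] = (1, 2)) -> str:
    """Build the on-disk path for a digest under the given levels setting.

    Each level takes its characters from the end of the digest, moving
    leftwards: levels=1:2 uses the last character, then the two before it.
    """
    parts = []
    end = len(digest)
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return "/".join([cache_root.rstrip("/")] + parts + [digest])


if __name__ == "__main__":
    digest = "2d291e4d45687e428f0215bec190aab3"  # filename from the tree output
    print(cache_file_path("/tmp/proxycache", digest))
```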

    # View the current time and the cache file time
    [root@ubuntu ~]# date
    Mon Feb 12 11:35:55 PM CST 2025
    [root@ubuntu ~]# stat /tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3
      File: /tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3
      Size: 1270 Blocks: 8 IO Block: 4096 regular file
    Device: fd00h/64768d Inode: 786517 Links: 1
    Access: (0600/-rw-------) Uid: ( 33/www-data) Gid: ( 33/www-data)
    Access: 2025-02-12 23:35:50.506517303 +0800
    Modify: 2025-02-12 23:35:50.506517303 +0800
    Change: 2025-02-12 23:35:50.506517303 +0800

    # After the lifecycle ends, the file is deleted
    [root@ubuntu ~]# date
    Mon Feb 12 11:36:54 PM CST 2025
    [root@ubuntu ~]# stat /tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3
    stat: cannot statx '/tmp/proxycache/3/ab/2d291e4d45687e428f0215bec190aab3': No such file or directory

However, within the cache validity period, if the backend server content is updated, the client still receives the cached data. Even if the real data on the backend is deleted, the client can still access the cached copy.

    [root@ubuntu ~]# rm -f /var/www/html/static.m99-josedu.com/static/fstab

    # Client test
    [root@ubuntu ~]# curl http://www.m99-josedu.com/static/fstab

    # Add a response header field on the Proxy Server
    proxy_cache_path /tmp/proxycache levels=1:2 keys_zone=proxycache:20m inactive=60s max_size=1g;

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location /static {
            proxy_pass http://10.0.0.210;
            proxy_set_header Host "static.m99-josedu.com";
            proxy_cache proxycache;
            proxy_cache_key $request_uri;
            proxy_cache_valid 200 302 301 90s;
            proxy_cache_valid any 2m;
            add_header X-Cache $upstream_cache_status;   # Response header that shows whether the cache was hit
        }
    }
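To make the values of the X-Cache header easier to read, here is a toy Python model of a cache with a fixed validity period. It only illustrates the MISS, HIT, and EXPIRED values of `$upstream_cache_status`; it ignores the `inactive` parameter and every other status value, and the class name `TinyCache` is hypothetical.

```python
from typing import Dict


class TinyCache:
    """Toy cache: remembers when each key was stored and reports a status."""

    def __init__(self, valid_seconds: float) -> None:
        self.valid_seconds = valid_seconds
        self._stored_at: Dict[str, float] = {}

    def lookup(self, key: str, now: float) -> str:
        stored = self._stored_at.get(key)
        if stored is None:
            # Nothing cached yet: fetch from the backend and store it.
            self._stored_at[key] = now
            return "MISS"
        if now - stored < self.valid_seconds:
            # Entry is still within its validity period.
            return "HIT"
        # Entry is too old: refetch from the backend and store the fresh copy.
        self._stored_at[key] = now
        return "EXPIRED"


if __name__ == "__main__":
    cache = TinyCache(valid_seconds=90)  # mirrors proxy_cache_valid ... 90s
    for t in (0, 6, 100, 101):
        print(t, cache.lookup("/static/fstab", now=t))
```

The clock is passed in explicitly so the behavior can be checked without waiting for real time to pass.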

    # Currently there are no cached files
    [root@ubuntu ~]# tree /tmp/proxycache/
    /tmp/proxycache/
    └── 3
        └── ab

    # Client test
    [root@ubuntu ~]# curl -I http://www.m99-josedu.com/static/fstab
    HTTP/1.1 200 OK
    Server: nginx
    Date: Mon, 12 Feb 2025 15:51:48 GMT
    Content-Type: application/octet-stream
    Content-Length: 657
    Connection: keep-alive
    Last-Modified: Mon, 12 Feb 2025 15:14:44 GMT
    ETag: "65ca35e4-291"
    X-Cache: MISS             # First access, not a cache hit
    Accept-Ranges: bytes

    [root@ubuntu ~]# curl -I http://www.m99-josedu.com/static/fstab
    HTTP/1.1 200 OK
    Server: nginx
    Date: Mon, 12 Feb 2025 15:51:54 GMT
    Content-Type: application/octet-stream
    Content-Length: 657
    Connection: keep-alive
    Last-Modified: Mon, 12 Feb 2025 15:14:44 GMT
    ETag: "65ca35e4-291"
    X-Cache: HIT              # Cached data hit within the lifecycle
    Accept-Ranges: bytes

Stress test comparing performance with and without the cache

    # Prepare a large file on the backend server
    [root@ubuntu ~]# ls -lh /var/www/html/static.m99-josedu.com/static/syslog
    -rw-r----- 1 root root 242K Feb 13 00:08 /var/www/html/static.m99-josedu.com/static/syslog

    # 100 concurrent clients, 10000 requests in total
    [root@ubuntu ~]# ab -c 100 -n 10000 http://www.m99-josedu.com/static/syslog
    This is ApacheBench, Version 2.3 <$Revision: 1879490 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Benchmarking www.m99-josedu.com (be patient)
    Completed 1000 requests
    Completed 2000 requests
    Completed 3000 requests
    Completed 4000 requests
    Completed 5000 requests
    ......
    Total transferred: 3190000 bytes
    HTML transferred: 1620000 bytes
    Requests per second: 3300.30 [#/sec] (mean)     # With cache, about 3300 requests per second
    Time per request: 30.300 [ms] (mean)
    Time per request: 0.303 [ms] (mean, across all concurrent requests)
    Transfer rate: 1028.12 [Kbytes/sec] received
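The two "Time per request" lines in the ab output are derived from the request rate and the concurrency level. The short Python sketch below recomputes them from the figures of the cached run; the function name `ab_times` is hypothetical.

```python
from typing import Tuple


def ab_times(concurrency: int, requests_per_second: float) -> Tuple[float, float]:
    """Return (mean time per request, mean across all concurrent requests) in ms."""
    per_request_ms = concurrency / requests_per_second * 1000
    across_all_ms = 1000 / requests_per_second
    return per_request_ms, across_all_ms


if __name__ == "__main__":
    per_request, across_all = ab_times(concurrency=100, requests_per_second=3300.30)
    print(f"{per_request:.3f} ms, {across_all:.3f} ms")
```

The first value is what a single client waits on average; the second is the average interval at which the server completes requests overall.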

    # Modify the Proxy Server configuration to disable the cache, then test again
    proxy_cache_path /tmp/proxycache levels=1:2 keys_zone=proxycache:20m inactive=60s max_size=1g;

    server {
        listen 80;
        server_name www.m99-josedu.com;
        #root /var/www/html/www.m99-josedu.com;

        location /api {
            proxy_pass http://10.0.0.159/;
            proxy_set_header Host "api.m99-josedu.com";
        }

        location /static {
            proxy_pass http://10.0.0.210;
            proxy_set_header Host "static.m99-josedu.com";
            proxy_cache off;
            proxy_cache_key $request_uri;
            proxy_cache_valid 200 302 301 90s;
            proxy_cache_valid any 2m;
            add_header X-Cache $upstream_cache_status;
        }
    }

    [root@ubuntu ~]# ab -c 100 -n 10000 http://www.m99-josedu.com/static/syslog
    This is ApacheBench, Version 2.3 <$Revision: 1879490 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Benchmarking www.m99-josedu.com (be patient)
    Completed 1000 requests
    Completed 2000 requests
    Completed 3000 requests
    Completed 4000 requests
    Completed 5000 requests
    ......
    Total transferred: 3190000 bytes
    HTML transferred: 1620000 bytes
    Requests per second: 1845.59 [#/sec] (mean)     # Without cache, about 1845 requests per second
    Time per request: 54.183 [ms] (mean)
    Time per request: 0.542 [ms] (mean, across all concurrent requests)
    Transfer rate: 574.94 [Kbytes/sec] received

4.3.5 Implementing Client IP Address Transparency

When Nginx is used as a proxy, the backend server by default sees only the proxy's address and cannot obtain the real client IP address.

| Role | IP |
| --- | --- |
| Client | 10.0.0.208 |
| Proxy Server | 10.0.0.206 |
| Real Server | 10.0.0.210 |

    # Proxy Server configuration
    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.210;
        }
    }

    # Real Server configuration
    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        root /var/www/html;
        index index.html index.htm index.nginx-debian.html;
        server_name _;
        location / {
            return 200 ${remote_addr}---${http_x_real_ip}---${http_x_forwarded_for};
        }
    }

    # Client test: the backend server can only obtain the proxy server's IP
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.206------

Modify the proxy server configuration to pass through the real client IP

    server {
        listen 80;
        server_name www.m99-josedu.com;
        #root /var/www/html/www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.210;
            proxy_set_header X-Real-IP $remote_addr;
            # $proxy_add_x_forwarded_for appends the client IP ($remote_addr) to the
            # X-Forwarded-For header of the incoming request, with multiple IPs
            # separated by commas; if the request has no X-Forwarded-For header,
            # the value is just $remote_addr
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }
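The behavior of `$proxy_add_x_forwarded_for` can be sketched in Python as follows. The sketch assumes every proxy in a chain sets `X-Forwarded-For` with this variable; the function names `proxy_add_x_forwarded_for` and `through_chain` are hypothetical and the code only models the header value, not Nginx itself.

```python
from typing import List, Optional, Tuple


def proxy_add_x_forwarded_for(incoming_xff: Optional[str], remote_addr: str) -> str:
    """Append remote_addr to the incoming header, or start a new list."""
    if incoming_xff:
        return f"{incoming_xff}, {remote_addr}"
    return remote_addr


def through_chain(client_ip: str, proxy_ips: List[str]) -> Tuple[str, Optional[str]]:
    """Pass a request through proxies in order.

    Returns (remote_addr seen by the final server, X-Forwarded-For it receives).
    """
    xff: Optional[str] = None
    peer = client_ip  # address each hop sees as $remote_addr
    for proxy_ip in proxy_ips:
        xff = proxy_add_x_forwarded_for(xff, peer)
        peer = proxy_ip
    return peer, xff


if __name__ == "__main__":
    print(through_chain("10.0.0.208", ["10.0.0.206"]))
    print(through_chain("10.0.0.208", ["10.0.0.206", "10.0.0.159"]))
```

With a single proxy the backend receives only the client IP in the header; with more proxies, each hop adds the address of the peer that connected to it, so the original client stays first in the list.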

    # Client test
    # $remote_addr gets the proxy IP
    # $http_x_real_ip gets the real client IP
    # $http_x_forwarded_for gets the real client IP
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.206---10.0.0.208---10.0.0.208

Implementing multi-level proxy client IP transparency

| Role | IP |
| --- | --- |
| Client | 10.0.0.208 |
| Proxy Server - First | 10.0.0.206 |
| Proxy Server - Second | 10.0.0.159 |
| Real Server | 10.0.0.210 |

Request path: 208 --> 206 --> 159 --> 210

    # Proxy Server - First configuration
    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.159;
        }
    }

    # Proxy Server - Second configuration
    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        root /var/www/html;
        index index.html index.htm index.nginx-debian.html;
        server_name _;
        location / {
            proxy_pass http://10.0.0.210;
        }
    }

    # Real Server configuration
    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        root /var/www/html;
        index index.html index.htm index.nginx-debian.html;
        server_name _;
        location / {
            return 200 ${remote_addr}---${http_x_real_ip}---${http_x_forwarded_for};
        }
    }

    # Client test: through $remote_addr the Real Server can only get the IP of the proxy directly in front of it
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159------

Modify the first-level proxy server configuration

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.159;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

    # Client test
    # $remote_addr gets the second-level proxy IP (the proxy directly in front of the Real Server)
    # $http_x_real_ip gets the real client IP
    # $http_x_forwarded_for gets the real client IP
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159---10.0.0.208---10.0.0.208

Continue by modifying the second-level proxy server configuration

    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        root /var/www/html;
        index index.html index.htm index.nginx-debian.html;
        server_name _;
        location / {
            proxy_pass http://10.0.0.210;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

    # Client test
    # $remote_addr gets the second-level proxy IP
    # $http_x_real_ip gets the first-level proxy IP, because the second-level proxy overwrites X-Real-IP
    # $http_x_forwarded_for accumulates the X-Forwarded-For values added by the first-level and second-level proxies
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159---10.0.0.206---10.0.0.208, 10.0.0.206

The first-level proxy neither passes through nor adds the request header fields

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://10.0.0.159;
            #proxy_set_header X-Real-IP $remote_addr;
            #proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

    # Client test
    # $remote_addr gets the second-level proxy IP
    # $http_x_real_ip gets the first-level proxy IP
    # $http_x_forwarded_for gets the first-level proxy IP, because the client IP was never recorded
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159---10.0.0.206---10.0.0.206
