With the full arrival of the 5G era, audio and video have become an indispensable part of our daily lives and work. From the popularity of short-video platforms and the rise of online education to the widespread use of remote meetings, live streaming, filming, and editing, the application scenarios for audio and video keep growing richer, and demand is rising sharply.

However, despite strong market demand, qualified audio and video developers are in short supply. This creates unprecedented opportunities for those who are passionate about audio and video development and eager to make a mark in the field.

Today, we will share the highlights of the “2024 Audio and Video Technology Development Report” and discuss the employment prospects in audio and video development.

1. Current Status of Audio and Video Employment

1.1 Basic Profile of Technical Personnel

Practitioners in the audio and video industry are mainly concentrated in technical positions, and their profile shows the following characteristics:

-

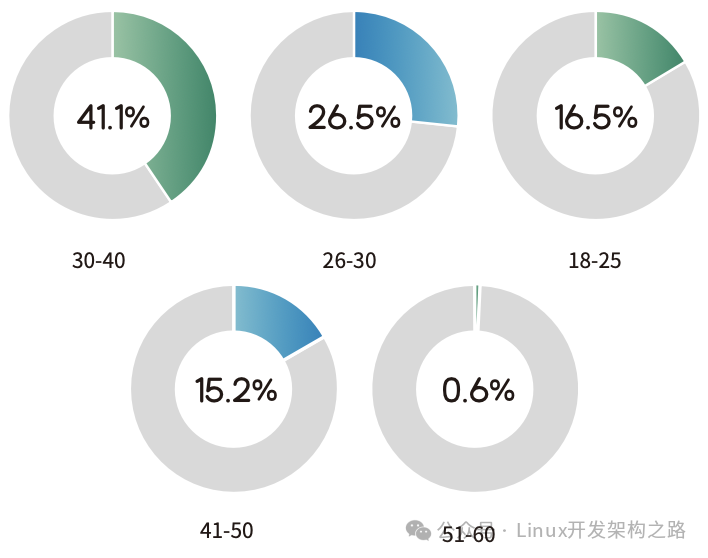

Gender and Age: A significant proportion are male, with age distribution concentrated between 31 and 40 years, reflecting the industry’s reliance on mid-career technical personnel.

-

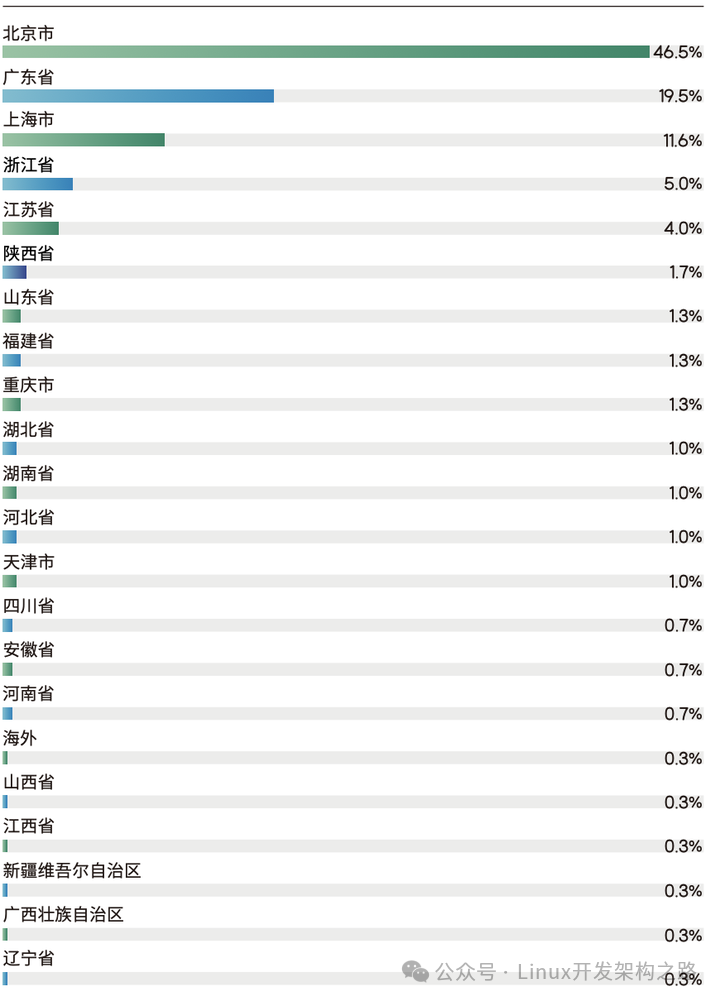

Geographical Distribution: Talent is mainly concentrated in first-tier cities such as Beijing, Shanghai, and Guangzhou, primarily benefiting from the superior industrial environment and technical resources in these areas.

-

Educational Background: A majority hold a bachelor’s degree or higher, indicating a strong demand for high-quality talent in the industry.

-

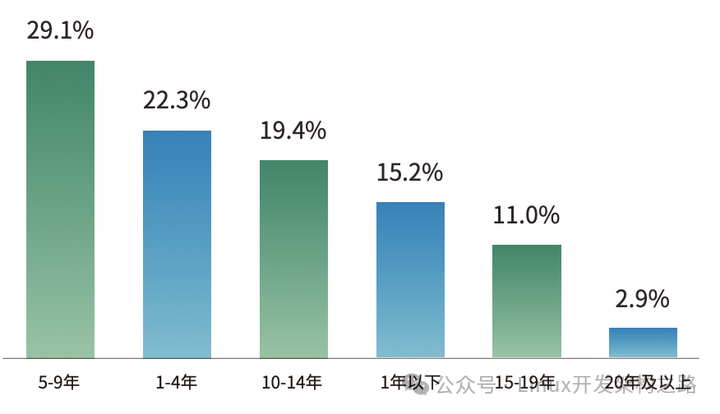

Work Experience: Over 60% of practitioners have more than 5 years of work experience, with those having 5 to 9 years of experience being the majority, showing the industry’s emphasis on professional accumulation.

-

Development Tools Usage:

-

Programming Languages: C++ and C are mainstream, characterized by high-performance computing capabilities and proximity to hardware.

-

Technical Tools: FFmpeg is the preferred framework in the audio and video field, highly regarded for its powerful multimedia processing capabilities.

1.2 Company Size and Position Distribution

- Company Size: Most practitioners work in large enterprises with over a thousand employees, suggesting that the industry is dominated by leading companies.
- Position Distribution: Senior engineers are the main force, alongside technical leads and executives, reflecting the industry’s demand for technical leadership.

2. Employment and Recruitment Status

2.1 Employment Stability

- The overall employment market is stable: most technical personnel did not experience problems with a steady income over the past 24 months, although there were individual cases of job changes.

2.2 Salary Levels

-

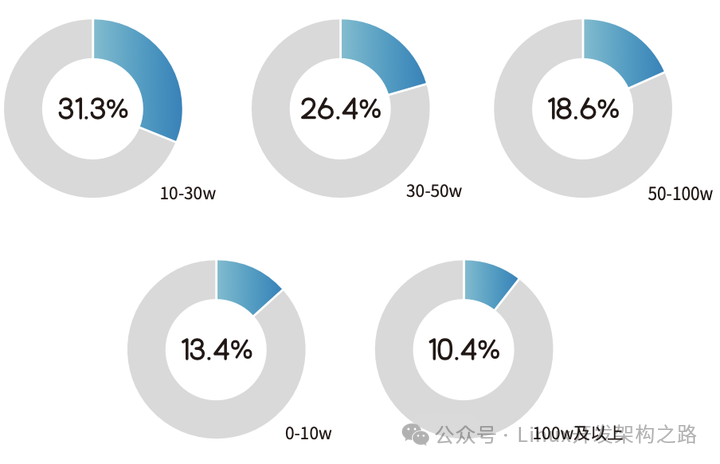

Most technicians earn over 300,000, and those in important company positions can earn over a million.

2.3 Recruitment Trends

- The required knowledge is highly complex, and employers increasingly expect well-rounded abilities.
- Talent with expertise in both AI and audio/video technology is scarce.

3. Analysis of the Audio and Video Industry Chain

3.1 Industry Chain Analysis

The ecosystem of the audio and video industry is mainly divided into three parts:

– Upstream: Basic Resource Providers

This tier includes software and hardware providers, audio and video codec and streaming technology providers, cloud computing service providers, and network transmission service providers. These companies supply the support and infrastructure the audio and video industry relies on.

– Midstream: Audio and Video Solution Providers

This tier covers SaaS (Software as a Service) and PaaS (Platform as a Service) offerings, which provide software platforms, application services, and tools for audio and video technology, helping developers quickly build and scale audio and video applications.

– Downstream: Application Scenarios

The application scenarios for audio and video technology are very broad, including social entertainment, online education, finance, healthcare, government and enterprise services, online retail, and corporate office.

3.2 Diagram of Leading Enterprises in the Industry Chain

4. Audio and Video Projects

Salaries in the audio and video market are currently high, yet there is still a large talent gap. The audio and video knowledge system is complex and the learning cost is high; moreover, little information about audio and video development is available online, and much of it is inaccurate. Beginners therefore run into difficulties easily, and many struggle to keep going. Fresh graduates who lack project experience find it especially hard to complete an audio and video project independently in a short time, let alone secure a job offer.


Complete Learning Path for Audio and Video Development

1. Basics of Audio and Video

1.1 Basic Knowledge of Audio

01. How to Capture Sound – Principles of Analog-to-Digital Conversion
02. Why High-Quality Audio Uses a Sampling Rate of at Least 44.1 kHz
03. What Is PCM
04. How Many Bits Represent a Sample
05. Are Sample Values Represented as Integers or Floating-Point Numbers?
06. The Relationship Between Volume and Sample Values
07. How Many Samples Make Up One Frame of Data
08. How Left- and Right-Channel Samples Are Arranged
09. What Is PCM (Pulse Code Modulation)
10. Principles of Audio Encoding
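
To make several of the audio topics above concrete (PCM bit rate, frame size, interleaved channel layout, and volume as sample amplitude), here is a minimal C sketch. It assumes 16-bit signed, interleaved (packed) PCM; the helper names are illustrative, and 1024 samples per frame is used only because it is the AAC-LC frame size. As background, 44.1 kHz suffices for high-quality audio because, by the Nyquist theorem, it captures frequencies up to 22.05 kHz, just above the roughly 20 kHz limit of human hearing.

```c
#include <stddef.h>
#include <stdint.h>

/* Bit rate of uncompressed PCM: sample_rate * bits_per_sample * channels. */
static uint64_t pcm_bit_rate(uint32_t sample_rate, uint32_t bits_per_sample,
                             uint32_t channels) {
    return (uint64_t)sample_rate * bits_per_sample * channels;
}

/* Bytes in one frame of interleaved PCM holding samples_per_frame samples
 * per channel. */
static size_t pcm_frame_bytes(uint32_t samples_per_frame, uint32_t bits_per_sample,
                              uint32_t channels) {
    return (size_t)samples_per_frame * (bits_per_sample / 8) * channels;
}

/* Duration of one frame in milliseconds. */
static double frame_duration_ms(uint32_t samples_per_frame, uint32_t sample_rate) {
    return 1000.0 * samples_per_frame / sample_rate;
}

/* Interleaved (packed) stereo stores L0 R0 L1 R1 ...; this returns the
 * left-channel sample with index i. */
static int16_t left_sample(const int16_t *interleaved, size_t i) {
    return interleaved[2 * i];
}

/* Volume is sample amplitude: scale by a linear gain, then clip to the
 * int16 range so loud samples saturate instead of wrapping around. */
static int16_t apply_gain(int16_t s, double gain) {
    double v = s * gain;
    if (v > 32767.0) v = 32767.0;
    if (v < -32768.0) v = -32768.0;
    return (int16_t)v;
}
```

For example, CD-quality audio (44.1 kHz, 16-bit, stereo) runs at 1,411,200 bit/s, and one 1024-sample frame lasts about 23.2 ms and occupies 4096 bytes.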

1.2 Basic Knowledge of Video

01. RGB Color Principles
02. Why the YUV Format Is Needed
03. What Is a Pixel
04. Resolution, Frame Rate, and Bit Rate
05. Differences Between YUV Data Storage Formats
06. YUV Memory Alignment Issues
07. Why the Screen Turns Green
08. Principles of H264 Encoding
09. Relationships Between H264 I, P, and B Frames
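
The video basics above can be illustrated with a small C sketch covering YUV420P frame size, row padding (stride) as the source of memory alignment issues, and a common reason for green screens. It assumes planar YUV420P and full-range BT.601 coefficients; real streams are often limited-range BT.601 or BT.709, so treat the conversion as illustrative rather than production-grade, and the function names as hypothetical. A zero-filled YUV buffer (Y = U = V = 0) converts to roughly RGB (0, 135, 0), which is why missing or uninitialized frame data typically shows up as green rather than black.

```c
#include <stddef.h>
#include <stdint.h>

/* Size in bytes of one YUV420P (I420) frame: a full-resolution Y plane plus
 * U and V planes subsampled 2x horizontally and vertically. */
static size_t yuv420p_frame_size(int width, int height) {
    size_t luma = (size_t)width * height;
    size_t chroma = (size_t)((width + 1) / 2) * ((height + 1) / 2);
    return luma + 2 * chroma;
}

/* Round a row width up to a multiple of align (a power of two), the way
 * decoders often pad each row; the padded width is the stride/linesize. */
static int aligned_stride(int width, int align) {
    return (width + align - 1) & ~(align - 1);
}

static uint8_t clamp_u8(int v) {
    return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
}

/* Full-range BT.601 YUV -> RGB, with coefficients scaled by 1000 for
 * integer math. */
static void yuv_to_rgb(uint8_t y, uint8_t u, uint8_t v, uint8_t rgb[3]) {
    int d = u - 128, e = v - 128;
    rgb[0] = clamp_u8(y + (1402 * e) / 1000);
    rgb[1] = clamp_u8(y - (344 * d + 714 * e) / 1000);
    rgb[2] = clamp_u8(y + (1772 * d) / 1000);
}
```

A 1920x1080 YUV420P frame therefore takes 3,110,400 bytes. A width of 1918 padded to a 32-byte boundary gives a stride of 1920, so code that copies rows should step by the stride rather than the width.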

1.3 Basic Knowledge of Demultiplexing

01. What Is Demultiplexing (e.g., the MP4 Format)
02. Why Different Multiplexing Formats (MP4/FLV/TS) Are Needed
03. Common Multiplexing Formats: MP4/FLV/TS
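
MP4 (the ISO Base Media File Format) is built from nested boxes, each starting with a 32-bit big-endian size and a four-character type such as `ftyp`, `moov`, or `mdat`; a demultiplexer walks these boxes to find track metadata and media data. The C sketch below reads only top-level box headers. It is a minimal illustration rather than a full parser: it does not descend into child boxes or handle `uuid` extended types, and the names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* size == 1 means a 64-bit "largesize" follows the type;
 * size == 0 means the box extends to the end of the file. */
typedef struct {
    uint64_t size;      /* total box size, header included */
    char type[5];       /* four-character code plus terminator */
    size_t header_len;  /* 8, or 16 when largesize is used */
} BoxHeader;

static uint32_t be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | p[3];
}

/* Returns 0 on success, -1 if the buffer is too short. */
static int read_box_header(const uint8_t *buf, size_t len, BoxHeader *out) {
    if (len < 8) return -1;
    out->size = be32(buf);
    memcpy(out->type, buf + 4, 4);
    out->type[4] = '\0';
    out->header_len = 8;
    if (out->size == 1) {
        if (len < 16) return -1;
        out->size = ((uint64_t)be32(buf + 8) << 32) | be32(buf + 12);
        out->header_len = 16;
    }
    return 0;
}

/* Counts the top-level boxes in buf, stopping at a malformed size. */
static int count_top_level_boxes(const uint8_t *buf, size_t len) {
    size_t off = 0;
    int n = 0;
    BoxHeader h;
    while (off < len && read_box_header(buf + off, len - off, &h) == 0) {
        if (h.size == 0) { n++; break; }  /* last box runs to end of file */
        if (h.size < h.header_len || h.size > len - off) break;  /* malformed */
        n++;
        off += (size_t)h.size;
    }
    return n;
}
```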

1.4 Setting Up the FFmpeg Development Environment

01. Three Major Platforms: Windows, Ubuntu, and Mac
02. Qt Installation
03. FFmpeg Command-Line Environment
04. FFmpeg API Environment
05. FFmpeg Compilation
06. VS2019 Installation (Windows Platform)

1.5 Common Tools for Audio and Video Development

01. MediaInfo, for analyzing video files
02. VLC Player, for playback testing
03. EasyICE, for analyzing TS streams
04. flvAnalyser, for analyzing FLV files
05. MP4Box, for analyzing MP4 files
06. Audacity, for analyzing PCM audio
07. Elecard StreamEye, for analyzing H264 streams
08. Hikvision YUVPlayer, for analyzing YUV data

2. Practical FFmpeg

2.1 FFmpeg Commands

01. Extracting Audio PCM/AAC Files
02. Extracting Video YUV/H264 Files
03. Demultiplexing and Multiplexing
04. Audio and Video Recording
05. Video Cropping and Merging
06. Image/Video Conversion
07. Live Streaming Push and Pull
08. Watermark/Picture-in-Picture/Nine-Grid Filters

Note: Mastering FFmpeg commands serves two purposes:

1. Quickly grasping what FFmpeg can do;
2. Deepening your understanding of audio and video.

2.2 Practical SDL Cross-Platform Multimedia Development Library

01. Setting Up the SDL Environment
02. SDL Event Handling
03. SDL Thread Handling
04. Video YUV Frame Rendering
05. Audio PCM Sound Output

Note: SDL runs on Windows, Ubuntu, and Mac, and is mainly used for video display and sound output in the later projects.

2.3 In-Depth FFmpeg Framework

01. FFmpeg Framework
02. FFmpeg Memory Reference Counting Model
03. Demultiplexing-Related Structures (AVFormatXXX, etc.)
04. Codec-Related Structures (AVCodecXXX, etc.)
05. Compressed Data: AVPacket
06. Uncompressed Data: AVFrame
07. Object-Oriented Thinking in FFmpeg
08. Zero-Copy Handling of Packet/Frame Data

Note: The goal is to familiarize oneself with commonly used FFmpeg structures and function interfaces.

2.4 FFmpeg Audio and Video Demultiplexing + Decoding

01. Demultiplexing Process
02. Audio Decoding Process
03. Video Decoding Process
04. FLV Container Format Analysis
05. MP4 Container Format Analysis
06. Differences Between Seeking in FLV and MP4
07. Why the FLV Format Can Be Used for Live Streaming
08. Why MP4 Cannot Be Used for Live Streaming
09. Can MP4 Be Used for On-Demand Streaming?
10. AAC ADTS Analysis
11. H264 NALU Analysis
12. AVIO Memory Input Mode
13. Audio Resampling Practice
14. Does the Duration of Data Remain the Same After Resampling?
15. How Is PTS Represented After Resampling?
16. YUV Memory Alignment Issues in Video Decoding
17. PCM Layout Format Issues in Audio Decoding
18. Hardware Decoding: dxva2/nvdec/cuvid/qsv
19. Transferring Data from GPU to CPU Memory
20. H265 Decoding

Note: The FFmpeg API is learned along the full pipeline: demultiplexing -> decoding -> encoding -> multiplexing into a new video file.
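
For the ADTS analysis topic above, the 7-byte ADTS header that precedes each AAC frame can be parsed with a few bit operations. This is a minimal C sketch following the ADTS layout (12-bit syncword, profile, sampling-frequency index, channel configuration, 13-bit frame length); the struct and function names are hypothetical, and the optional 2-byte CRC that follows when `protection_absent` is 0 is not checked.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    int profile;       /* audio object type minus 1 (1 = AAC LC) */
    int sample_rate;   /* looked up from sampling_frequency_index */
    int channels;      /* channel_configuration */
    int frame_length;  /* header + raw AAC payload, in bytes */
    int has_crc;       /* 1 when protection_absent == 0 */
} AdtsHeader;

static const int kAdtsRates[13] = {96000, 88200, 64000, 48000, 44100, 32000,
                                   24000, 22050, 16000, 12000, 11025, 8000, 7350};

/* Parses the 7-byte ADTS header. Returns 0 on success, -1 otherwise. */
static int parse_adts(const uint8_t *p, size_t len, AdtsHeader *h) {
    if (len < 7) return -1;
    if (p[0] != 0xFF || (p[1] & 0xF0) != 0xF0) return -1;  /* syncword 0xFFF */
    int sf_index = (p[2] >> 2) & 0x0F;
    if (sf_index > 12) return -1;
    h->has_crc = !(p[1] & 0x01);
    h->profile = p[2] >> 6;
    h->sample_rate = kAdtsRates[sf_index];
    /* channel_configuration: 1 bit at the end of byte 2, 2 bits in byte 3 */
    h->channels = ((p[2] & 0x01) << 2) | (p[3] >> 6);
    /* frame_length: 2 bits in byte 3, 8 bits in byte 4, 3 bits in byte 5 */
    h->frame_length = ((p[3] & 0x03) << 11) | (p[4] << 3) | (p[5] >> 5);
    return 0;
}
```

For example, the header `FF F1 50 80 2E 7F FC` decodes as AAC LC (profile field 1), 44.1 kHz, stereo, with a 371-byte frame that includes the 7-byte header.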

2.5 FFmpeg Audio and Video Encoding + Multiplexing to Synthesize Video

01. AAC Audio Encoding
02. H264 Video Encoding
03. Encoding PCM + YUV and Multiplexing into MP4/FLV
04. Principles of H264 Encoding
05. Differences Between IDR Frames and I Frames
06. Dynamically Modifying the Encoding Bit Rate
07. Reference Values for GOP Intervals
08. Audio/Video Synchronization Issues in Multiplexing
09. Timebase Issues in Encoding and Multiplexing
10. Synthesized MP4 Files Not Playing on iOS
11. How Is PTS Represented After Resampling?
12. YUV Memory Alignment Issues in Video Encoding
13. Hardware Encoding: nvenc/qsv, etc.
14. Principles of H265 Encoding
15. Transcoding Between H264 and H265
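
Several items above (timebase issues in encoding and multiplexing, and how PTS is represented after resampling) come down to converting a timestamp between time bases. FFmpeg does this with `av_rescale_q()`; the C sketch below reimplements the idea with plain 64-bit integers to show the arithmetic. It is a simplified stand-in that assumes the intermediate product does not overflow (which FFmpeg's version guards against), and `Rational`, `rescale_ts`, and `pts_after_resample` are hypothetical names.

```c
#include <stdint.h>

/* Same idea as FFmpeg's AVRational: a time base of num/den seconds. */
typedef struct { int num; int den; } Rational;

/* Converts ts from time base a to time base b, rounding to nearest
 * (halfway cases away from zero). Assumes no 64-bit overflow. */
static int64_t rescale_ts(int64_t ts, Rational a, Rational b) {
    int64_t num = (int64_t)a.num * b.den;
    int64_t den = (int64_t)a.den * b.num;
    int64_t prod = ts * num;
    return prod >= 0 ? (prod + den / 2) / den : -((-prod + den / 2) / den);
}

/* After resampling, audio PTS is commonly counted in samples at the new
 * rate, i.e. in a time base of 1/out_rate. */
static int64_t pts_after_resample(int64_t pts_in_samples, int in_rate, int out_rate) {
    Rational in_tb = {1, in_rate};
    Rational out_tb = {1, out_rate};
    return rescale_ts(pts_in_samples, in_tb, out_tb);
}
```

For example, 90000 ticks in the MPEG-TS time base of 1/90000 become 1000 in FLV's millisecond time base. Likewise, 1024 samples at 44.1 kHz correspond to about 1114.56 samples at 48 kHz (rounded to 1115 here): the sample count changes while the duration stays the same.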

2.6 FFmpeg Filters

01. FFmpeg Filter Chain Framework
02. Audio Filter Framework
03. Video Filter Framework
04. Mixing Multiple Audio Inputs with amix
05. Video Watermarking
06. Video Cropping and Flipping
07. Adding a Logo to Video

Note: Filters are widely used in video editing.
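
Mixing audio, as in the amix item above (and the system-sound-plus-microphone mixing in the OBS project), amounts to summing time-aligned samples. The C sketch below averages 16-bit inputs so the result cannot clip. It is an illustrative simplification, not amix’s implementation: FFmpeg’s amix works on float samples, normalizes inputs by default, and supports per-input weights. It assumes all inputs share the same sample rate, channel layout, and length, and `mix_s16` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Mixes n_inputs buffers of 16-bit PCM into out, weighting each input by
 * 1/n_inputs so the average always fits in the int16 range. */
static void mix_s16(const int16_t *const *inputs, size_t n_inputs,
                    size_t n_samples, int16_t *out) {
    for (size_t i = 0; i < n_samples; i++) {
        if (n_inputs == 0) { out[i] = 0; continue; }  /* nothing to mix */
        int32_t acc = 0;
        for (size_t k = 0; k < n_inputs; k++)
            acc += inputs[k][i];
        out[i] = (int16_t)(acc / (int32_t)n_inputs);
    }
}
```

Plain summation without the division would be louder but could overflow; real mixers usually add a limiter or soft clipping instead of dividing by the input count.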

2.7 ffplay Player (Open Source Project Practice)

01. Understanding the Significance of ffplay.c
02. Analyzing the ffplay Framework
03. Demultiplexing Thread
04. Audio Decoding Thread
05. Video Decoding Thread
06. Sound Output Callback
07. Frame Rendering Time Interval
08. Audio Resampling
09. Frame Size and Format Conversion
10. Differences Between Syncing to the Audio, Video, and External Clocks
11. Audio Resampling Compensation When Syncing to Video
12. The Essence of Muting and Volume Adjustment
13. Audio and Video Packet Queue Size Limits
14. Thread Safety of the Audio and Video Packet Queues
15. Audio and Video Frame Queue Size Limits
16. Thread Safety of the Audio and Video Frame Queues
17. How Pause and Play Are Implemented
18. Frame Freezing Caused by Seeking
19. Handling Data Queues and Synchronization Clocks When Seeking
20. How to Implement Frame-by-Frame Playback
21. Key Points of the Player Exit Process

Note: ffplay.c is the source code of the ffplay command, and the Bilibili player was also developed on top of this source code.
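
Items 13 to 16 in the ffplay list concern bounded, thread-safe queues shared between the demultiplexing thread and the decoding threads. The C sketch below shows the general pattern with a pthread mutex and two condition variables, plus an abort flag for exiting and a flush for seeking. It is a simplified illustration, not ffplay’s code: ffplay stores real `AVPacket`s and limits queue size from the read thread instead of blocking inside the put call. `Packet`, the `pq_*` functions, and the capacity of 64 are hypothetical choices.

```c
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

#define PQ_CAPACITY 64

typedef struct { int64_t pts; int size; } Packet;  /* stand-in for AVPacket */

typedef struct {
    Packet items[PQ_CAPACITY];     /* ring buffer */
    int head, count;
    int64_t total_bytes;           /* lets the reader thread cap memory use */
    int abort_request;             /* set on exit to wake blocked threads */
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} PacketQueue;

static void pq_init(PacketQueue *q) {
    q->head = q->count = 0;
    q->total_bytes = 0;
    q->abort_request = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_empty, NULL);
    pthread_cond_init(&q->not_full, NULL);
}

/* Blocks while the queue is full. Returns 0 on success, -1 if aborted. */
static int pq_put(PacketQueue *q, Packet pkt) {
    pthread_mutex_lock(&q->lock);
    while (q->count == PQ_CAPACITY && !q->abort_request)
        pthread_cond_wait(&q->not_full, &q->lock);
    if (q->abort_request) { pthread_mutex_unlock(&q->lock); return -1; }
    q->items[(q->head + q->count) % PQ_CAPACITY] = pkt;
    q->count++;
    q->total_bytes += pkt.size;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
    return 0;
}

/* Blocks while the queue is empty. Returns 0 on success, -1 if aborted. */
static int pq_get(PacketQueue *q, Packet *out) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0 && !q->abort_request)
        pthread_cond_wait(&q->not_empty, &q->lock);
    if (q->abort_request) { pthread_mutex_unlock(&q->lock); return -1; }
    *out = q->items[q->head];
    q->head = (q->head + 1) % PQ_CAPACITY;
    q->count--;
    q->total_bytes -= out->size;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return 0;
}

/* Drops every queued packet, e.g. after a seek. */
static void pq_flush(PacketQueue *q) {
    pthread_mutex_lock(&q->lock);
    q->head = q->count = 0;
    q->total_bytes = 0;
    pthread_cond_broadcast(&q->not_full);
    pthread_mutex_unlock(&q->lock);
}

/* Wakes all waiters so the demuxer and decoder threads can exit. */
static void pq_abort(PacketQueue *q) {
    pthread_mutex_lock(&q->lock);
    q->abort_request = 1;
    pthread_cond_broadcast(&q->not_empty);
    pthread_cond_broadcast(&q->not_full);
    pthread_mutex_unlock(&q->lock);
}
```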

2.8 FFmpeg Multimedia Video Processing Tool (Open Source Project Practice)

01. Understanding the Significance of ffmpeg.c
02. Analyzing the ffmpeg Framework
03. Audio and Video Encoding
04. Container Format Conversion
05. Extracting Audio
06. Extracting Video
07. Logo Overlay
08. Audio and Video File Concatenation
09. Filter Mechanism
10. Command-Line Parsing Process
11. MP4-to-FLV Logic Without Re-encoding
12. MP4-to-FLV Logic with Re-encoding
13. MP4-to-FLV Timebase
14. MP4-to-FLV Scale

Note: ffmpeg.c is the source code of the ffmpeg command. Mastering it lets you trace how a command line is executed, which helps when you can run a command but don’t know how to implement the same thing in code.

2.9 FFmpeg + Qt Player (Self-Developed Project Practice)

01. Player Overview
02. Analyzing the Player Framework
03. Player Module Division
04. Demultiplexing Module
05. Audio and Video Decoding
06. Player Control
07. Audio and Video Synchronization
08. DXVA Hardware Decoding
09. Audio Spectrum Display
10. Audio Equalizer
11. Screen Rotation and Flipping
12. Adjusting Screen Brightness and Saturation
13. Switching Between 4:3 and 16:9 Aspect Ratios
14. Stream Information Analysis

Note: This project is quite challenging:

1. You implement most of the functions yourself;
2. The instructor provides technical support for a few of them.
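
Audio and video synchronization, which appears in both the ffplay and Qt player projects, is commonly done by treating the audio clock as the master and stretching or shrinking how long each video frame stays on screen. The C sketch below restates the decision logic of ffplay’s `compute_target_delay()` with the same 0.04 s, 0.1 s, and 0.1 s thresholds. It is a simplified version for illustration: it takes the clock difference and `max_frame_duration` as plain parameters instead of reading them from the player state.

```c
/* Thresholds in seconds, matching ffplay.c's AV_SYNC_THRESHOLD_MIN,
 * AV_SYNC_THRESHOLD_MAX and AV_SYNC_FRAMEDUP_THRESHOLD. */
#define SYNC_THRESHOLD_MIN      0.04
#define SYNC_THRESHOLD_MAX      0.1
#define SYNC_FRAMEDUP_THRESHOLD 0.1

/* delay: nominal display time of the previous video frame, in seconds.
 * diff:  video clock minus master (audio) clock, in seconds.
 * max_frame_duration: larger differences are treated as a timestamp jump
 *                     and ignored.
 * Returns how long the previous frame should stay on screen. */
static double compute_target_delay(double delay, double diff,
                                   double max_frame_duration) {
    /* The threshold follows the frame duration, clamped to [MIN, MAX]. */
    double sync_threshold = delay;
    if (sync_threshold > SYNC_THRESHOLD_MAX) sync_threshold = SYNC_THRESHOLD_MAX;
    if (sync_threshold < SYNC_THRESHOLD_MIN) sync_threshold = SYNC_THRESHOLD_MIN;

    double abs_diff = diff < 0 ? -diff : diff;
    if (abs_diff < max_frame_duration) {
        if (diff <= -sync_threshold) {
            /* Video lags audio: shorten the wait, but never below zero. */
            delay = delay + diff > 0 ? delay + diff : 0.0;
        } else if (diff >= sync_threshold && delay > SYNC_FRAMEDUP_THRESHOLD) {
            /* Video leads and frames are long: wait out the whole difference. */
            delay = delay + diff;
        } else if (diff >= sync_threshold) {
            /* Video leads: repeat the previous frame by doubling its delay. */
            delay = 2 * delay;
        }
    }
    return delay;
}
```

At 25 fps (40 ms per frame), video that is 100 ms behind the audio is shown immediately (delay 0), while video that is 50 ms ahead keeps the previous frame on screen for 80 ms.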

2.10 OBS Secondary Development – Recording and Streaming Project (Self-Developed Project Practice)

01. Compiling OBS with VS2019 + Qt 5.15.2
02. Audio Configuration and Initialization Analysis
03. Audio Thread Module: Capture and Encoding Analysis
04. Video Configuration and Initialization Analysis
05. Video Thread Module: Capture and Encoding Analysis
06. OBS Initialization Process Analysis
07. Recording Process Analysis
08. Microphone Capture Analysis
09. Desktop Capture Analysis
10. x264 Encoding Analysis
11. Mixing System Sound and Microphone Audio
12. Streaming Module Analysis

Note: This project is quite challenging:

1. It is aimed at students who want to dig deeply into streaming projects;
2. Compilation videos and basic usage videos are provided.

3. Streaming Media Client

3.1 RTMP Push and Pull Streaming Project Practice (Self-Developed Project Practice)

01. RTMP Protocol Analysis
02. Wireshark Packet Capture Analysis
03. Encapsulating H264 in RTMP
04. Encapsulating AAC in RTMP
05. RTMP Pull Streaming Practice
06. Parsing H264 from RTMP
07. Parsing AAC from RTMP
08. RTMP Push Streaming Practice
09. Can It Play Without Metadata?
10. Does RTMP Pushing Cause Latency?
11. How to Dynamically Adjust the Bit Rate for RTMP Pushing
12. How to Dynamically Adjust the Frame Rate for RTMP Pushing
13. Does RTMP Pulling Cause Latency?
14. How to Detect RTMP Pulling Latency
15. How to Solve RTMP Playback Latency
16. Can ffplay and VLC Be Used to Test Playback Latency?
17. Playback Speed Adjustment Strategy for RTMP Pulling
18. Audio Noise Reduction
19. Local Saving

Note: RTMP Push and Pull Streaming is a must-learn project for streaming media.
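
For the H264-in-RTMP items above, the first video message a publisher sends is normally the AVC sequence header: a 5-byte FLV video tag header followed by an AVCDecoderConfigurationRecord built from the SPS and PPS. A player generally cannot initialize its H264 decoder without it, whereas the `onMetaData` script data is often treated as optional, which bears on the question of playing without metadata. The C sketch below assumes exactly one SPS and one PPS, given without start codes; the function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Writes the body of an RTMP/FLV video tag carrying the AVC sequence header.
 * Returns the number of bytes written, or 0 if the input or buffer is too small. */
static size_t build_avc_sequence_header(const uint8_t *sps, size_t sps_len,
                                        const uint8_t *pps, size_t pps_len,
                                        uint8_t *out, size_t out_cap) {
    size_t need = 16 + sps_len + pps_len;
    if (sps_len < 4 || out_cap < need) return 0;
    size_t i = 0;
    out[i++] = 0x17;  /* frame type 1 (key frame) << 4 | codec id 7 (AVC) */
    out[i++] = 0x00;  /* AVCPacketType 0 = sequence header */
    out[i++] = 0x00; out[i++] = 0x00; out[i++] = 0x00;  /* composition time 0 */
    out[i++] = 0x01;  /* configurationVersion */
    out[i++] = sps[1];  /* AVCProfileIndication */
    out[i++] = sps[2];  /* profile_compatibility */
    out[i++] = sps[3];  /* AVCLevelIndication */
    out[i++] = 0xFF;  /* reserved bits + lengthSizeMinusOne = 3 (4-byte NAL lengths) */
    out[i++] = 0xE1;  /* reserved bits + numOfSequenceParameterSets = 1 */
    out[i++] = (uint8_t)(sps_len >> 8);
    out[i++] = (uint8_t)sps_len;
    memcpy(out + i, sps, sps_len);
    i += sps_len;
    out[i++] = 0x01;  /* numOfPictureParameterSets */
    out[i++] = (uint8_t)(pps_len >> 8);
    out[i++] = (uint8_t)pps_len;
    memcpy(out + i, pps, pps_len);
    i += pps_len;
    return i;
}
```

Subsequent video messages then carry frames with AVCPacketType 1 and 4-byte length-prefixed NAL units, matching the `lengthSizeMinusOne` declared here.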

3.2 HLS Pull Streaming Analysis

01. HLS Protocol Analysis
02. HTTP Protocol Analysis
03. TS Format Analysis
04. m3u8 File Parsing
05. Wireshark Packet Capture Analysis
06. HLS Pull Streaming Practice
07. FFmpeg HLS Source Code Analysis
08. HLS Multi-Bitrate Mechanism
09. How to Solve High Latency Issues in HLS

Note: Understanding the HLS Pull Streaming Mechanism helps to solve high latency issues in HLS playback.
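
An HLS media playlist is a plain-text m3u8 file listing segments, each introduced by an `#EXTINF:<duration>,` tag. The minimal C sketch below sums those durations and reads `#EXT-X-TARGETDURATION`; it ignores master playlists, byte ranges, and discontinuities, and the function names are hypothetical. The target duration is one reason HLS latency is high: RFC 8216 advises clients not to start less than three target durations from the end of a live playlist, so 6-second segments imply a delay on the order of 18 seconds or more.

```c
#include <stdlib.h>
#include <string.h>

/* Sums the #EXTINF durations of an in-memory media playlist and returns
 * the number of segments found. */
static int sum_extinf(const char *playlist, double *total_seconds) {
    int segments = 0;
    *total_seconds = 0.0;
    const char *line = playlist;
    while (line && *line) {
        if (strncmp(line, "#EXTINF:", 8) == 0) {
            /* strtod stops at the comma that precedes the optional title */
            *total_seconds += strtod(line + 8, NULL);
            segments++;
        }
        line = strchr(line, '\n');
        if (line) line++;  /* move to the start of the next line */
    }
    return segments;
}

/* Returns the #EXT-X-TARGETDURATION value in seconds, or -1 if absent. */
static int target_duration(const char *playlist) {
    const char *p = strstr(playlist, "#EXT-X-TARGETDURATION:");
    return p ? atoi(p + 22) : -1;  /* 22 = length of the tag and colon */
}
```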

3.3 RTSP Streaming Media Practice (Self-Developed Project Practice)

01. RTSP Protocol Analysis
02. RTP Protocol Analysis
03. H264 RTP Packetization
04. H264 RTP Parsing
05. AAC RTP Packetization
06. AAC RTP Parsing
07. RTCP Protocol Analysis
08. Setting Up an RTSP Streaming Media Server
09. RTSP Push Streaming Practice
10. RTSP Pull Streaming Practice
11. Wireshark Packet Capture Analysis
12. The Role of RTP Header Sequence Numbers
13. Differences Between RTCP NTP Timestamps and RTP Timestamps
14. RTSP Interaction Process
15. Possible Causes of Screen Artifacts
16. How SPS/PPS Are Sent
17. Describing Audio and Video Information in SDP

Note: Protocols used in RTSP, such as RTP, RTCP, and SDP, are also used in WebRTC.
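
The RTP items above (H264 RTP packetization and the role of sequence numbers) rest on two small structures: the 12-byte RTP fixed header from RFC 3550 and, for H264 NAL units too large for one packet, FU-A fragmentation from RFC 6184. The C sketch below writes both; the function names are hypothetical, it assumes no CSRCs or header extensions, and it leaves the dynamic payload type (announced in SDP) and the 90 kHz video timestamp to the caller.

```c
#include <stddef.h>
#include <stdint.h>

/* Writes a 12-byte RTP fixed header: V=2, no padding, no extension, no CSRCs. */
static void write_rtp_header(uint8_t *p, int marker, int payload_type,
                             uint16_t seq, uint32_t timestamp, uint32_t ssrc) {
    p[0] = 0x80;  /* V=2, P=0, X=0, CC=0 */
    p[1] = (uint8_t)((marker ? 0x80 : 0) | (payload_type & 0x7F));
    p[2] = (uint8_t)(seq >> 8);
    p[3] = (uint8_t)seq;
    p[4] = (uint8_t)(timestamp >> 24);
    p[5] = (uint8_t)(timestamp >> 16);
    p[6] = (uint8_t)(timestamp >> 8);
    p[7] = (uint8_t)timestamp;
    p[8] = (uint8_t)(ssrc >> 24);
    p[9] = (uint8_t)(ssrc >> 16);
    p[10] = (uint8_t)(ssrc >> 8);
    p[11] = (uint8_t)ssrc;
}

/* Writes the 2-byte FU-A prefix (FU indicator + FU header) for one fragment
 * of a NAL unit whose original 1-byte header is nal_header. */
static void write_fu_a_prefix(uint8_t *p, uint8_t nal_header, int start, int end) {
    p[0] = (uint8_t)((nal_header & 0xE0) | 28);  /* F + NRI kept, type 28 = FU-A */
    p[1] = (uint8_t)((start ? 0x80 : 0) | (end ? 0x40 : 0) | (nal_header & 0x1F));
}

/* Number of FU-A packets for a NAL of nal_len bytes (header included) when
 * each RTP payload holds at most max_payload bytes (assumed > 2). */
static size_t fu_a_packet_count(size_t nal_len, size_t max_payload) {
    size_t body = nal_len - 1;            /* the NAL header is not repeated */
    size_t per_packet = max_payload - 2;  /* room after the 2-byte prefix */
    return (body + per_packet - 1) / per_packet;
}
```

For an IDR NAL (header byte `0x65`), the first fragment starts with `7C 85` and the last with `7C 45`; the RTP marker bit is set only on the packet that ends the access unit.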

4. Streaming Media Server

4.1 SRS 3.0 Source Code Analysis

01. Overall Framework Analysis
02. RTMP Push Streaming Analysis
03. RTMP Pull Streaming Analysis
04. HLS Pull Streaming Analysis
05. HTTP-FLV Pull Streaming Analysis
06. FFmpeg Transcoding Analysis
07. Instant First-Screen Display Technology Analysis
08. Forward Cluster Source Code Analysis
09. Edge Cluster Source Code Analysis
10. Load Balancing Deployment Methods
11. Relationship Between Connections and Coroutines
12. How to Quickly Master the SRS Source Code
13. Does the Streaming Media Server Cause Latency?
14. How to Reduce Latency in Streaming Media Servers
15. How to Obtain Push Information from the Streaming Media Server
16. How to Obtain Pull Information from the Streaming Media Server
17. Can Instant First-Screen Display Reduce Latency?
18. Latency Analysis: Push Streaming -> Server Forwarding -> Pull Streaming

Note: We continuously update the SRS streaming media server, from 3.0 to 4.0 to 5.0.

4.2 ZLMediaKit Source Code Analysis

01. Overall Framework Analysis
02. Thread Module Division
03. RTSP Push Streaming Connection Handling
04. RTSP Pull Streaming Connection Handling
05. Data Forwarding Model
06. SDP Parsing
07. RTP H264 Parsing
08. RTP AAC Parsing

Note: The ZLMediaKit analysis focuses on RTSP; for other modules such as RTMP/HLS, refer to the SRS section.

5. WebRTC Project Practice

5.1 WebRTC Intermediate – One-to-One Audio and Video Calls (Self-Developed Project Practice)

01. Analysis of WebRTC Call Principles
02. Setting Up the WebRTC Development Environment
03. Best Way to Set Up Coturn
04. How to Capture Audio and Video Data
05. One-to-One Call Sequence Analysis
06. Signaling Server Design
07. SDP Analysis
08. Candidate Type Analysis
09. Web One-to-One Call
10. Web-to-Android Call
11. Quick AppRTC Demonstration
12. How to Set Encoder Priority
13. How to Limit the Maximum Bit Rate
14. What Is the Essence of the Signaling Server?
15. Why Set the SDP Again After Obtaining It from the API?
16. Differences in SDP Between Web and Android
17. How Does A Know That B Exists Before Calling?

Note: Recommendations for learning WebRTC:

1. Start from the web side, where you can call the JS APIs directly;
2. Understand the WebRTC call process clearly before moving on to other platforms.

5.2 WebRTC Advanced – MESH Model Multi-Party Call (Self-Developed Project Practice)

01. Custom Camera Resolution
02. Bit Rate Limiting
03. Adjusting Encoder Order
04. Analysis of MESH Model Multi-Party Calls
05. Development of Multi-Party Call Signaling Server
06. Dynamically Allocating STUN/TURN Servers
07. Web Client Source Code
08. Android Client Source Code

5.3 WebRTC Advanced – Janus SFU Model Multi-Party Call (Self-Developed Project Practice)

01. Janus Framework Analysis
02. Janus Signaling Design
03. Implementing a Conference System Based on Janus
04. Janus Web Client Source Code Analysis
05. Janus Android Client Source Code Analysis
06. Janus Windows Client Source Code Analysis
07. Deployment Based on Full ICE
08. Deployment Based on Lite ICE
09. Differences Between Full ICE and Lite ICE
10. Publish-Subscribe Model

Note: Janus is a very famous SFU WebRTC server, and many companies have done secondary development based on this open-source project, such as Xueba Jun.

5.4 WebRTC Advanced Development – SRS 4.0/5.0 Source Code Analysis

01. RTMP-to-WebRTC Forwarding Logic
02. WebRTC-to-RTMP Forwarding Logic
03. WebRTC One-to-One Audio and Video Call
04. WebRTC Multi-Party Call
05. WebRTC SFU Model Analysis
06. SRTP Analysis
07. RTCP Analysis
08. SDP Analysis
09. NACK Analysis
10. STUN Analysis

Note: SRS is a famous streaming media server with a very active community.
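
NACK-based retransmission (item 09 above) starts with noticing gaps in RTP sequence numbers. Because the 16-bit sequence number wraps around after 65535, "newer than" has to be computed with modular arithmetic. The C sketch below shows that comparison and a gap scan; it is illustrative only and stops short of encoding the RTCP generic NACK message from RFC 4585, which packs lost packets as a packet ID plus a 16-bit bitmask. The function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* True if sequence number a is newer than b, allowing for 16-bit wraparound. */
static int seq_is_newer(uint16_t a, uint16_t b) {
    return a != b && (uint16_t)(a - b) < 0x8000;
}

/* Given the highest sequence number received so far and a newly arrived one,
 * writes the skipped sequence numbers (up to cap) into missing and returns
 * how many were found. These are the candidates to report in a NACK. */
static size_t find_missing(uint16_t highest, uint16_t arrived,
                           uint16_t *missing, size_t cap) {
    size_t n = 0;
    if (!seq_is_newer(arrived, highest)) return 0;  /* late or duplicate packet */
    for (uint16_t s = (uint16_t)(highest + 1); s != arrived && n < cap; s++)
        missing[n++] = s;
    return n;
}
```

For example, if the highest packet seen is 65534 and packet 2 arrives next, packets 65535, 0, and 1 are reported as missing.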

6. Android NDK Development

6.1 Basics of Android NDK Development

01. Summary of SO Library Adaptation
02. GDB Debugging Techniques
03. Organizing Projects with Makefile
04. Organizing Projects with CMake
05. Generating SO Libraries for Specific CPU Platforms
06. JNI Basics and Interface Generation
07. Building Java Objects in the JNI Native Layer
08. JNI Exception Handling

6.2 Compiling and Applying FFmpeg on Android

01. Compiling x264
02. Compiling x265
03. Compiling MP3
04. Compiling fdk-aac
05. Compiling FFmpeg
06. Using FFmpeg to Implement MP4 Format Conversion
07. Developing a Player Using FFmpeg

6.3 Android RTMP Push and Pull Streaming (Self-Developed Project Practice)

01. Implementing the RTMP Push Streaming Protocol
02. Implementing the RTMP Pull Streaming Protocol
03. Audio and Video Synchronization in RTMP Pull Streaming
04. MediaCodec Hardware Encoding
05. MediaCodec Hardware Decoding
06. Playing Audio Data with OpenSL ES
07. Displaying Video with OpenGL ES Shaders

6.4 Android Ijkplayer Source Code Analysis (Open Source Project Practice)

01. Compiling and Practicing with Ijkplayer
02. Project Framework Analysis
03. Playback State Transitions
04. Pull Streaming Analysis
05. Decoding Analysis
06. Audio Playback Process
07. Video Rendering Process
08. Playing Audio Data with OpenSL ES
09. MediaCodec Hardware Decoding
10. Displaying Video with OpenGL ES Shaders
11. How Playback Speed Adjustment Is Implemented
12. How Low-Latency Playback Is Implemented
13. Analysis of the Buffer Queue Design

7. iOS Audio and Video Development

7.1 Compiling FFmpeg 6.0 on Mac

01. Debugging FFmpeg with Xcode
02. Debugging FFmpeg with Qt for General FFmpeg Learning on the Mac Platform
03. Calling FFmpeg on iOS

7.2 iOS FFmpeg RTMP Push and Pull Streaming

01. AVFoundation Video Capture
02. Metal Video Rendering
03. Audio Unit Audio Capture
04. Audio Unit Audio Playback
05. FFmpeg Push Streaming
06. FFmpeg Pull Streaming
07. Live Streaming Latency and Solutions

7.3 VideoToolbox Hardware Encoding and Decoding

01. VideoToolbox Framework Workflow
02. Steps for Hardware Encoding and Decoding
03. CVPixelBuffer Parsing
04. How to Obtain SPS/PPS Information
05. How to Determine Whether a Frame Is a Key Frame
06. Encoding Parameter Optimization
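
For the SPS/PPS and key-frame items above: VideoToolbox works with H264 in AVCC form, where each NAL unit is prefixed by its length rather than by an Annex B start code. The C sketch below walks such a buffer and reports whether it contains an IDR slice (NAL type 5); SPS and PPS appear as types 7 and 8. It assumes 4-byte length prefixes, which is the common case but should be confirmed from the stream’s format description, and the function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* H.264 NAL unit types of interest. */
enum { NAL_SLICE = 1, NAL_IDR = 5, NAL_SEI = 6, NAL_SPS = 7, NAL_PPS = 8 };

/* Walks an AVCC buffer of 4-byte big-endian length + NAL unit records.
 * Returns 1 if an IDR slice (key frame) is present, 0 if not, and -1 if the
 * lengths do not add up to the buffer size. */
static int avcc_has_idr(const uint8_t *buf, size_t len) {
    size_t off = 0;
    int found = 0;
    while (off + 4 <= len) {
        uint32_t nal_len = ((uint32_t)buf[off] << 24) | ((uint32_t)buf[off + 1] << 16) |
                           ((uint32_t)buf[off + 2] << 8) | buf[off + 3];
        off += 4;
        if (nal_len == 0 || nal_len > len - off) return -1;
        if ((buf[off] & 0x1F) == NAL_IDR) found = 1;  /* low 5 bits = NAL type */
        off += nal_len;
    }
    return off == len ? found : -1;
}
```

Data that arrives in Annex B form (for example from an RTSP source) generally has to have its start codes replaced with these length prefixes before it is handed to VideoToolbox.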

7.4 iOS Ijkplayer Compilation and Application

01. Local Video Playback
02. RTMP Pull Streaming Playback
03. HTTP On-Demand
04. Audio Playback Process
05. Video Rendering Process

7.5 iOS WebRTC Audio and Video Calls

01. One-to-One Call
02. Multi-Party Call