Ethernet (IEEE 802.3) specifies that the minimum frame length for transmission is 64 bytes; any frame shorter than 64 bytes is considered invalid. So, how was this 64-byte figure determined?

This can be traced back to the CSMA/CD protocol. As is well known, early Ethernet operated on a shared bus, where multiple computers were connected to the network via a single bus. Even when it evolved to use hubs, it remained a shared bus, simply placing the bus within the hub. What are the characteristics of a shared bus network? At any given moment, only one user can access the network. If two or more users attempt to access the network simultaneously, their transmitted information will collide, resulting in invalid transmissions. This is similar to a room where only one person can speak loudly at a time; if two people speak loudly at the same time, no one can understand what they are saying. So, how can we avoid collisions? One method is to take turns accessing the network, just like taking turns to speak in a room. However, the number of users on the network is not fixed, and the time each user spends online is also variable. Just like people entering and leaving a room, some may want to speak while others may not, making fixed “turn-taking” impossible. To address this issue, Ethernet introduced the CSMA/CD protocol.

CSMA/CD stands for Carrier Sense Multiple Access with Collision Detection.

“Multiple Access” means that many computers are attached to a single shared bus, and any of them may transmit on it.

“Carrier Sense” means that before sending, each station must check whether another computer is already transmitting on the bus. If one is, the station refrains from sending to avoid a collision. There is no actual “carrier” on the bus; “carrier sensing” simply means using electronic circuitry to detect whether signals from other computers are present on the bus.

“Collision Detection” means that a computer monitors the signal voltage on the channel while it is sending. When multiple stations transmit simultaneously, their signals superpose and the voltage on the bus rises. When a station detects that the voltage exceeds a certain threshold, it concludes that at least two stations are transmitting at the same time, that is, a collision has occurred.
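As a rough illustration of the threshold idea, the toy model below treats each transmitter as adding the same level to the bus and flags a collision when the sum exceeds a threshold placed between the one-station and two-station levels. The constants `LEVEL_PER_STATION` and `THRESHOLD` are hypothetical normalized values, not figures from the Ethernet standard; real transceivers measure analog voltages.

```python
# Toy model of voltage-threshold collision detection (illustrative only).
# Assumption: each transmitting station adds the same average level to the
# bus, and levels from simultaneous transmitters simply add (superposition).

LEVEL_PER_STATION = 1.0  # hypothetical normalized level of one transmitter
THRESHOLD = 1.5          # hypothetical threshold: above one station, below two


def bus_level(active_transmitters: int) -> float:
    """Superposed signal level seen on the shared bus."""
    return active_transmitters * LEVEL_PER_STATION


def collision_detected(active_transmitters: int) -> bool:
    """A station flags a collision when the bus level exceeds THRESHOLD."""
    return bus_level(active_transmitters) > THRESHOLD


for n in range(4):
    print(f"{n} transmitter(s): collision={collision_detected(n)}")
```

With these values, zero or one transmitter stays below the threshold, while two or more exceed it.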

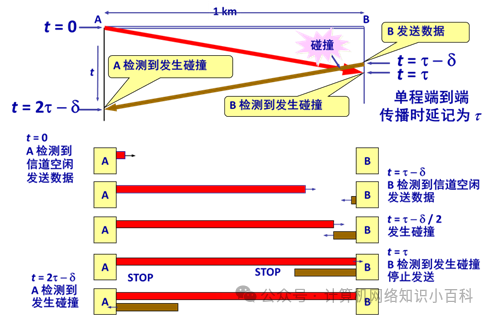

Collisions can still occur despite carrier sensing because a channel that appears free may not actually be free: another station's signal may already be on the bus without having reached the listening station yet. The diagram above illustrates this.

Assume A checks the channel at time t=0, finds it free, and starts sending. A's signal needs one one-way propagation delay τ to reach B; assume τ=25.6 µs, so A's signal arrives at B at t=25.6 µs. If B checks the channel at t=τ-δ=20.6 µs (here δ=5 µs), A's signal has clearly not yet reached B, so B considers the channel free and also starts sending. A's and B's signals collide at t=(20.6+25.6)/2=23.1 µs. (In the diagram, the collision is the intersection of the two signal lines, which lies exactly halfway in time between τ-δ and τ, i.e., at (τ-δ+τ)/2.)
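The halfway result can be checked with a short calculation. The sketch below, using the example values from the text (τ = 25.6 µs, B starting at 20.6 µs), equates the times at which the two signals reach the same point on the cable. The function names are hypothetical, and it only covers the text's scenario where B starts before A's signal arrives.

```python
# Collision time for the two-station example (units: microseconds).
# TAU is the one-way A-to-B propagation delay; B_START (= tau - delta) is
# when B, having sensed an idle channel, starts sending.

TAU = 25.6
B_START = 20.6


def collision_time(tau: float, b_start: float) -> float:
    """Time at which A's and B's signals first meet on the cable.

    Measure position x as a fraction of the A-B distance (0 at A, 1 at B).
    A's signal (sent at t=0) reaches x at t = x * tau.
    B's signal (sent at t=b_start) reaches x at t = b_start + (1 - x) * tau.
    Equating the two gives x * tau = (b_start + tau) / 2.
    """
    if not 0 <= b_start < tau:
        raise ValueError("this sketch assumes B starts before A's signal arrives")
    return (b_start + tau) / 2


def collision_position(tau: float, b_start: float) -> float:
    """Where the signals meet, as a fraction of the distance from A."""
    return collision_time(tau, b_start) / tau


print(round(collision_time(TAU, B_START), 2))      # 23.1
print(round(collision_position(TAU, B_START), 3))  # 0.902
```

The meeting point lies closer to B because B started later than A.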

When do B and A detect the collision? B detects it when A's signal reaches B (where the two signals then overlap), at t=τ=25.6 µs. A detects it when B's signal reaches A, at t=20.6+25.6=46.2 µs (that is, 2τ-δ).
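A minimal sketch of these two detection times, assuming (as the text does) that a station detects the collision the instant the other station's signal reaches it, with no detection-circuit delay. The function name is hypothetical.

```python
# When each station learns of the collision (units: microseconds).
# Assumption: detection happens as soon as the other station's signal
# arrives; circuit delays are ignored.


def detection_times(tau: float, b_start: float) -> tuple[float, float]:
    """Return (time B detects, time A detects) for the two-station example."""
    b_detects = tau            # A's signal (sent at t=0) arrives at B
    a_detects = b_start + tau  # B's signal arrives at A (= 2*tau - delta)
    return b_detects, a_detects


b_t, a_t = detection_times(25.6, 20.6)
print(f"B detects at {b_t:.1f} µs, A detects at {a_t:.1f} µs")
# B detects at 25.6 µs, A detects at 46.2 µs
```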

Now, let’s consider the question: how long must A keep checking for collisions before it can be sure that no collision will occur and that its transmission will succeed?

In the previous scenario, B can start sending (and thus cause a collision) only if it checks the channel before t=25.6 µs. If B checks after 25.6 µs, A's signal has already reached B, B senses a busy channel, and it does not send. So the latest B can start is just before 25.6 µs, and its signal then needs another 25.6 µs to reach A: any collision reaches A no later than 25.6 µs + 25.6 µs = 51.2 µs. Therefore, as long as A monitors the channel continuously for 51.2 µs without detecting a collision, it can be certain that no collision will occur. We call this 51.2 µs (the round-trip propagation delay, 2τ) the collision window. It imposes a requirement on A: its transmission must last at least 51.2 µs!
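The worst case in this argument is B starting just before A's signal arrives. The sketch below sweeps B's start time toward τ to show A's detection time approaching 2τ, then checks a transmission duration against that window. The function names are hypothetical and τ is the example value from the text.

```python
# Worst-case collision window for station A (units: microseconds).
TAU = 25.6  # one-way propagation delay from the example


def a_detection_time(tau: float, b_start: float) -> float:
    """When B's signal reaches A, given B starts at b_start (< tau)."""
    return b_start + tau


def collision_window(tau: float) -> float:
    """Latest possible collision arrival at A: B starts just before tau."""
    return 2 * tau


def long_enough(tx_time: float, tau: float) -> bool:
    """A hears every possible collision only if it is still transmitting."""
    return tx_time >= collision_window(tau)


# As B's start time approaches tau, A's detection time approaches 2 * tau.
for b_start in (0.0, 10.0, 20.6, 25.0, 25.59):
    t = a_detection_time(TAU, b_start)
    print(f"B starts at {b_start:5.2f} -> A detects at {t:.2f}")

print(collision_window(TAU))   # 51.2
print(long_enough(46.0, TAU))  # False
print(long_enough(51.2, TAU))  # True
```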

Imagine that A’s transmission time is less than 51.2 µs. What could happen? The collision may reach A only after A has finished sending and stopped monitoring the channel, so even though a collision occurred, A would never know! Returning to the previous example, if A’s transmission lasts 46 µs and the collision reaches A at 46.2 µs, A has already completed its transmission. (A does not check the channel for collisions after it finishes sending.)
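The failure case can be expressed as a single comparison. This minimal sketch assumes, as the text does, that A monitors for collisions only while it is transmitting, i.e. during [0, tx_time]; the function and constant names are hypothetical.

```python
# Does A notice the collision? (units: microseconds)
# Assumption from the text: A monitors the channel only while it is
# transmitting, i.e. during [0, tx_time]; afterwards it no longer checks.


def a_notices_collision(tx_time: float, collision_arrival: float) -> bool:
    """True if the collision reaches A before A stops transmitting."""
    return collision_arrival <= tx_time


COLLISION_AT_A = 46.2  # example: B starts at 20.6, tau = 25.6

for tx_time in (46.0, 51.2):
    seen = a_notices_collision(tx_time, COLLISION_AT_A)
    print(f"tx_time={tx_time}: A notices collision -> {seen}")
# tx_time=46.0: A notices collision -> False
# tx_time=51.2: A notices collision -> True
```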

In the early days, mainstream Ethernet ran at 10 Mbit/s, and the collision window was fixed at 51.2 µs, enough to cover the maximum round-trip propagation delay of a local area network built within the standard's size limits. During this window, each station can send:

10 Mbit/s × 51.2 µs = 512 bits = 64 bytes.
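The same arithmetic as a small function, sketched with integer units (bit/s and nanoseconds) so that floating-point rounding cannot turn 512 into 511.999…. The function name is hypothetical.

```python
# Bits (and bytes) a station can send during the collision window.
# Integer arithmetic: rate in bit/s, window in nanoseconds.

NS_PER_SECOND = 1_000_000_000


def bits_in_window(rate_bps: int, window_ns: int) -> int:
    """Number of bit times that fit in the window at the given rate."""
    bits, remainder = divmod(rate_bps * window_ns, NS_PER_SECOND)
    if remainder:
        raise ValueError("window does not hold a whole number of bits")
    return bits


bits = bits_in_window(10_000_000, 51_200)  # 10 Mbit/s, 51.2 µs
print(bits, bits // 8)  # 512 64
```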

Therefore, every frame a station sends must be at least 64 bytes long; a frame shorter than 64 bytes is treated as the fragment of a transmission that was aborted because of a collision, and is discarded as invalid. This is the origin of Ethernet's 64-byte minimum frame length. Although CSMA/CD is rarely used today (most local area networks now use switches, which give each station its own point-to-point link), the 64-byte minimum frame length has been retained.
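In practice, the 64 bytes are counted from the destination MAC address through the 4-byte frame check sequence (FCS); the 8-byte preamble and start-of-frame delimiter are not included. For an untagged frame with a 14-byte header, that leaves a minimum payload of 46 bytes, and a sender pads shorter payloads. The sketch below shows that bookkeeping; the helper names are hypothetical, zero-byte padding is an assumption of this sketch, and the FCS is represented only by its length.

```python
# Minimum Ethernet frame layout (untagged frame, sizes in bytes).
# The 64-byte minimum covers destination MAC through FCS; the preamble and
# start-of-frame delimiter are not counted.

MIN_FRAME = 64
HEADER = 6 + 6 + 2                      # dst MAC + src MAC + EtherType/length
FCS = 4                                 # CRC-32 frame check sequence
MIN_PAYLOAD = MIN_FRAME - HEADER - FCS  # 46


def pad_payload(payload: bytes) -> bytes:
    """Pad a short payload with zero bytes up to MIN_PAYLOAD (assumed padding)."""
    return payload + bytes(max(0, MIN_PAYLOAD - len(payload)))


def frame_length(payload: bytes) -> int:
    """Frame length after padding, excluding preamble/SFD."""
    return HEADER + len(pad_payload(payload)) + FCS


def is_runt(length: int) -> bool:
    """A received frame shorter than 64 bytes is treated as a collision fragment."""
    return length < MIN_FRAME


print(MIN_PAYLOAD)               # 46
print(frame_length(b"hi"))       # 64
print(frame_length(bytes(100)))  # 118
print(is_runt(40), is_runt(64))  # True False
```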