Imagine if Alan Turing and John McCarthy had Instagram; their posts might crash the servers!

The history of artificial intelligence has been a roller coaster of thrilling highs and frustrating lows. In 1950, Turing proposed the “Turing Test,” which landed in the tech community like a depth charge. Then, at the Dartmouth Conference in 1956, McCarthy officially coined the term “artificial intelligence,” triggering a “major earthquake” in the tech world. However, limited computing power and data meant that the grand visions proposed for AI could not materialize at the time, and the field fell into a winter in the late 1980s and 1990s.

With leaps in computing power and the wave of big data, AI finally welcomed its spring in the 21st century. In 2012 in particular, AlexNet burst onto the scene from the lab of Geoff Hinton, who later won the Turing Award. AlexNet not only dominated that year’s ImageNet competition but also showcased the vast potential of AI to change the world. Since then, the deep learning wave that AlexNet set off has driven significant progress in fields such as speech recognition, natural language processing, autonomous vehicles, and medical diagnosis.

01

—

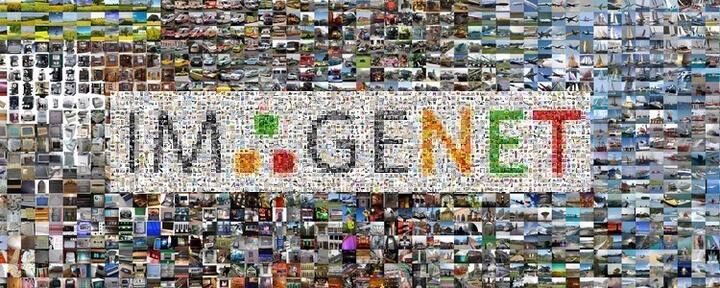

Comparison of Weak AI and Strong AI

“Weak AI is like an athlete focused on one sport, while strong AI is like an Olympic decathlon champion, capable of anything.”

Although AI has been widely researched and applied in academia and industry, it still feels quite distant to most ordinary people. This is because the field has so far been dominated by weak AI, which is designed and trained to perform specific tasks. This type of AI does not possess true understanding or consciousness; it merely exhibits intelligence within the specific task areas it has been trained for.

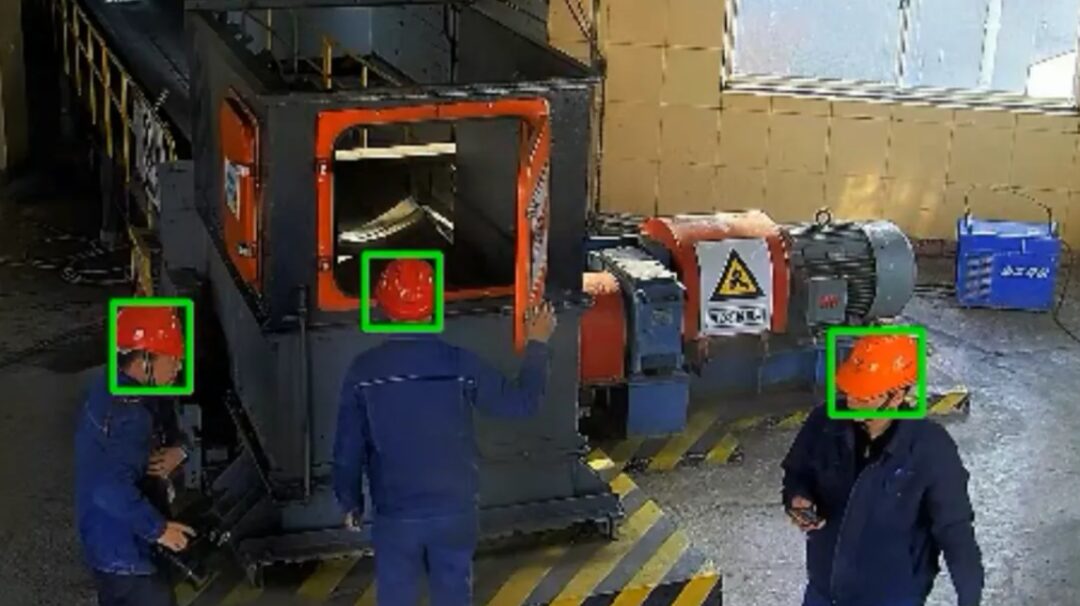

For example, a model that uses YOLOX to detect hard hats has a very narrow job: it can detect whether hard hats are present in an image, but it cannot tell whether workers are actually wearing them correctly. To find that out, we would need to adjust the training data or add extra processing logic before the system truly closes the business loop.

Strong AI has not yet been realized, but it has begun to look possible, especially with the push from the Transformer architecture.
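One possible form of such “additional processing logic” is a geometric check on the detector’s output. The sketch below is only an illustration, not YOLOX code: it assumes the detector already returns bounding boxes for people and for hard hats as `(x1, y1, x2, y2)` tuples, and the function names, the 25% head region, and the 0.5 coverage threshold are all hypothetical choices.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2); y grows downward


def overlap_area(a: Box, b: Box) -> float:
    """Area of the intersection of two boxes (0.0 if they do not overlap)."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    return max(width, 0.0) * max(height, 0.0)


def head_region(person: Box, head_fraction: float = 0.25) -> Box:
    """Approximate the head as the top slice of the person box (assumed 25%)."""
    x1, y1, x2, y2 = person
    return (x1, y1, x2, y1 + (y2 - y1) * head_fraction)


def is_wearing_hat(person: Box, hats: List[Box], min_cover: float = 0.5) -> bool:
    """True if some hat box has at least `min_cover` of its area in the head region."""
    head = head_region(person)
    for hat in hats:
        hat_area = (hat[2] - hat[0]) * (hat[3] - hat[1])
        if hat_area > 0 and overlap_area(hat, head) / hat_area >= min_cover:
            return True
    return False


worker = (100.0, 100.0, 200.0, 500.0)
hat_on_head = (110.0, 90.0, 190.0, 150.0)
hat_in_hand = (180.0, 300.0, 260.0, 360.0)
print(is_wearing_hat(worker, [hat_on_head]))  # True
print(is_wearing_hat(worker, [hat_in_hand]))  # False
```

A rule like this is crude (it ignores posture and camera angle), which is exactly why a single-task detector needs extra engineering around it.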

02

—

The Evolution of Chatbots

“Early chatbots (weak AI) were better suited to being doormen, because they could only answer ‘yes’ or ‘no.’ The emergence of ChatGPT, based on the Transformer, was like a ‘Renaissance’ in the chatbot world. When ChatGPT first launched, it drew widespread attention and became the fastest-growing consumer application in history at that time.”

In the past, AI was often jokingly called “artificial stupidity,” but ChatGPT will attempt to answer almost any question apart from sensitive topics (such as adult content or politics), and its knowledge is broader than any one person’s. That was unimaginable for earlier chatbots.

The main difference lies in the fact that early chatbots primarily relied on pattern matching and fixed response libraries; they lacked true understanding and flexibility. This is also what we referred to earlier as weak AI.

For example, Siri, an early chatbot familiar to iPhone users, is programmed to respond only within a set scope: it acts only when it recognizes specific patterns, such as a location query or an alarm request. When a request falls outside that scope, Siri tends to decline to answer, which is why we often find Siri a bit silly.
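The “pattern matching plus fixed response library” approach can be sketched in a few lines. This is a toy illustration of the general idea, not how Siri or any real assistant is implemented; the patterns and replies are invented for the example.

```python
import re

# Hypothetical rule table: each pattern maps to exactly one canned reply.
RULES = [
    (re.compile(r"\b(alarm|wake me)\b", re.IGNORECASE), "OK, setting an alarm."),
    (re.compile(r"\b(where|location|nearby)\b", re.IGNORECASE), "Here is what I found nearby."),
    (re.compile(r"\b(weather|rain)\b", re.IGNORECASE), "Here is today's forecast."),
]
FALLBACK = "Sorry, I can't help with that."


def reply(message: str) -> str:
    """Return the canned reply of the first matching rule, else the fallback."""
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    return FALLBACK


print(reply("Set an alarm for 7 am"))  # OK, setting an alarm.
print(reply("Why is the sky blue?"))   # Sorry, I can't help with that.
```

Anything outside the rule table hits the fallback, which is the “a bit silly” behavior described above.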

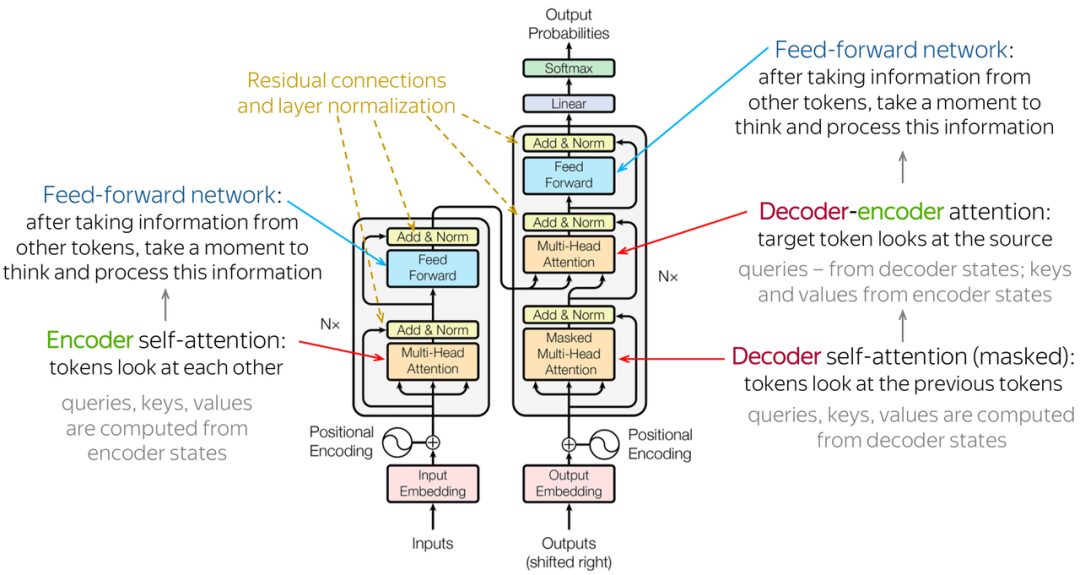

ChatGPT, a large language model based on the Transformer architecture, is fundamentally different from traditional chatbots. It is pre-trained on vast amounts of text, from which it learns the structure and contextual relationships of language, so it can generate coherent and relevant text. Rather than following a set of predefined rules, it learns the relationships between words from massive amounts of internet text, and then learns to communicate in a human-like manner through instruction-following fine-tuning and reinforcement learning.

This is akin to a baby hearing a lot of language from a young age. As they learn basic grammar and expressions, their language ability gradually matures, eventually allowing them to converse fluently.

Of course, ChatGPT is not just a babbling baby. From my perspective, it resembles a college graduate who has absorbed a vast amount of knowledge from the internet and can use many tools, who is conservative and ethical, but who can only reason in short bursts and gives limited responses to our questions.
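To make “learning the relationships between words from text” a little more concrete, here is a deliberately tiny sketch: a bigram counter that predicts the next word from what followed it in the training text. It is far simpler than a Transformer and is not how ChatGPT works internally; the corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat ("cat" follows "the" most often here)
print(predict_next(model, "dog"))  # None (never seen in training)
```

Even this toy shows the key difference from a rule table: nobody wrote the answer “cat” by hand; it came from the data.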

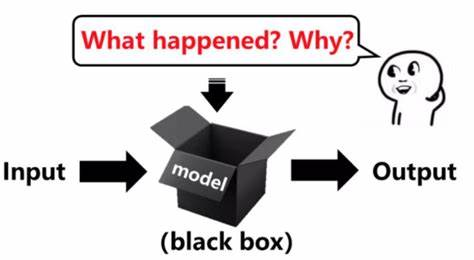

Training such a “college graduate” is far from simple; it relies on a meticulously designed deep neural network, loosely inspired by the human brain. The model learns what a good response looks like in different scenarios from extensive text input. However, because neural networks have so many layers and are so complex, it is hard to fully understand their internal workings. This is why such a model is called a “black box”: we know that after we input a question the machine generates an answer, but we do not know the specific mechanisms that produced it. In this respect it is somewhat like the brain. Opaque or not, ChatGPT’s capabilities have had a significant impact on us.

03

—

The “Superpowers” of Generative AI

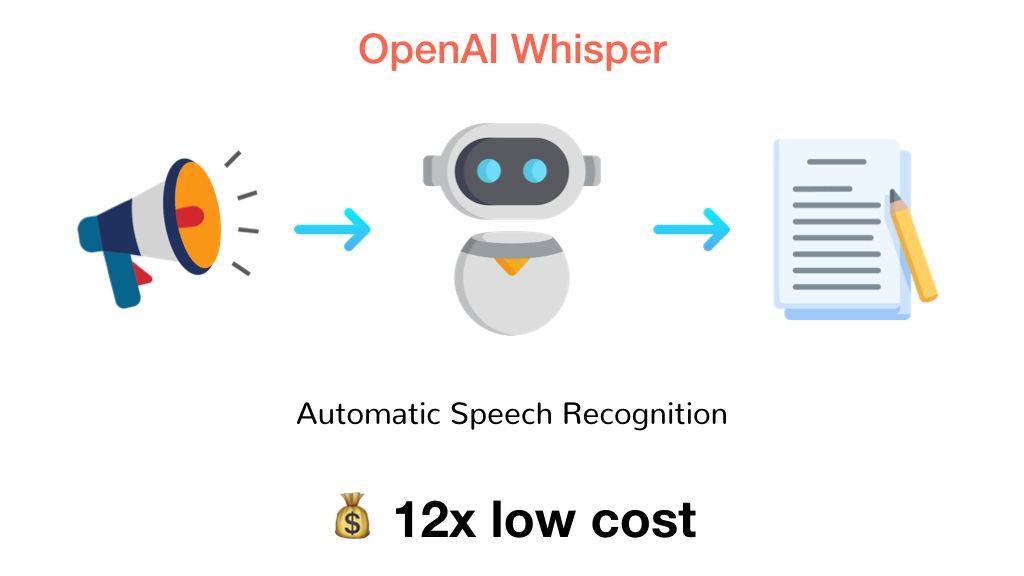

In addition to writing and conversing in text, generative AI has expanded into listening, speaking, and reading. For instance, ChatGPT can call on DALL·E 3 to generate images and can interpret the content of images. OpenAI’s mobile app also supports voice interaction: users only need to ask a question aloud, OpenAI’s Whisper model converts the speech to text, and the answer is read back in a near-human voice by a text-to-speech model.

Moreover, there is Sora, the recently popular generative video model from OpenAI, which breaks the old framework of video generation. It can generate high-definition videos up to about a minute long and supports continuous video generation and multi-angle shots, overturning our traditional understanding of video production (although it is not yet fully open for user testing).

Since ChatGPT can listen, speak, read, and write, it essentially meets the prerequisites for being a “tool person.” If we could build a robotic body for it, it could truly carry out certain tasks. Some companies are already doing this: for example, the demonstration from the robotics company Figure shows how combining a robot with ChatGPT enables the robot to understand what it needs to do next and to find the appropriate programs to execute those tasks.

04

—

The Impact and Changes Brought by Generative AI

The advances introduced above all indicate that generative AI tools are gradually moving from domain-specific intelligence toward comprehensive, multi-domain intelligence, that is, in the direction of what we call strong AI. They can now respond to us through a combination of text, voice, and images. Although the technology is still not very mature, especially in video and image generation, it has already had a significant impact on our lives and work.

Imagine conversing with ChatGPT on a webpage as if a knowledgeable, well-informed person were sitting across from you, on standby 24/7. As long as we can articulate our needs reasonably well, it gives us an answer right away. Even when the answer turns out not to be fully correct, it often offers useful ideas for further work.

For students, whose daily work revolves around learning, having ChatGPT is almost like hiring a private tutor who will teach anyone without prejudice. I recall that during my graduate studies, when I was learning the basics of machine learning, I had many questions but no one to ask, so I had to work through videos on Bilibili or YouTube one by one. Because my coding skills were weak, I often felt powerless and wanted to give up when bugs appeared. With ChatGPT, however, we can get near-real-time help with data processing and model training, and when we hit a bug it can analyze where the problem lies and suggest how to fix it.

This customized learning experience is hard to match. Instructional videos address only specific problems, and a slight change in the problem can leave us stuck again. ChatGPT, by contrast, gives targeted answers to our specific questions along with immediate feedback. It is a little like a lab mouse that gets a reward each time it presses a button: we click, we receive a response, and we may come to rely on this technology for growth and progress.

Additionally, one of the most important tasks for students is writing papers. A common mistake in writing is being too colloquial. This was my own experience: while I was writing my undergraduate thesis, my advisor pointed out that my text was too colloquial, which essentially meant it was not concise enough. In the past, if we could not or did not want to fix this ourselves, we had to turn to specialized editing companies or paid software. With ChatGPT, we can simply paste in parts of our work and ask it to polish them, saving a lot of time and money. As Professor Li Hongyi mentioned in his class on generative AI, he asks all his graduate students to polish their papers this way before submitting them to him, so that the language is more rigorous and free of grammatical errors.

For working people, there is also significant value. For example, many hollow “box projects” consist of documents that no one reads but that must be completed and filed away in a box. Using a large language model to generate this kind of boilerplate is far more efficient than writing it ourselves. Beyond boilerplate, it is also valuable that people without coding skills can now program in natural language and automate parts of their work. Programming languages have a fairly steep learning curve; even a relatively simple one like Python takes time to learn. Now we can skip that step and use a large language model like ChatGPT as a “translator” that turns our requests into working code.

Take unzipping files as an example: unzipping many archives by hand, one at a time, is tedious. But if we give ChatGPT the folder path and ask it for a few lines of code that unzip every archive in that folder, the job becomes quick and convenient. Tasks like converting PDF to Word or extracting text from images are likewise supported by numerous Python libraries. We therefore do not need to hunt for additional apps to perform these tasks; we can complete them efficiently through code.

For workers and students alike, using ChatGPT for document summarization or information retrieval is incredibly convenient. It serves as an accelerator: those who can use AI tools effectively will learn and work significantly faster. Employers are pleased as well, because such efficiency gains mean they can potentially earn more money at lower cost. This is one reason we see significant layoffs at large tech companies abroad, where many job functions have become redundant as AI speeds up the work. Furthermore, given the overall downturn in the economy, many companies are cutting staff anyway. At such times, capitalists are even more motivated to use AI to trim headcount and raise overall profits. Moreover, telling a story about applying AI can lift a company’s valuation, so why not do it?
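The kind of script one might get back for the unzipping request looks roughly like the sketch below. It uses only Python’s standard library; the function name and the choice to extract each archive into a folder named after it are assumptions made for this example.

```python
import zipfile
from pathlib import Path
from typing import List


def unzip_all(folder: str) -> List[Path]:
    """Extract every .zip file in `folder` into a sibling directory named after the archive."""
    extracted = []
    for archive in sorted(Path(folder).glob("*.zip")):
        target = archive.with_suffix("")  # e.g. reports.zip -> reports/
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        extracted.append(target)
    return extracted


# Example call (hypothetical path):
# unzip_all("/path/to/downloads")
```

As with any generated code, it is worth reading it before running it, and only extracting archives from sources you trust.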

05

—

The Limitations of Generative AI

Are you starting to feel a sense of crisis, thinking that large models may pose a threat to us? In reality, that threat is still far from imminent. First, at this stage AI finds it very hard to complete complex tasks independently. Even with agent technology such as AutoGPT, tests show that on relatively complex tasks it frequently makes errors and struggles to coordinate a team of agents to complete specific tasks. For instance, the recently popular AI programmer Devin can handle many programming-related tasks, and its rate of automatically resolving issues on SWE-bench, about 13.86%, is much higher than that of Claude 2 (4.80%) and GPT-4 (1.74%). Even so, solving roughly one issue in seven is far from being able to replace programmers.

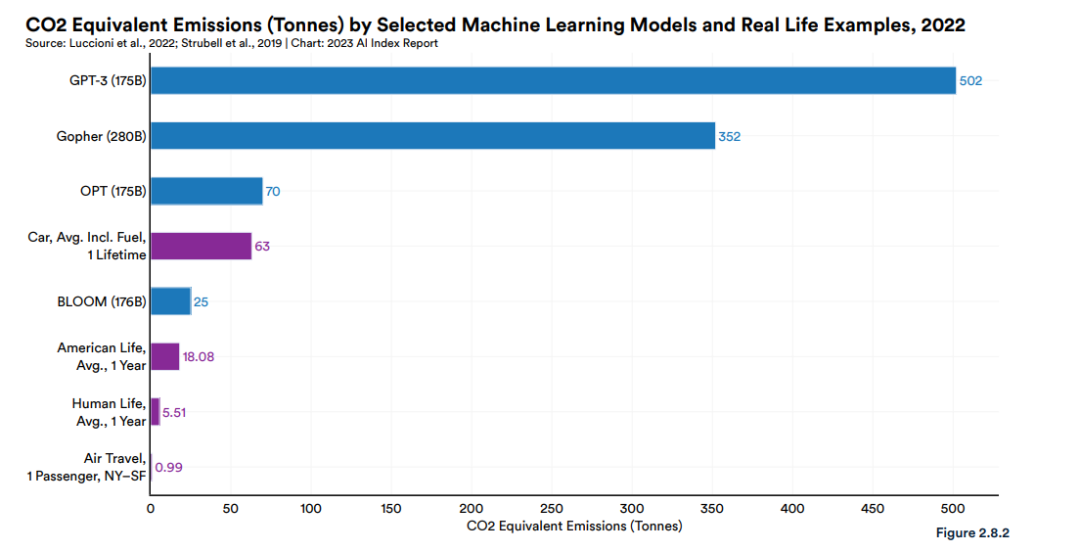

Moreover, a major reason why current generative AI struggles to replace humans is its energy consumption. Training a large model requires substantial time and computational resources: a base model is often trained on tens of thousands of GPUs for months, and every GPU runs on electricity. Electricity may be cheaper in regions rich in hydropower or solar resources than where power comes purely from coal, but the total energy consumption and carbon emissions from training large models, and from the subsequent inference of countless models across the internet, are enormous. For example, a 2022 estimate put the carbon emissions from training a 175B-parameter GPT model at about 500 tons, whereas one person’s activities produce only about 5.5 tons per year. In the future, as China pursues its carbon peak and carbon neutrality targets, carbon emissions may become a kind of currency, and we may not even be able to afford enough emission quotas to train models.
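A quick back-of-the-envelope calculation puts those two figures side by side. It uses only the numbers quoted above and reads the 5.5-ton figure as one person’s annual emissions; the variable names are just for this sketch.

```python
TRAINING_EMISSIONS_TONS = 500.0  # estimate quoted in the text for a 175B model
PER_PERSON_TONS_PER_YEAR = 5.5   # annual figure quoted in the text, read as per person

person_years = TRAINING_EMISSIONS_TONS / PER_PERSON_TONS_PER_YEAR
print(f"One training run is about {person_years:.0f} person-years of emissions")
# prints: One training run is about 91 person-years of emissions
```

In other words, by these estimates a single training run emits roughly what one person would over about nine decades.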

Compared with deep-learning-based large models, the human brain has an unparalleled advantage in energy use. It is far more energy-efficient than modern computers and AI systems when processing information and executing complex tasks. Studies show that the brain runs on about 20 watts of power, comparable to a small light bulb, while current AI systems may require thousands of times more energy to perform similar tasks. Additionally, the human brain shows remarkable adaptability and flexibility in handling uncertainty, thinking abstractly, and learning new skills, which current AI technology struggles to match. Therefore, while AI excels at processing large amounts of data and executing specific algorithms, the human brain still holds a clear advantage in energy efficiency and in handling complexity.
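To get a feel for the scale, the sketch below sets the brain’s 20 watts next to a hypothetical training cluster. Only the 20 W figure comes from the text; the GPU count, the per-GPU power draw, and the training duration are illustrative assumptions, and the comparison is of raw energy, not of energy spent on “similar tasks.”

```python
BRAIN_WATTS = 20.0   # figure quoted in the text
GPU_COUNT = 10_000   # assumption: low end of "tens of thousands"
GPU_WATTS = 400.0    # assumption: hypothetical average draw per GPU
TRAINING_DAYS = 90   # assumption: "months" taken as about three


def kilowatt_hours(watts: float, hours: float) -> float:
    """Energy in kWh for a constant power draw over a number of hours."""
    return watts * hours / 1000.0


brain_kwh_per_year = kilowatt_hours(BRAIN_WATTS, 24 * 365)
cluster_kwh = kilowatt_hours(GPU_COUNT * GPU_WATTS, 24 * TRAINING_DAYS)

print(f"Brain, one year:     {brain_kwh_per_year:,.1f} kWh")
print(f"Cluster, one run:    {cluster_kwh:,.0f} kWh")
print(f"Ratio (brain-years): {cluster_kwh / brain_kwh_per_year:,.0f}")
```

Under these assumed numbers, one training run uses as much energy as a brain would in tens of thousands of years; different assumptions shift the figure, but not the order-of-magnitude gap.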

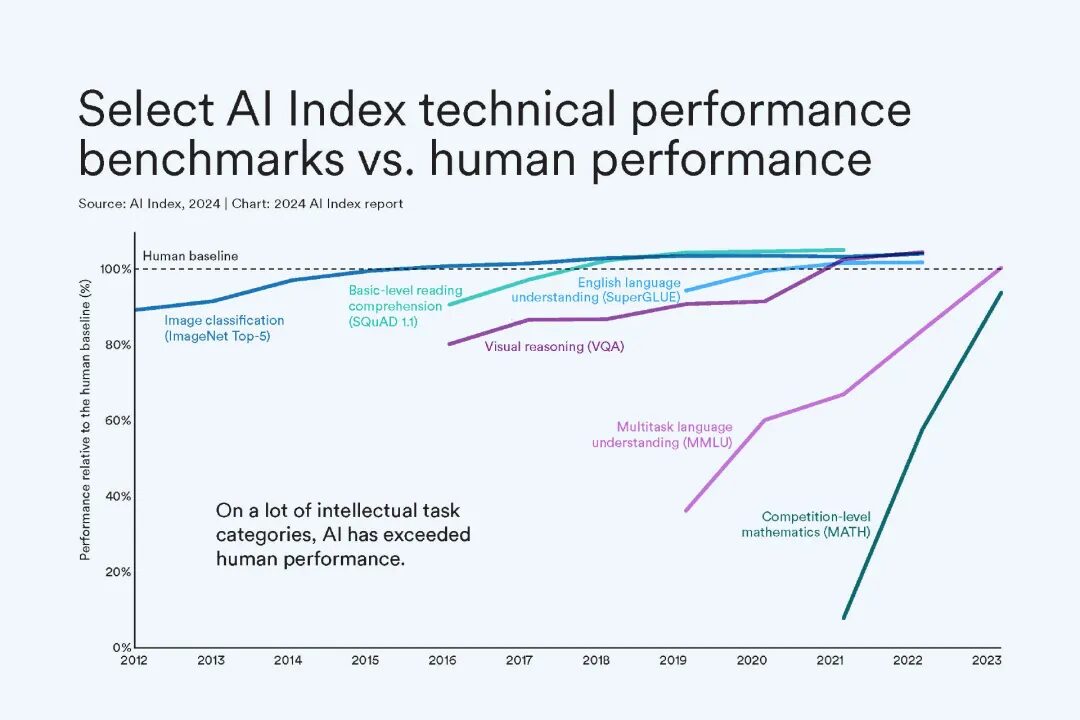

However, we should not be complacent or dismiss concerns about AI potentially replacing us. According to the “Artificial Intelligence Index Report 2024” released by the Stanford Institute for Human-Centered AI, which is co-led by Fei-Fei Li, AI has already surpassed humans on certain tasks, such as image classification, visual reasoning, and English comprehension. It still lags behind humans on more complex tasks such as competition-level mathematics, visual common-sense reasoning, and planning. But AI technology is developing rapidly, and models keep iterating and upgrading. ChatGPT, built on the GPT-3.5 model, was released in November 2022, GPT-4 was launched in March 2023, and there are increasing rumors about an upcoming GPT-5. It is genuinely hard to predict what the future will hold.

06

—

Conclusion

Overall, in this era we need to reflect on both the limitations of large models and the strengths of humanity. In areas where AI models excel, we can use AI tools to complete simple, repetitive tasks and leave the programming to AI. In areas where AI models fall short, which are our strengths, we must seize the opportunity and develop a competitive edge so that we do not fall behind in future competition with AI. If you want to know which qualities and abilities we need to cultivate, stay tuned for the next article, on the qualities and abilities required in the AI era!

Source: WeChat Official Account [Smart Stream]