↑ ClickBlue text Follow the Jishi platform Author丨Song JitaoSource丨ZhuanzhiEditor丨Jishi Platform

Author丨Song JitaoSource丨ZhuanzhiEditor丨Jishi Platform

Jishi Guide

This article discusses three trends in the development of artificial intelligence (from specialized to generalized – pre-trained large models and AI agents, from capability alignment to value alignment – trustworthiness and alignment, from design goals to learning goals – pre-training + reinforcement learning) and four prospects for the future. >> Join the Jishi CV technology exchange group to stay at the forefront of computer vision

Song Jitao, Professor at Beijing Jiaotong University

Table of Contents

Introduction

Trend 1: From Specialized to Generalized – Pre-trained Large Models and AI Agents

- (1) Pre-trained Language Models

- (2) Visual and Multimodal Pre-training

- (3) Applications of Pre-trained Models

- (4) AI Agents

Trend 2: From Capability Alignment to Value Alignment – Trustworthiness and Alignment

-

(1) Trustworthiness: Value Alignment in the Era of Small Models

-

(2) Value Alignment in the Era of Large Models

Trend 3: From Design Goals to Learning Goals – Pre-training + Reinforcement Learning

-

(1) Pre-training to Acquire Basic Capabilities, Reinforcement Learning for Value Alignment

-

(2) Pre-training Imitates Humans, Reinforcement Learning Surpasses Humans

Prospects

- (1) “True” Multimodality: Returning from Fine-tuning to Pre-training

- (2) System 1 vs. System 2

- (3) Understanding and Learning Based on Interaction

- (4) Superintelligence vs. Super Alignment

Introduction

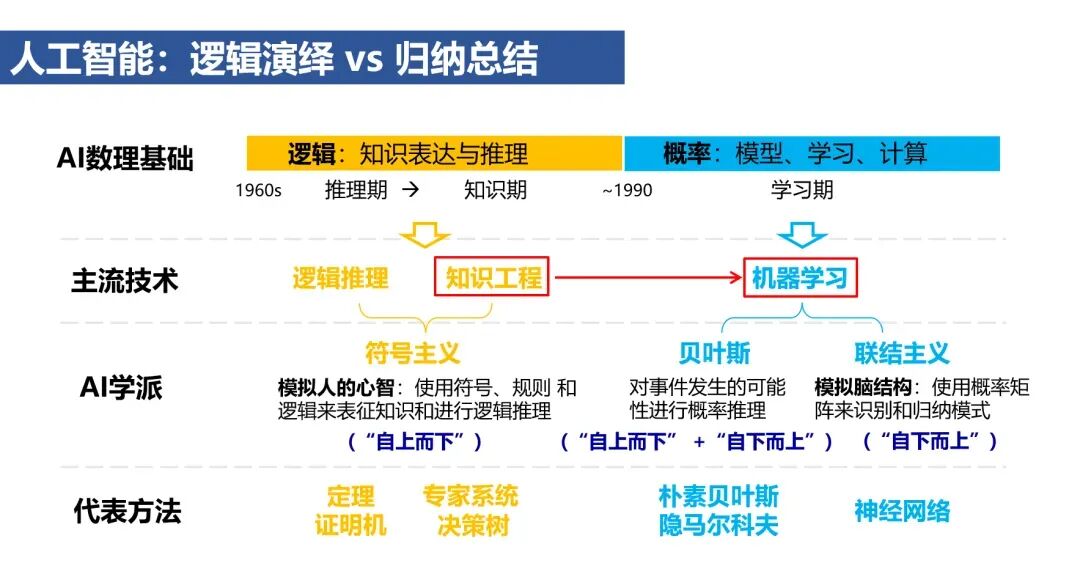

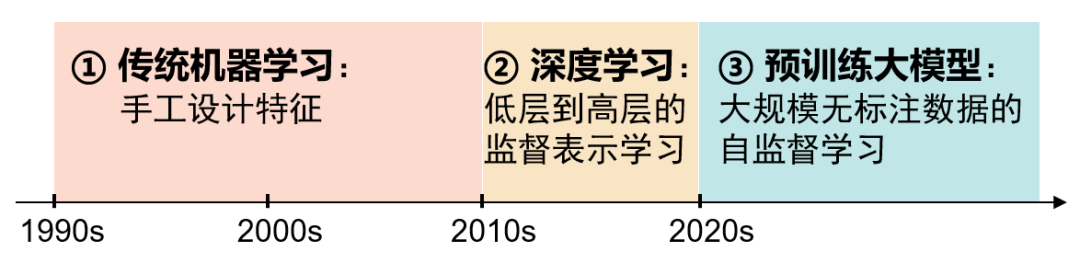

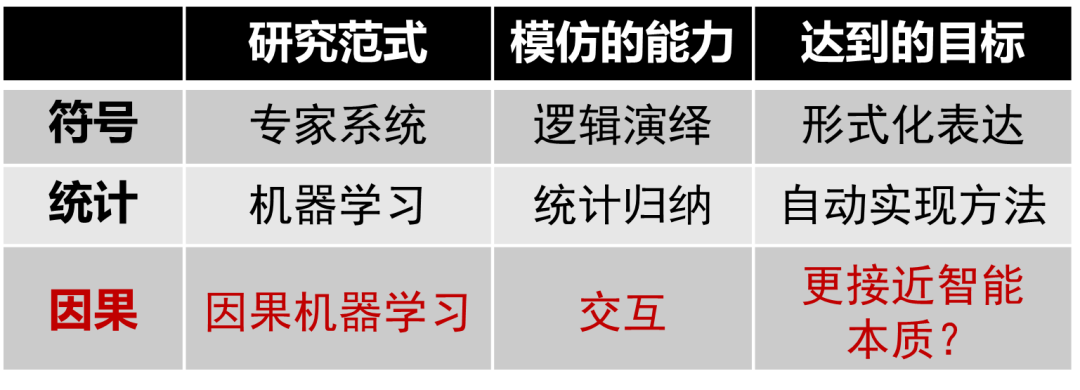

The Dartmouth Conference in 1956 defined “artificial intelligence” as “a series of artificial programs or systems that enable machines to simulate human perception, cognition, decision-making, and execution“. This definition gave rise to two approaches to imitating human intelligence – logical deduction and inductive summarization, which inspired two important stages in the development of artificial intelligence: (1) from 1960 to 1990, the knowledge engineering approach based on logic, focusing on knowledge representation and reasoning; (2) after 1990, the machine learning approach based on probability, emphasizing model construction, learning, and computation.

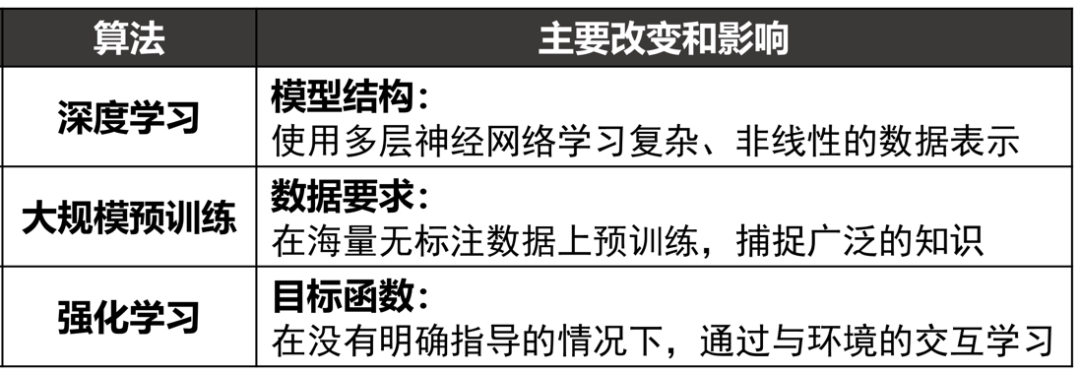

After more than 30 years of development, machine learning methods have roughly gone through three stages: traditional machine learning relying on manually designed features from 1990 to 2010, (traditional) deep learning from 2010 to 2020 focusing on supervised representation learning from low-level to high-level, and post-2020 self-supervised learning with pre-trained large models based on large-scale unlabeled data. Centered around the third generation of machine learning with pre-trained large models, the following discusses three trends in the development of artificial intelligence and four prospects for the future.

Trend 1: From Specialized to Generalized – Pre-trained Large Models and AI Agents

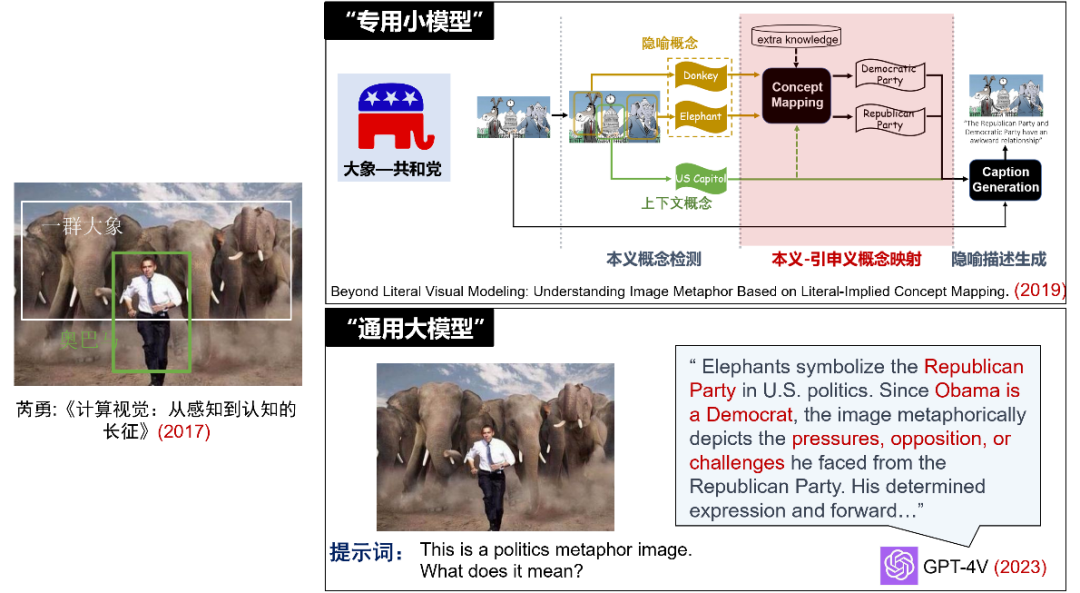

Taking the Chinese-English translation task as an example, the knowledge engineering approach requires linguists to write rule libraries, while traditional machine learning and deep learning learn probabilistic models or perform model fine-tuning based on corpora. These methods are designed for specific machine translation tasks. However, today, the same large language model can not only translate dozens of languages but also handle various natural language understanding and generation tasks such as question answering, summarization, and writing. Combining my own research experience, Professor Rui Yong proposed the cognitive challenge of metaphorical image understanding in 2017 (linking “elephant” with “Republican” to understand the image’s discussion of American politics). In 2019, we attempted to solve this through a pipeline of multiple specialized small models (literal concept detection – metaphorical concept mapping – metaphorical description generation). By 2023, a simple prompt is sufficient for GPT-4V to accurately understand the political metaphor behind the image.

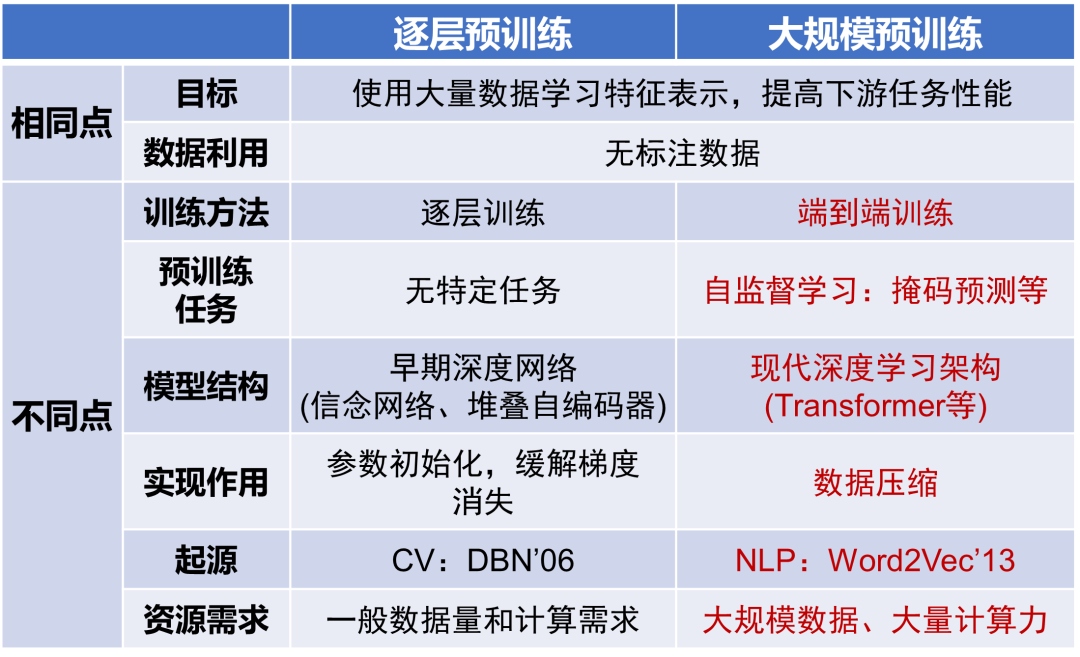

The “specialized” nature of small models vs. the “generalized” nature of large models: the large-scale pre-training technology used by pre-trained large models and the layer-wise pre-training technology in early deep learning, while both learn feature representations from unlabeled data, differ significantly in training methods, pre-training tasks, model architectures, functionalities, origins, and resource requirements. From an origin perspective, layer-wise pre-training technology was initially applied in the field of computer vision to learn visual feature representations. In contrast, large-scale pre-training technology originated from language models NNLM and Word2Vec in the field of natural language processing.

-

(1) Pre-trained Language Models

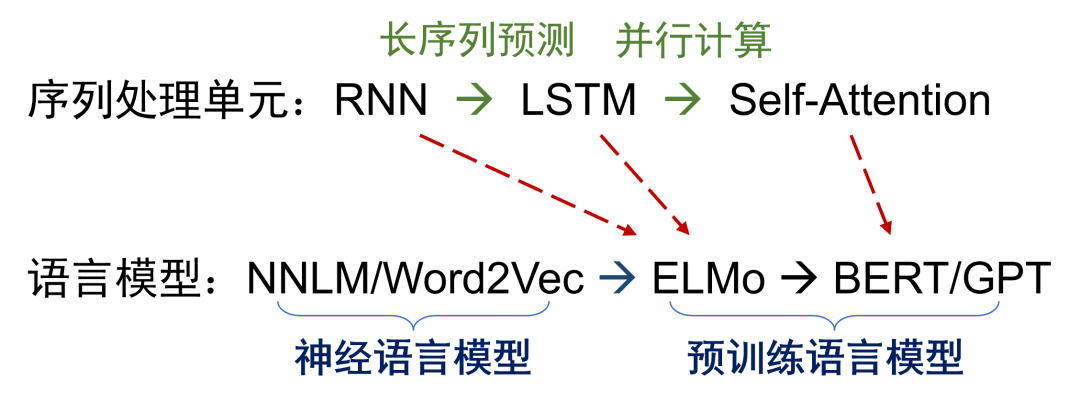

The core of a language model is to compute the probability of a sequence of text. It has roughly gone through several development stages: statistical language models, neural language models, and pre-trained language models. Unlike neural language models based on static word vectors (e.g., Word2Vec), pre-trained language models began learning dynamic word representations that can perceive context since the ELMo model, allowing for more accurate predictions of text sequence probabilities. In the development of sequence processing units, from RNN to LSTM, and then to Self-Attention, issues of long sequence prediction and parallel computation have been gradually addressed. Therefore, pre-trained language models can efficiently learn from large-scale unlabeled samples. According to the scaling law regarding the relationship between computing power, data volume, and model scale, the performance of current pre-trained language models has not yet reached a ceiling.

-

(2) Visual and Multimodal Pre-training

The success of pre-trained language models has brought two insights to the field of computer vision: first, utilizing unlabeled samples for self-supervised learning, and second, learning general representations that can adapt to various tasks. From iGPT, Vision Transformer, BEiT, MAE to Swin Transformer, issues such as the computational resource consumption of the self-attention mechanism and the preservation of local structural information have been gradually resolved, promoting the development of visual pre-training models.

Multimodal pre-training simulates the multimodal process of human understanding of the physical world. Comparing large language models to the brain of machines, multimodal provides the eyes and ears for perceiving the physical world, greatly expanding the machine’s perception and understanding range. The core issue of multimodal pre-training is how to effectively achieve alignment between different modalities. Based on different modality alignment strategies, multimodal pre-training has roughly gone through two stages: multimodal joint pre-training models and multimodal large language models. Early models processed data from different modalities in parallel for pre-training, with key technologies including single-modal local feature extraction, modality alignment enhancement, and cross-modal contrastive learning. CLIP successfully bridged the language and visual modalities through contrastive learning on 400 million image-text pairs. Since 2023, models like LLaVa, Mini-GPT, and GPT-4V have inherited the rich world knowledge and excellent interaction capabilities from language models by fine-tuning to integrate other modality data. Google’s Gemini model has re-adopted a joint pre-training multimodal architecture. Recently, with the emergence of new models like LVM, VideoPoet, and Sora, multimodal pre-training has shown the following trends: (1) emphasizing the role of language models in multimodal understanding and generation; (2) typically including three key modules: multimodal encoding, cross-modal alignment, and multimodal decoding; (3) cross-modal alignment tends to adopt Transformer architecture, with models using autoregressive (VideoPoet) or diffusion (Sora) methods.

-

(3) Applications of Pre-trained Models

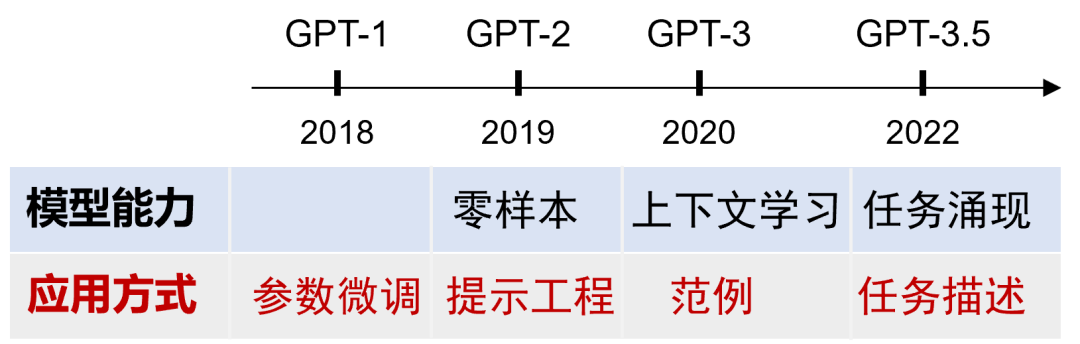

According to Leslie Valiant’s perspective, large-scale pre-training can be likened to the innate structural priors formed by biological neural networks through billions of years of data accumulation, i.e., population genes or physiological evolution. The application of pre-trained models is similar to the fine-tuning that individuals perform when facing small data. Following the development of the GPT series, we can clearly see the changes in the capabilities of pre-trained models and their corresponding application methods: from the parameter fine-tuning of the pre-trained model in GPT-1 (full fine-tuning/parameter-efficient fine-tuning), to prompt engineering after GPT-2 demonstrated zero-shot capabilities, to example design after GPT-3 exhibited contextual learning abilities, and finally to guiding the model directly through task descriptions after task emergence in GPT-3.5. OpenAI’s success largely stems from its leading cognition and consistent commitment: driving intelligence towards a more generalized direction by increasing data and model scale.

The transition from specialized models to generalized models has brought about the following four specific changes:

- From Closed Set to Open Set: Pre-trained models learn general knowledge from large-scale data, breaking the limitation of task solutions confined to specific categories. For example, CLIP can handle zero-shot visual understanding tasks by establishing associations between language and visual modalities; SAM can effectively segment unseen objects and scenes.

- Old Problems, New Understandings: The evolution of model application methods also provides us with new perspectives on traditional problems. For instance, few-shot learning has shifted from relying on labeled samples during the training phase to injecting contextual examples through prompts during the inference phase; zero-shot learning has gradually transformed into an open vocabulary learning problem due to the widespread existence of implicit knowledge bases like CLIP.

- Marginalization of Intermediate Tasks: The importance of intermediate tasks such as tokenization, part-of-speech tagging, and NER in the field of natural language processing is decreasing. Classic natural language processing borrowed from computational linguistics, where intermediate tasks were often designed by humans. For example, traditional dialogue systems were designed to include three modules: natural language understanding, dialogue management, and natural language generation, each subdivided into several intermediate tasks. However, as pre-training data reaches a certain scale in an autoregressive manner, these intermediate tasks and modules have been unified into the problem of predicting the next token. From the metaphorical image understanding example mentioned above, we can also observe similar changes in the visual and multimedia fields.

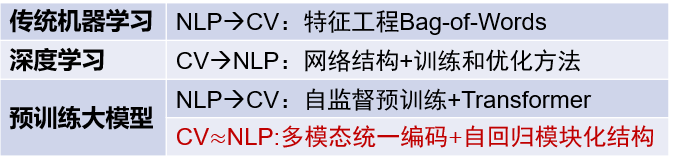

- Blurring of Domain Boundaries: The boundaries between computer vision (CV) and natural language processing (NLP) are increasingly blurring. In the traditional machine learning era, CV borrowed basic Bag-of-Words representation methods from NLP; in the early deep learning stage, NLP introduced network structures like MLP and ResNet from CV, as well as training and optimization techniques like dropout and batch normalization. In the era of pre-trained large models, CV first borrowed self-supervised pre-training and self-attention mechanisms from NLP, and with the introduction of visual GPT and video generation GPT models like LVM and VideoPoet, the two fields are moving towards a unified direction of multimodal encoding and autoregressive modular structures.

-

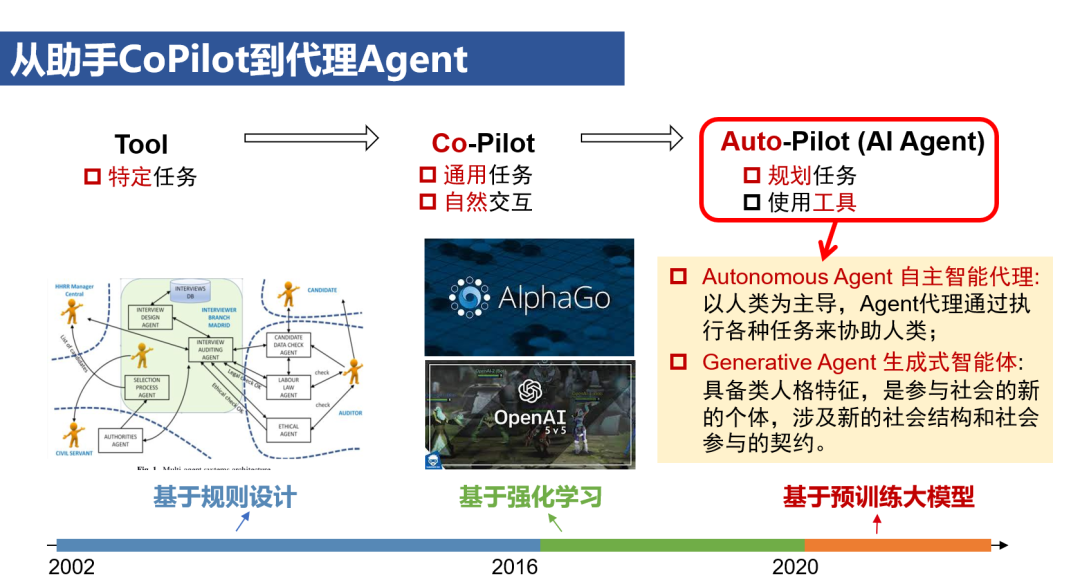

(4) AI Agents

The generality of pre-trained large models is not only reflected in content understanding and generation but also extends to thinking and decision-making capabilities. AI systems like Jasper and Midjourney, which handle general tasks and possess natural interaction capabilities, can be classified as CoPilots, while AI systems capable of planning tasks and using tools can be referred to as AutoPilots, i.e., AI Agents. In the CoPilot mode, AI acts as a human assistant, collaborating with humans in the workflow; in the AI Agent mode, AI acts as a human agent, independently undertaking most of the work, with humans only responsible for setting task goals and evaluating results.

It is worth noting that the concept of AI Agents has existed since the early days of artificial intelligence, having gone through two stages before the advent of pre-trained large models: rule-based design and reinforcement learning. The current discussion of AI Agents, more accurately, refers to AI Agents based on pre-trained large models. Compared to the previous two stages, which were designed for specific tasks and scenarios, the core characteristic of AI Agents based on pre-trained large models is their adaptability to general tasks and scenarios.

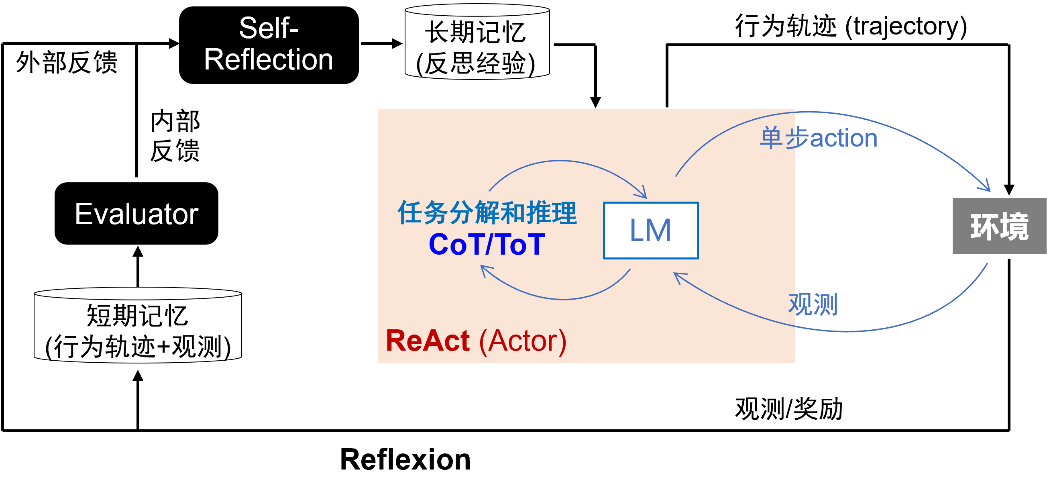

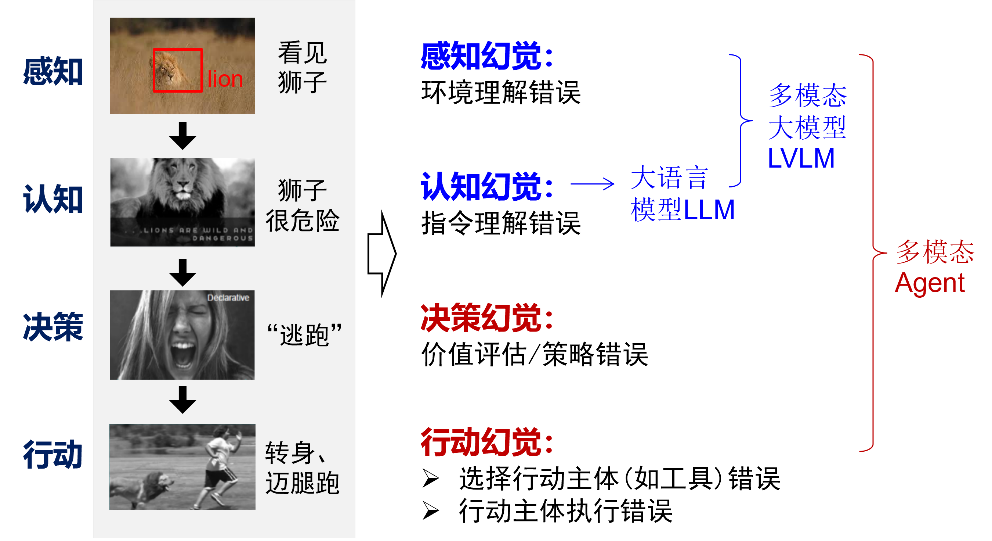

The main architecture of AI Agents includes Perception, Planning, Action, and Memory. In the action phase, AI Agents can rely on their large model capabilities or call external APIs or other models and tools to execute tasks. The planning process involves task decomposition and continuous optimization based on feedback. Task decomposition currently mainly adopts methods like chain of thought and thought trees, which mimic the human system two reasoning process by structurally organizing and refining thoughts to tackle complex problems. Feedback-based corrections are primarily achieved through two methods: one is ReAct, which combines reasoning and action in a single round, embodying the principle of “learning (acting) without thinking (reasoning) leads to confusion, while thinking without learning leads to danger”; the other is Reflexion, which can be seen as a language-based online reinforcement learning method capable of multi-round reflection from mistakes.

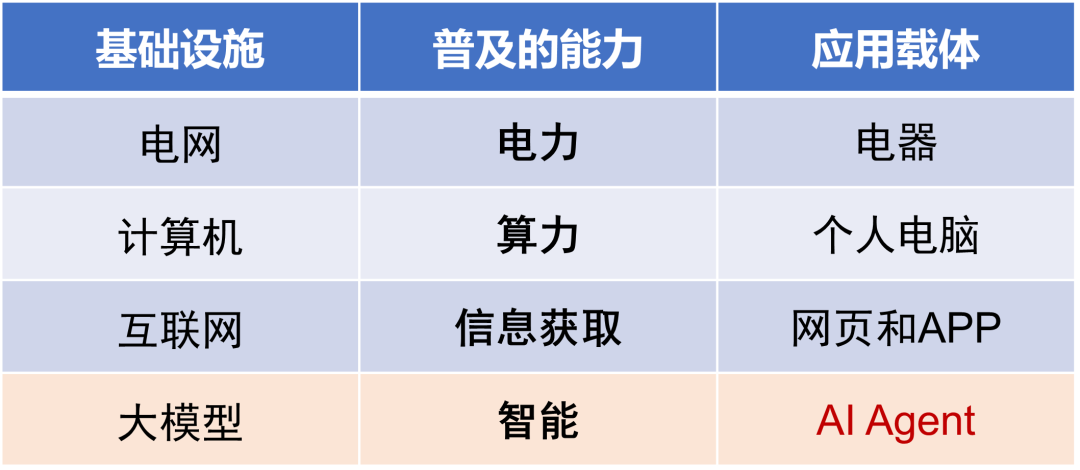

As large models gradually become the infrastructure of future society, similar to how the power grid, computers, and the internet became the infrastructure for the widespread availability of electricity, computing power, and information access, the cost of intelligent services will also significantly decrease. AI Agents, as the application carriers of intelligent services, will drive the transformation of AI-native technologies.

From the C-end perspective, AI Agents will become the information entry point of the intelligent era: users will no longer need to log into different websites/apps to complete various tasks but will interact uniformly with various services through AI Agents. AI-native applications, operating systems, and even hardware will surpass the current graphical user interface (GUI), integrating more natural language user interfaces (LUI) to provide a more intuitive and convenient interaction experience.

From the B-end perspective, Machine Learning as a Service (MaaS) provides machine learning models as a service, achieving an intelligent upgrade of cloud services compared to SaaS. Furthermore, Agent as a Service (AaaS) further positions intelligent agents as a service, promoting further automation upgrades of cloud services. Some believe that software production will enter a 2.0 era similar to 3D printing, characterized by (1) AI-native – designing natural language interfaces for AI use, (2) solving complex tasks – planning and executing task chains, (3) personalization – meeting long-tail demands. In this trend, software aimed at enterprises may no longer merely be tools to assist employees but may act as digital employees, replacing part of the basic and repetitive work.

AI Agents based on pre-trained large models still face several technical challenges:

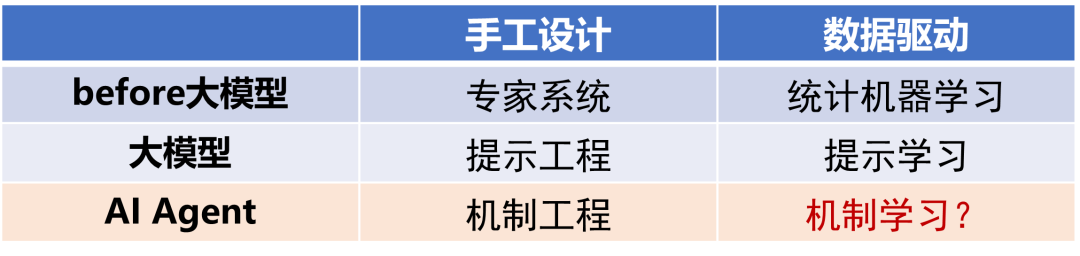

- Complexity of Mechanism Engineering Design and Application Generalization: Currently, the tool invocation and task planning of AI Agents often involve complex mechanism engineering, which requires manually writing prompt frameworks containing logical structures and reasoning rules through heuristic methods. This manual design approach is difficult to adapt to the ever-changing environment and user needs. According to the development pattern from manual design to data-driven learning, mechanism learning for AI Agents is a possible solution to achieve more flexible and adaptive intelligent agent behavior.

- Trustworthiness and Alignment: With the addition of memory, execution, and planning stages, new issues regarding trustworthiness and alignment for AI Agents need to be addressed. For example, in terms of adversarial robustness, it is essential to consider not only the model’s resistance to attacks but also the safety of memory carriers, toolsets, and planning processes; when dealing with hallucination issues, it is necessary to consider not only hallucinations during perception and cognition stages but also those during decision-making and action stages.

- Consistency of Long Context Planning and Reasoning: When handling long dialogues or complex tasks, Agents need to maintain contextual coherence, ensuring that their planning and reasoning processes align with the user’s long-term goals and historical interactions.

- Reliability of Natural Language Interfaces: Compared to the strict syntax and structure of computer languages, natural language has ambiguity and vagueness, which may lead to errors in understanding and executing instructions.

Trend 2: From Capability Alignment to Value Alignment – Trustworthiness and Alignment

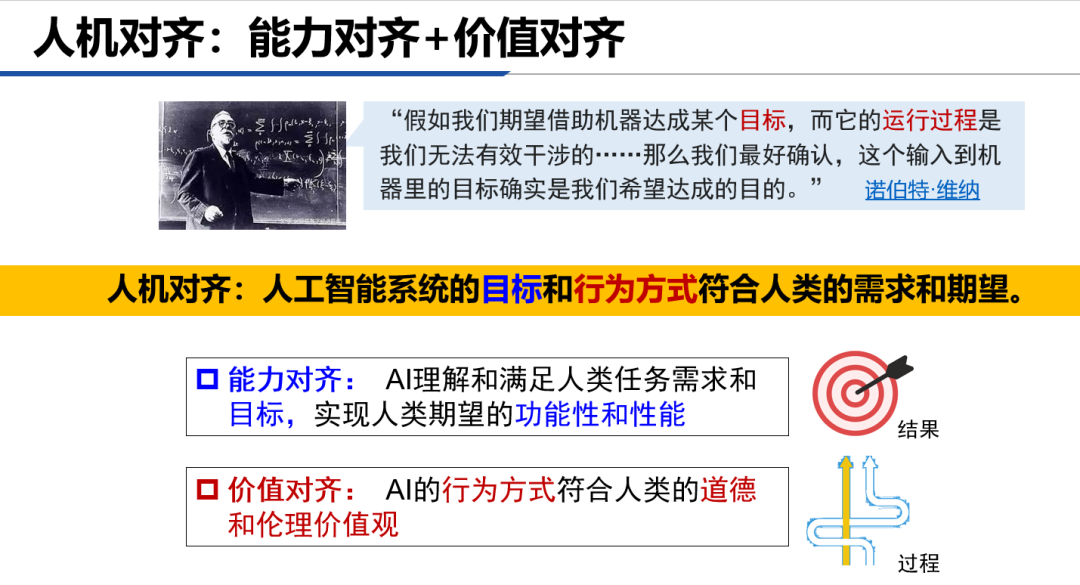

From the definition of artificial intelligence, its original intention to align with humans can be seen. Whether based on logical deduction knowledge engineering methods or inductive summarization statistical machine learning methods, the goal is to achieve alignment with humans. Taking machine learning methods as an example, in the supervised learning paradigm, humans label training datasets (X,Y), and the model learns the mapping f() from input X to output Y, which can be seen as a form of human knowledge distillation; in unsupervised and self-supervised paradigms, the similarity measures and proxy tasks defined by humans (e.g., generative proxy tasks aimed at reconstructing human language or natural images) also convey human knowledge to the model. By aligning the training objective function with humans, the model has successively passed the Turing test on a series of tasks representing different human capabilities, achieving a certain degree of capability alignment with humans.

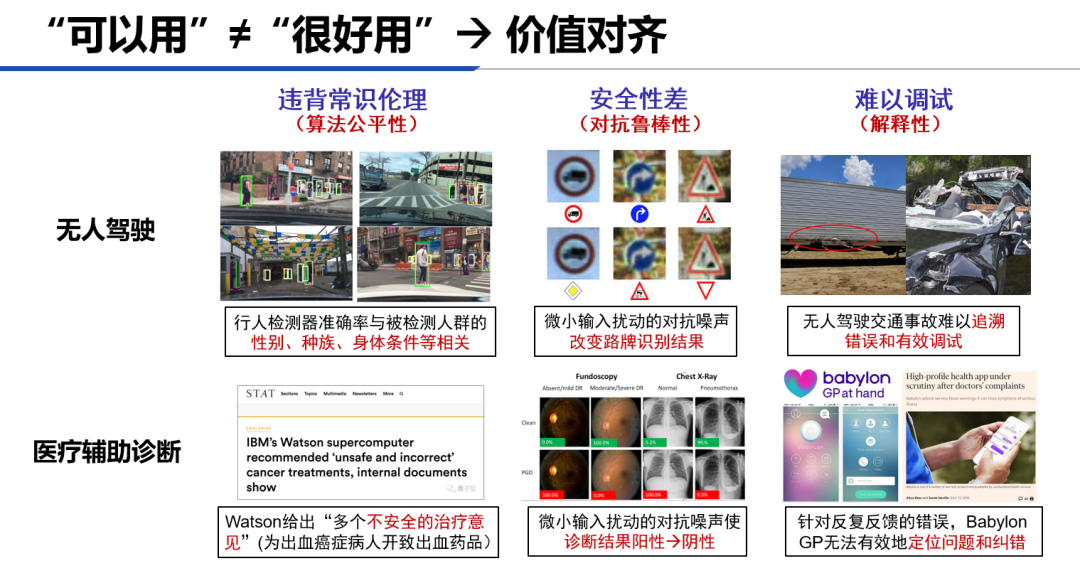

However, in some areas with strict requirements for robustness, fairness, and interpretability, AI models still struggle to achieve industrial-scale applications.

Currently, there is no consensus on the standards for achieving AGI. If human-machine alignment is viewed as a standard for achieving AGI, then in addition to capability alignment at the goal level, value alignment at the behavioral level must also be considered. Capability alignment and value alignment can be likened to results and processes: just as reaching a destination is the result, the mode of transportation and the route chosen can vary.

-

(1) Trustworthiness: Value Alignment in the Era of Small Models

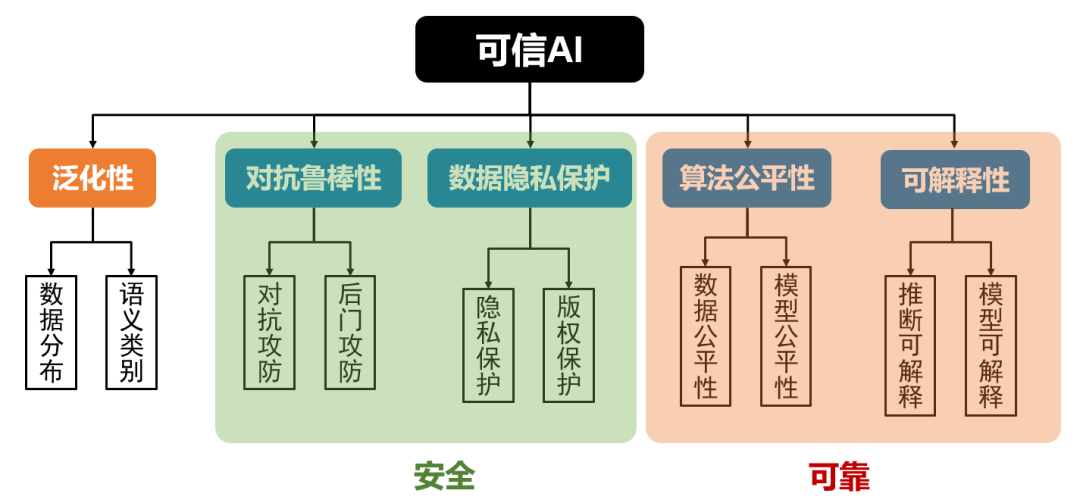

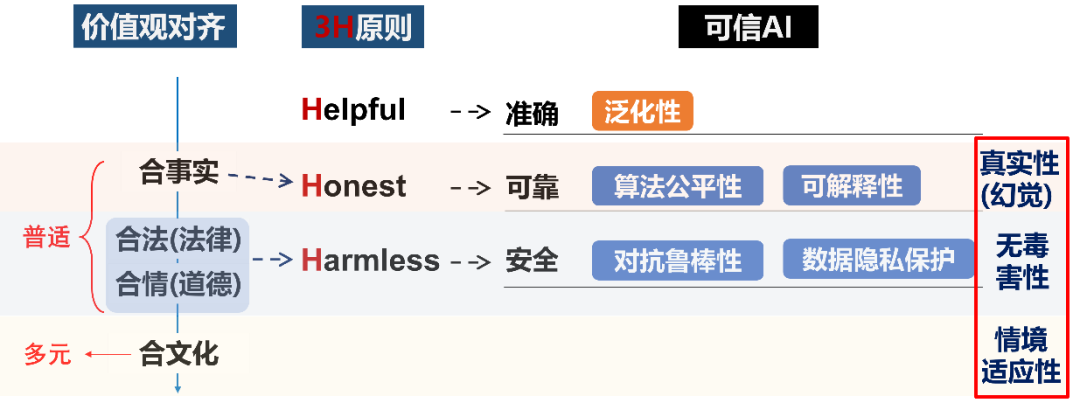

It can be observed that the adversarial robustness, algorithmic fairness, interpretability, and data privacy protection required by value alignment are the four core dimensions constituting classic trustworthy AI. Trustworthy AI is built on the foundation of generalization, which also corresponds to the fact that capability alignment is a prerequisite for value alignment. Conceptually, we can view value alignment as the extension of trustworthy AI, encompassing not only technical alignment but also broader ethical and social responsibilities.

-

(2) Value Alignment in the Era of Large Models

In the era of pre-trained large models, as AI capabilities continue to improve, the breadth and depth of applications will significantly increase. With greater capability comes greater responsibility. As people enjoy the convenience and productivity improvements brought by AI, their attitude towards AI will gradually shift from adaptation to reliance. When people begin to rely on AI to replace their own learning, thinking, and even decision-making, higher demands for AI’s trustworthiness and value alignment will arise. In addition to transferring the research focus of classic trustworthiness issues from specialized small models to pre-trained large models, we also face a series of new trustworthiness and value alignment challenges.

Anthropic proposed the “3H Principle” of human-machine alignment: Helpful corresponds to the requirement for accuracy in capability alignment; while Honest and Harmless roughly correspond to the requirements for reliability and safety in value alignment. The framework proposed by Professor Qin Bing from Harbin Institute of Technology sets universal and diverse value alignment goals from the dimensions of factuality, legality, emotionality, and cultural appropriateness. Compared to the issues in classic trustworthy AI, under the new value alignment framework, especially considering the characteristics of generative AI, new value alignment issues such as authenticity, non-harmfulness, and contextual adaptability need to receive more attention.

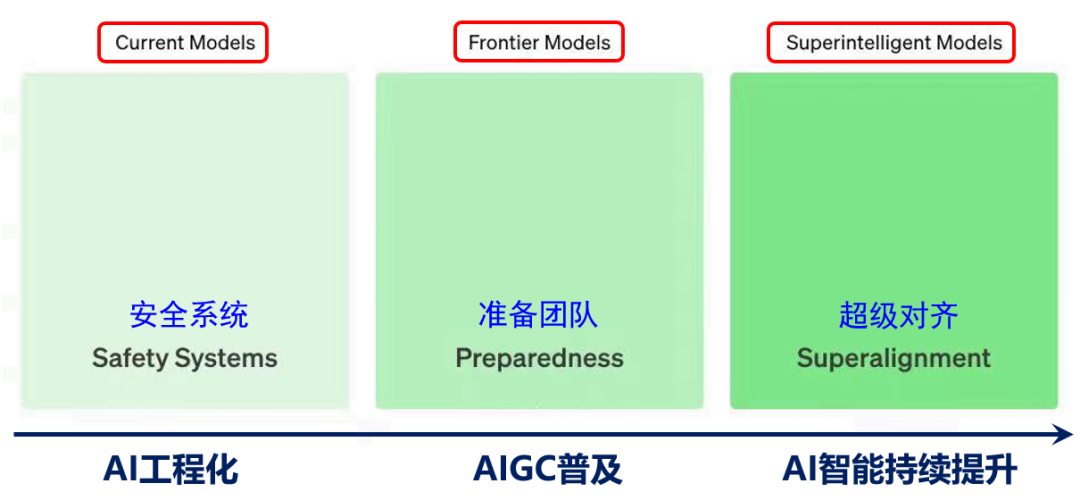

According to different time frames, OpenAI has established three alignment and safety teams, focusing on current cutting-edge models, transitional models, and future supermodels. Based on this setup, the following discusses three stages of value alignment research in the era of large models, combined with our own research examples.

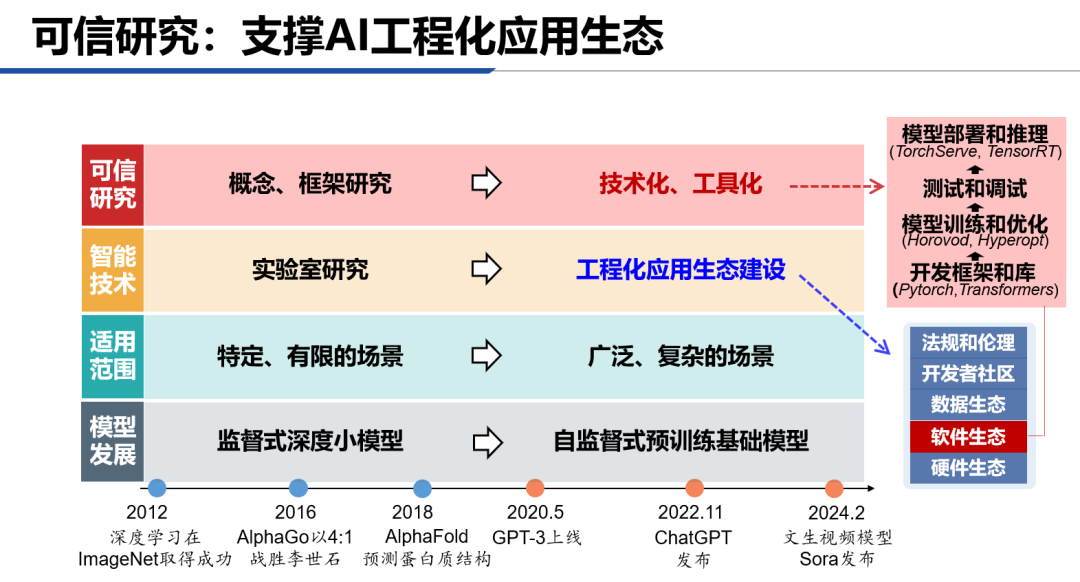

AI Engineering: Trustworthy Large Model Testing, Diagnosis, and Repair

The construction of an application ecosystem is a hallmark of technological maturity. Taking the development from software science to software engineering as an example, a complete software development cycle has been formed through the establishment of testing environments, toolchains, development platforms, and other infrastructures, improving key DevOps processes such as software construction, deployment, and maintenance. The AI engineering application ecosystem involves managing the entire intelligent lifecycle. The software ecosystem AIOps/LMOps provides necessary tools and services to ensure the efficiency and stability of model development, testing, deployment, and operation. Research on trustworthiness and value alignment needs to delve into the construction and implementation of the AI application ecosystem, shifting from conceptual and framework research to more practical technical practices, and supporting model research and application developers with solutions in the form of tools and integrated modules.

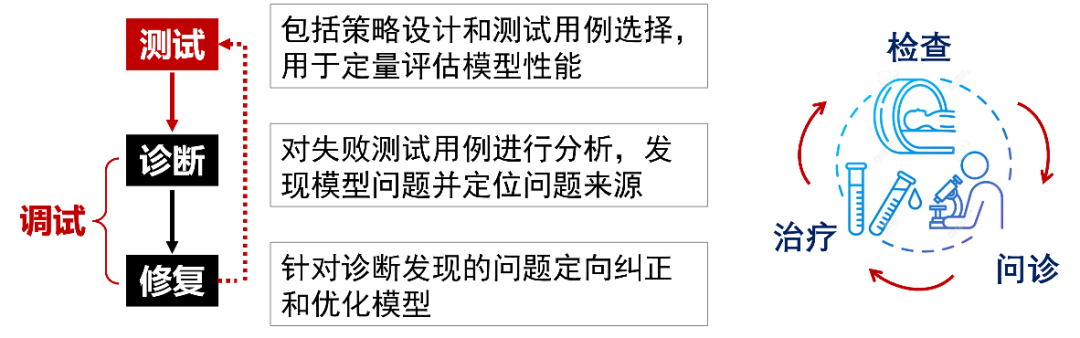

Using software engineering as an example, the core of its application ecosystem lies in constructing a complete testing-debugging closed-loop system, including performance evaluation, defect identification and localization, and regression testing. Implementing a testing-debugging closed loop can improve software reliability and reduce failure rates and safety risks. Due to the black-box problem brought by data-driven approaches, machine learning models cannot be debugged directly like software. By using interpretability methods to diagnose pre-trained models, problematic modules or model parameters can be identified, and targeted repair measures can be taken. The process of testing, diagnosing, and repairing models can be likened to the examination, consultation, and treatment stages of visiting a hospital. After completing model repairs, retesting is necessary to confirm that the issues have been resolved and that no new problems have been introduced, thus forming a testing-debugging closed loop.

Testing, diagnosis, and repair technologies should support large model research and downstream application development in a modular or tool form:

- Support for Model Research and Development: Testing and debugging technologies should be integrated into modules embedded in existing research and development processes. These modules need to be custom-designed for the characteristics of pre-trained models, enabling researchers to quickly assess model performance, accurately locate issues, and implement effective optimization measures.

- Support for Downstream Application Development: For downstream application development based on large models, testing and debugging tools can be provided as cloud services on large model platforms. This way, developers can conduct detailed assessments and adjustments of models based on specific application scenarios, simplifying the deployment and operation processes of models and enhancing the reliability and safety of downstream applications.

AIGC Popularization: OOD Issues of Natural-Synthetic Data

From ChatGPT, Midjourney to Sora and Suno, the quality of AI-generated content in text, images, videos, and music continues to improve, making it increasingly difficult for humans to distinguish. The high authenticity of AIGC content can confuse human judgment, with the foremost challenge being digital forensics and forgery detection, raising concerns about false information. On the other hand, as AI generation becomes a tangible productivity tool, the trend of AIGC being ubiquitous is likely unstoppable. Recently, the fully AI-generated trailer “Barbie × Oppenheimer” sparked viral discussions, and Gartner predicts that by 2030, the proportion of AI-generated content in major film works will rise from 0% in 2022 to 90%.

As society gradually adapts to and accepts AI-generated content, especially in scenarios where AI replaces humans, many situations will arise where AI tools interact with AI-generated content. This raises a new question: currently, models designed for natural data in terms of training data, structure, and training methods may encounter issues when applied to AI-generated content. For instance, AI-generated text and images may introduce biases in information retrieval and amplify such biases in the retrieval loop; compared to natural images, AI-generated images are more prone to hallucinations. It seems that AIGC not only confuses humans but also confuses AI itself.

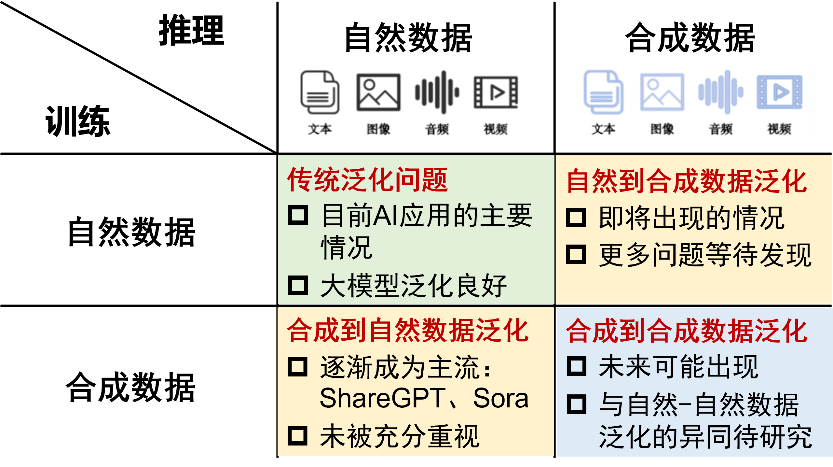

As AI-generated data continues to increase, we may encounter the following situations:

- Traditional Generalization Problem: Training on natural data and applying it to natural data. This has been the primary focus of research for the past few decades, with many tasks being effectively solved under laboratory conditions.

- Natural to Synthetic Data Generalization: Training on natural data and applying it to synthetic data. This is the situation discussed in the previous work.

- Synthetic to Natural Data Generalization: Training on synthetic data and applying it to natural data. For example, the ShareGPT dataset is widely used in training large language models, and Sora may use game engines to synthesize training data. Synthetic data can compensate for the shortcomings of natural data, driving continuous improvement in model capabilities. This situation is expected to continue growing.

- Synthetic to Synthetic Data Generalization: Training on synthetic data and applying it to synthetic data, which is an internal generalization problem of synthetic data.

Situations 2 and 3 can be viewed as a broad OOD problem, termed “Natural-Synthetic OOD”. In fact, even situation 4 should consider mixing natural and synthetic data in some way for model training. Understanding the differences between natural and synthetic data is crucial not only for authenticity discrimination but also for effectively using synthetic data for training in the future and interacting with synthetic data in applications.

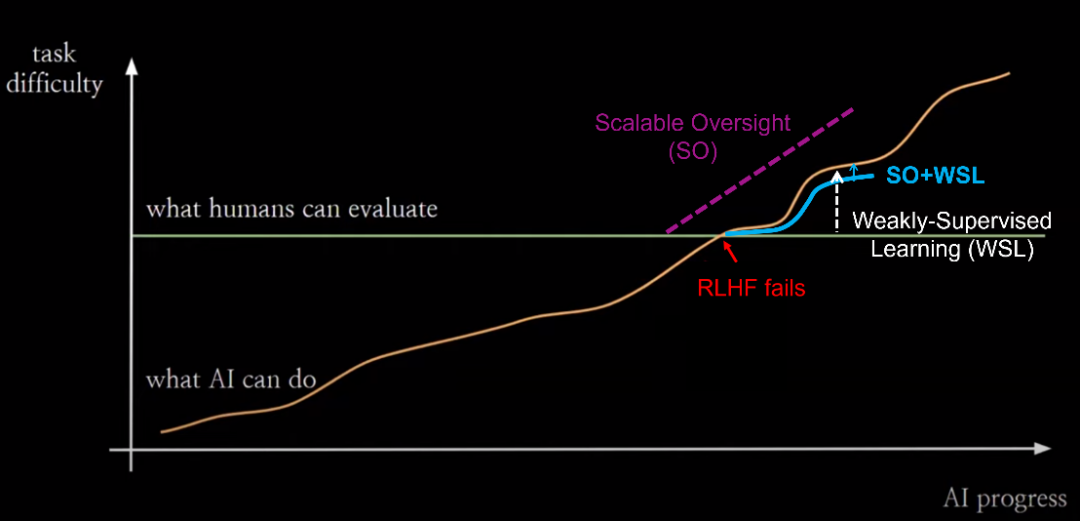

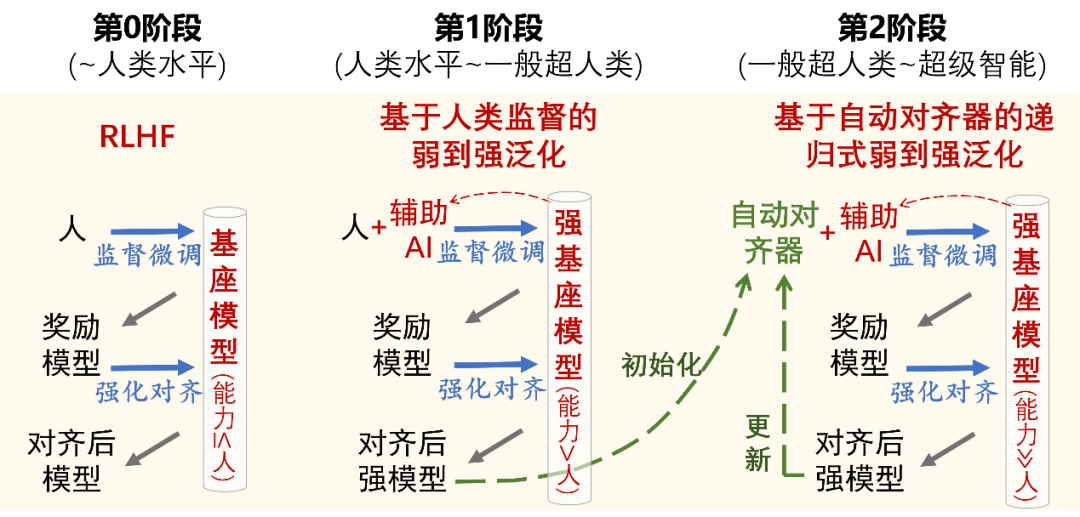

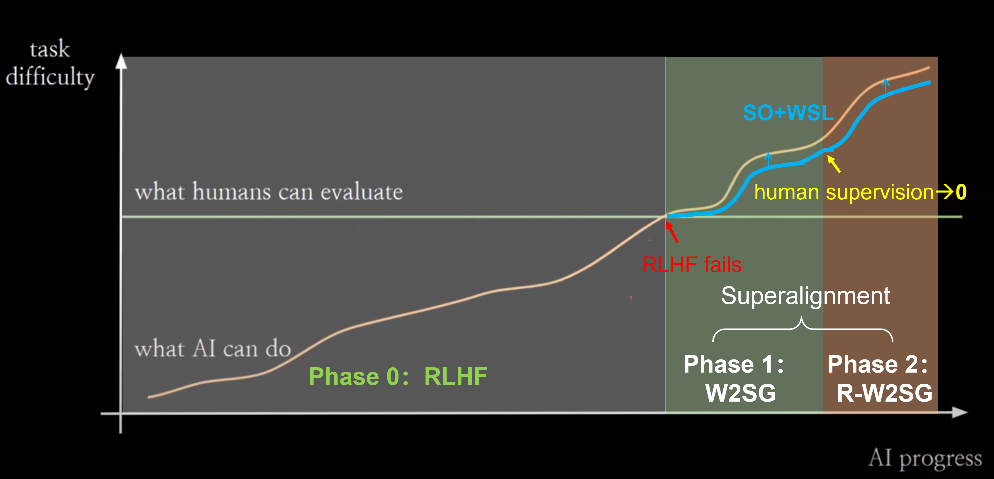

Continuous Improvement of AI Intelligence: Super Alignment

Currently, the mainstream method for achieving value alignment is RLHF. RLHF is very effective when human evaluators can provide high-quality feedback signals. However, on the timescale of AI capability evolution, human evaluators’ assessment abilities are relatively fixed. Beyond a certain critical point, humans will no longer be able to provide effective feedback signals for aligning AI systems.

The core issue of super alignment is how to allow weak supervisors to control models that are much smarter than they are when this situation occurs.

The Weak-to-Strong Generalization (W2SG) framework proposed by OpenAI’s super alignment team provides a new solution for super alignment by simulating human supervisors with weak teacher models and strong student models simulating supervised models that exceed human capabilities, making empirical research on super alignment possible. Scalable Oversight (SO) aims to enhance supervisory systems, and combining scalable oversight can reduce the capability gap between weak supervisors and strong students, better tapping into the potential of the weak-to-strong generalization framework. Furthermore, weak supervision learning and weak-to-strong generalization share similar problem settings: how to better utilize incomplete and flawed supervisory signals. Therefore, integrating scalable oversight and weak supervision learning under the weak-to-strong generalization framework can stimulate the capabilities of more powerful models from the perspectives of enhancing supervisory signals and optimizing the utilization of supervisory signals.

As AI capabilities further improve, a second critical point may be reached: the role of human supervision gradually diminishes to zero. At this point, the already aligned strongest student model can replace humans, becoming an automated alignment evaluator, which continues to supervise even stronger student models as a new weak teacher model. The automated aligner can undergo recursive updates (Recursive W2SG, abbreviated as R-W2SG): using the supervised aligned strong student model to update the automated aligner, achieving the next generation of weak-to-strong generalization, ensuring that there is only one generation of capability gap between the weak teacher model and the strong student model.

Trend 3: From Design Goals to Learning Goals – Pre-training + Reinforcement Learning

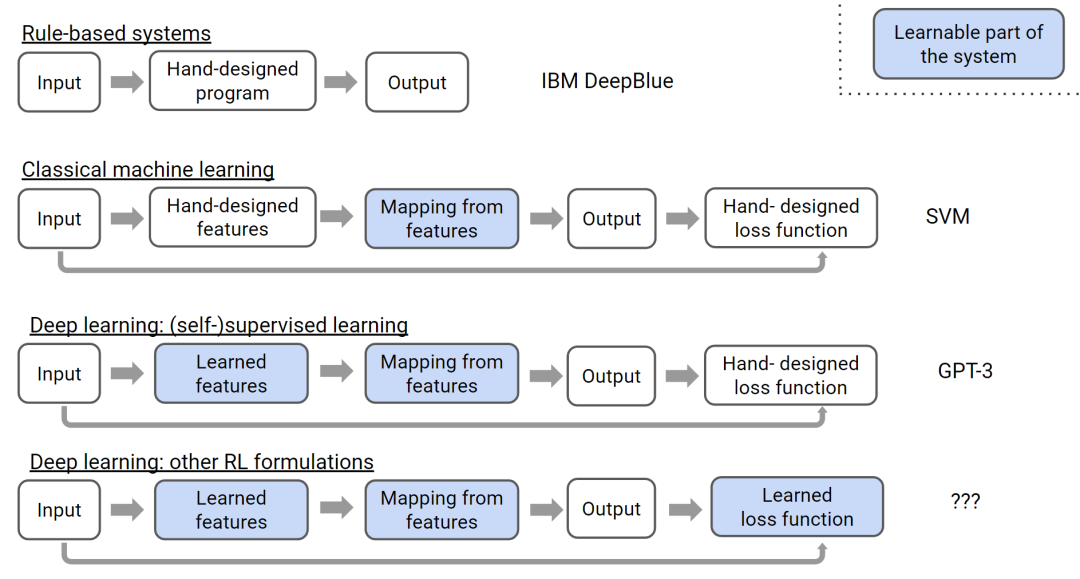

OpenAI researcher Hyung Won Chung summarized the development of artificial intelligence from expert systems, traditional machine learning, deep (supervised) learning to deep reinforcement learning from the perspective of changes in manually designed and automatically learned modules. In traditional machine learning and deep learning, the objective function needs to be manually designed, focusing on learning feature representations and the mapping from features to objectives. Reinforcement learning treats the objective function as a learnable module, capable of solving tasks where the objectives are difficult to define directly.

Comparing the recent major development nodes in machine learning, deep learning and large-scale pre-training correspond to changes in model structure and data labeling requirements, while reinforcement learning focuses on changes in objective functions: learning through interaction with the environment in the absence of clear objective guidance. Combining pre-training with reinforcement learning is a research direction with great potential: pre-training compresses existing human knowledge and experience, but is constrained by probabilistic modeling, making it difficult to create unknown knowledge as low-probability events; reinforcement learning introduces randomness by balancing the use of existing information and exploring unknown knowledge, providing opportunities to break the limitations of pre-trained models that rely on human design, achieving higher levels of intelligence.

-

(1) Pre-training to Acquire Basic Capabilities, Reinforcement Learning for Value Alignment

Currently, large model training typically follows three main steps: pre-training, supervised fine-tuning, and reinforcement learning based on feedback. The pre-training phase learns basic capabilities such as semantics and grammar from a large amount of unlabeled data. Supervised fine-tuning enhances the model’s instruction-following capabilities through high-quality prompt-answer pairs, ensuring that the output format of answers meets expectations. The third step aims to align the model’s output with human preferences and values. Due to the complexity of human values, direct definition is very challenging. Therefore, in RLHF, a reward model is first learned as a proxy for human preferences; then, through interaction with the environment (i.e., the reward model), the model learns and gradually aligns with human values.

-

(2) Pre-training Imitates Humans, Reinforcement Learning Surpasses Humans

AlphaGo’s training involved both imitation learning and self-play reinforcement learning, exploring strategies that surpass human experience; in contrast, current pre-trained large models rely solely on imitation learning, learning from corpora that reflect human activities, imitating existing human knowledge and expressions. Drawing from AlphaGo’s reinforcement learning reward design, designing a self-play task for language modeling similar to winning or losing in Go may break through the limitations of human knowledge. Of course, the complexity and diversity of language make defining what constitutes “victory” challenging; a possible approach is to assign different roles or positions to the pre-trained model, enhancing capabilities through competitive or collaborative game tasks.

Demis Hassabis believes that creativity can be divided into three levels: interpolation, extrapolation, and invention. According to this classification, pre-trained large models currently remain at the first level: interpolating and combining existing knowledge, but have clearly reached a top-level performance. The 37th move in the second game of AlphaGo against Lee Sedol represents the second level of extrapolation: a strategy that human players had never seen before. By combining pre-training with reinforcement learning to break through the limitations of human supervision, it can be seen as exploring the second level of intelligence for large models. Regarding the third level, Hassabis believes it focuses on “not making a good move in Go, but inventing a new board game.” Correspondingly, for large models, it may require them to discover new mathematical conjectures or theorems. Reflecting on AlphaGo Zero, which abandoned imitation learning of human game records and autonomously learned from scratch under only win-loss rules, could a similar approach, letting models explore from zero without fitting to training data, break through human grammatical limitations or even develop their own language, be a solution for achieving third-level intelligence?

Prospects

-

(1) “True” Multimodality: Returning from Fine-tuning to Pre-training

Although the success of large language models in the past year has prompted many multimodal models to choose fine-tuning visual and audio encoders based on existing large language models, in the long run, the development of multimodal large models tends to return to joint pre-training of various modal data from scratch. While language is key to distinguishing human intelligence from other animals, the evolution of language ability in humans took about 500,000 years to develop. The evolution of the human visual system took hundreds of millions of years, completing long before language ability formed. Additionally, educational experiences suggest that coordinating multimodal perception aids in children’s intellectual development.

Currently, we have seen some models, such as Gemini, re-adopt the joint pre-training approach. Many predict that the next generation of multimodal large models, such as GPT-5, especially when incorporating video generation capabilities, will more likely adopt a unified joint pre-training method. Joint pre-training can comprehensively understand and integrate information from different modalities at a foundational level, establishing deeper connections and synergies.

-

(2) System 1 vs. System 2

While AI Agents force models to engage in slow reasoning through complex prompt designs, the system one-style processing of corpora during the pre-training phase limits their complex reasoning capabilities. It is rumored that DeepMind and OpenAI are planning to enhance the utilization of training data by incorporating strategies like tree search, essentially guiding models to engage in system two-style learning during the training phase. This training method is expected to enable models to better leverage their system two capabilities during reasoning.

An interesting question arises: if system two learning is employed during the training phase while system one quick responses are used during reasoning, what effects will this produce? Reflecting on MuZero, which employed MCTS self-play methods during training but did not execute online MCTS search during reasoning, especially in scenarios requiring quick responses, it directly used the trained policy network for decision-making. This can be understood as the model acquiring complex reasoning capabilities through system two reinforcement training and solidifying these capabilities into system one intuition. This may represent a more ideal application scenario: models deeply learn and master complex reasoning capabilities during training, while applying these capabilities in a more direct and straightforward manner in applications.

-

(3) Understanding and Learning Based on Interaction

Li Feifei pointed out that the North Star task for AI from 2020 to 2030 is to achieve proactive perception and interaction with the real world. If statistical methods acquire intelligence by mimicking the results of intelligence, interaction can be seen as a means of acquiring intelligence through simulation. Compared to the formal expressions and automatic implementation methods of logic deduction and inductive summarization, causality may be an important solution for achieving intelligence through interaction. Causality is expected to address the limitations of statistical machine learning in terms of data assumptions, optimization objectives, and learning mechanisms through interventions, uncertain inference, and counterfactual reasoning.

Understanding and learning based on interaction, especially within the framework of combining pre-training with reinforcement learning, provides new perspectives for the development of artificial intelligence. For instance, placing multimodal pre-trained models within the framework of embodied intelligence allows them to learn and self-enhance through interactions with their environment. By interacting with physical and social environments, models can enhance their common-sense understanding and adaptability to the physical world and social interactions.

-

(4) Superintelligence vs. Super Alignment

The recent events at OpenAI have brought the concepts of “superintelligence” and “super alignment” into the public eye. As OpenAI’s technical leader, Ilya Sutskever has long been committed to promoting the continuous enhancement of AI intelligence. With the establishment of the super alignment team, and his personal leadership of the team, his focus has shifted to alignment and safety issues.

Superintelligence and super alignment represent a major thread in the future development of artificial intelligence: one explores the limits of capability, while the other ensures the safety baseline, one for crafting the sharpest spear, the other for constructing the sturdiest shield.

References:

[1] Leslie Valiant: “Evolution as Learning.” Talk @Theory-Fest 2019-2020: Evolution.

[2] Mobile-Agent: Autonomous Multi-Modal Mobile Device Agent with Visual Perception. 2024.

[3] Benign Adversarial Attack: Tricking Models for Goodness. 2022.

[4] Towards Accuracy-Fairness Paradox: Adversarial Example-based Data Augmentation for Visual Debiasing. 2020.

[5] A Review of Interpretability Research for Deep Models Aimed at Image Classification. 2022.

[6] Adversarial Privacy-Preserving Filter. 2020.

[7] Towards Adversarial Attack on Vision-Language Pre-training Models. 2022.

[8] Counterfactually Measuring and Eliminating Social Bias in Vision-Language Pre-training Models. 2022.

[9] Exploring the Privacy Protection Capabilities of Chinese Large Language Models. 2024.

[10] Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback. 2022.

[11] Qin Bing. “Safety Testing and Human Value Alignment of Large Language Models” Report, 2023.

[12] An LLM-free Multi-dimensional Benchmark for MLLMs Hallucination Evaluation. 2024.

[13] CValues: Measuring the Values of Chinese Large Language Models from Safety to Responsibility. 2024.

[14] CDEval: A Benchmark for Measuring the Cultural Dimensions of Large Language Models. 2024.

[15] LLMs may dominate information access: Neural retrievers are biased towards LLM generated texts. 2024.

[16] AI-Generated Images Introduce Invisible Relevance Bias to Text-Image Retrieval. 2024

[17] AIGCs Confuse AI Too: Investigating and Explaining Synthetic Image-induced Hallucinations in Large Vision-Language Models. 2024.

[18] Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. 2023

[19] Improving Weak-to-Strong Generalization with Scalable Oversight and Ensemble Learning. 2024 WeChat public account article: “Superintelligence is the spear, super alignment is the shield.”

[20] WeChat public account article: “Two Conjectures about Q*(Q-star).”

[21] A Reconfigurable Data Glove for Reconstructing Physical and Virtual Grasps. 2023.

[22] Emergent Tool Use from Multi-Agent Interaction. 2020

Reply “Dataset” in the public account to obtain a compilation of over 100 resources in various directions of deep learning

Jishi Insights

Technical Column: Detailed Interpretation Column on Multimodal Large Models|Understanding the Transformer Series|ICCV2023 Paper Interpretation|Jishi LiveJishi Perspective Dynamics: Welcome to apply for the Jishi Perspective 2023 Ministry of Education Industry-University Cooperation Collaborative Education Project|New Horizons + Smart Brain, “Drones + AI” become good helpers for intelligent road inspection!Technical Review: A 40,000-word detailed explanation of Neural ODE: Using Neural Networks to Describe Non-discrete State Changes|What are the details of the transformer? 18 Questions about Transformers!

Click to read the original text and enter the CV community

Gain more technical insights