From HTTP 1.0 to HTTP/2: The Evolution of Internet Protocols (Part 1)

1. Introduction: Why is HTTP the “Universal Language” of the Internet?

When you open the Taobao homepage, your browser may carry out more than 50 client-server exchanges in the background. The rules governing these exchanges are defined by the HTTP protocol. From HTTP 1.0 in 1996 to HTTP/2, how has HTTP evolved to carry the enormous volume of web requests made every day? This article dissects the evolution of HTTP from the perspective of its underlying protocol logic.

2. HTTP 1.0: The “First Generation Design” of Internet Protocols

(1) Core Working Mode of HTTP 1.0

The communication rules of HTTP 1.0 can be summarized as “one request, one connection”:

1. The client initiates a TCP connection → sends an HTTP request

2. The server returns a response → immediately closes the connection
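To make the "one request, one connection" rule concrete, here is a minimal Python sketch. It assumes a local `socket.socketpair()` as a stand-in for a real TCP connection, and `fake_server` is a hypothetical server that behaves like an HTTP 1.0 server: it answers once and then closes. Because nothing else marks the end of the response, the client simply reads until the connection is closed.

```python
import socket
import threading

def build_http10_request(host, path):
    # HTTP 1.0 request line plus a Host header (optional in 1.0, but harmless).
    # No Keep-Alive is requested, so the server will close after responding.
    return (f"GET {path} HTTP/1.0\r\n"
            f"Host: {host}\r\n"
            "\r\n").encode("ascii")

def read_until_close(sock):
    # The end of the response is signaled only by the server closing the
    # connection, so keep reading until recv() returns b"".
    chunks = []
    while True:
        data = sock.recv(4096)
        if not data:
            break
        chunks.append(data)
    return b"".join(chunks)

def fake_server(sock):
    # Hypothetical stand-in server: read the request headers, send one
    # response, then close the connection (HTTP 1.0 behavior).
    buf = b""
    while b"\r\n\r\n" not in buf:
        data = sock.recv(4096)
        if not data:
            break
        buf += data
    sock.sendall(b"HTTP/1.0 200 OK\r\nContent-Type: text/plain\r\n\r\nhello")
    sock.close()

client, server = socket.socketpair()
t = threading.Thread(target=fake_server, args=(server,))
t.start()
client.sendall(build_http10_request("example.com", "/index.html"))
response = read_until_close(client)
client.close()
t.join()
print(response.decode("ascii"))
```

Every additional resource would repeat this whole sequence on a brand-new connection.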

This worked reasonably well for early web pages, which contained little more than text and needed only one or two requests per visit. As web pages became more complex, however, problems emerged:

(2) Two Fatal Flaws of HTTP 1.0

1. Severe performance loss

– Each resource (image, JS, CSS) requires a new TCP connection

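– Modern web pages often load 30 or more resource files; since every new TCP connection costs at least one extra round trip for the handshake, connection setup alone can take more than half of the total load time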

2. Lack of server push

– Communication is strictly one-way: the client sends a request, and the server responds

– The server cannot initiate messages, so push notifications, real-time data updates, and similar needs cannot be met directly
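To see why per-resource connections hurt, here is a back-of-the-envelope Python model. It assumes resources are fetched one after another, that each TCP handshake and each request/response costs exactly one round-trip time (RTT), and it ignores TLS, slow start, bandwidth, and parallel connections; `estimate_load_ms` is a hypothetical helper.

```python
def estimate_load_ms(num_resources, rtt_ms, keep_alive):
    """Serial-fetch model: one RTT per request/response, plus one RTT for
    the TCP handshake of every connection that has to be opened."""
    connections = 1 if keep_alive else num_resources
    handshake_ms = connections * rtt_ms   # SYN / SYN-ACK round trip per connection
    request_ms = num_resources * rtt_ms   # one request/response round trip each
    return handshake_ms + request_ms

print(estimate_load_ms(30, 50, keep_alive=False))  # 3000
print(estimate_load_ms(30, 50, keep_alive=True))   # 1550
```

In this simplified model, half of the time without connection reuse goes to handshakes, which is exactly the cost that connection reuse targets.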

(3) The First Optimization of HTTP 1.0: Keep-Alive and Content-Length

1. Keep-Alive connection reuse

– The client adds `Connection: Keep-Alive` to its request headers, and a server that supports the extension replies with the same header

– The server keeps the connection open after responding, so subsequent requests can reuse it

– The server closes a connection that stays idle longer than its keep-alive timeout (e.g., 15 seconds), which it can advertise with a header such as `Keep-Alive: timeout=15, max=100`

2. Content-Length data identifier

– Problem: If the connection is no longer closed after each response, how does the client know when a response has been fully received?

– Solution: The server adds `Content-Length: 1024` to the response headers

– The client treats the response as complete once it has read 1024 bytes of body
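The following Python sketch shows why `Content-Length` matters once connections are reused. An `io.BytesIO` buffer stands in for a kept-alive connection carrying two responses back to back; `read_response` is a hypothetical helper, not a standard-library API, and it assumes every response carries a `Content-Length` header.

```python
import io

def read_response(stream):
    """Read one response from a reused connection, using Content-Length
    to find where the body ends (the connection itself stays open)."""
    status_line = stream.readline().decode("ascii").rstrip("\r\n")
    headers = {}
    while True:
        line = stream.readline().decode("ascii").rstrip("\r\n")
        if not line:  # an empty line ends the header section
            break
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    length = int(headers["content-length"])  # assumed present in this sketch
    body = stream.read(length)  # read exactly Content-Length bytes, no more
    return status_line, headers, body

# Two responses arriving back to back on one (simulated) kept-alive connection.
conn = io.BytesIO(
    b"HTTP/1.0 200 OK\r\nConnection: Keep-Alive\r\n"
    b"Keep-Alive: timeout=15, max=100\r\nContent-Length: 5\r\n\r\nhello"
    b"HTTP/1.0 200 OK\r\nConnection: Keep-Alive\r\nContent-Length: 3\r\n\r\nbye"
)
first = read_response(conn)
second = read_response(conn)
print(first[2], second[2])  # b'hello' b'bye'
```

Without the length, the client could not tell where `hello` ends and the next status line begins.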

3. HTTP 1.1: The “Protocol Revolution” that Dominated for Nearly 20 Years

(1) Connection reuse becomes standard

1. Keep-Alive is enabled by default

– Connections are persistent by default, so there is no need to send `Connection: Keep-Alive`; either side can send `Connection: close` to close the connection after the current response

2. Chunk mechanism solves the dynamic content problem

– Problem: For dynamically generated pages, the server often cannot know the Content-Length in advance without buffering the whole response

– Solution: Chunked transfer encoding (`Transfer-Encoding: chunked`)

▶ The response body is split into multiple chunks, each prefixed by its length in hexadecimal and `\r\n`

▶ A zero-length chunk followed by an empty line, `0\r\n\r\n`, marks the end
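The chunk framing rules above can be sketched in Python. `encode_chunked` and `decode_chunked` are hypothetical helpers written for illustration; the decoder assumes a well-formed body with no trailer fields and skips any chunk extensions after `;`.

```python
import io

def encode_chunked(parts):
    """Frame a sequence of byte strings as a chunked HTTP/1.1 body."""
    out = bytearray()
    for part in parts:
        if not part:
            continue  # an empty chunk would be read as the end marker
        out += f"{len(part):X}\r\n".encode("ascii")  # chunk size in hex
        out += part + b"\r\n"                        # chunk data + CRLF
    out += b"0\r\n\r\n"  # zero-length last chunk, then an empty line
    return bytes(out)

def decode_chunked(stream):
    """Reassemble a chunked body read from a binary stream."""
    body = bytearray()
    while True:
        size_line = stream.readline().decode("ascii")
        size = int(size_line.split(";")[0].strip(), 16)  # drop extensions
        if size == 0:
            stream.readline()  # consume the final empty line (no trailers)
            return bytes(body)
        body += stream.read(size)
        stream.readline()  # consume the CRLF after the chunk data

wire = encode_chunked([b"This is the first chunk", b"and this is the second"])
print(wire)
# b'17\r\nThis is the first chunk\r\n16\r\nand this is the second\r\n0\r\n\r\n'
print(decode_chunked(io.BytesIO(wire)))
# b'This is the first chunkand this is the second'
```

The sender can emit each chunk as soon as it is generated, which is why no total length is needed up front.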

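```http
HTTP/1.1 200 OK
Content-Type: text/plain
Transfer-Encoding: chunked

17                          # first chunk length: 0x17 = 23 bytes
This is the first chunk
16                          # second chunk length: 0x16 = 22 bytes
and this is the second
0                           # zero-length chunk: end of body
```

(The `#` annotations are not part of the message; on the wire every line ends with `\r\n`, and the final `0` line is followed by an empty line.)

(2) Pipeline mechanism: The trade-off between efficiency and flaws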

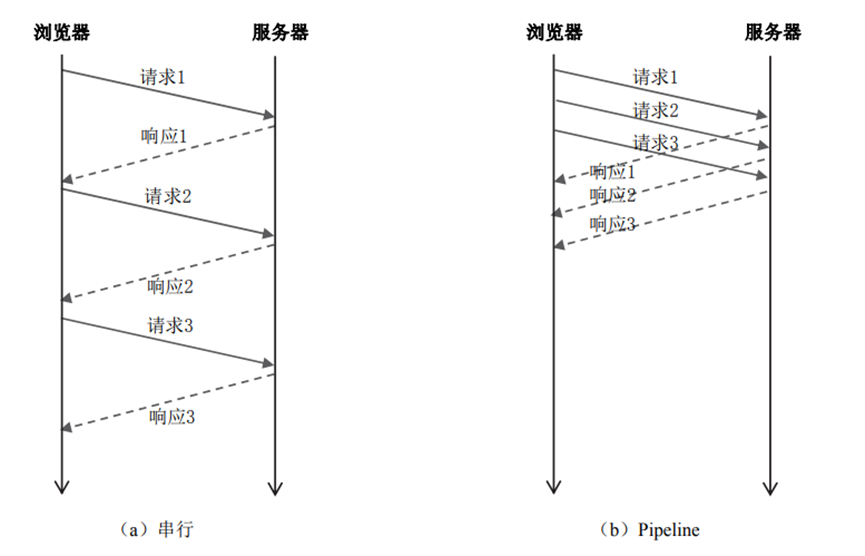

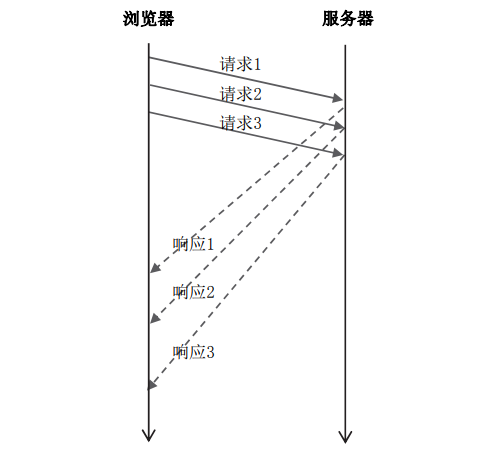

1. Pipelined request design

– The client can send multiple requests consecutively over the same TCP connection

– The client does not wait for the previous response before sending the next request, which in theory saves a round trip per request and speeds up loading

2. Head-of-Line Blocking

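– Pain point: The server must return responses in the same order in which the requests were received

– Example: If the response to request 1 is slow (e.g., it takes a long time to generate), the responses to requests 2 and 3 must wait behind it even if they are already ready

– Consequence: Because of head-of-line blocking and buggy support in proxies and servers, modern browsers disable pipelining by default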
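A toy Python model of head-of-line blocking: given the time at which the server has each pipelined response ready, it computes when each response can actually be sent, assuming responses must leave strictly in request order. Transmission time is ignored, and `pipelined_delivery` is a hypothetical helper.

```python
def pipelined_delivery(ready_at):
    """Earliest time each pipelined response can be sent, given when the
    server finished producing it. Responses must leave in request order,
    so no response can overtake a slower one ahead of it."""
    delivered = []
    latest = 0
    for t in ready_at:
        latest = max(latest, t)  # wait for every earlier response
        delivered.append(latest)
    return delivered

# Response 1 takes 500 ms to produce; responses 2 and 3 are ready at 10 ms.
print(pipelined_delivery([500, 10, 10]))  # [500, 500, 500]
```

Without the ordering constraint, responses 2 and 3 could have been sent at 10 ms; that idle wait is the cost of head-of-line blocking.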

(3) “Performance Breakthrough” Solutions Before HTTP/2

1. Front-end optimization techniques

– Image merging (Spriting): Combine 20 small icons into one large image to reduce the number of requests

– Inlining resources: Embed small images as Base64 in CSS

```css
.icon { background: url(data:image/png;base64,xxx) no-repeat; }
```

– JS merging and compression: Combine 10 JS files into one to cut the number of requests, and minify the result, which can shrink it by around 40%

2. Domain sharding technology

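– Principle: Browsers allow only 6-8 concurrent connections per domain

– Solution: Distribute resources across multiple domains, such as cdn1.com and cdn2.com

– Effect: With four domains, the number of parallel connections rises to 24-32, at the cost of an extra DNS lookup and extra TCP handshakes for each added domain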
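The two techniques above can be sketched with two small Python helpers. `to_data_uri` builds the kind of Base64 data URI used for inlining, and `shard_host` deterministically assigns a resource path to one of several domains; both names and the four-entry `SHARDS` list are hypothetical, chosen for illustration only.

```python
import base64
import zlib

def to_data_uri(data, mime="image/png"):
    # Base64 encodes every 3 input bytes as 4 characters (about 33% larger),
    # which is why inlining is usually reserved for small assets.
    return f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"

# Hypothetical shard domains in the style of cdn1.com / cdn2.com.
SHARDS = ["cdn1.com", "cdn2.com", "cdn3.com", "cdn4.com"]

def shard_host(path, shards=SHARDS):
    # Hash the path so each resource always maps to the same domain;
    # otherwise one file could be cached under several hosts. zlib.crc32
    # is stable across runs, unlike Python's per-process randomized hash().
    return shards[zlib.crc32(path.encode("utf-8")) % len(shards)]

print(to_data_uri(b"abc", "text/plain"))  # data:text/plain;base64,YWJj
print(len(base64.b64encode(bytes(300))))  # 400
print(shard_host("/img/logo.png"))        # one of SHARDS, same on every run
```

A stable mapping matters more than an even spread: if the same image were served from different shards on different pages, the browser cache would be split.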

(4) Workarounds for Server Push

1. Client-side periodic polling

– Sending a request at a fixed interval (e.g., every 5 seconds) wastes requests whenever there is no new data, so it has largely been abandoned

2. HTTP long polling

– After the client sends a request, if the server has no data, it holds the connection open instead of responding immediately

– It responds only when data becomes available or a timeout (e.g., 30 seconds) expires, after which the client immediately sends a new request

3. HTTP Streaming

– Uses the Chunk mechanism to send continuous data streams

– Keeps a single connection open to avoid the overhead of repeated request headers
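A minimal Python sketch of the long-polling idea, simulated in a single process: a `queue.Queue` stands in for the server's pending messages, and `long_poll` is a hypothetical request handler. Answering `204 No Content` on timeout is an assumed convention; real services choose their own timeout response, after which the client re-issues the request.

```python
import queue
import threading
from typing import Optional, Tuple

def long_poll(messages: queue.Queue, timeout_s: float) -> Tuple[int, Optional[str]]:
    """Hold the request open until a message arrives or the timeout expires."""
    try:
        # Blocks (the "held" connection) while no data is available.
        return 200, messages.get(timeout=timeout_s)
    except queue.Empty:
        # Assumed convention: 204 No Content tells the client to poll again.
        return 204, None

inbox = queue.Queue()
# Simulate an event published 0.2 s after the client starts polling.
threading.Timer(0.2, inbox.put, args=("new order",)).start()
print(long_poll(inbox, timeout_s=30))   # (200, 'new order') after about 0.2 s
print(long_poll(inbox, timeout_s=0.1))  # (204, None): nothing arrives in time
```

Compared with fixed-interval polling, the client gets the message as soon as it exists, and empty round trips happen only once per timeout period.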

(5) Practical Function: Resuming Downloads

1. Implementation principle

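– The client specifies the byte range with the request header `Range: bytes=1024-2048` (both ends are inclusive, so this asks for 1025 bytes)

– The server replies with `206 Partial Content` and a `Content-Range` header, returning only the requested bytes so the client does not have to re-download the whole file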

2. Application scenarios

– Resuming downloads of large files (e.g., videos, installation packages) after interruption

– Note: This mechanism applies only to downloads; resumable uploads must be implemented separately at the application level
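The following Python sketch shows both sides of a resumed download over an in-memory byte string. `serve_range` is a hypothetical handler that supports only the single-range forms `bytes=start-end` and `bytes=start-`; multiple ranges and suffix ranges such as `bytes=-500` are deliberately left out.

```python
import re

RANGE_RE = re.compile(r"bytes=(\d+)-(\d*)")

def serve_range(data, range_header):
    """Return (status, headers, body) for a single-range Range request."""
    size = len(data)
    m = RANGE_RE.fullmatch(range_header.strip())
    if not m:
        # Syntax this sketch does not handle: ignore Range, send everything.
        return 200, {"Content-Length": str(size)}, data
    start = int(m.group(1))
    end = min(int(m.group(2)), size - 1) if m.group(2) else size - 1
    if start > end:
        # Simplification: treat any empty range as unsatisfiable.
        return 416, {"Content-Range": f"bytes */{size}"}, b""
    body = data[start:end + 1]  # both ends of a byte range are inclusive
    headers = {"Content-Range": f"bytes {start}-{end}/{size}",
               "Content-Length": str(len(body))}
    return 206, headers, body

file_data = bytes(range(256)) * 16  # 4096 bytes of sample content
already = file_data[:1024]          # what an interrupted download kept
# Client resumes from the first missing byte with an open-ended range.
status, headers, rest = serve_range(file_data, f"bytes={len(already)}-")
print(status, headers["Content-Range"])  # 206 bytes 1024-4095/4096
print(already + rest == file_data)       # True
status, headers, part = serve_range(file_data, "bytes=1024-2048")
print(len(part))                         # 1025
```

The client only needs to remember how many bytes it already has; the open-ended form `bytes=N-` then fetches everything that is missing.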

4. Preview of the Next Article: How Does HTTP/2 Break Through Performance Bottlenecks?

HTTP 1.1 supported the growth of the Internet for nearly 20 years through various optimizations, but issues like head-of-line blocking and protocol overhead remained unresolved. The next article will analyze the three major innovations of HTTP/2 (binary framing, multiplexing, and server push) and how they speed up web page loading.

✅ Summary of Key Points

1. HTTP 1.0’s “one request, one connection” model leads to performance bottlenecks

2. HTTP 1.1 becomes mainstream through optimizations like connection reuse and the Chunk mechanism

3. Before HTTP/2, front-end optimization techniques and server push workarounds filled the gaps