1. Introduction to HTTP

1.1 Versions of HTTP

HTTP/0.9

Overview

Released in 1991, it has no headers and is very simple, supporting only GET requests.

HTTP/1.0

Released in 1996. Data is transmitted in plaintext, so security is poor, and headers are large. It has the following enhancements over 0.9:

- Added headers (decoupling metadata from data).
- Introduced status codes to declare the result of requests.
- The Content-Type header allows file types other than HTML to be transmitted.
- The request line includes the HTTP/1.0 version number.
- Supports GET, POST, and HEAD methods.

Cache strategy: HTTP/1.0’s caching strategy mainly relies on the If-Modified-Since and Expires headers.

Disadvantage: connections are short-lived; a new TCP connection is established for each resource request.
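To make the HTTP/1.0 message format concrete, here is a minimal sketch of how a request with headers is serialized and how the status line of a response is read. The function names, the `demo/0.1` user agent, and the sample response are illustrative assumptions, not part of any library.

```python
def build_http10_request(path: str, headers: dict[str, str]) -> bytes:
    """Serialize a GET request in HTTP/1.0 wire format."""
    lines = [f"GET {path} HTTP/1.0"]  # the request line carries the version
    lines += [f"{name}: {value}" for name, value in headers.items()]
    # A blank line separates the headers (metadata) from the optional body.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Split the first line of a response into version, status code, reason."""
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = status_line.split(" ", 2)
    return version, int(code), reason


request = build_http10_request("/index.html", {"User-Agent": "demo/0.1"})
response = b"HTTP/1.0 200 OK\r\nContent-Type: text/html\r\n\r\n<html></html>"
print(request.decode("ascii"))
print(parse_status_line(response))
```

With short connections, the client would open a new TCP connection, send these bytes, read the response, and close the connection again for every resource.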

HTTP/1.1

Released in 1997, it is the most widely used version today. It has the following enhancements over 1.0:

- Persistent (long) connections are the default: a TCP connection is reused for multiple requests (Connection: keep-alive), although requests are still handled serially.
- Supports pipelining: subsequent requests can be sent without waiting for the response to the first one, but responses must still be returned in request order.
- Added the Host header to tell the server which domain the user is requesting.
- Added headers for content type, language, encoding, etc.
- Supports resumable downloads (via the Range request header).
- New methods: OPTIONS, PUT, DELETE, TRACE, and CONNECT. PATCH was added later (RFC 5789, 2010).

Cache strategy: HTTP/1.1’s caching strategy is slightly more complex than HTTP/1.0, including Entity Tag (ETag), If-Unmodified-Since, If-Match, If-None-Match, etc.
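The ETag / If-None-Match revalidation can be sketched as follows. This is a simplified model under stated assumptions: the ETag is derived from a hash of the body (one common choice, not something HTTP mandates), only a single tag is compared, and `make_etag` and `respond` are hypothetical helper names.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag from the body (an assumption; servers may choose any scheme)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, request_headers: dict[str, str]) -> tuple[int, bytes]:
    """Return (status, body), answering 304 when the client's copy is current."""
    etag = make_etag(body)
    if request_headers.get("If-None-Match") == etag:
        return 304, b""  # Not Modified: the client reuses its cached copy
    return 200, body


page = b"<h1>hello</h1>"
first = respond(page, {})                                   # cold cache
second = respond(page, {"If-None-Match": make_etag(page)})  # revalidation
print(first[0], second[0])
```

The second request transfers no body, which is the point of the mechanism: the client only pays for the resource again when its content has changed.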

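Revised in 2014 (RFC 7230 to 7235):

- Incorporates TLS support, i.e., HTTPS transmission, into the core specification (HTTPS itself had been in use well before 2014).
- Supports four models: short connections, reusable long TCP connections, a server push model (the server actively pushes data to the client cache), and the WebSocket model.

Disadvantage: it is still a text protocol; headers are sent uncompressed, and both client and server spend CPU on parsing text and decompressing bodies.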

HTTP/2.0

Released in 2015, mainly to enhance security and performance. Enhancements over 1.1 include:

- Header compression (HPACK): header fields already sent on the connection are indexed, so repeated headers are not retransmitted in full.
- Binary framing: messages are split into binary frames, which are cheaper to parse and make it practical to send only the header fields that differ.
- Stream multiplexing: multiple requests and responses share a single connection, saving connections.
- Server push: the server can send multiple responses for a single client request.
- Requests can be sent concurrently over a single TCP connection.

Disadvantage: because it runs over TCP, it still suffers from head-of-line blocking at the transport layer: a lost packet stops the window from sliding until TCP retransmits it, which stalls every stream on the connection. The TCP and TLS handshakes add latency, and TCP itself is hard to evolve (protocol rigidity).
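As an illustration of binary framing, the sketch below packs and unpacks the fixed 9-byte HTTP/2 frame header (24-bit length, 8-bit type, 8-bit flags, one reserved bit plus a 31-bit stream ID). The helper names are assumptions for this example; a real implementation would also validate sizes and handle the remaining frame types.

```python
import struct

FRAME_TYPE_DATA = 0x0     # frame type codes defined by the HTTP/2 specification
FRAME_TYPE_HEADERS = 0x1


def pack_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Prefix a payload with the 9-byte HTTP/2 frame header."""
    length = len(payload)
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit payload length
        frame_type,                     # 8-bit type
        flags,                          # 8-bit flags
        stream_id & 0x7FFFFFFF,         # reserved bit cleared + 31-bit stream ID
    )
    return header + payload


def unpack_frame_header(data: bytes) -> tuple[int, int, int, int]:
    """Return (length, type, flags, stream_id) from the first 9 bytes."""
    high, low, frame_type, flags, stream_id = struct.unpack(">BHBBI", data[:9])
    return (high << 16) | low, frame_type, flags, stream_id & 0x7FFFFFFF


frame = pack_frame(FRAME_TYPE_DATA, 0x1, 3, b"hello")  # flag 0x1 = END_STREAM
print(frame[:9].hex(), unpack_frame_header(frame))
```

Because every frame names its stream, frames belonging to different requests can be interleaved on one connection and sorted out again by the receiver.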

HTTP/3.0

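Proposed in 2018 and standardized as RFC 9114 in 2022, it is based on Google’s QUIC and uses UDP at the lower level instead of TCP.

This resolves the transport-level head-of-line blocking issue and shortens the handshake (transport and TLS setup are combined, and a resumed connection can send data immediately), greatly improving performance, with TLS encryption by default.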

2. Significance of HTTP Application Layer Parameters

Range: the byte range of the resource to download, used for resumable and segmented downloads.
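A minimal sketch of how a server might honor a Range header, assuming only the single-range form `bytes=start-end` is supported; `apply_range` is a hypothetical helper. A real response would also carry a Content-Range header, omitted here for brevity.

```python
import re


def apply_range(body: bytes, range_header: str) -> tuple[int, bytes]:
    """Serve a single 'bytes=start-end' range; end is inclusive and optional."""
    match = re.fullmatch(r"bytes=(\d+)-(\d*)", range_header)
    if match is None:
        return 200, body                 # unsupported form: ignore the header
    start = int(match.group(1))
    end = int(match.group(2)) if match.group(2) else len(body) - 1
    if match.group(2) and end < start:
        return 200, body                 # malformed range: ignore the header
    if start >= len(body):
        return 416, b""                  # Range Not Satisfiable
    return 206, body[start:end + 1]      # Partial Content


data = b"0123456789"
partial = data[:4]                       # bytes already downloaded
status, rest = apply_range(data, f"bytes={len(partial)}-")
print(status, partial + rest)
```

Resuming a download then amounts to asking for everything from the first missing byte onward and appending the result to what is already on disk.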

Connection: close for short connections, keep-alive for long connections.

Differences between HTTP/1.0 and HTTP/1.1:

a. HTTP/1.0 uses short connections by default, while HTTP/1.1 uses long (persistent) connections by default.

b. HTTP/1.1 introduces the Host field, supporting virtual hosting. Multiple websites with different domain names may share one IP address on the same physical host, and the Host field distinguishes between them.

c. Request methods

HTTP/1.0 defines three request methods: GET, POST, and HEAD.

HTTP/1.1 adds five new request methods: OPTIONS, PUT, DELETE, TRACE, and CONNECT.
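Point b can be illustrated with a small sketch of name-based virtual hosting: the server reads the Host header and picks a site accordingly. The domain names, directory paths, and the `document_root` helper are all hypothetical, and IPv6 literal hosts are not handled.

```python
SITES = {  # hypothetical domain -> document root mapping
    "blog.example.com": "/var/www/blog",
    "shop.example.com": "/var/www/shop",
}


def document_root(raw_request: bytes) -> str:
    """Select a site by the Host header of a raw HTTP/1.1 request."""
    head = raw_request.split(b"\r\n\r\n", 1)[0].decode("ascii")
    for line in head.split("\r\n")[1:]:         # skip the request line
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":      # header names are case-insensitive
            host = value.strip().split(":")[0]  # drop an optional :port
            return SITES.get(host.lower(), "/var/www/default")
    raise ValueError("an HTTP/1.1 request must carry a Host header")


print(document_root(b"GET / HTTP/1.1\r\nHost: shop.example.com\r\n\r\n"))
```

Both sites can be reached over the same IP address and port; only the Host value tells the server which one the client wants.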

3. Head-of-Line Blocking Issue

Head-of-Line blocking (HOL blocking) is a well-known issue in the HTTP protocol that increases latency. It refers to the situation where the first packet (the head) is blocked, causing the entire queue of packets to be delayed. HOL blocking occurs at multiple layers of the OSI model, such as:

- HTTP head-of-line blocking
- TLS head-of-line blocking
- TCP head-of-line blocking

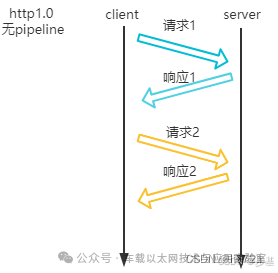

In HTTP/1.0, HTTP head-of-line blocking means that on the same TCP channel, the first HTTP request from the client blocks subsequent HTTP requests until the client receives the response to the first request. As shown in the figure: after request 1 is sent, request 2 cannot be sent until response 1 is received. Key point: Blocking occurs at the client request.

HTTP/1.0 head-of-line blocking

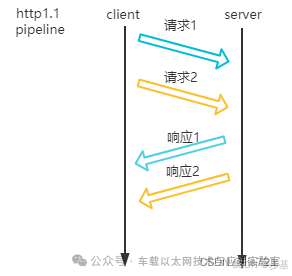

In HTTP/1.1, HTTP head-of-line blocking means that on the same TCP channel, the first HTTP response from the server blocks subsequent HTTP responses until the server finishes sending the first HTTP response. Key point: Blocking occurs at the server response.

HTTP/1.1 head-of-line blocking

The reason for HTTP head-of-line blocking is that the HTTP protocol design does not support out-of-order responses. The solution is to assign a unique ID to both requests and responses. In HTTP/2, a stream ID is added, and each request and response pair carries the same stream ID.
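The stream ID idea can be sketched with a toy receiver that sorts interleaved frames back into their streams. The frame tuples and the `reassemble` function are simplified assumptions, not the real HTTP/2 frame layout.

```python
from collections import defaultdict

# (stream_id, payload, end_of_stream) tuples as they might arrive interleaved
frames = [
    (1, b"<html>", False),
    (3, b"body{", False),
    (3, b"}", True),
    (1, b"</html>", True),
]


def reassemble(frames):
    """Group frame payloads by stream ID; return streams in completion order."""
    buffers = defaultdict(bytes)
    completed = []
    for stream_id, payload, end_of_stream in frames:
        buffers[stream_id] += payload
        if end_of_stream:
            completed.append((stream_id, buffers.pop(stream_id)))
    return completed


print(reassemble(frames))
```

Stream 3 completes before stream 1 even though it was requested later, which is exactly the out-of-order response that HTTP/1.x cannot express.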

TLS head-of-line blocking means that on the same TCP channel, the receiver must wait until all TCP packets that make up a complete TLS record have been received before TLS can decrypt that record.

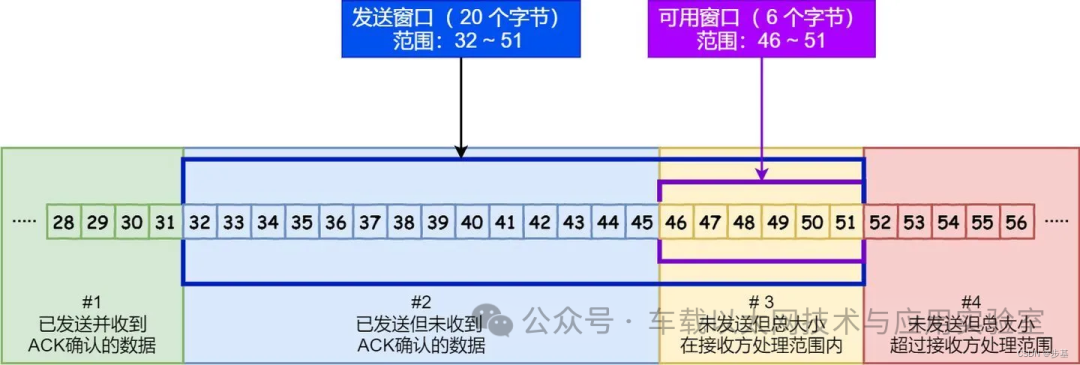

TCP head-of-line blocking includes both sender and receiver blocking.

TCP sender head-of-line blocking means that on the same TCP channel, multiple packets within the first TCP sliding window block the sending of subsequent sliding windows until all are sent and acknowledged.

TCP receiver head-of-line blocking means that on the same TCP channel, if a packet with a smaller sequence number is lost, all subsequent packets with larger sequence numbers will be queued in the receiver’s buffer and cannot be accessed by the application layer.
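Receiver-side blocking can be simulated in a few lines. This is a toy model that numbers segments 1, 2, 3, ... instead of using byte sequence numbers; `deliver_in_order` is a hypothetical name.

```python
def deliver_in_order(arrivals):
    """Yield, per arriving segment, the sequence numbers handed to the app."""
    next_expected = 1
    buffered = set()
    for seq in arrivals:
        buffered.add(seq)
        delivered = []
        while next_expected in buffered:  # only a contiguous prefix is released
            buffered.remove(next_expected)
            delivered.append(next_expected)
            next_expected += 1
        yield seq, delivered


# Segment 2 is lost at first and arrives last as a retransmission.
for seq, delivered in deliver_in_order([1, 3, 4, 5, 2]):
    print(f"received {seq}: delivered {delivered}")
```

Segments 3, 4, and 5 sit in the buffer until segment 2 arrives, at which point all four are released to the application at once.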

4. Detailed Explanation of QUIC Protocol

4.1 Retransmission Mechanism

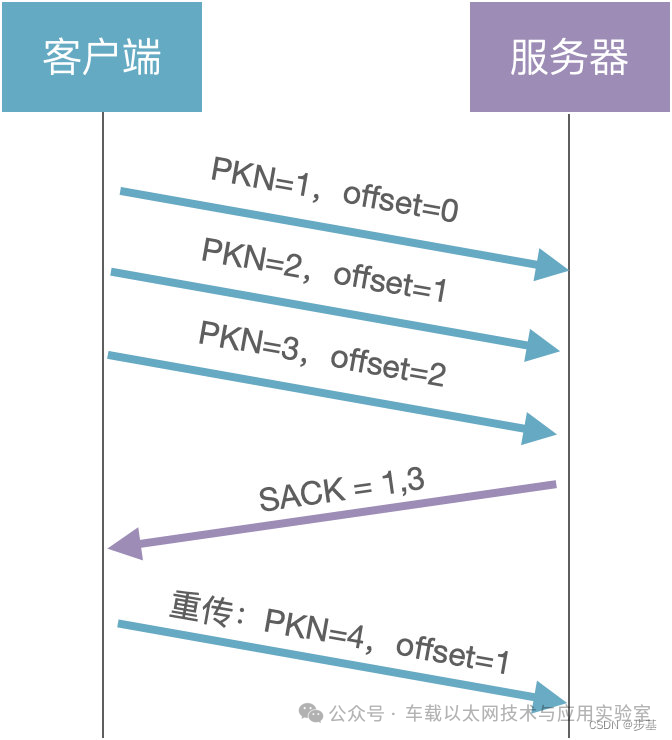

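QUIC gives every packet a packet number (PKN) that only increases, even for retransmissions. In the figure, the data originally carried by the packet with PKN=2 is retransmitted in a new packet with PKN=4. The offset indicates the position of a packet's data within the stream, so the receiver can reassemble the data no matter which packet delivered it.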
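The relationship between packet numbers and offsets can be sketched as follows. The `Packet` class and `reassemble_stream` function are simplified assumptions: real QUIC packets carry frames for several streams, and the payloads here are invented.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    pkn: int      # packet number: never reused, even for retransmitted data
    offset: int   # position of `data` within the stream
    data: bytes


def reassemble_stream(packets):
    """Rebuild the stream by offset, ignoring the order of packet numbers."""
    stream = bytearray()
    for packet in sorted(packets, key=lambda p: p.offset):
        if packet.offset == len(stream):  # next contiguous chunk
            stream += packet.data
    return bytes(stream)


# PKN=2 was lost; its data (offset 5) is resent later as PKN=4.
received = [
    Packet(pkn=1, offset=0, data=b"hello"),
    Packet(pkn=3, offset=10, data=b"!"),
    Packet(pkn=4, offset=5, data=b"world"),
]
print(reassemble_stream(received))
```

Since a retransmission gets a fresh packet number, an acknowledgment always refers unambiguously to either the original or the retransmitted packet, while the offset keeps the data in order.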

4.2 Flow Control

The principle is similar to TCP's sliding-window flow control.

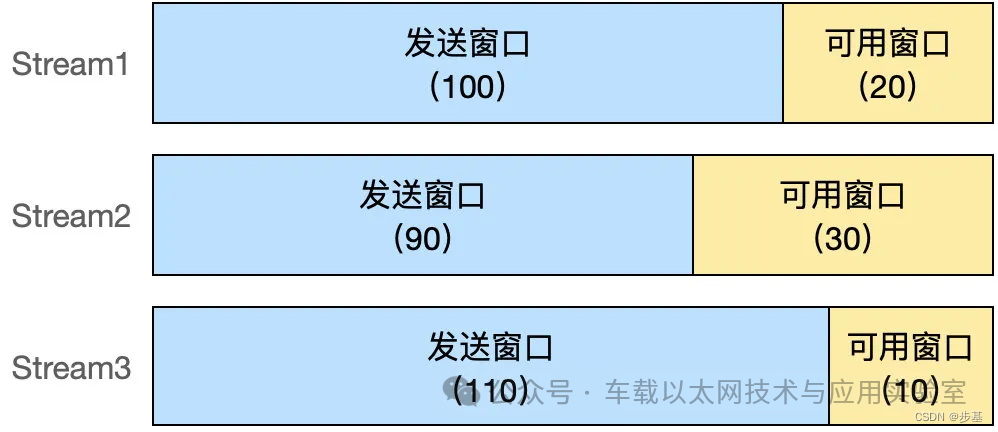

Unlike TCP, QUIC’s sliding window is divided into two levels: Connection and Stream. Connection flow control specifies the total window size for all data streams; Stream flow control specifies the window size for each stream.

Summing the available windows of the streams in the figure, the total available window size for the entire Connection is 20 + 30 + 10 = 60.
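The two-level window can be modeled with a toy controller. The stream IDs 1 to 3 and the class name are assumptions, and the values 20, 30, and 10 are read as per-stream available windows. Here the connection window is set to their sum to match the arithmetic above; in QUIC itself the connection limit is advertised separately and may be smaller than that sum.

```python
class FlowController:
    """Track send credit at stream level and connection level (toy model)."""

    def __init__(self, connection_window: int, stream_windows: dict[int, int]):
        self.connection_window = connection_window
        self.stream_windows = dict(stream_windows)

    def can_send(self, stream_id: int, size: int) -> bool:
        # Data must fit in both the stream's window and the connection's window.
        return size <= self.stream_windows[stream_id] and size <= self.connection_window

    def send(self, stream_id: int, size: int) -> None:
        if not self.can_send(stream_id, size):
            raise RuntimeError("blocked by flow control")
        self.stream_windows[stream_id] -= size
        self.connection_window -= size


windows = {1: 20, 2: 30, 3: 10}
fc = FlowController(sum(windows.values()), windows)  # 20 + 30 + 10 = 60
fc.send(2, 30)                                       # stream 2 uses all its credit
print(fc.connection_window, fc.can_send(2, 1), fc.can_send(1, 20))
```

After stream 2 exhausts its own window it is blocked, but streams 1 and 3 can keep sending as long as connection-level credit remains.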

4.3 Connection Migration

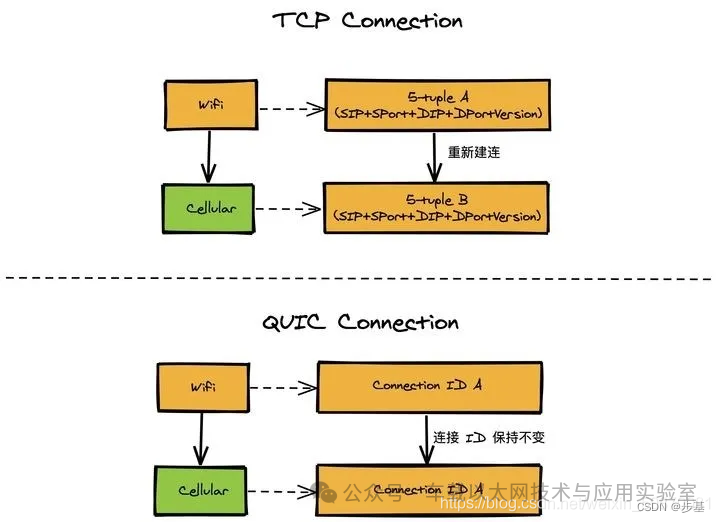

When the client switches networks (for example, from Wi-Fi to cellular), the connection to the server does not drop and communication continues normally, which is impossible with TCP. A TCP connection is identified by a 4-tuple: source IP, source port, destination IP, and destination port; a change in any one of them requires a new connection. A QUIC connection, however, is identified by a Connection ID (64 bits in Google's original QUIC, up to 160 bits in the standardized version). Switching networks does not affect the Connection ID, so the connection remains logically intact.
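The difference in how the two protocols look up a connection can be sketched with two dictionaries. All addresses, the connection ID value, and the function names are hypothetical; a real QUIC stack additionally validates the new path before using it.

```python
# Hypothetical server-side lookup tables; real stacks are far more involved.
tcp_connections = {("10.0.0.5", 50000, "203.0.113.1", 443): "session-A"}
quic_connections = {"c1d2e3f4": "session-A"}  # keyed by connection ID


def lookup_tcp(src_ip, src_port, dst_ip, dst_port):
    """Find a TCP connection by its full 4-tuple."""
    return tcp_connections.get((src_ip, src_port, dst_ip, dst_port))


def lookup_quic(connection_id, src_ip, src_port):
    """Find a QUIC connection by ID; the source address is not part of the key."""
    return quic_connections.get(connection_id)


# The client moves to another network and gets a new IP address and port.
print(lookup_tcp("192.0.2.77", 41000, "203.0.113.1", 443))
print(lookup_quic("c1d2e3f4", "192.0.2.77", 41000))
```

The TCP lookup misses because the key changed with the address, while the QUIC lookup still finds the existing session.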

Original link:

https://blog.csdn.net/wangbuji/article/details/130724848