Produced by Big Data Digest

Author: Caleb

Young people, do you crave power?

The kind of power that summons lightning at your fingertips and deals massive damage with a single throw.

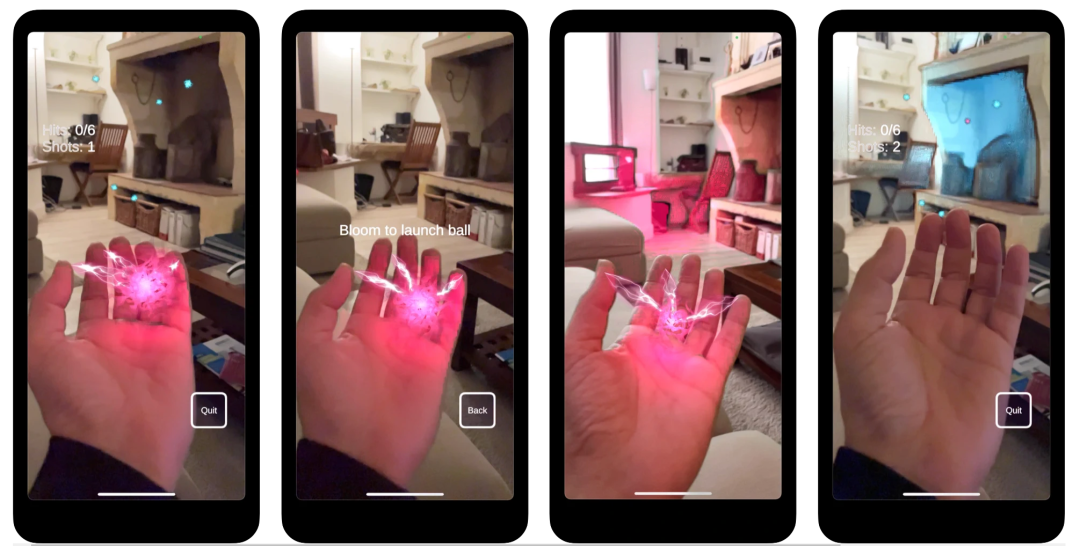

Now, you just need to move your fingers to create a surge of energy that can interact with the real world. This lightning will dissipate into small currents, flowing up and down the edges of objects, leaving a pink glow.


This application, called Let’s All Be Wizards!, is now available on the App Store for $2.99. However, it requires LiDAR, so currently only iPhone 12 Pro and iPhone 13 Pro users can harness this power.

Surprisingly, such magical power is hidden in your phone.

Videos of the app have drawn heated discussion and plenty of views on Reddit and LinkedIn, with one user praising, “The interaction with the room is incredible!”

Others commented, “This must be a fantastic NFT.”

Next, let’s explore the source of this power together!

Depth Sensors + Machine Learning, with LiDAR as a Key Component

First, in addition to an Apple phone that supports LiDAR, you will also need Unity 2020.3 LTS and ARFoundation.

Since the power is released from the hands, the app must capture them, which requires 60 FPS hand detection and 3D bone detection in world space.

This is where LiDAR becomes essential: it enables accurate lighting (subsurface scattering) and edge detection (for the returning sparks), so that the real world and the virtual world truly merge. Without real-time depth estimation, these features would not be possible.
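To make the role of depth data in edge detection concrete, here is a minimal sketch in Python. It is not the project's code: the function name `depth_edges`, the `threshold` parameter, and the plain list-of-lists depth map are assumptions made for illustration. The idea is simply that object edges show up as sudden jumps in depth between neighboring pixels.

```python
def depth_edges(depth, threshold=0.05):
    """Mark pixels whose right or lower neighbor differs in depth
    by more than `threshold` (assumed to be in meters).

    `depth` is a hypothetical row-major list of lists of floats,
    standing in for a real LiDAR depth frame.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    edges = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            d = depth[y][x]
            # Depth jump toward the right neighbor
            if x + 1 < cols and abs(depth[y][x + 1] - d) > threshold:
                edges[y][x] = True
            # Depth jump toward the lower neighbor
            if y + 1 < rows and abs(depth[y + 1][x] - d) > threshold:
                edges[y][x] = True
    return edges


# A wall at 1 m on the left, a farther surface at 2 m on the right
frame = [[1.0, 1.0, 2.0],
         [1.0, 1.0, 2.0]]
print(depth_edges(frame, threshold=0.5))
```

A real pipeline would likely run this kind of test on the GPU and smooth the result, but the depth-discontinuity idea stays the same.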

Lighting on the spatial mesh is also achieved through five rendering passes:

- Normals and distances for each pixel (screen space)
- Background camera and hand occlusion
- Subsurface scattering lighting for hands
- Halo and game space effects (in fact, one pass per energy ball)
- Transparent and opaque objects
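The first item in the list, per-pixel normals, can be approximated from a depth map alone. The sketch below is an illustration under simplifying assumptions, not the project's shader: it treats the depth map as a height field with a uniform, hypothetical `pixel_size`, whereas a real renderer would unproject pixels using the camera intrinsics. The function name `normals_from_depth` is also made up for this example.

```python
import math


def normals_from_depth(depth, pixel_size=1.0):
    """Estimate a unit normal per pixel from depth gradients.

    Assumed convention: the normal of a flat, camera-facing surface
    is (0, 0, 1). Gradients use central differences, clamped at the
    image border.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            # Depth change per unit distance along each image axis
            dzdx = (depth[y][x1] - depth[y][x0]) / (max(x1 - x0, 1) * pixel_size)
            dzdy = (depth[y1][x] - depth[y0][x]) / (max(y1 - y0, 1) * pixel_size)
            length = math.sqrt(dzdx * dzdx + dzdy * dzdy + 1.0)
            row.append((-dzdx / length, -dzdy / length, 1.0 / length))
        normals.append(row)
    return normals


# A surface that recedes by one unit of depth per pixel to the right
ramp = [[float(x) for x in range(3)] for _ in range(3)]
print(normals_from_depth(ramp)[1][1])
```

Storing the normal together with the distance for every pixel is what lets later passes light the hand and the room consistently.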

As project author Olivier Goguel summarizes, “Thanks to depth sensors and vision-based machine learning, we can create a digital version of the hand and its surrounding environment in real-time, generating a 3D environment for seamless interaction between virtual and real objects.”

The project has also been open-sourced on GitHub, and you can check the detailed process there:

Project link:

https://github.com/ogoguel/realtimehand

Mixed Reality and Object Recognition Break the Boundaries of Games and Data

Flipping through Olivier Goguel’s LinkedIn profile, I was dazzled by his impressive resume.

As a video game enthusiast, Goguel has worked for over 25 years at major video game companies such as Argonaut, Lagardere Active, Mimesis Republic, Namco, Microsoft, and Asobo Studio. It is these experiences that have allowed him to apply various technologies and ideas to many entertainment projects, including AR/VR experiences.

He currently serves as CTO at HoloForge Interactive.

On its official website, HoloForge Interactive says it aims to break down the boundaries between people, places, and data in order to fully unleash their potential.

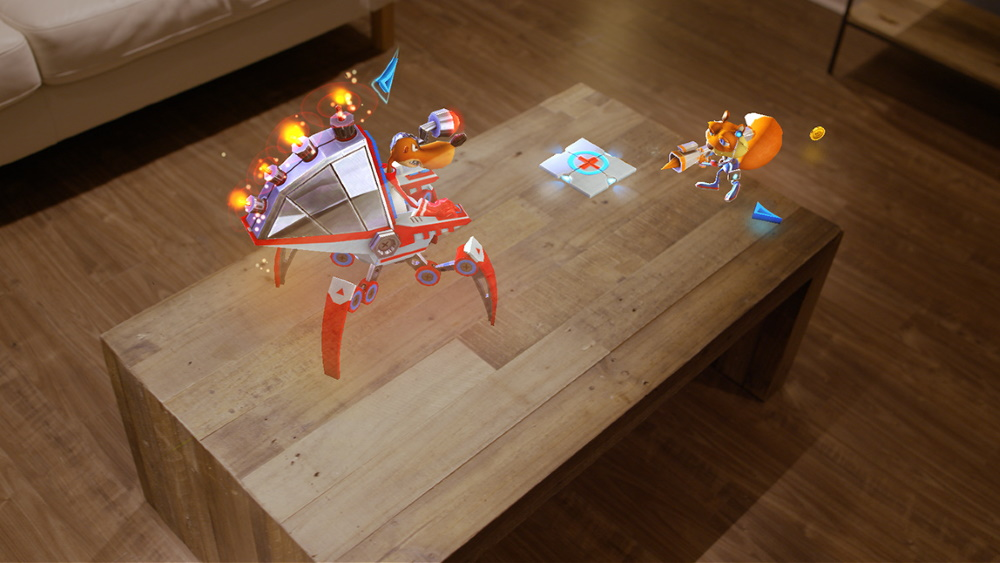

For example, in 2016, they collaborated with Microsoft to develop the game Young Conker, which utilizes mixed reality and object recognition for the experience.

In the game, scenes are directly constructed in the room, such as enemies appearing around the coffee table, items to collect hiding in your sofa, and needing a trampoline to watch TV, etc. Levels adapt and change with the environment.

In this project, the developers had to overcome the following challenges:

- Environment Recognition: The game detects the topology of the environment and identifies its components. The system needs to detect the floor, walls, and ceiling in the room, as well as various types of furniture, creating unique interactions with game characters.
- Interaction: Players can move the protagonist with their gaze, controlling game actions in real-time without a joystick.
- Room Solver AI: The game uses spatial mapping tools and employs AI to automatically generate levels and character placements.
- True Connection with Characters: Since the game knows where the player is and where they are looking, each character can respond to the player’s presence in different and surprising ways.
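As a rough illustration of the environment recognition step above, one common heuristic is to label a surface by the direction of its normal. The sketch below is a generic approach and is not taken from Young Conker; the function name `classify_surface`, the Y-up convention, and the `tolerance_deg` parameter are assumptions.

```python
import math


def classify_surface(normal, tolerance_deg=15.0):
    """Label a surface from its normal, assuming +Y is world up.

    Returns "floor", "ceiling", "wall", "other", or "unknown"
    (for a zero-length normal).
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return "unknown"
    up_component = ny / length  # cosine of the angle to world up
    horizontal_limit = math.cos(math.radians(tolerance_deg))
    vertical_limit = math.sin(math.radians(tolerance_deg))
    if up_component >= horizontal_limit:
        return "floor"      # normal points up
    if up_component <= -horizontal_limit:
        return "ceiling"    # normal points down
    if abs(up_component) <= vertical_limit:
        return "wall"       # normal is roughly horizontal
    return "other"          # slanted surface, e.g. part of a sofa


print(classify_surface((0.0, 1.0, 0.0)))
print(classify_surface((1.0, 0.0, 0.0)))
```

A normal alone cannot tell a floor from a tabletop, so a real system would presumably also use surface height and area to separate the floor from furniture.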

How Local Lenses Change the Way We View Cities

Speaking of AR, one of the most impressive projects is Snapchat’s outdoor AR project Local Lenses, launched two years ago.

This AR project differs from competitors focused on maps, as Snap plans to allow users to change the appearance of their communities using digital content, enabling users to “decorate nearby buildings with colorful paint,” with effects visible to friends.

From the official promotional video, Snapchat’s AR is a boon for those with less dexterity; you can freely paint the city, and it’s easy to operate—just activate the camera on Snapchat, and City Painter lets you spray red and blue “fountains” above the streets, then decorate wall bricks with pre-designed graffiti.

While it looks fun, the development process for Local Lenses was not so simple. The first challenge was the lack of 3D data for important public landmarks. Second, it was necessary to choose streets where users need not worry about traffic. Moreover, because users do not photograph every angle of a street, the sheer size of the space is also a significant challenge for developers.

In this sense, City Painter, which is part of Local Lenses, innovatively created a 3D model of the entire Carnaby Street, allowing users to paint from any angle, thus changing the way people view the city.

Snapchat extracted visual data of the streets from multiple sources, including public photos shared by users. “For the scenes reflected by Local Lenses, we utilized 360-degree camera images,” Pan stated. “People can draw maps while walking down the street and combine them with any public news photos we might have about the area.”

City Painter also supports experience sharing. As Pan said, “When you do something to the external environment, others can almost see the results in real-time. Even if you leave later, new visitors the next day will still see the changes, meaning these newcomers can see the spaces altered by themselves and others.”

Now, as the concept of the metaverse is gradually being understood and researched, we believe that more interesting VR and AR projects will be developed in the future!
