Source: Mo Shang Feng Qi Lu Kan IC, with thanks.

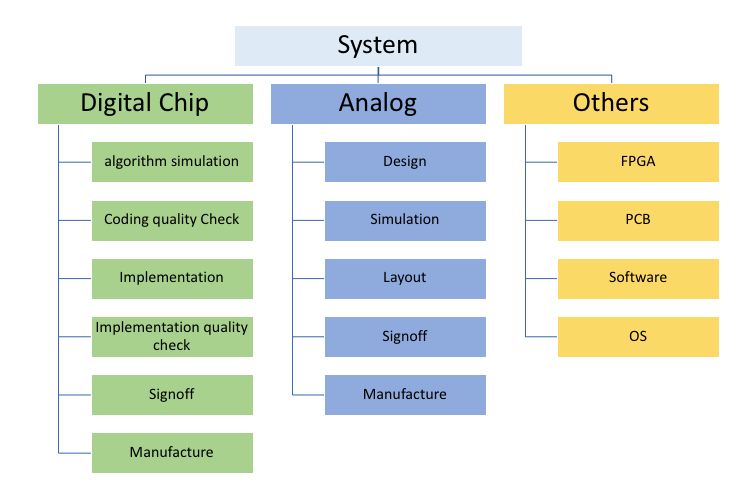

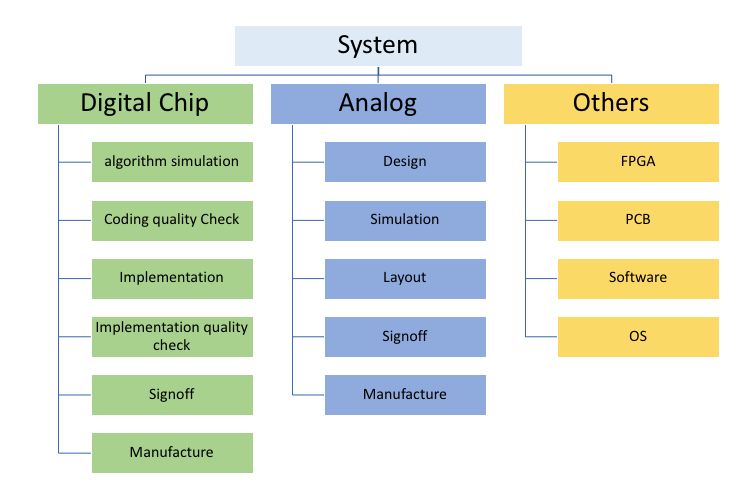

With support from many quarters, integrated circuits have become a hot topic of the era, and numerous articles discuss the complexity and difficulty of chip design. The old donkey plans to review the chip design process from the perspective of EDA usage. After drawing the first diagram, he realized that he was familiar with only a small segment of the digital circuit part and knew nothing about the system, the software, or the upper-level applications, so he could only file those under Others.

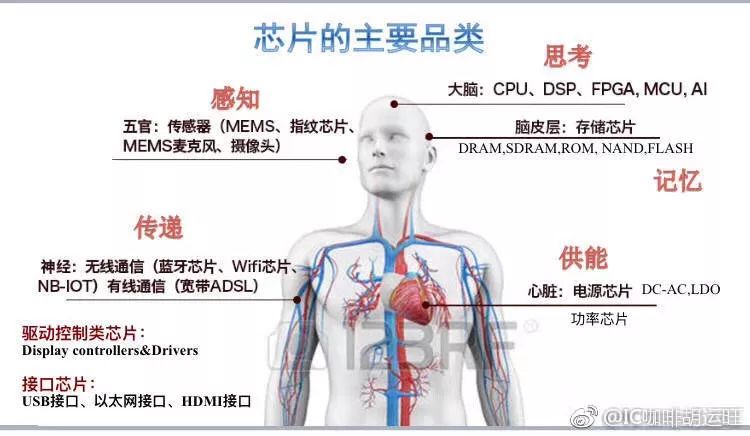

For consumers, a usable system consists of digital integrated circuits, analog integrated circuits, system software, and upper-level applications. The role of each part is easy to see in the exquisite diagram borrowed from IC Coffee’s Mr. Hu. The external world is analog, so every part that interfaces with it needs analog integrated circuits: they convert the collected external information into 0s and 1s for the digital integrated circuits to process, and then convert the processed signals back into analog signals for output. All of this happens under the command and monitoring of the system software, hence the saying: the chip is the skeleton, while the system software is the soul.

Image source: Internet, infringement will be deleted, thank you~
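The analog-to-digital round trip described above can be sketched in a few lines. This is only an illustration under assumed parameters: the function names `adc` and `dac`, the 8-bit resolution, and the 1.0 V reference are all hypothetical and do not describe any particular chip.

```python
def adc(voltage, v_ref=1.0, bits=8):
    """Quantize a voltage in [0, v_ref] to an unsigned integer code."""
    levels = (1 << bits) - 1
    clamped = min(max(voltage, 0.0), v_ref)  # inputs outside the range saturate
    return round(clamped / v_ref * levels)


def dac(code, v_ref=1.0, bits=8):
    """Convert an integer code back to a voltage."""
    levels = (1 << bits) - 1
    return code / levels * v_ref


samples = [0.0, 0.25, 0.5, 1.0]          # "analog" inputs in volts
codes = [adc(v) for v in samples]        # what the digital logic sees
restored = [dac(c) for c in codes]       # what goes back out

for v, c, r in zip(samples, codes, restored):
    print(f"{v:.3f} V -> code {c:3d} -> {r:.4f} V")
```

The restored values differ from the inputs by at most about one quantization step, which is the price of crossing into the digital domain.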

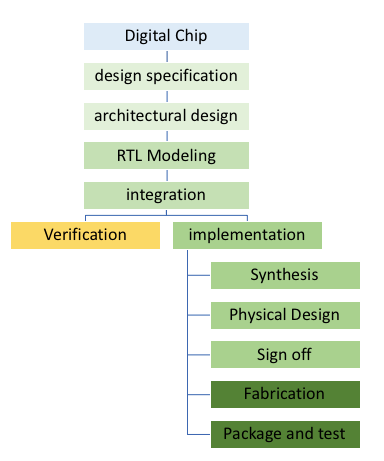

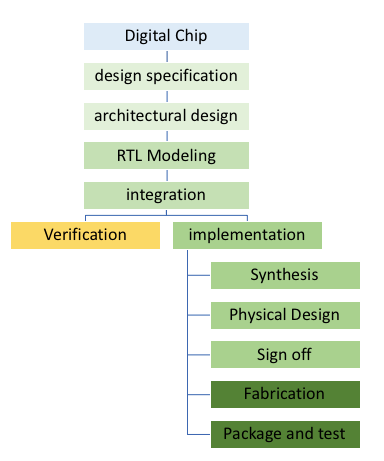

Designing and implementing a digital integrated circuit is a long process. Take a mobile phone baseband chip as an example: engineers start from countless pages of 3G, 4G, and 5G protocol documents, and the work then divides by role:

- Architects decide, based on the protocols, which parts can be implemented in software and which must be implemented in hardware.
- Algorithm engineers study every part of the protocol in depth and select the algorithms used to implement it.
- Chip design engineers describe the selected algorithms in RTL.
- Chip verification engineers design test vectors based on the selected algorithms and verify the function and performance of the RTL.
- Digital implementation engineers turn the RTL into GDS according to the PPA (power, performance, area) targets set by the algorithm and design engineers.
- Chip production is so complex that it is handed over entirely to foundries, and the same goes for packaging.
- For testing, most companies rent third-party test platforms and have their own test engineers do the work; only a few wealthy companies own their test platforms.


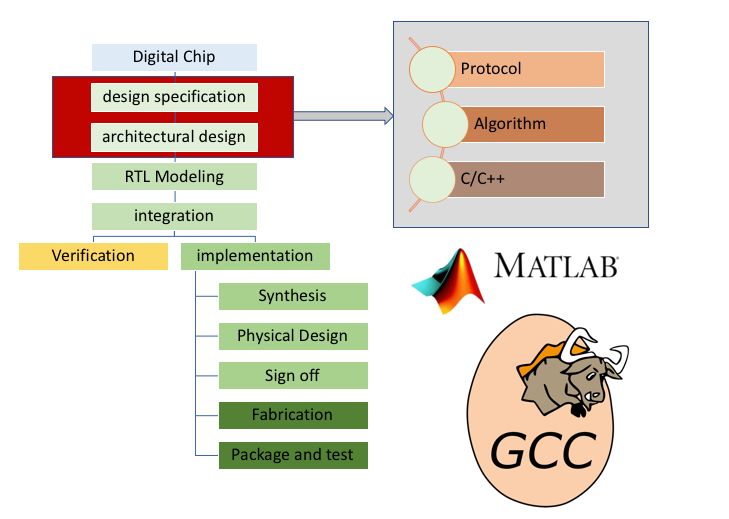

A chip’s performance depends 60% on the architect, and in China there are no more than a hundred good architects and no more than ten extremely good ones. Architects create the chip’s soul; they sit at the top of the food chain and are formidable figures. As far as the old donkey’s superficial understanding goes, apart from office software there seem to be no EDA tools for architectural design. Once the architecture is finalized, numerous algorithm engineers follow, choosing an appropriate algorithm for every point stipulated by the protocol and simulating it precisely in C/C++ to ensure functionality, accuracy, efficiency, throughput, and so on. MATLAB and GCC are probably the tools they use most.
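The accuracy side of that simulation work can be illustrated with a small precision study. The sketch below is written in Python rather than the C/C++ or MATLAB the text mentions, and the coefficients, samples, and helper names (`to_fixed`, `fixed_dot`) are made up for the example. It compares a floating-point dot product against the same computation done with a limited number of fractional bits.

```python
def to_fixed(x, frac_bits):
    """Round a real number to an integer with `frac_bits` fractional bits."""
    return round(x * (1 << frac_bits))


def fixed_dot(coeffs, samples, frac_bits):
    """Dot product computed on integers, then scaled back to a real value."""
    acc = sum(to_fixed(c, frac_bits) * to_fixed(s, frac_bits)
              for c, s in zip(coeffs, samples))
    return acc / (1 << (2 * frac_bits))


coeffs = [0.1, 0.2, 0.4, 0.2, 0.1]        # hypothetical filter taps
samples = [0.5, -0.25, 0.75, 0.3, -0.6]   # hypothetical input window
reference = sum(c * s for c, s in zip(coeffs, samples))

for frac_bits in (4, 8, 12):
    error = abs(fixed_dot(coeffs, samples, frac_bits) - reference)
    print(f"{frac_bits:2d} fractional bits: error = {error:.6f}")
```

A study like this lets the word length be chosen before any RTL is written: more bits cost area and power, fewer bits cost accuracy.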


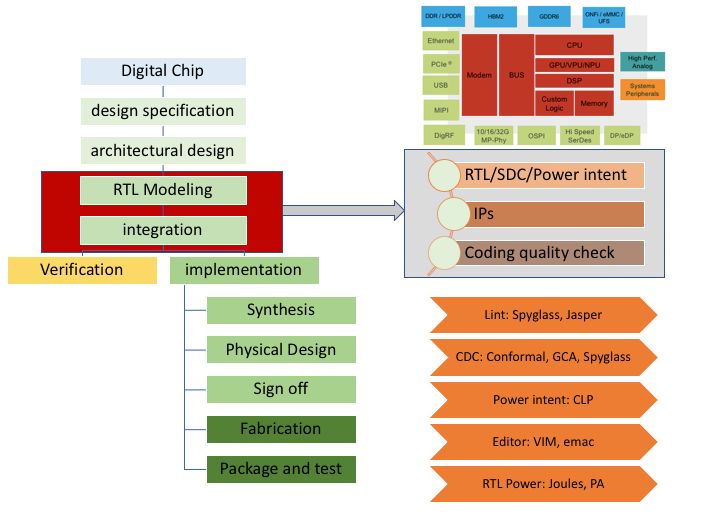

Design engineers convert the abstract descriptions or fixed-point C code, which the algorithm engineers arrived at through repeated simulation and selection, into RTL. During the design process they need to simulate and synthesize repeatedly to confirm that the design functions correctly and to find out what PPA is achievable. Besides RTL, design engineers also need to write SDC and power intent according to the design goals and run the corresponding quality checks. Design engineers need to use a lot of EDA tools:


- Lint: RTL quality check, Spyglass, Jasper;
- CDC: SDC quality check, Spyglass, Conformal, GCA;
- CPF/1801: power intent quality check, CLP;
- Power: RTL level power analysis, Joules, PA;
- Simulator: C, S, M all have their own simulation tools;


The old donkey believes that from integration onwards, the work shifts from mental labor to physical labor. In house-building terms, it is the move from designer to bricklayer. Integration engineers connect all the modules used in the chip: the guiding ideas come from the architect, how each IP connects is decided by the owner of that IP, and the integration engineers only need to make sure that nothing is connected in excess, left unconnected, or connected incorrectly. It is said that there are currently no effective integration tools, and Emacs is commonly used.

Continuing, in actual projects, verification and synthesis will begin to overlap from RTL coding, iterating repeatedly.

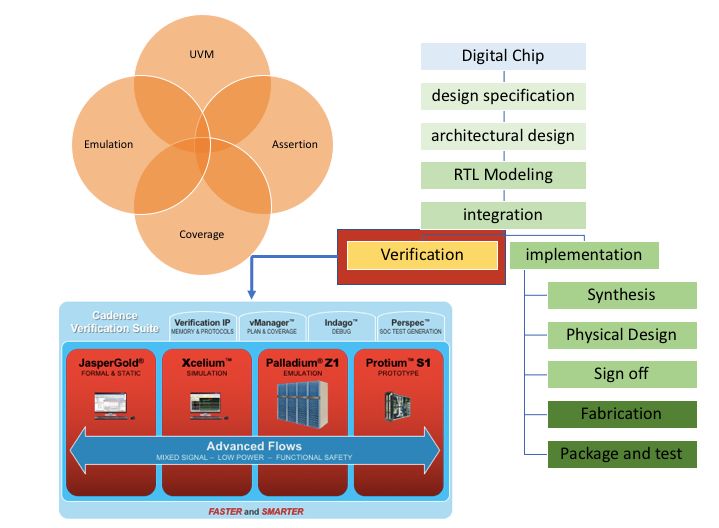

Verification takes up a large share of digital chip design. In recent years, driven by design complexity, verification methodologies and techniques have been constantly updated: from OVM to UVM, from dynamic verification to static verification, from FPGA to emulator. All of these innovations aim at the same things: fast, complete, and easy to debug. Verification touches many areas. On the one hand, verification engineers must understand the relevant protocols and algorithms well enough to design simulation vectors that meet the goals set by the architects and algorithm engineers; on the other hand, they must understand the design itself well enough to improve verification efficiency and shorten verification time. Verification engineers need to master many technologies and tools.

- Languages: Various scripting languages, C/C++, SystemVerilog, Verilog;
- Protocols: Various interface protocols, various communication protocols, various bus protocols;
- Tools: Dynamic simulation tools, static simulation tools, FPGA, emulator;


In the field of digital verification, C, S, and M almost dominate. The old donkey has not done verification for many years and knows almost nothing about the verification tools of S and M apart from VCS, Verdi, and ModelSim, so this time he uses the complete verification suite of company C as an example.

- Static Verification: JasperGold is a newly launched static verification tool from company C. The old donkey understands static verification as an assertion-based verification methodology; static means that no input test stimulus is needed, and the verification process is purely mathematical.
- Dynamic Verification: Xcelium is the dynamic verification tool from company C. The old donkey understands dynamic verification as a UVM-based verification methodology: it applies input test stimuli, monitors the simulation results, and analyzes coverage to complete functional verification.
- Emulator: A hardware emulation accelerator, simply understood as a giant programmable array with debugging functions and rich interfaces. Features: ultra-fast verification and support for system software debugging. Palladium is the star product of company C in the verification field and an industry leader. It is said that special envoys often visit the silicon factory and linger in front of Palladium, admiring its beautiful appearance and praising its powerful functions.
- Verification IP: Verification requires various verification models, various IPs, various buses, and various high-speed interfaces.
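The difference between the static and dynamic approaches can be shown on a toy design. The sketch below is not how JasperGold or Xcelium work internally; it uses a hypothetical mod-6 counter stored in 3 bits and the assertion "the count never exceeds 5". The static check explores every reachable state for every input with no stimulus at all, while the dynamic check drives random stimulus and reports how much of the state space it happened to cover.

```python
import random
from collections import deque


def next_state(count, enable):
    """Hypothetical design under test: a mod-6 counter held in 3 bits."""
    if not enable:
        return count
    return 0 if count == 5 else count + 1


def prop(count):
    """Assertion: the illegal encodings 6 and 7 are never reached."""
    return count <= 5


def static_check(reset_state=0):
    """Exhaustively explore reachable states; no test stimulus is needed."""
    seen = {reset_state}
    frontier = deque([reset_state])
    while frontier:
        state = frontier.popleft()
        if not prop(state):
            return False, seen
        for enable in (False, True):          # every possible input value
            nxt = next_state(state, enable)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return True, seen


def dynamic_check(cycles, seed=0):
    """Random stimulus plus a monitor; returns the state coverage achieved."""
    rng = random.Random(seed)
    state, visited = 0, {0}
    for _ in range(cycles):
        state = next_state(state, rng.random() < 0.5)
        assert prop(state), f"assertion failed in state {state}"
        visited.add(state)
    return len(visited) / 6


proved, reachable = static_check()
print("static: proved =", proved, "reachable =", sorted(reachable))
print("dynamic: coverage after 3 cycles =", dynamic_check(3))
```

The static result holds for all input sequences, whereas the dynamic result is only as good as its coverage, which is why coverage analysis is central to simulation-based flows.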


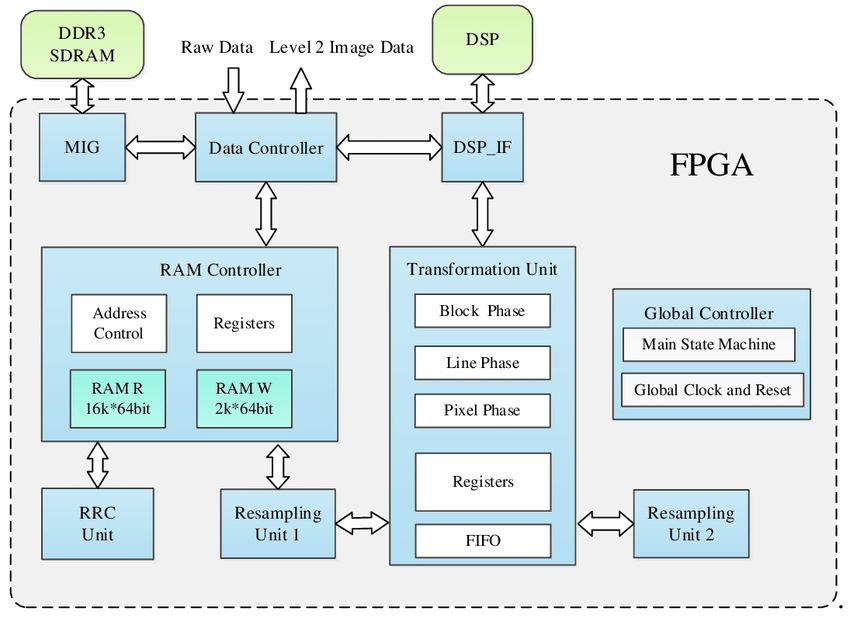

FPGAs play a significant role in verification, so they deserve a mention. There were once two impressive FPGA companies in the world, Altera and Xilinx. Later, Altera, like Mentor, found a big boss and sold itself. Besides programmable logic, an FPGA usually integrates various IPs, such as a CPU, DSP, or DDR controller. Each FPGA family comes in many configurations, and the price varies greatly with the integrated IPs, the amount of programmable logic, and the achievable speed. Like ASICs, FPGAs have their own set of EDA tools for synthesis, placement, routing, programming, and debugging, for example Synplify and Quartus.


Current situation in China: Static Verification, Dynamic Verification, Emulator are almost blank; There are some FPGA companies in China that have done very well in the mid-to-low end field, but the high-end field is almost blank. There is a long way to go, no embellishment, just practical work!

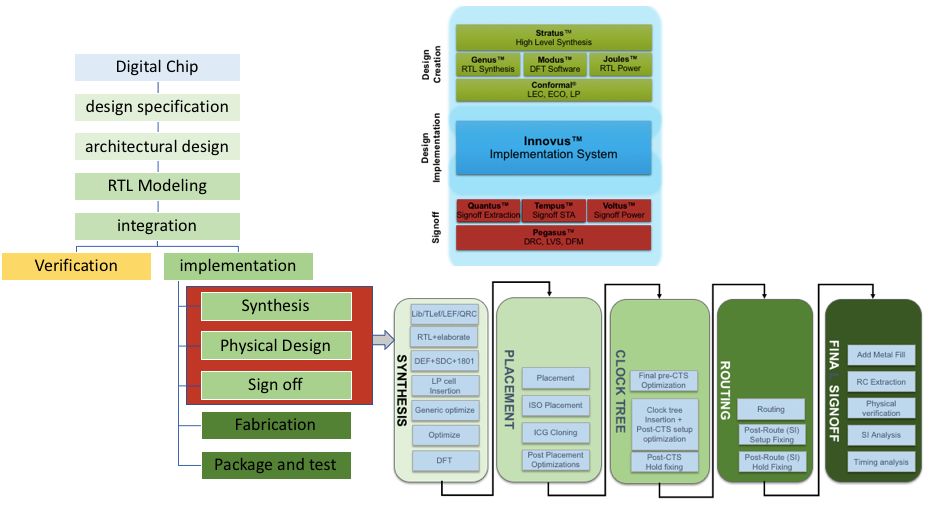

Picking up where we left off, today we enter the implementation stage of the digital chip design flow. The old donkey is really only familiar with synthesis, formal verification, low-power verification, RTL power analysis, and STA; the other parts he understands only partially, so he will not go deep and will just outline the process.


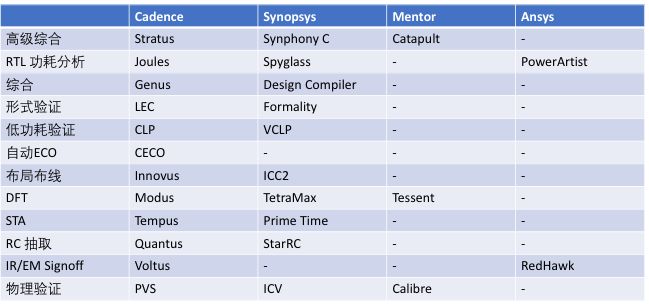

The entire implementation stage can be summarized as working with EDA tools and the methodologies built on them. EDA tools are undoubtedly the dominant force in this stage. Before implementation, the quality of a chip depends largely on the engineers’ capabilities; afterwards, it depends largely on how well the EDA tools are used. The whole flow involves many tools. Four major players are listed here; a blank entry does not mean that no tool exists, only that the old donkey does not know of one.


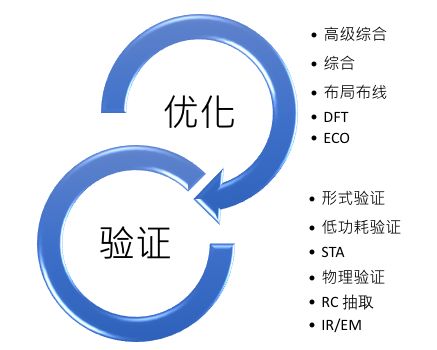

The digital circuit implementation process can be broadly divided into two parts: optimization and verification. Optimization will change the logical description method, change the logical structure, and insert new logic. All these actions carry the risk of introducing errors, so verification tools are needed for monitoring. Verification ensures that the logic optimization process does not change the logical functionality, ensures that timing meets established target requirements, ensures no physical rule violations, and ensures signal integrity. All these verifications have a corresponding set of passing rules. If any one does not meet the standard, it cannot be sent for production.


High-level synthesis: High-level synthesis (HLS) translates designs described in C/C++/SystemC into RTL described in Verilog/SystemVerilog. It is mainly used in designs dominated by computational logic. Besides the three giants, many small companies are also doing well in this area.

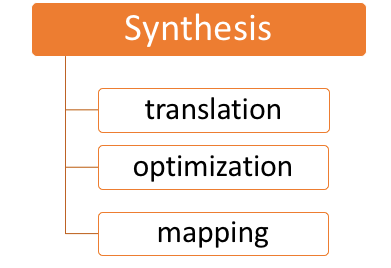

Synthesis: Within the implementation flow, synthesis is undoubtedly the hardest and most complex step in terms of underlying algorithms. Synthesis first converts logic described in Verilog/SystemVerilog/VHDL into logic described in GTECH (generic, technology-independent cells), then optimizes the GTECH logic, and finally maps the optimized GTECH description onto the target process library. The optimization involves many aspects. In recent years, the development direction of these EDA tools can be summarized as capacity, speed, and correlation. Capacity is the design size that can be handled; speed is how fast the tool optimizes; correlation is how well the results correlate with layout and routing. Mainstream tools: Genus, Design Compiler. In this area it is very hard for a newcomer to emerge unless the entire digital circuit design methodology undergoes a revolutionary change.
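The final mapping step can be illustrated with a deliberately tiny example. The sketch below assumes a hypothetical process library that contains only a 2-input NAND cell and rewrites a generic AND/OR/NOT expression onto it. Real synthesis tools optimize for timing, area, and power while mapping; this sketch does none of that and only shows the structural translation.

```python
from itertools import product


def to_nand(expr):
    """Map a generic expression tree onto 2-input NAND cells only.

    An expression is a variable name or a tuple such as ("and", x, y),
    ("or", x, y), or ("not", x).
    """
    if isinstance(expr, str):
        return expr
    op, *args = expr
    args = [to_nand(a) for a in args]
    if op == "not":
        return ("nand", args[0], args[0])
    if op == "and":
        inner = ("nand", args[0], args[1])
        return ("nand", inner, inner)          # invert the NAND to get AND
    if op == "or":                             # De Morgan: a|b = ~(~a & ~b)
        return ("nand", ("nand", args[0], args[0]),
                        ("nand", args[1], args[1]))
    raise ValueError(f"unknown operator {op!r}")


def evaluate(expr, env):
    """Evaluate either a generic or a NAND-mapped expression."""
    if isinstance(expr, str):
        return env[expr]
    op, *args = expr
    vals = [evaluate(a, env) for a in args]
    if op == "not":
        return not vals[0]
    if op == "and":
        return vals[0] and vals[1]
    if op == "or":
        return vals[0] or vals[1]
    if op == "nand":
        return not (vals[0] and vals[1])
    raise ValueError(f"unknown operator {op!r}")


generic = ("or", ("and", "a", "b"), ("not", "c"))   # technology independent
mapped = to_nand(generic)                           # NAND-only netlist
print(mapped)
```

Because mapping changes the structure of the logic, every such step has to be followed by an equivalence check, which is where formal verification comes in.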


DFT: Insert compression and decompression logic, insert scan chains, insert MBIST, insert LBIST, insert Boundary Scan, insert OCC, insert Test Points, generate ATPG patterns, and perform fault diagnosis. DFT engineers are like traditional Chinese medicine practitioners: inserting, observing, and diagnosing. Currently, there is a shortage of DFT engineers in the market, and they are expensive! Mainstream tools: Tessent, Modus, TetraMAX.
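What "generate ATPG patterns" means can be sketched with the classic stuck-at fault model on a made-up circuit, y = (a AND b) OR c. The code below is a brute-force illustration, not the algorithm of any of the tools named above: it injects a stuck-at-0 or stuck-at-1 fault on each net, finds which input patterns expose it at the output, and greedily picks a small pattern set that detects every fault.

```python
from itertools import product

NETS = ["a", "b", "c", "n1", "y"]              # n1 is the AND gate output
PATTERNS = list(product((0, 1), repeat=3))     # all (a, b, c) input patterns


def simulate(a, b, c, fault=None):
    """Simulate y = (a & b) | c; `fault` is (net, stuck_value) or None."""
    def net(name, value):
        if fault is not None and fault[0] == name:
            return fault[1]                    # the faulty net ignores its driver
        return value

    a, b, c = net("a", a), net("b", b), net("c", c)
    n1 = net("n1", a & b)
    return net("y", n1 | c)


def detects(pattern, fault):
    """A pattern detects a fault if the good and faulty outputs differ."""
    return simulate(*pattern) != simulate(*pattern, fault=fault)


def greedy_test_set():
    """Pick patterns one at a time, each covering the most remaining faults."""
    remaining = {(n, v) for n in NETS for v in (0, 1)}
    tests = []
    while remaining:
        best = max(PATTERNS,
                   key=lambda p: sum(detects(p, f) for f in remaining))
        caught = {f for f in remaining if detects(best, f)}
        if not caught:
            break                              # leftover faults are undetectable
        tests.append(best)
        remaining -= caught
    return tests, remaining


tests, undetected = greedy_test_set()
print("patterns:", tests, "undetected faults:", undetected)
```

On a real chip the inputs and outputs of such logic are buried deep inside the design, which is why scan chains are inserted to make them controllable and observable.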

ECO: Whenever new elements are introduced, bugs may be introduced. Early detection of bugs allows the implementation process to be revisited. However, if bugs are found later in the process, the cost of redoing the entire process is too high, so the usual approach is ECO. For simple bug fixes, manual ECO is sufficient, but for complex bug fixes, manual ECO may be insufficient, so EDA tools are needed to complete the corresponding work. Currently, the best automatic ECO tool on the market is Conformal ECO. Recently, some startups have also developed corresponding point tools, and their entire approach is similar to CECO, but without their own synthesis tools to optimize the patches after ECO, it is difficult to achieve good results.

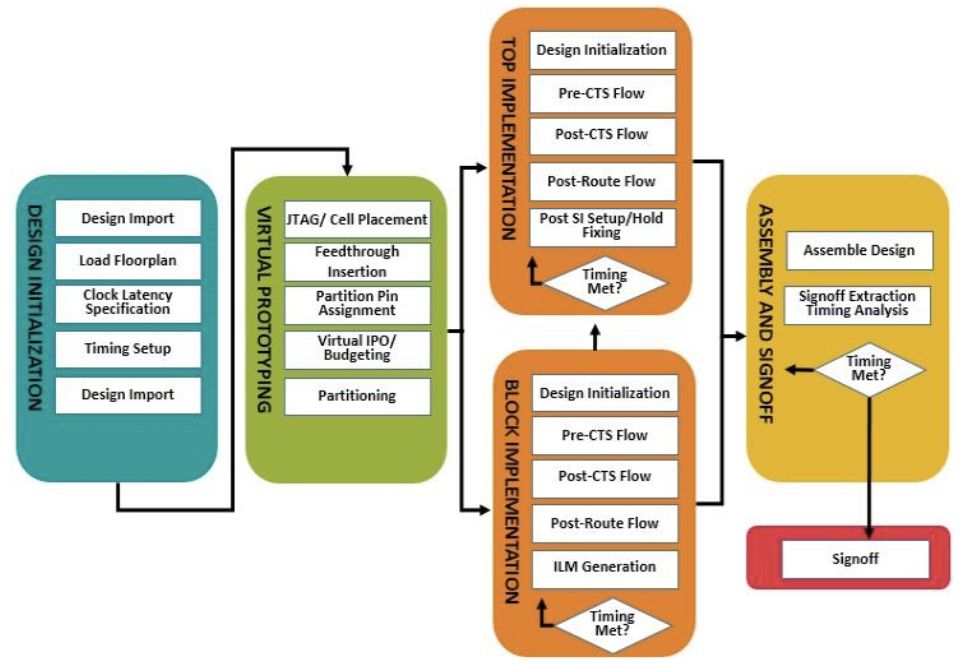

Layout and Routing: Before entering the nanometer era, layout and routing were not that complex. Starting from 90nm to the current 3nm, the complexity of layout and routing has exponentially increased. From floor planning to placement to CTS to routing, the algorithms involved in each step have undergone revolutionary innovations in recent years. With the advent of Innovus, layout and routing have entered a new era. In the wave of AI, both C and S have dived in, aiming to create the world’s smartest layout and routing tools. Perhaps one day, it will be like conversing with a virtual assistant:


- Silicon farmer: Innovus, please analyze file A and produce a power-optimized result according to the set goals;
- Innovus: Target file read, based on design data analysis, this design requires approximately 250G of memory to complete within 5 hours. Please select whether to automatically proceed to the next program upon completion of the task...


RTL Power Analysis: This step can be done during implementation or before implementation. The analysis process is relatively simple: read in RTL, SDC, simulation stimuli, and calculate the average and instantaneous power to identify “power defects” in the design, guiding the designer for power optimization. Mainstream tools include: Joules, Spyglass, PowerArtist.
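The average-power part of this calculation can be sketched with the standard switching-power relation, P = 0.5 · toggle rate · C · Vdd² · f, where the toggle rate is the number of transitions per clock cycle taken from simulation. The net names, capacitances, and toggle counts below are invented for illustration; real tools also account for internal and leakage power, which this sketch ignores.

```python
def switching_power(toggles, cycles, cap_farads, vdd, freq_hz):
    """Average switching power of one net, in watts."""
    toggle_rate = toggles / cycles            # transitions per clock cycle
    return 0.5 * toggle_rate * cap_farads * vdd ** 2 * freq_hz


# Hypothetical activity from a 1000-cycle simulation: (toggles, capacitance)
activity = {
    "clk":      (2000, 10e-15),   # a clock toggles twice per cycle
    "data_bus": (200, 40e-15),
    "idle_cfg": (2, 5e-15),
}
VDD, FREQ, CYCLES = 0.8, 1e9, 1000

power = {net: switching_power(t, CYCLES, c, VDD, FREQ)
         for net, (t, c) in activity.items()}

# Rank nets so the biggest consumers ("power defects") stand out.
for net, watts in sorted(power.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{net:10s} {watts * 1e6:8.3f} uW")
```

Ranking nets this way is what points the designer at candidates for clock gating or other power optimizations.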

Formal Verification: In the entire implementation process, formal verification acts as the inspector of logical functional equivalence. After any optimization, it is necessary to pass formal verification to ensure that the logical functionality has not changed during the optimization process. Mainstream tools: LEC, Formality. With the explosive growth of design scale and the rapid development of optimization technologies, the difficulty of formal verification has gradually increased, and the time consumed has also increased. SmartLEC is a pioneer for complex designs.
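The idea of checking logical equivalence can be shown on a three-input example. The sketch below compares a golden function with a revised one by enumerating every input combination and returns a counterexample when they differ. The functions are made up for illustration, and the enumeration is an assumption of this sketch: it only scales to a handful of inputs, which is why production equivalence checkers rely on techniques such as BDDs and SAT solving instead.

```python
from itertools import product


def find_counterexample(golden, revised, num_inputs):
    """Return an input tuple on which the two functions differ, or None."""
    for bits in product((False, True), repeat=num_inputs):
        if golden(*bits) != revised(*bits):
            return bits
    return None


def golden(a, b, c):
    return (a and b) or (a and c)     # logic as written in the RTL


def optimized(a, b, c):
    return a and (b or c)             # factored form after optimization


def buggy(a, b, c):
    return (a and b) or c             # an optimization that dropped a term


print("optimized:", find_counterexample(golden, optimized, 3))
print("buggy:    ", find_counterexample(golden, buggy, 3))
```

A result of `None` means the optimization preserved the function; any other result is a concrete input vector that the engineer can replay in simulation to debug the mismatch.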

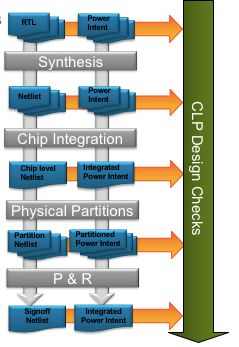

Low Power Verification: For low-power designs, low-power verification must verify the syntax semantics and descriptive intents of CPF/UPF/1801, ensuring that low-power units are not excessively inserted, missed, or incorrectly inserted, ensuring that the connections of power and ground meet design intents, and verifying the integrity of electrical characteristics. Mainstream tools: CLP.
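One of the checks described above, whether isolation cells are missing or redundant, can be sketched with a toy data model. Everything here is hypothetical: the `Crossing` record, the domain names, and the simplified rule that a signal needs isolation only when it goes from a switchable domain to a domain that is always on. Real checkers work from the CPF/UPF/1801 power intent and handle many more cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Crossing:
    signal: str      # name of the signal crossing between domains
    source: str      # power domain of the driver
    sink: str        # power domain of the receiver
    isolated: bool   # whether an isolation cell is present on the path


def check_isolation(switchable, crossings):
    """Return (missing, redundant) isolation, under the simplified rule."""
    missing = [c.signal for c in crossings
               if c.source in switchable and c.sink not in switchable
               and not c.isolated]
    redundant = [c.signal for c in crossings
                 if c.source not in switchable and c.isolated]
    return missing, redundant


switchable_domains = {"PD_GPU"}            # PD_TOP is assumed always on
crossings = [
    Crossing("gpu_irq",   "PD_GPU", "PD_TOP", isolated=True),
    Crossing("gpu_rdata", "PD_GPU", "PD_TOP", isolated=False),  # missing
    Crossing("top_cfg",   "PD_TOP", "PD_GPU", isolated=True),   # redundant
]

missing, redundant = check_isolation(switchable_domains, crossings)
print("missing isolation:  ", missing)
print("redundant isolation:", redundant)
```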


STA: Timing signoff, STA seems complicated, but it is not. Compared to the optimization process, it is much simpler. Excluding Timing ECO, all actions in STA are merely calculations rather than solving problems. An inappropriate analogy: STA is like kindergarten arithmetic problems; the addends are already there, and you just need to find the sum. In contrast, the optimization process seeks the optimal or near-optimal solution and is much more difficult. In recent years, STA EDA tools have focused on several directions: how to simulate random process variations in manufacturing, how to handle ultra-large-scale designs, and how to simulate the impact of new process nodes on timing characteristics.
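The "addends are already there" point can be made concrete. In the sketch below the delays of a small, made-up timing graph are given, and the analysis only propagates the latest arrival time through the graph in topological order and subtracts it from the required time to get setup slack. The node names, delays, clock period, and setup time are all assumptions of the example, and it needs Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter


def arrival_times(edges, start_times=None):
    """Latest arrival time at each node; `edges` maps (src, dst) -> delay."""
    start_times = start_times or {}
    preds = {}
    for (src, dst), delay in edges.items():
        preds.setdefault(dst, {})[src] = delay
        preds.setdefault(src, {})
    order = TopologicalSorter({n: set(p) for n, p in preds.items()})
    arrival = {}
    for node in order.static_order():          # predecessors come first
        if preds[node]:
            arrival[node] = max(arrival[s] + d for s, d in preds[node].items())
        else:
            arrival[node] = start_times.get(node, 0.0)
    return arrival


# Hypothetical path delays in nanoseconds between two launch flops,
# two gates, and one capture flop.
edges = {
    ("ff1", "u1"): 0.10,
    ("ff2", "u1"): 0.15,
    ("u1", "u2"): 0.20,
    ("ff2", "u2"): 0.05,
    ("u2", "ff3"): 0.10,
}
CLOCK_PERIOD, SETUP = 1.0, 0.05

arrival = arrival_times(edges)
slack = CLOCK_PERIOD - SETUP - arrival["ff3"]
print(f"arrival at ff3 = {arrival['ff3']:.2f} ns, setup slack = {slack:.2f} ns")
```

Nothing here is searched for or optimized; the hard part in real tools lies in producing accurate delays (variation, new process effects) and in handling graphs with billions of edges.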

Power Signoff: Verify whether the design’s power network is strong enough by analyzing, finding, and fixing IR-drop and electromigration (EM) problems. Mainstream tools: Voltus, RedHawk.
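A static IR-drop calculation reduces to Ohm's law along the power network. The sketch below models a single power rail as a chain of resistive segments fed from one end, with a current tap after each segment; the resistances, currents, and supply voltage are invented numbers, and real tools solve a full two-dimensional grid with dynamic currents.

```python
def rail_voltages(vdd, segment_res, tap_currents):
    """Voltage at each tap of a rail fed from one end.

    segment_res[i] is the resistance (ohms) of the segment before tap i,
    tap_currents[i] is the current (amps) drawn at tap i.
    """
    voltages = []
    voltage = vdd
    downstream = sum(tap_currents)
    for res, current in zip(segment_res, tap_currents):
        voltage -= res * downstream    # this segment carries all downstream current
        voltages.append(voltage)
        downstream -= current
    return voltages


VDD = 0.8
voltages = rail_voltages(VDD, [0.5, 0.5, 0.5], [0.01, 0.02, 0.01])
worst_drop = VDD - min(voltages)
print("tap voltages:", [round(v, 4) for v in voltages])
print(f"worst IR drop = {worst_drop * 1000:.1f} mV "
      f"({worst_drop / VDD:.1%} of VDD)")
```

The cell farthest from the supply sees the lowest voltage, so that is where a too-weak power network first shows up as a timing or functional problem.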

Physical Verification: Verify whether all transistors, vias, and routes meet the rules set by the Foundry. It is a physical task, somewhat like cleaning up after the construction of a house. Mainstream tools: Calibre, PVS, ICV.
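A single design rule check can be sketched as plain geometry. The example below applies a hypothetical minimum-spacing rule to axis-aligned rectangles on one layer; the coordinates and the 20 nm rule are made up, and shapes that touch or overlap are treated as one merged shape, which is a simplification. Real foundry rule decks contain thousands of far more intricate rules.

```python
from itertools import combinations
from math import hypot


def spacing(a, b):
    """Distance between two rectangles given as (x1, y1, x2, y2)."""
    dx = max(0, b[0] - a[2], a[0] - b[2])   # horizontal gap, 0 if overlapping
    dy = max(0, b[1] - a[3], a[1] - b[3])   # vertical gap, 0 if overlapping
    return hypot(dx, dy)


def spacing_violations(shapes, min_spacing):
    """Index pairs of distinct shapes that are closer than `min_spacing`."""
    return [(i, j)
            for (i, a), (j, b) in combinations(enumerate(shapes), 2)
            if 0 < spacing(a, b) < min_spacing]


# Three hypothetical metal wires, coordinates in nanometers.
wires = [
    (0, 0, 100, 20),
    (0, 50, 100, 70),
    (0, 80, 100, 100),   # only 10 nm above the previous wire
]
print(spacing_violations(wires, min_spacing=20))
```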

The entire digital implementation process involves numerous tools, with the three giants leading the way, and there are basically no followers behind. Occasionally, some newcomers excel in specific tools, but most are absorbed by the three giants, which is also a routine of the industry. In terms of market value, the combined value of the three giants is not as much as one leg of an internet company, yet they are indispensable in the entire chip design implementation process. For our country to strengthen its chip industry, investment in EDA must be increased to move further away from being constrained.
