Digital network audio has gradually integrated into daily work, bringing great convenience to data transmission, signal scheduling, content distribution, and other related applications. In the early development of network audio, the incompatibility of various protocols and the inability of different brand devices to communicate directly limited its application. With the advancement of technology, network audio protocols have evolved towards compatibility and interoperability. This article compares the characteristics and applicable fields of several mainstream network audio protocols, using the audio system of Jiangsu TV’s 4K broadcast vehicle as a case study to illustrate the application of network audio and provide ideas for system optimization.

1 Overview of Mainstream Network Audio Protocols

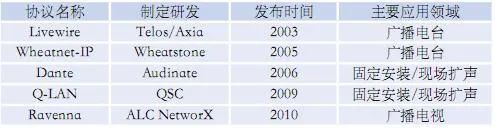

Network audio runs primarily on standard Ethernet built from general IT equipment and uses uncompressed, low-latency, high-quality transmission technology to carry high-fidelity digital audio in real time over ordinary Ethernet. By contrast, audio transmitted over the public internet relies on heavy compression, which leads to long buffering times, significant loss of audio quality, and dropped frames. Professional network audio uses sampling rates of 44.1 kHz or higher, linear quantization of 16 bits or more, and latency in the microsecond to millisecond range, without compression. Several mainstream network audio protocols launched by various companies are briefly described below and compared in Table 1.

Table 1 Comparison of Network Audio Protocols

CobraNet is an Ethernet audio transmission solution launched by Peak Audio in the United States in 1996. A pioneer in network audio, it is used primarily in media matrix systems. CobraNet uses a proprietary scheme for audio data encapsulation, transmission, and synchronization. Its core architecture is based on layer 2 network technology and requires a separate dedicated network. As a closed, proprietary protocol with a capacity in the 100 Mbit/s range, CobraNet offers no advantage in bandwidth, latency, or channel count, and it cannot interconnect with other current network audio systems.

Livewire was developed by Axia Audio in the United States and released at NAB 2003. It is a comprehensive audio network based on Ethernet that provides digital audio transmission, routing, monitoring, and synchronization, achieving low-latency and high-reliability audio transmission, mainly used in radio stations. Livewire+ is its second-generation product, fully compliant with AES67 requirements, allowing interoperability with other network audio devices.

WheatNet-IP is a proprietary network audio protocol developed by Wheatstone in the United States. It distributes audio signals intelligently to devices across an extended network and covers audio transmission, control, and various audio processing tools, so that every audio source is available to every device in a complete end-to-end solution.

Dante is an interoperable solution launched by Audinate in Australia in 2006, comprising hardware modules and chips, software tools, and development tools. It is a proprietary, non-open protocol. Audinate, which was founded by network engineers, mainly works with audio manufacturers to support their product development, providing solutions for high-performance digital media transmission systems that run on standard IP network architectures.

Q-LAN is a proprietary network audio protocol developed by QSC Audio Products in the United States in 2009 for the Q-SYS audio processing platform. It is used for audio and video distribution, device discovery, synchronization, control, and management. Q-LAN is built from a collection of general IT standard protocols and solutions and relies on core switches to ensure media stream transmission and synchronization among all connected devices. It is mainly applied in fixed installations and live sound production in cinemas and theme parks.

Ravenna is a fully open network audio protocol released in 2010 by ALC NetworX, a subsidiary of LAWO. It was designed as an open network audio solution tailored to the needs of the broadcasting industry and became the basis for AES67. AES67 specifies parameters for sampling rates, data packets, and data streams on that basis, so AES67 can be regarded as a specific parameter profile of Ravenna.

2 Standards for Interoperability of Various Protocols

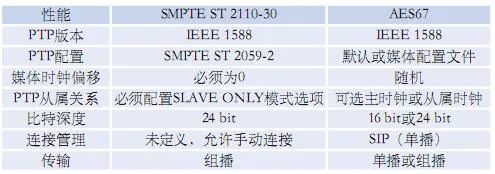

To achieve interoperability among the widely used protocols, industry organizations such as AES and SMPTE have established interoperability standards, as shown in Table 2, forming a unified standard to enhance compatibility among them.

Table 2 Comparison of SMPTE ST 2110-30 and AES67 Performance Specifications

AES67 defines the standards for interoperability under IP network architecture based on existing protocols, enabling cooperative operation of multiple audio networks. For different network audio protocols to achieve interoperability, they must adhere to the definitions and requirements of this standard, supporting corresponding protocols and functions. AES67 is not a complete network management mechanism; different protocols have their own solutions at the control layer. AES67 defines the basic contents related to network audio interaction in an open manner to achieve audio network interoperability, solving basic issues of unicast and multicast stream interfacing, allowing more protocols to be compatible with each other.

AES67 provides operational specifications for synchronization and media clocks, encoding and streaming formats, session description, and connection management. When audio streams are transmitted by multicast, AES67 keeps latency below 10 ms. It uses PTP for precise clock synchronization, with accuracy at the nanosecond level. Audio samples are packed into network packets that are sent at regular intervals, so that all devices on the network reproduce the audio samples at the same rate and at the same time. Unlike the point-to-point connection model of traditional baseband audio, AES67 uses SDP (Session Description Protocol) and SIP (Session Initiation Protocol) for connection management, which allows a distributed architecture in which any device on the network can send data to any other device.
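The regular packet interval described above fixes how many samples each packet carries. The following minimal sketch works through that arithmetic. The defaults (48 kHz, 1 ms packet time, 24-bit samples) are values commonly used with AES67; the channel counts and the function name `packet_layout` are illustrative assumptions, not figures from the broadcast vehicle.

```python
def packet_layout(sample_rate_hz=48_000, packet_time_us=1_000,
                  channels=8, bytes_per_sample=3):
    """Return (samples per channel per packet, payload bytes, packets per second)."""
    # Samples of each channel that accumulate during one packet interval.
    samples = sample_rate_hz * packet_time_us // 1_000_000
    # Audio payload only; RTP/UDP/IP/Ethernet headers are not included here.
    payload_bytes = samples * channels * bytes_per_sample
    packets_per_second = 1_000_000 // packet_time_us
    return samples, payload_bytes, packets_per_second


# 8 channels, 1 ms packets: 48 samples per channel in every packet.
print(packet_layout())                                  # (48, 1152, 1000)
# 64 channels with a hypothetical 125 us packet time: same payload, 8x the packet rate.
print(packet_layout(packet_time_us=125, channels=64))   # (6, 1152, 8000)
```

Shorter packet times lower latency but raise the packet rate, which is one reason sender and receiver must agree on these parameters before a stream can be received.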

SMPTE ST 2110 is a suite of standards for transporting professional media as separate elementary streams over a dedicated IP infrastructure. SMPTE ST 2110-10 defines the system overview and synchronization, SMPTE ST 2110-20 describes uncompressed video formats, SMPTE ST 2110-30 defines uncompressed PCM digital audio formats, and SMPTE ST 2110-40 specifies how ancillary data and other important metadata are carried over IP networks.

SMPTE ST 2110-30 is based on the AES67 standard and requires linear PCM audio encoding, carrying audio data only. It differs from AES67 in some details. For example, its PTP profile is SMPTE ST 2059-2, which constrains the clock domain, announce interval, sync interval, and other parameters. It also stipulates that audio devices provide a PTP slave-only setting, which prevents AES67 devices from becoming the master clock of the system. AES67 does not specify a redundancy scheme, yet signal safety is paramount in television production. SMPTE ST 2022-7 fills this gap with a seamless protection switching scheme that defines redundancy for IP data streams: the primary and backup networks carry the same stream simultaneously, and if one network has a transmission problem, the receiver continues in real time with the stream from the other network, so the signal is not interrupted.
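The idea behind seamless protection switching can be sketched in a few lines: the receiver accepts each RTP sequence number once, from whichever network delivers it first. This is a simplified illustration, not the ST 2022-7 receiver algorithm itself. It assumes packets arrive in order, ignores sequence number wrap-around and the reordering buffer a real receiver needs, and the function name `seamless_merge` and the sample data are hypothetical.

```python
def seamless_merge(arrivals):
    """Yield (seq, payload, path) once per sequence number, first arrival wins.

    `arrivals` is an iterable of (path, seq, payload) tuples in arrival order,
    where `path` labels the network ("A" or "B") the packet came from.
    """
    seen = set()  # a real receiver would bound this with a sliding window
    for path, seq, payload in arrivals:
        if seq in seen:
            continue  # duplicate already delivered by the other network
        seen.add(seq)
        yield seq, payload, path


# Network A drops packets 3 and 4; network B carries the same stream throughout.
arrivals = [
    ("A", 1, "p1"), ("B", 1, "p1"),
    ("A", 2, "p2"), ("B", 2, "p2"),
    ("B", 3, "p3"),
    ("B", 4, "p4"),
    ("A", 5, "p5"), ("B", 5, "p5"),
]
merged = list(seamless_merge(arrivals))
print([seq for seq, _, _ in merged])    # [1, 2, 3, 4, 5]
print([path for _, _, path in merged])  # ['A', 'A', 'B', 'B', 'A']
```

The output stream has no gap even though one network lost two packets, which is the behavior the standard is meant to guarantee.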

3 Application Case

3.1 Audio System Architecture

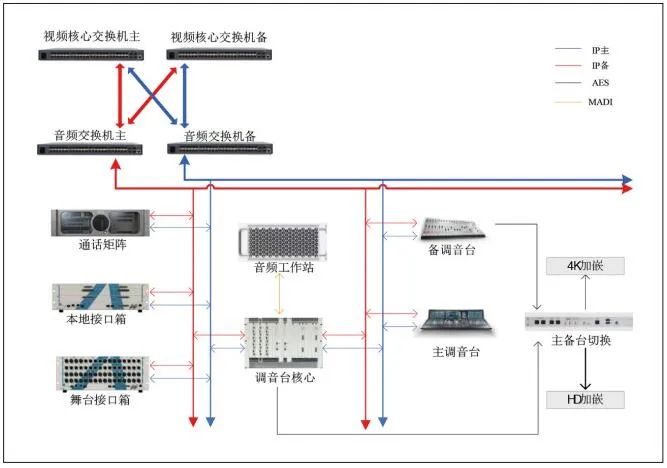

The audio system of Jiangsu Broadcasting and Television General Station’s 4K broadcast vehicle (Figure 1) consists of a switching system made up of primary and backup core IP switching equipment, with the transmitted audio data stream supporting SMPTE ST 2110-30/SMPTE ST 2022-7/AES67 standards, ensuring that audio IP streams can directly interconnect with the video core switch. The system employs two relatively independent production systems composed of primary and backup mixing consoles operating in parallel, as shown in Figure 2, retaining part of the baseband architecture to back up the IP link. The primary and backup mixing consoles output 8 channels of digital audio signals to the primary and backup switch for 4K/HD embedding. The system adopts a tree network architecture, reserving MADI baseband interfaces as backup.

Figure 1 4K Ultra HD Broadcast Vehicle Audio Control Room

Figure 2 Audio System Schematic

Considering compatibility, the primary and backup mixing consoles are from the same brand, LAWO. The primary console, an mc²56 (MKIII), is based entirely on a network audio architecture. All signal interface boxes connect to the system core, the Nova73, through an IP switch. The system primarily uses the Ravenna network audio transmission protocol, which is compatible with the various network audio interoperability standards and allows parameters to be modified as needed. The audio and video systems are interconnected over the network: audio streams from video players, slow-motion devices, microphones, and other sources are sent to the mixing console, while part of the baseband signal path is retained as backup. The Nova73 audio matrix is the core device of the mixing console, with 32 AES channels, 8 MADI ports, and 12 Ravenna ports, and it provides 256 DSP channels. The local interface box, an A-stage48, offers 16 Mic in, 16 Line out, 8 AES in/out, and dual network ports for SMPTE ST 2022-7 seamless redundancy switching. The system is also equipped with two stage interface boxes; the A-stage64 provides 32 Mic in, 16 Line out, 8 AES in/out, and two groups of network interfaces connected to the two switches for audio stream transmission.

Ruby serves as the backup mixing console. It separates the operating surface from the DSP core processor, PowerCore (Figure 3), so that a power failure of the console surface does not affect signal transmission. PowerCore has 96 input channels and can handle 80 output buses. All buttons can be customized in function and logic. The core is configured with two control network ports and two 256-channel Ravenna/AES67 ports, and it supports SMPTE ST 2022-7 seamless switching redundancy. The primary and backup consoles share audio inputs and outputs through the switching system, which allows linked gain adjustment of microphone signals. The console is used together with the VisTool control software on a PC, which connects to the DSP core over the IP network for remote control and monitoring.

Figure 3 Backup Mixing Console Core Processor

3.2 Switch Configuration and Considerations

Network audio is built on general IP technology and can run on various network topologies. Small networks typically use star or tree architectures. Large networks that span subnets adopt a leaf-spine topology, which relieves the load on upper-layer switches and makes it easier to add access ports. If the system must carry hundreds of audio channels or shares the network with other data, gigabit or faster switches must be selected; otherwise overloaded ports degrade network performance and cause packet loss, delay, and jitter. The switch should be sized for current needs while leaving room for future expansion. Mixed networks and large, heavily loaded networks typically use managed switches to support multicast, QoS, and other configuration and debugging requirements.
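A rough bandwidth estimate shows why hundreds of channels call for gigabit links. The sketch below is a back-of-the-envelope calculation under stated assumptions: 48 kHz, 24-bit audio, 1 ms packets, IPv4 without VLAN tags, and streams of 8 channels each. None of these figures is taken from the vehicle's configuration, and the function name `stream_bitrate_bps` is illustrative.

```python
# Per-packet overhead in bytes (assumed: untagged Ethernet, IPv4, no RTP extensions).
RTP, UDP, IPV4 = 12, 8, 20
ETHERNET = 14 + 4            # header plus frame check sequence
PREAMBLE_AND_GAP = 8 + 12    # preamble/SFD plus inter-frame gap on the wire
OVERHEAD = RTP + UDP + IPV4 + ETHERNET + PREAMBLE_AND_GAP  # 78 bytes


def stream_bitrate_bps(channels, sample_rate_hz=48_000, bytes_per_sample=3,
                       packet_time_us=1_000):
    """Approximate on-the-wire bit rate of one uncompressed PCM stream."""
    samples = sample_rate_hz * packet_time_us // 1_000_000
    packets_per_second = 1_000_000 // packet_time_us
    frame_bytes = samples * channels * bytes_per_sample + OVERHEAD
    return frame_bytes * 8 * packets_per_second


one_stream = stream_bitrate_bps(channels=8)
total_256_channels = 32 * one_stream  # 256 channels as 32 streams of 8
print(f"{one_stream / 1e6:.2f} Mbit/s per 8-channel stream")
print(f"{total_256_channels / 1e6:.2f} Mbit/s for 256 channels")
```

Under these assumptions a single 8-channel stream needs just under 10 Mbit/s, and 256 channels need roughly 315 Mbit/s, which already exceeds a 100 Mbit/s link before any other traffic is added.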

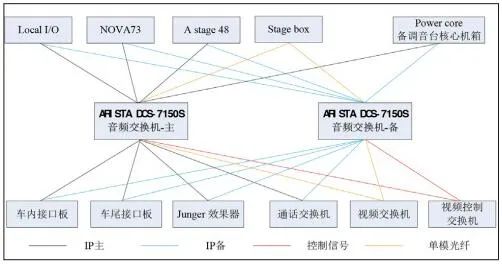

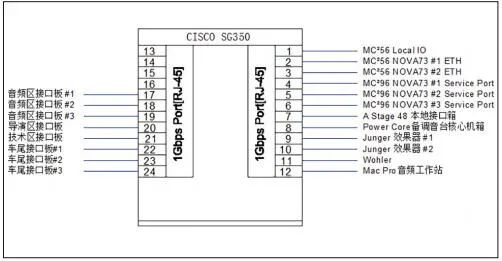

The network architecture of the 4K broadcast vehicle audio system is shown in Figure 4. Two Arista DCS-7150S switches serve as the primary and backup core switches; each has 24 gigabit ports and connects to the system devices by cable and fiber. The primary mixing console’s local interface box, the Nova73 core chassis, the stage interface box, the backup mixing console, the internal and external interface boards, and the intercom and video switches are all connected to both the primary and backup core audio switches. A Cisco SG350 is used as the control switch, with the control port of every device connected to it separately, as shown in Figure 5.

Figure 4 Network Architecture of the Audio System

Figure 5 Control Switch Configuration

One-to-many transmission typically uses multicast, and large volumes of multicast traffic need to be managed. To avoid multicast flooding, the switches must be configured for IGMP (Internet Group Management Protocol). IGMP lets hosts and routers communicate about which hosts want to receive which multicast groups, so that multicast traffic is forwarded only to registered receivers. Each VLAN should have only one switch configured as the IGMP querier, which sends queries to all hosts in the network. With IGMP snooping enabled, the switch builds a table of the ports that have requested each multicast group and forwards multicast traffic only to those ports, which prevents traffic from reaching ports that have not registered for it.
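The effect of the snooping table can be illustrated with a toy model of a switch. This is a conceptual sketch only: the class `SnoopingSwitch`, the port numbers, and the group address are hypothetical, and a real switch additionally forwards traffic toward the querier and ages out memberships that are not refreshed.

```python
from collections import defaultdict


class SnoopingSwitch:
    """Toy model of a switch that learns multicast membership per port."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.table = defaultdict(set)  # multicast group -> ports that joined

    def report(self, port, group):
        """A host on `port` sent an IGMP membership report for `group`."""
        self.table[group].add(port)

    def leave(self, port, group):
        """A host on `port` left `group`."""
        self.table[group].discard(port)

    def egress_ports(self, ingress_port, group, snooping=True):
        """Ports a multicast packet for `group` is forwarded to."""
        if not snooping:
            return self.ports - {ingress_port}  # flooding: every other port
        return self.table.get(group, set()) - {ingress_port}


switch = SnoopingSwitch(ports=range(1, 9))
switch.report(3, "239.1.1.10")
switch.report(5, "239.1.1.10")

print(sorted(switch.egress_ports(1, "239.1.1.10")))                  # [3, 5]
print(sorted(switch.egress_ports(1, "239.1.1.10", snooping=False)))  # [2, 3, 4, 5, 6, 7, 8]
```

With snooping, the stream reaches only the two ports that asked for it; without it, every port carries the traffic whether or not a receiver is attached.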

The switch’s QoS functionality provides defined service levels for applications, improving bandwidth utilization and optimizing traffic management. Used properly, QoS ensures that critical packets are forwarded first. Network audio QoS uses DiffServ, which provides 64 possible DSCP values; each IP packet is marked with the value for its traffic category. The switch checks the marking and forwards packets according to the configured priority. Typically, PTP traffic is marked for expedited forwarding and receives the highest priority, audio packets are marked with the second-highest priority, and the remaining traffic is marked with lower priority or left unmarked. Devices assign default DSCP values, which can also be reconfigured as needed.
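The marking scheme described above can be made concrete with a short sketch. The class assignment used here (EF for PTP, AF41 for audio, default forwarding for everything else) follows values commonly cited as AES67 defaults, but it is an assumption: as the text notes, devices ship with their own values and some ecosystems use different ones. The strict ordering by DSCP number is also a simplification, since a real switch maps DSCP values to queues through configuration.

```python
# Standard DSCP code points (6-bit values).
DSCP = {"EF": 46, "AF41": 34, "DF": 0}

# Assumed traffic classification for this sketch.
CLASS_OF = {"ptp": "EF", "audio": "AF41", "other": "DF"}


def tos_byte(dscp):
    """DSCP occupies the upper six bits of the IP TOS / Traffic Class byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in 0..63")
    return dscp << 2


def forwarding_order(kinds):
    """Strict priority: higher DSCP first, arrival order kept within a class."""
    return sorted(kinds, key=lambda kind: -DSCP[CLASS_OF[kind]])


queue = ["other", "audio", "ptp", "audio"]
print(forwarding_order(queue))         # ['ptp', 'audio', 'audio', 'other']
print(hex(tos_byte(DSCP["EF"])))       # 0xb8
print(hex(tos_byte(DSCP["AF41"])))     # 0x88
```

The TOS byte values are what appear in a packet capture, which makes them a convenient way to verify that devices and switches agree on the markings.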

3.3 Signal Transmission and System Synchronization

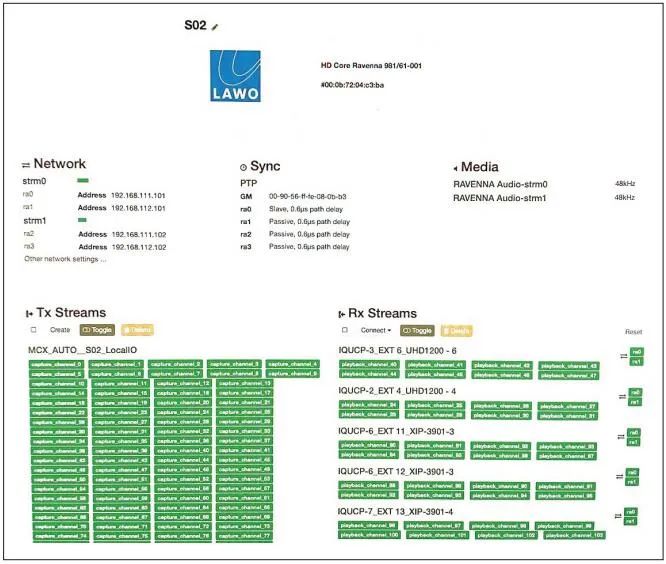

The 4K broadcast vehicle audio system uses the open network audio transmission protocol Ravenna, with all send and receive stream parameters open to users for configuration as needed. Users reach the stream configuration interface by entering the device’s IP address in a browser, as shown in Figure 6. Each device’s two network ports have independent MAC and IP addresses and act as two independent network interfaces; their connection status and transmission rates are displayed on the web page. To send an audio stream from a Ravenna device, the user selects the appropriate format, including the payload information, multicast address, number of channels, samples per channel, encoding method, and other parameters.

After an audio stream is created, it must be announced on the network so that other devices can discover and fetch it automatically. The AES67 standard does not explicitly specify a discovery method; publishing through both mDNS and SAP is recommended. Ravenna devices support both announcement protocols, while Dante devices require SAP: a stream must be announced via SAP to appear in Dante Controller. If neither announcement protocol is selected, automatic discovery will not occur. On the receive side, users can select streams announced via mDNS or SAP and check stream status through color indicators. If automatic discovery fails, the stream name, channel count, multicast address, and other parameters must be supplied through an SDP (Session Description Protocol) file before the stream can be received. SMPTE ST 2110 systems make extensive use of SDP files to receive audio streams.

Figure 6 Ravenna Audio Stream Configuration Interface
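When a stream has to be entered manually, the SDP file carries the parameters a receiver needs. The sketch below shows what such a description might look like and how the key fields can be read out. Every value in `SDP_TEXT` (addresses, stream name, port, payload type, clock identity) is a made-up example, and `parse_sdp` is a minimal illustrative parser that handles only the lines shown, not a complete SDP implementation.

```python
# Hypothetical SDP description of an 8-channel, 24-bit, 48 kHz multicast stream.
SDP_TEXT = """\
v=0
o=- 1 1 IN IP4 192.168.10.21
s=Stagebox-Mic-1-8
c=IN IP4 239.69.1.10/32
t=0 0
m=audio 5004 RTP/AVP 98
a=rtpmap:98 L24/48000/8
a=ptime:1
a=ts-refclk:ptp=IEEE1588-2008:00-11-22-FF-FE-33-44-55:0
a=mediaclk:direct=0
"""


def parse_sdp(text):
    """Extract the fields a receiver needs to subscribe to the stream."""
    info = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition("=")
        if key == "s":                                   # session (stream) name
            info["name"] = value
        elif key == "c":                                 # connection: address/ttl
            info["address"] = value.split()[2].split("/")[0]
        elif key == "m":                                 # media line: type, port, ...
            info["port"] = int(value.split()[1])
        elif key == "a" and value.startswith("rtpmap:"):
            encoding, rate, channels = value.split(" ", 1)[1].split("/")
            info.update(encoding=encoding, sample_rate=int(rate),
                        channels=int(channels))
        elif key == "a" and value.startswith("ptime:"):  # packet time in ms
            info["ptime_ms"] = float(value.split(":", 1)[1])
    return info


stream = parse_sdp(SDP_TEXT)
print(stream["name"], stream["address"], stream["port"])
print(stream["encoding"], stream["sample_rate"], stream["channels"], stream["ptime_ms"])
```

The `ts-refclk` and `mediaclk` attributes, ignored by this parser, tie the stream to its PTP reference clock, which is why sender and receiver must be in the same clock domain.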

The system uses two SPG8000 units as master clock generators. They receive GPS synchronization signals, convert them into PTP clocks, and connect to the primary and backup switches, which distribute PTP to all network devices. They also output black burst (BB), Word Clock, and other synchronization signals for audio and video devices that need baseband references. Depending on the application scenario, a PTP port is in the Master, Slave, or Passive state. The jitter reading indicates the stability of the PTP clock; when jitter is large or the clock is visibly unstable, the network needs to be optimized. A PTP subnet represents one PTP clock domain. Each synchronized device can be configured in only one clock domain, and different clock domains do not affect each other. Each clock domain has its own PTP master clock, and the clocks of all other devices in the domain are slaves to it. To keep the synchronization path from forming a loop, only one port of a device can be in the Slave state; its other ports remain locked but are not used for synchronization. The best master clock algorithm (BMCA) automatically ranks clocks by their announced priority and quality attributes and determines the state of each port.
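The ranking step of the best master clock algorithm can be sketched as a field-by-field comparison in which lower values win. The field order below follows the dataset comparison in IEEE 1588-2008, but the sketch omits the topology tie-breaks (such as steps removed) that decide port states in a real network. The clock names and all attribute values are hypothetical and do not describe the vehicle's actual settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClockDataset:
    name: str
    priority1: int       # administrator-set, compared first
    clock_class: int     # e.g. 6 = locked to a primary reference, 248 = default
    clock_accuracy: int
    variance: int        # offsetScaledLogVariance
    priority2: int       # administrator-set tie-breaker
    identity: str        # unique clock identity, final tie-breaker

    def rank(self):
        """Comparison key: the lowest tuple is the best clock."""
        return (self.priority1, self.clock_class, self.clock_accuracy,
                self.variance, self.priority2, self.identity)


def best_master(clocks):
    """Return the clock that would win the election among `clocks`."""
    return min(clocks, key=ClockDataset.rank)


gm_main = ClockDataset("gm_main", 128, 6, 0x21, 0x4E5D, 127, "00-11-22-ff-fe-00-00-01")
gm_backup = ClockDataset("gm_backup", 128, 6, 0x21, 0x4E5D, 128, "00-11-22-ff-fe-00-00-02")
console = ClockDataset("console", 128, 248, 0xFE, 0xFFFF, 128, "00-11-22-ff-fe-00-00-03")

print(best_master([console, gm_backup, gm_main]).name)  # gm_main
print(best_master([console, gm_backup]).name)           # gm_backup
```

In this example both generators are GPS-locked and differ only in `priority2`, so the main unit wins while it is present and the backup takes over when it disappears. A device set to slave-only never enters this election, which is how an audio device is kept from becoming master.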

4 Summary and Outlook

According to statistics from the audio consulting firm RH Consulting, there are already thousands of network audio products in the professional audio field, and the number continues to grow as new products are introduced. These products are based on different network protocol standards, with the share supporting interoperability standards continually increasing and the different standards moving towards compatibility.

Currently, all audio devices, including microphones, mixing consoles, audio processors, power amplifiers, intercoms, etc., have corresponding networked products, enabling full-link network audio transmission for audio systems. Among these, the category with the most products is I/O interface devices, which convert analog or digital audio formats into network audio formats. This category can be considered transitional products that will gradually decrease as more audio devices become networked, eliminating the need for separate devices to convert other format audio signals into network audio formats. In the future, every device will be a terminal assigned by the IP system, capable of operating on any network.

Excerpt from “Performance Technology”, 2021 Issue 9: Ge Jian, “Analysis of Network Audio Protocols and Their Application in 4K Broadcast Audio Systems”. Please cite: Performance Technology Media. For more details, please refer to “Performance Technology”.

