Source: Chip Theory

Introduction: Today’s CPUs and SoCs typically integrate multiple CPU cores on a single chip, giving what are commonly called 4-core, 8-core, or higher-core-count CPUs or SoCs. Why adopt this approach? How do multiple CPU cores work together? Are more CPU cores always better? With these questions in mind, I consulted some materials, learned the relevant concepts and key points, and wrote this article in an attempt to answer these technical questions in layman’s terms. On one hand, this article serves as a learning record for myself; on the other, I hope it offers some reference value for readers. Any inaccuracies are welcome for discussion and correction.

To explain what a multi-core CPU or SoC chip is, we must first start with the CPU core. CPU stands for Central Processing Unit; it controls and processes information and serves as the control center of computers and smart devices. If we strip away the packaging and auxiliary circuits (such as pin interface circuits, power circuits, and clock circuits) from a traditional CPU chip and keep only the core circuitry that performs control and information processing, what remains is the CPU core, often simply called a core. A CPU core is essentially a fully independent processor that can fetch instructions from memory and carry out the control and computation tasks those instructions specify.

If a CPU core and its related auxiliary circuits are packaged into a chip, this chip is a traditional single-core CPU chip, referred to as a single-core CPU. If multiple CPU cores and their related auxiliary circuits are packaged into a chip, this chip is a multi-core CPU chip, referred to as a multi-core CPU. Of course, multi-core CPU chips will include more auxiliary circuits to address communication and coordination issues between multiple CPU cores.

If some other functional components and interface circuits are further integrated into a multi-core CPU chip, it forms a complete system, and this chip becomes a multi-core SoC chip, referred to as a multi-core SoC. In a loose sense, SoCs can also be referred to as CPUs.

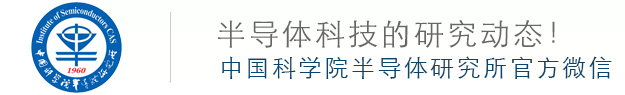

Figure 1 illustrates single-core and multi-core CPUs using ARM as an example. The parts marked with red dashed lines are individual CPU cores. Figure 1a is a schematic diagram of the ARM Cortex-A8 single-core CPU chip, which implements the ARMv7 architecture. Figures 1b and 1c are schematic diagrams of the ARM Cortex-A9 MPCore with 2 and 4 Cortex-A9 cores, that is, 2-core and 4-core CPU chips, respectively.

Figure 1. Schematic Diagram of ARM Single-Core and Multi-Core CPU Chips

The ARM Cortex-A8 was the first application processor based on the then-new ARMv7 architecture. It supports Thumb-2 technology, which improves performance, power efficiency, and code density, and it is available only as a single-core design. The first company to license the Cortex-A8 was Texas Instruments, followed by Freescale, Matsushita, and Samsung, among others. Products built on the Cortex-A8 include the MYS-S5PV210 development board, the TI OMAP3 series, the Apple A4 processor (iPhone 4), the Samsung S5PC110 (Samsung I9000), and the Rockchip RK2918. Qualcomm’s MSM8255 and MSM7230 use Qualcomm’s own Scorpion cores, which implement the same ARMv7 architecture, so they are better regarded as comparable designs than as derivatives.

The ARM Cortex-A9 MPCore also belongs to the Cortex-A series and is likewise based on the ARMv7-A architecture. It can be configured with 1 to 4 CPU cores, and for a period most 4-core mobile CPUs on the market were Cortex-A9 designs. Products built on the Cortex-A9 include the Texas Instruments OMAP 4430/4460, NVIDIA Tegra 2 and Tegra 3, Nufront NS115, Rockchip RK3066, MediaTek MT6577, Samsung Exynos 4210 and 4412, and Huawei K3V2. Qualcomm’s APQ8064 and MSM8960 (Krait cores) and Apple’s A6 and A6X (Swift cores) are custom ARMv7 designs in a similar class rather than modified Cortex-A9s.

1. The Development History of Multi-Core CPUs

The initial motivation for multi-core CPUs comes from the simple principle that “many hands make light work.” From this perspective, back when chip integration levels were low, the Intel 8086 CPU and the i8087 coprocessor can be considered a prototype of the multi-core CPU: multiple chips cooperated to form one processing core, and many techniques were needed to coordinate the CPU and the coprocessor.

Today, chip integration is very high, and integrating several or even dozens of CPU cores on a single chip is no longer a challenge. However, it still cannot meet the needs of supercomputing, which requires thousands of high-performance CPU chips to work together. This can be viewed as a multi-core CPU cluster with multiple cores inside the chip and multiple chips outside.

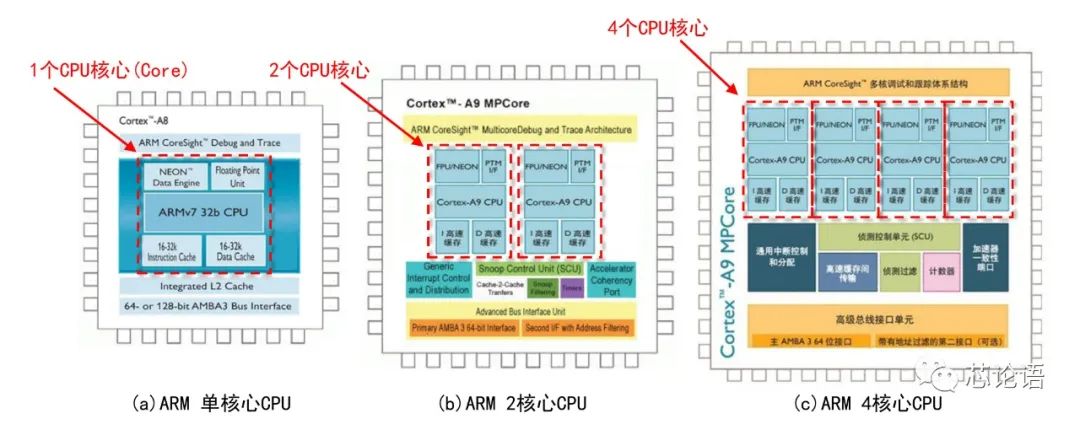

From the outside, a CPU chip appears as a single chip, but upon opening the package, it may contain only one die, or it may be multiple dies packaged together, known as a Multichip Module (MCM), as shown in Figure 2b. However, from a software perspective, the form of packaging is irrelevant; what matters is the number of CPU cores, which determines the system’s parallel computing and processing capabilities. The clock frequency and the communication methods between cores determine the system’s processing speed.

Figure 2. Schematic Diagram of Single Die Packaging, Multi-Die MCM Packaging, and Multi-Chip Systems (Source: Reference Material 14)

Moreover, today’s desktop CPUs and mobile SoCs also integrate many Graphics Processing Unit (GPU) cores, AI processing cores (often called Neural Processing Units, NPUs), and so on. Should these also count as “cores” of a multi-core CPU or SoC? I believe that, in a broad sense, they should.

Therefore, to review the development of multi-core CPUs, it can be roughly divided into the following stages: 1. Prototype stage; 2. Single-chip single-core; 3. Single-chip multi-core; 4. Multi-core single-chip; 5. Multi-core multi-chip. These development stages do not necessarily follow this order; there may be overlapping periods, and the order may be reversed. The second and third cases are generally applied to CPU chips in desktop computers and mobile terminals such as smartphones, while the fourth and fifth cases are applied to CPU chips in servers and supercomputers. Due to space limitations and the need for thematic focus, this article mainly discusses the third case of single-chip multi-core, where the CPU operates in the Chip Multi-Processor (CMP) mode.

From 1971 to 2004, single-core CPUs had the field to themselves. Intel launched the world’s first microprocessor, the i4004, in 1971, and single-core designs remained the norm through the Hyper-Threading Pentium 4 series of 2004, a span of 33 years. During this period, CPU chips developed along the trajectory predicted by Moore’s Law: integration kept doubling, clock frequencies kept rising, and transistor counts grew rapidly, as single-core CPUs went through generation after generation of upgrades.

However, as soaring transistor counts drove power consumption sharply upward, chips ran unbearably hot and reliability suffered, so the single-core path seemed to reach a dead end. Gordon Moore, who formulated Moore’s Law, also sensed that the path of “shrinking feature sizes” and “clock frequency above all” was nearing its end. In April 2005, he publicly stated that Moore’s Law, which had guided the chip industry for nearly 40 years, would stop holding within 10 to 20 years.

In fact, as early as the late 1990s, many industry professionals called for using CMP technology to realize multi-core CPUs to replace single-thread single-core CPUs. High-end server manufacturers such as IBM, HP, and Sun successively launched multi-core server CPUs. However, due to the high cost and narrow application of server CPU chips, they did not attract widespread attention from the public.

Meanwhile, AMD had already seized the initiative with its 64-bit CPUs, and only after Intel had publicly vouched for the stability and compatibility of its own 64-bit CPUs did it turn to “multi-core” as the weapon for a counterattack. In April 2005, Intel hastily launched the dual-core Pentium D and the Pentium Extreme Edition 840, both relatively simple designs that paired two Pentium 4-class cores. Shortly thereafter, AMD also released dual-core Opteron and Athlon CPU chips.

2006 is considered the first year of multi-core CPUs. On July 23 of that year, Intel released its first CPUs based on the Core microarchitecture. In November, Intel also launched the Xeon 5300 series and the Core 2 dual-core and quad-core Extreme Edition CPUs for servers, workstations, and high-end PCs. According to Intel, compared with the previous generation of desktop CPUs, the Core 2 Duo improved performance by 40% while cutting power consumption by 40%.

In response to Intel, on July 24, AMD announced a significant price reduction for the dual-core Athlon 64 X2 processor. Both CPU giants emphasized energy-saving effects when promoting multi-core CPUs. The low-voltage version of Intel’s quad-core Xeon CPU had a power consumption of only 50 watts, while AMD’s “Barcelona” quad-core CPU did not exceed 95 watts. According to Intel’s senior vice president Pat Gelsinger, Moore’s Law still has vitality, as “the transition from single-core to dual-core to multi-core may represent the fastest period of performance improvement for CPU chips since the advent of Moore’s Law.”

CPU technology has advanced faster than software, and software support for multi-core CPUs has lagged behind. Without operating system support, the performance advantages of multi-core CPUs cannot be realized. For example, under Windows 7 the difference in user experience between 4-core and 8-core CPUs is not obvious, because Windows 7 lacks optimization for 8-core CPUs. After Windows 10 was released, 8-core CPUs delivered a noticeably faster experience than 4-core processors, thanks to the multi-core optimizations Microsoft made in Windows 10, and Microsoft is expected to continue this work.

Among current server CPUs, the Intel Xeon Platinum 9282 has 56 cores and 112 threads, a BGA package with 5,903 solder balls, and an estimated price of about $40,000; the AMD EPYC 7H12 goes further, with 64 cores and 128 threads and a thermal design power of 280W. Both of these CPUs require liquid cooling. On the desktop, the Intel Core i9-7980XE Extreme Edition has 18 cores and 36 threads, a thermal design power of 165W, and a price of $1,999; AMD’s Ryzen 9 5950X has 16 cores and 32 threads, a thermal design power of 105W, and a launch price of $799. Leading mobile-class SoCs such as the Apple M1, Kirin 9000, and Qualcomm Snapdragon 888 each have 8 CPU cores. Multi-core CPUs and SoCs have clearly become the trend, but are more cores always better? Setting other factors aside and looking purely at technology and integration, some even predict that by 2050 we may be using CPU chips with 1024 cores.
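One standard way to reason about whether more cores help is Amdahl’s law: if only a fraction p of a program’s work can run in parallel, the ideal speedup on n cores is 1 / ((1 - p) + p / n). The sketch below ignores scheduling and communication overhead, and the parallel fractions are illustrative values, not measurements of any Windows version or application.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when `parallel_fraction` of the work
    can be parallelized (Amdahl's law; all overheads are ignored)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # part that always runs on one core
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative parallel fractions (assumed, not measured).
    for p in (0.5, 0.9, 0.99):
        s4 = amdahl_speedup(p, 4)
        s8 = amdahl_speedup(p, 8)
        print(f"p={p:.2f}: 4 cores -> {s4:.2f}x, 8 cores -> {s8:.2f}x")
```

With p = 0.5, going from 4 to 8 cores raises the ideal speedup only from 1.6x to about 1.78x, while with p = 0.99 it nearly doubles. This is one way to read the observation above that software, not the core count alone, decides whether extra cores pay off.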

2. Examples of Multi-Core CPUs and SoC Chips

Examples of chips include: 1. Multi-core CPU chip for servers: Intel Xeon W-3175X; 2. Multi-core CPU chip for desktop PCs: Intel Core i7-980X; 3. Multi-core SoC chip for smartphones: Huawei Kirin 9000/E; 4. Multi-core CPU chip for ARM architecture PCs: Apple M1; 5. Multi-core AI CPU chip compatible with x86 architecture: VIA CHA; 6. Domestic multi-core server CPU chip: Tianyun S2500.

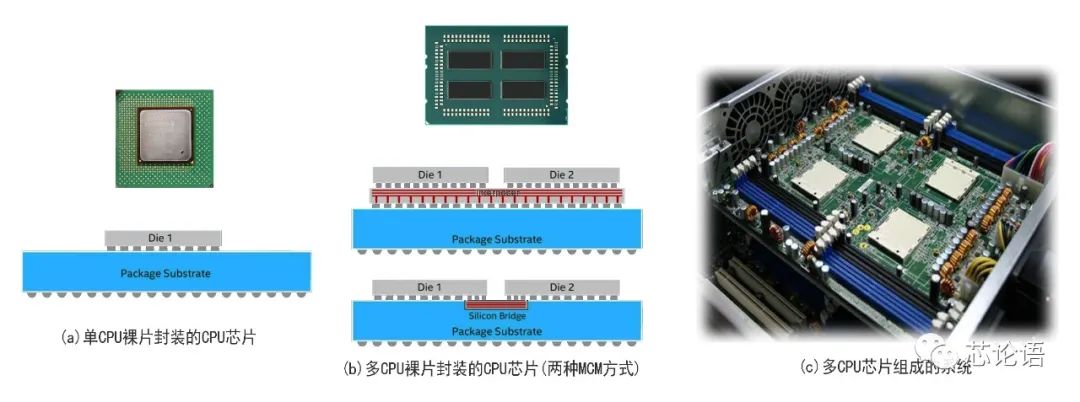

1. Intel Xeon W-3175X: Launched by Intel in 2018, the Xeon W-3175X is manufactured on a 14nm process and has 28 cores and 56 threads, a base frequency of 3.1GHz with turbo up to 4.3GHz, and 38.5MB of L3 cache. It supports up to 512GB of six-channel DDR4-2666 ECC memory, uses the LGA3647 socket, and pairs with the C621 chipset. Its price is as high as $2,999, equivalent to over 20,000 RMB.

The chip uses a new 6×6 mesh architecture, with I/O along the top and the memory channels in the middle of the left and right sides, and it accommodates up to 28 CPU cores. L2 cache is quadrupled (each core’s L2 grows from 256KB to 1MB), while the shared L3 cache is reduced but used more efficiently.

Figure 3. Architecture Diagram of Intel Xeon W-3175X with 28 Cores (Source: Reference Material 11)

The Xeon W-3175X is the top configuration of this architecture, with all 28 CPU cores enabled. The cost is extremely high power consumption and heat: its nominal thermal design power is 255W, measured power at default frequencies easily reaches 380W, and it exceeds 500W when overclocked. High-end liquid cooling is therefore a must for daily use. When Intel released the Xeon W-3175X, it specifically recommended the Asetek 690LX-PN all-in-one liquid cooler, which at the time was the only cooling solution designed for the W-3175X. Asetek claims a maximum cooling capacity of 500W, so unless the chip is heavily overclocked, this cooler should handle the W-3175X’s heat adequately.

Figure 4. Asetek 690LX-PN Liquid Cooling Solution (Source: Reference Material 13)

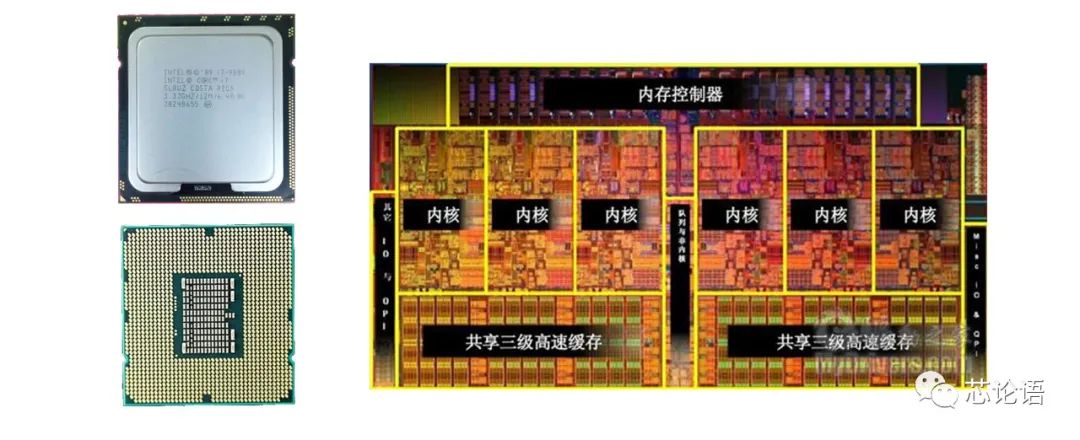

2. Intel Core i7-980X: Built on a 32nm process with the Gulftown core, the Intel Core i7-980X is the first 6-core CPU Intel launched for the desktop PC market. Compared with the earlier Bloomfield-core CPUs, it has a more advanced process, more cores, and a larger cache while remaining backward compatible; strictly speaking, it is a derivative of the Nehalem microarchitecture. Notably, it still uses the LGA 1366 socket, so users can upgrade existing X58 motherboards with just a BIOS update and enjoy 6 cores and 12 threads on the new 32nm CPU. It has all the hallmarks of Intel’s high-end desktop CPUs, including Hyper-Threading, Turbo Boost, a triple-channel DDR3 memory controller, and a three-level cache, and because the Core i7-980X is unlocked, overclockers can push its limits more easily.

Figure 5. Architecture Diagram of Intel Core i7-980X with 6 Cores (Source: Reference Material 5)

3. Huawei Kirin 9000: The Huawei Mate40 smartphone uses Huawei’s self-developed Kirin 9000 chip, which at its launch was among the most powerful 5G multi-core SoCs. As shown in the figure below, the chip integrates 8 CPU cores, 3 NPU cores, and 24 GPU cores, and it is manufactured on a 5nm process with 15.3 billion transistors. In performance tests, it significantly outperforms MediaTek’s strongest 5G mobile chip, Dimensity 2000. Unfortunately, the unreasonable blockade by the United States has cut off Huawei’s production channels for high-end smartphone chips, so the flagship Mate40 series may become a rare edition.

Figure 6. Multi-Core Architecture Diagram of Huawei’s Strongest 5G Mobile Chip Kirin 9000

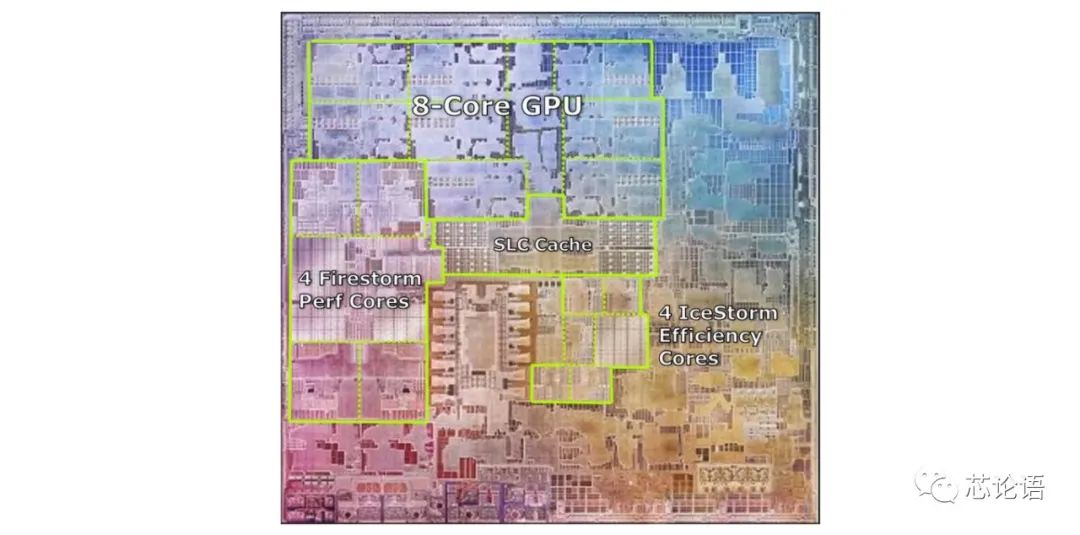

4. Apple M1: The following image shows the layout of the Apple M1, the first SoC Apple developed in-house for Mac computers. It has 8 CPU cores (4 high-efficiency Icestorm cores and 4 high-performance Firestorm cores) plus an 8-core GPU, making it exceptionally powerful. The chip is manufactured on a 5nm process and integrates 16 billion transistors. Apple introduced a new SoC naming scheme with this processor series, starting with the Apple M1.

Figure 7. Layout of Apple’s Self-Developed Mac Computer SoC Chip Apple M1 (Source: Reference Material 19)

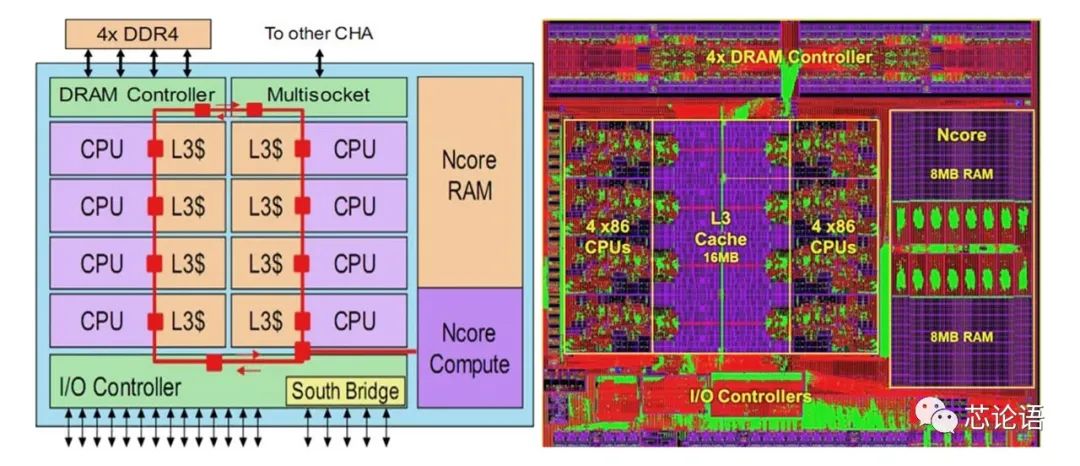

5. VIA CHA: VIA’s latest x86-based AI processor is an 8-core SoC, manufactured using TSMC’s 16nm process, with a chip area of no more than 195 square millimeters. It adopts a ring bus design, integrating eight x86 CPU cores, a 16MB shared L3 cache, a four-channel DDR4-3200 memory controller, PCIe 3.0 controller (44 lanes), southbridge, and I/O functions, making it a complete SoC. Reportedly, this chip is temporarily named CHA.

Figure 8. Architecture (Left) and Die Layout (Right) of VIA’s 8-Core AI Processor (Source: Reference Material 15)

6. Tianyun S2500: Reportedly, the domestic CPU maker Feiteng (Phytium) released a multi-core server CPU called the Tianyun S2500 in July. The chip is manufactured on a 16nm process with a die area of 400mm² and can be configured with up to 64 FTC663 CPU cores running at 2.0 to 2.2GHz, a 64MB L3 cache, and eight-channel DDR4 memory. Four direct-connect ports provide 800Gbps of bandwidth and support 2- to 8-way multi-socket configurations, so a single system can offer 128 to 512 CPU cores; each chip has a thermal design power of 150W. At the Feiteng 2020 Ecological Partner Conference, 15 domestic manufacturers, including Great Wall, Inspur, Tongfang, Dawning, and ZTE, also released multi-socket server products based on the Tianyun S2500, a welcome breakthrough in building the software ecosystem.

Figure 9. Feiteng Company’s 64-Core CPU Chip Tianyun S2500 (Source: Online Image)

3. Why Use Multiple Cores?

Let’s first look at this from the perspective of task processing. Think of the work a CPU handles as tasks. Early CPUs had only one core and processed one task at a time, finishing one before moving on to the next; this is called serial single-task processing. It suited the DOS era, when the only goal for CPUs was to maximize processing speed. With the arrival of Windows came the demand for multitasking, which required CPUs to handle multiple tasks at the same time; this is called time-sharing multitasking. During this period, the goal for CPUs was not only to maximize processing speed but also to maximize the number of tasks that could be handled concurrently. In essence, this kind of “multitasking” divides the core’s time among several tasks: more tasks make progress, but each individual task runs more slowly.
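The trade-off of time-sharing can be illustrated with a small round-robin simulation. It is a minimal sketch that assumes a single core, a fixed time slice, and zero context-switch cost; the task names and durations are hypothetical. The total work is the same either way, but a task that would finish early under serial processing finishes later once it shares the core.

```python
from collections import deque


def serial_finish_times(tasks):
    """Run tasks one after another on one core; return {name: finish_time}."""
    clock = 0
    finish = {}
    for name, work in tasks:
        clock += work
        finish[name] = clock
    return finish


def round_robin_finish_times(tasks, time_slice=1):
    """Time-share one core among tasks in fixed slices; return {name: finish_time}."""
    queue = deque(tasks)
    clock = 0
    finish = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)
        clock += run
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # back of the line for its next slice
        else:
            finish[name] = clock
    return finish


if __name__ == "__main__":
    jobs = [("A", 3), ("B", 3), ("C", 3)]  # hypothetical (name, units of work)
    print("serial:     ", serial_finish_times(jobs))
    print("round robin:", round_robin_finish_times(jobs))
```

Here task A finishes at time 3 when run serially but at time 7 under round robin, while all three tasks still finish by time 9 in both cases.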

To achieve more tasks and faster processing speed, people naturally thought of integrating multiple CPU cores on a chip, adopting a “multi-core multi-tasking” approach to handle transactions, leading to the demand for multi-core CPUs, which is especially pressing in server CPU applications.
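A sketch of why extra cores help when there are several independent tasks: the function below greedily hands each task to whichever core becomes free first and reports when the last task finishes. It assumes independent tasks, no migration, and no scheduling overhead; the function name and task durations are hypothetical.

```python
import heapq


def makespan(task_durations, cores):
    """Assign each task to the earliest-free core (greedy list scheduling)
    and return the time at which the last task finishes."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    free_at = [0] * cores  # min-heap: time at which each core becomes free
    heapq.heapify(free_at)
    for duration in task_durations:
        start = heapq.heappop(free_at)  # earliest-available core
        heapq.heappush(free_at, start + duration)
    return max(free_at)


if __name__ == "__main__":
    jobs = [3, 3, 3, 3]  # hypothetical task durations
    for n in (1, 2, 4, 8):
        print(f"{n} core(s): all tasks done at t={makespan(jobs, n)}")
```

Eight cores finish no sooner than four in this example, because there are only four tasks to spread out.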

Next, let’s look at this issue from the perspective of increasing CPU clock frequency to accelerate processing speed. Whether it is “single-tasking,” “multi-tasking,” or “multi-core multi-tasking,” increasing the CPU’s clock frequency will accelerate its processing speed. Whether single-core or multi-core, the CPU clock frequency is an important indicator for selecting CPU chips.

For a long time, as Intel and AMD CPUs became faster and faster, the performance and speed of software on x86 operating systems naturally improved. System integrators could reap the benefits of overall performance improvements by making slight adjustments to existing software.

However, as chip technology advanced along Moore’s Law, rising integration, transistor density, and clock frequency drove power consumption up directly, and heat dissipation became a barrier that could not be overcome. By one estimate, every 1GHz increase in CPU clock frequency adds about 25 watts of power consumption, and once a chip exceeds 150 watts, conventional air cooling can no longer keep up. Around 2003, Intel’s 3.4GHz Pentium 4 Extreme Edition had a maximum power consumption of 135 watts, earning it the nickname “electric stove,” and some people even used it to cook eggs. Today, the server CPU Xeon W-3175X has a nominal power consumption of 255W; measured power at default frequencies can reach 380W and exceeds 500W when overclocked, so high-end liquid cooling is needed to keep its temperature under control.
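The link between clock frequency and power can be made concrete with the standard first-order model of CMOS dynamic (switching) power. Here α is the activity factor (the fraction of the circuit switching each cycle), C the switched capacitance, V the supply voltage, and f the clock frequency. Assuming that the supply voltage must rise roughly in proportion to frequency is a simplification, and leakage power is ignored.

$$
P_{\text{dyn}} = \alpha C V^{2} f, \qquad V \propto f \;\Longrightarrow\; P_{\text{dyn}} \propto f^{3}
$$

Under this rough model, a 20% frequency increase multiplies dynamic power by about 1.2³ ≈ 1.73, which is why raising the clock ran into the heat-dissipation wall so quickly.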

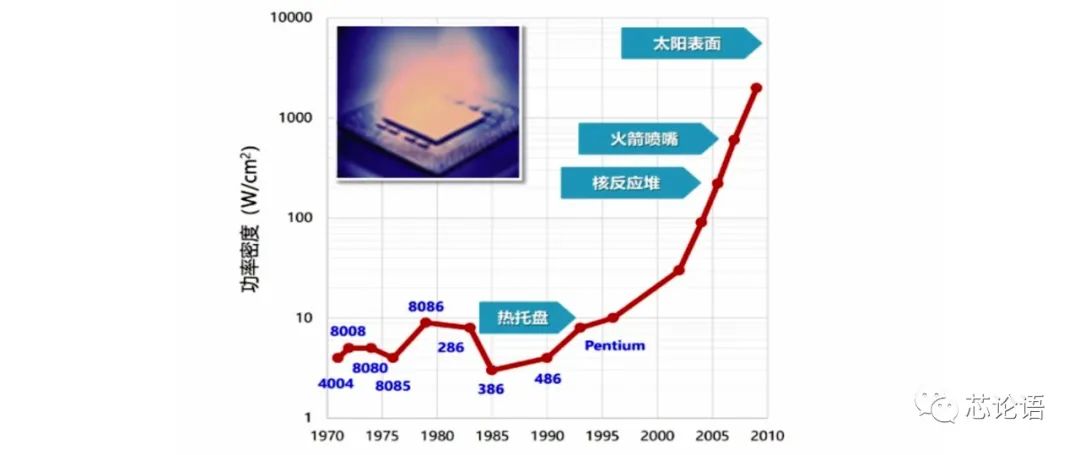

Power consumption therefore severely limits how far CPU frequency can rise. The following diagram shows the trend of CPU power density over time. After the Intel Pentium, power density rose sharply with increasing transistor density and clock frequency; extrapolating that trend, chips would eventually generate heat per unit area exceeding that of the sun’s surface.

Figure 10. Trend of CPU Power Density Over Time (Source: Professor Wei Shaojun’s Lecture)

In summary, pursuing multi-task processing capabilities and improving processing speed are the two main goals of CPU chip design. The pursuit of increased CPU clock frequency to accelerate processing speed is constrained by the limits of CPU power consumption, making multi-core CPU chips a necessary path to resolve these contradictions. Currently, multi-core CPUs and SoCs have become the mainstream in processor chip development.
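The argument for multi-core over higher clocks can be put into numbers with a toy model. The sketch below assumes per-core dynamic power scales with the cube of frequency (voltage scaled in proportion to frequency), that the workload is perfectly parallel, and that leakage and uncore power can be ignored; the function names are hypothetical.

```python
def relative_dynamic_power(freq_scale: float, cores: int = 1) -> float:
    """Dynamic power relative to one core at the baseline frequency.

    Assumes P ~ f**3 per core (supply voltage scaled in proportion to
    frequency) and ignores leakage and uncore power.
    """
    return cores * freq_scale ** 3


def relative_throughput(freq_scale: float, cores: int = 1) -> float:
    """Throughput relative to one baseline core, assuming perfectly parallel
    work whose speed scales linearly with frequency and core count."""
    return cores * freq_scale


if __name__ == "__main__":
    configs = [
        ("1 core at 1.0x frequency", 1.0, 1),
        ("1 core at 1.6x frequency", 1.6, 1),
        ("2 cores at 0.8x frequency", 0.8, 2),
    ]
    for label, f, n in configs:
        print(f"{label}: throughput {relative_throughput(f, n):.2f}, "
              f"power {relative_dynamic_power(f, n):.2f}")
```

Under these assumptions, two cores at 0.8x frequency match the throughput of one core pushed to 1.6x while using about a quarter of the dynamic power (roughly 1.02 versus 4.10). Real workloads are rarely perfectly parallel, so the actual advantage is smaller.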

4. What Technologies Are Used in Multi-Core CPUs?

Compared to single-core CPUs, multi-core CPUs face enormous challenges in architecture, software, power consumption, and security design, but they also harbor significant potential. This article references the materials attached at the end and provides a brief introduction to the technologies used in multi-core CPUs.

1. Hyper-Threading Technology

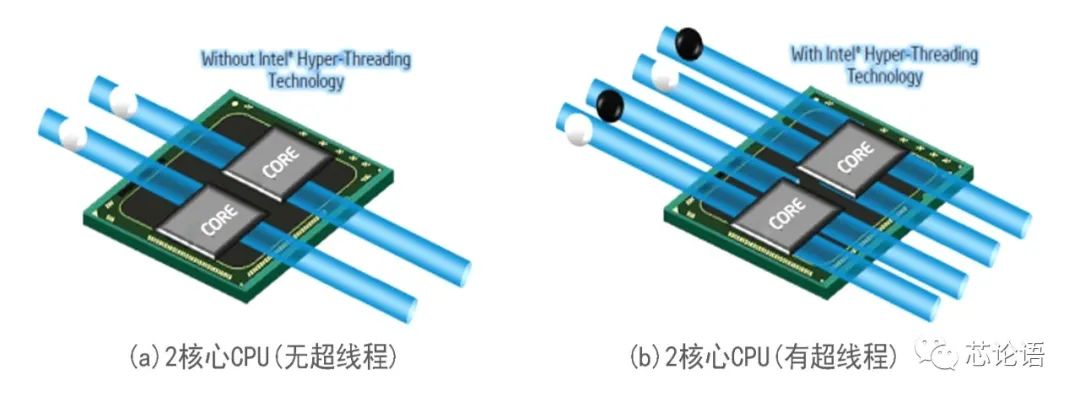

A traditional CPU core has only one Processing Unit (PU) and one Architectural State (AS), allowing it to process only one software thread at a time. A CPU core with Hyper-Threading (HT) technology contains one PU and two ASs, with the two ASs sharing the PU. When software runs on the CPU core, the AS connects with the software thread and assigns the thread’s tasks to the relevant units within the PU. Therefore, two ASs can process two software threads.

To illustrate with a factory analogy, the PU represents the production department, with several machines used for production; the AS is the order handler, who can only manage one task order at a time. If the production facility only has one AS, it can only handle one task order simultaneously, leaving some machines idle. If there are two ASs, it can handle two task orders and assign tasks to different machines.

Thus, Hyper-Threading adds only a small amount of circuitry to a CPU core, yet with two ASs the core appears to software as two logical cores and can process two software threads at once, potentially increasing processing capacity by about 40% on favorable workloads. This is why CPU advertisements often describe a multi-core chip as having N cores and 2×N threads: that is the benefit of Hyper-Threading. Without Hyper-Threading, a multi-core CPU can only be listed as N cores and N threads. The following diagram illustrates a 2-core CPU without and with Hyper-Threading.

Figure 11. Illustration of Hyper-Threading in Multi-Core CPUs (Source: Reference Material 20)
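The factory analogy can be turned into a toy cycle-by-cycle model: one PU with a fixed number of execution units (4 here, an assumed figure) shared by one or two instruction streams, each standing in for an AS. The stream contents, the “bundle” model, and the function name are hypothetical; real cores have far more elaborate issue logic.

```python
def cycles_to_finish(streams, units=4):
    """Toy model of one core whose execution units are shared by one or
    more hardware threads.

    Each stream is a list of "bundles": how many execution units it needs in
    one cycle, where 0 means the thread is stalled (e.g. waiting on memory)
    and uses no units that cycle. A bundle issues only if enough units are
    free; otherwise the thread retries next cycle. Priority rotates between
    streams every cycle so neither is starved.
    """
    for bundles in streams:
        if any(b < 0 or b > units for b in bundles):
            raise ValueError("each bundle must need between 0 and `units` units")
    pos = [0] * len(streams)  # index of the next bundle for each stream
    cycle = 0
    while any(p < len(s) for p, s in zip(pos, streams)):
        free = units
        for k in range(len(streams)):
            i = (k + cycle) % len(streams)  # rotating priority
            if pos[i] < len(streams[i]) and streams[i][pos[i]] <= free:
                free -= streams[i][pos[i]]
                pos[i] += 1
        cycle += 1
    return cycle


if __name__ == "__main__":
    a = [1, 0, 3, 1, 0, 2, 1, 0]  # hypothetical stream; 0 = stall cycle
    b = [2, 1, 2, 2, 3, 1, 0, 2]
    print("one AS, threads run back to back:", cycles_to_finish([a]) + cycles_to_finish([b]))
    print("two ASs sharing the PU:", cycles_to_finish([a, b]))
```

The streams above finish in 9 cycles when sharing the PU versus 16 cycles back to back. They were chosen with many stall cycles, so the gain is larger than the roughly 40% figure in the text; on real workloads the benefit depends on how often one thread leaves execution units idle.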

2. Core Structure Research

Multi-core CPU structures can be divided into homogeneous and heterogeneous multi-core. In a homogeneous design, all CPU cores on the chip have the same structure; in a heterogeneous design, the cores have different structures. Choosing a core structure suited to the target application is crucial to overall CPU performance, because the core’s structure directly affects the chip’s area, power consumption, and performance. How well a design inherits and builds on traditional CPU work also directly affects multi-core performance and development time. The instruction set used by the cores matters as well: it determines whether the cores share one instruction set or use different ones, and whether they can run an operating system.

3. Cache Design Technology

The speed gap between the CPU and main memory is a prominent problem for multi-core CPUs, and multi-level caches are used to ease it. Designs can be classified by where sharing begins: shared L1 cache, shared L2 cache, or shared main memory only. Multi-core CPUs commonly give each core a private L1 cache and let all cores share the last-level cache (L2 in many early multi-core designs, L3 in most current desktop and server CPUs).

Cache architecture directly affects overall system performance. In multi-core CPUs, whether shared or private caches are better, whether to build multi-level caches on-chip, and how many levels to build all significantly affect chip size, power consumption, layout, performance, and efficiency, and require careful study. In addition, the coherence problems that arise when several caches hold copies of the same data must be solved.
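To make the coherence problem concrete, here is a toy write-invalidate protocol in the spirit of MSI. It is a minimal sketch that assumes one value per cache line, an idealized bus that every cache observes, and unlimited cache capacity; the class and method names are hypothetical. Before a core writes, every other core’s copy is invalidated, and a later reader gets the new value through a write-back.

```python
class CoherentCaches:
    """Toy write-invalidate (MSI-style) coherence for private per-core caches.

    Each core's cache maps address -> (state, value), where state is
    "M" (modified: this core holds the only up-to-date copy) or
    "S" (shared: a clean copy). A missing entry means "I" (invalid).
    """

    def __init__(self, num_cores):
        self.memory = {}
        self.caches = [{} for _ in range(num_cores)]

    def _write_back_owner(self, addr):
        # If some core holds the line Modified, flush it to memory and
        # downgrade that copy to Shared.
        for cache in self.caches:
            entry = cache.get(addr)
            if entry and entry[0] == "M":
                self.memory[addr] = entry[1]
                cache[addr] = ("S", entry[1])

    def read(self, core, addr):
        cache = self.caches[core]
        if addr not in cache:  # miss: obtain an up-to-date clean copy
            self._write_back_owner(addr)
            cache[addr] = ("S", self.memory.get(addr, 0))
        return cache[addr][1]

    def write(self, core, addr, value):
        # Invalidate all other copies first so no core can read stale data.
        # Because a "line" here holds a single value, an old Modified copy
        # elsewhere can simply be dropped: the new write supersedes it.
        for other, cache in enumerate(self.caches):
            if other != core:
                cache.pop(addr, None)
        self.caches[core][addr] = ("M", value)


if __name__ == "__main__":
    c = CoherentCaches(2)
    print(c.read(0, 0x40), c.read(1, 0x40))  # both cores cache the initial value
    c.write(0, 0x40, 7)                      # core 1's copy is invalidated
    print(c.read(1, 0x40))                   # core 1 misses and sees 7
```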

4. Inter-Core Communication Technology

Multi-core CPUs run programs on several cores at once, and these cores sometimes need to share data and synchronize, which requires hardware that supports communication between cores. An efficient communication mechanism is essential to multi-core performance. The two mainstream on-chip mechanisms are based on a bus with a shared cache and on an on-chip interconnect, respectively.

In the bus-and-shared-cache structure, the cores share an L2 or L3 cache that holds frequently used data and communicate over an inter-core bus. Its advantages are a simple structure and fast communication; its drawback is poor scalability. In the on-chip interconnect structure, each core has its own processing units and caches, the cores are connected through crossbar switches or a network-on-chip, and they communicate by passing messages. Its advantages are good scalability and guaranteed data bandwidth; its drawbacks are complex hardware and the need for significant software changes.
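The two communication styles have direct software analogues, sketched below with Python threads: workers either update one shared location under a lock (like cores communicating through a shared cache) or send results as messages through a queue (like cores exchanging messages over an interconnect). This shows the programming models only; in CPython the global interpreter lock prevents these threads from running Python code in parallel, so it says nothing about hardware performance. The function names are hypothetical.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Workers add partial sums into one shared variable guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)
        with lock:  # serialize access to the shared location
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Workers send partial sums as messages; one receiver combines them."""
    mailbox = queue.Queue()  # thread-safe FIFO acting as the message channel

    def worker(chunk):
        mailbox.put(sum(chunk))  # no shared variable, only a message

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in chunks)


if __name__ == "__main__":
    parts = [range(0, 100), range(100, 200), range(200, 300)]
    print(shared_memory_sum(parts), message_passing_sum(parts))
```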

5. Bus Design Technology

In traditional CPUs, cache misses or memory access events negatively impact CPU execution efficiency, and the efficiency of the Bus Interface Unit (BIU) determines the extent of this impact. In multi-core CPUs, when multiple CPU cores simultaneously request memory access, or when multiple CPU cores experience cache misses, the efficiency of the BIU’s arbitration mechanism for these access requests and the efficiency of the conversion mechanism for external memory access determine the overall performance of the multi-core CPU system.
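Arbitration among simultaneous memory requests can be sketched with a round-robin arbiter, one common fairness policy. This hypothetical class grants one requesting core per cycle and then moves priority past the winner so no core is starved; real bus interface units may use other or weighted schemes and must also handle request latency, which this sketch ignores.

```python
class RoundRobinArbiter:
    """Grants one pending request per cycle with rotating priority.
    Cores are identified by index 0..num_cores-1."""

    def __init__(self, num_cores):
        self.num_cores = num_cores
        self.next_priority = 0  # core that gets the first chance next cycle

    def grant(self, requests):
        """`requests` is the set of core indices wanting the bus this cycle.
        Returns the granted core index, or None if nobody is requesting."""
        for offset in range(self.num_cores):
            core = (self.next_priority + offset) % self.num_cores
            if core in requests:
                self.next_priority = (core + 1) % self.num_cores  # winner goes last next time
                return core
        return None


if __name__ == "__main__":
    arbiter = RoundRobinArbiter(4)
    # All four cores miss in cache at once and keep requesting.
    print([arbiter.grant({0, 1, 2, 3}) for _ in range(6)])
```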

6. Operating Systems for Multi-Core

For multi-core CPUs, optimizing the operating system’s task scheduling is key to execution efficiency. Scheduling algorithms can be divided into global-queue scheduling and local-queue scheduling. With global-queue scheduling, the operating system maintains a single queue of waiting tasks; whenever a core is idle, the operating system picks a ready task from that queue to run on it, which keeps core utilization high. With local-queue scheduling, the operating system maintains a separate queue for each core; an idle core picks a ready task from its own queue, which improves cache hit rates because tasks tend to stay on the same core. In practice, mainstream kernels such as Linux keep per-core run queues and periodically rebalance tasks between them, aiming to get the benefits of both approaches.
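The trade-off between the two schedulers can be sketched with a toy model in which every task has a “home” core whose cache is warm for it, and running a task anywhere else costs an extra `penalty` time units to refill the cache. The penalty, the task lists, and the function names are assumptions for illustration, not a model of any real kernel.

```python
import heapq


def global_queue_makespan(tasks, cores, penalty):
    """One shared ready queue: the earliest-idle core takes the next task.
    `tasks` is a list of (duration, home_core). A task run away from its home
    core pays `penalty` extra time (a stand-in for a cold cache)."""
    idle = [(0, core) for core in range(cores)]  # (time core becomes idle, core id)
    heapq.heapify(idle)
    for duration, home in tasks:
        now, core = heapq.heappop(idle)
        cost = duration + (penalty if core != home else 0)
        heapq.heappush(idle, (now + cost, core))
    return max(t for t, _ in idle)


def local_queue_makespan(tasks, cores):
    """One ready queue per core: each task waits for its home core only,
    so caches stay warm but work is never moved to an idle core."""
    busy = [0] * cores
    for duration, home in tasks:
        busy[home] += duration
    return max(busy)


if __name__ == "__main__":
    unbalanced = [(4, 0), (4, 0), (4, 0), (1, 1)]  # most work is homed on core 0
    interleaved = [(3, 1), (3, 0), (3, 1), (3, 0)]  # balanced, but out of order
    for name, tasks in (("unbalanced", unbalanced), ("interleaved", interleaved)):
        print(name, "global:", global_queue_makespan(tasks, 2, penalty=1),
              "local:", local_queue_makespan(tasks, 2))
```

With the unbalanced load, the global queue finishes at t=8 versus t=12 for local queues; with the interleaved load, local queues finish at t=6 while the global queue pays migration penalties and finishes at t=8.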

Multi-core CPU interrupt handling is significantly different from single-core CPUs. CPU cores need to communicate and coordinate through interrupts, so the local interrupt controllers for CPU cores and the global interrupt controller that arbitrates interrupts among all CPU cores need to be integrated within the chip.

Additionally, multi-core operating systems are multitasking systems. Because different tasks compete for shared resources, the system must provide synchronization and mutual exclusion. Techniques that suffice on a single-core CPU, such as briefly disabling interrupts, do not protect data from code running on other cores, so the system must rely on hardware-provided atomic “read-modify-write” operations or other synchronization and mutual-exclusion mechanisms.
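A read-modify-write primitive such as compare-and-swap (CAS) lets cores update shared data without losing each other’s updates. Python exposes no CAS instruction, so the sketch below emulates one with an internal lock purely to show the classic retry loop built on top of it; the class and function names are hypothetical.

```python
import threading


class AtomicInt:
    """Stand-in for a hardware atomic word. The internal lock only emulates
    the indivisibility a real compare-and-swap instruction provides."""

    def __init__(self, value=0):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        """Atomically: if the value equals `expected`, store `new` and return True."""
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False


def atomic_increment(counter):
    # CAS retry loop: if another thread changed the value between our read
    # and our swap, the CAS fails and we re-read and try again.
    while True:
        old = counter.load()
        if counter.compare_and_swap(old, old + 1):
            return old + 1


def count_with_threads(num_threads=4, increments=10_000):
    counter = AtomicInt()

    def worker():
        for _ in range(increments):
            atomic_increment(counter)

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.load()


if __name__ == "__main__":
    print(count_with_threads())  # no increments are lost
```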

7. Low Power Design Technology

Every two to three years, the density of CPU transistors and power density doubles. Low power and thermal optimization design have become focal points in multi-core CPU design, requiring consideration at multiple levels, including operating system, algorithm, architecture, and circuit levels. The effects of implementations at each level vary, with higher abstraction levels yielding more significant reductions in power consumption and temperature.

8. Reliability and Security Design Technology

In today’s information society, CPUs are ubiquitous, and there are higher demands for CPU reliability and security. On one hand, the complexity of multi-core CPUs increases, with low voltage, high frequency, and high temperature posing challenges to maintaining safe chip operation. On the other hand, external malicious attacks are becoming increasingly numerous and sophisticated, making reliable and secure design technology increasingly important.

5. How Do Multi-Core CPUs Work?

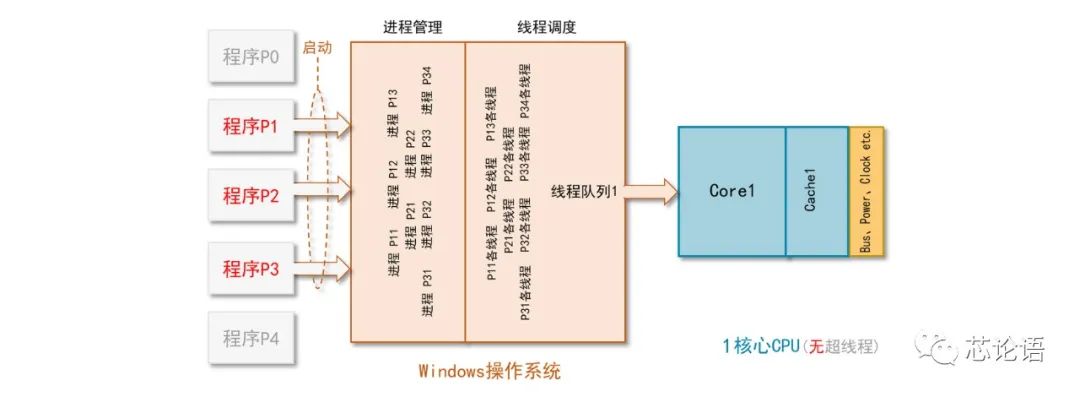

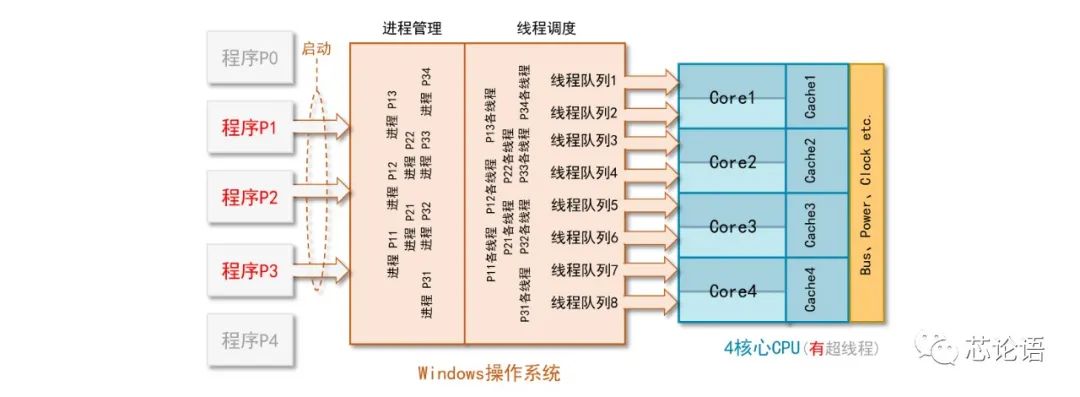

To understand how multi-core CPUs work, we must look at application programs, the operating system, and the CPU cores together. The Windows operating system acts as the task scheduler, allocating hardware resources (the CPU cores) to application programs by process and thread. Each process belongs to one application program, but one application program can consist of multiple processes that together carry out its execution.

When an application program is not executed, it is “static”; once the user starts executing the program, it is taken over by the operating system and becomes “dynamic.” The operating system manages a batch of programs initiated by the user through processes. Thus, a process can be seen as an “executing program,” which includes the basic resources allocated by the operating system to this program.

A process can be subdivided into multiple threads, and threads are the only units to which the operating system grants time on a CPU core. A process containing only one thread is a single-threaded program, while a process containing multiple threads is a multi-threaded program.
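The process/thread relationship can be observed in a few lines of Python: one process (one PID) containing several threads, each of which the OS can schedule independently. In CPython the global interpreter lock keeps these threads from executing Python bytecode simultaneously, so this sketch shows the structure, not a parallel speedup; the function name is hypothetical.

```python
import os
import threading


def describe_threads(num_threads=3):
    """Start `num_threads` threads inside this one process and record the
    process ID and thread name seen by each. All threads share the same
    PID and the same memory (here, the `seen` list)."""
    seen = []
    lock = threading.Lock()

    def worker():
        with lock:
            seen.append((os.getpid(), threading.current_thread().name))

    threads = [threading.Thread(target=worker, name=f"worker-{i}")
               for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen


if __name__ == "__main__":
    for pid, name in describe_threads():
        print(f"PID {pid}: {name}")
```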

For a program’s threads to get CPU time, they must wait in the operating system’s thread queue; once scheduled, they receive execution time on a CPU core. How the operating system allocates CPU cores is a complex process that cannot be fully explained in a short text. Below, I illustrate how a program’s threads are assigned to CPU cores in two scenarios: a single-core CPU and a 4-core CPU.

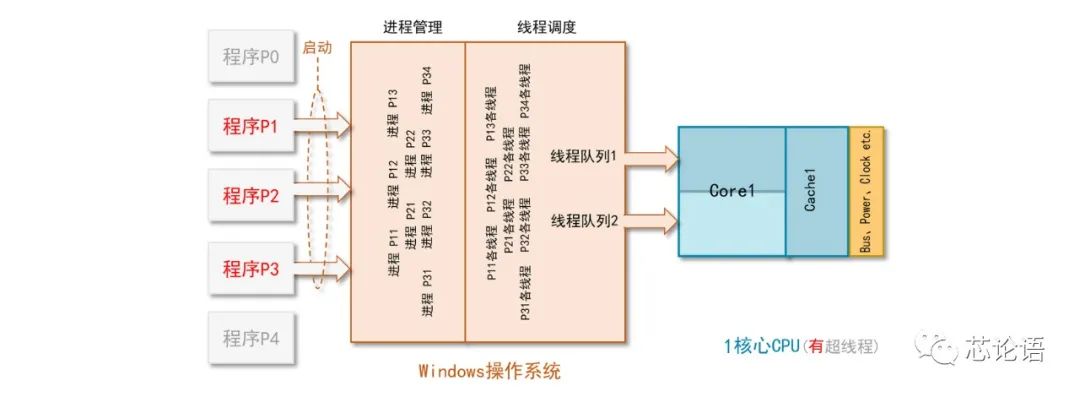

If the CPU is single-core and does not use Hyper-Threading, there is only one execution slot, so only one thread from the queue can run at any moment. With Hyper-Threading, the single core becomes two logical cores, so two threads can be dispatched at once, as shown in the diagrams below.

Figure 12. Illustration of Application Program Scheduling Execution on a Single-Core CPU (No Hyper-Threading)

Figure 13. Illustration of Application Program Scheduling Execution on a Single-Core CPU (With Hyper-Threading)

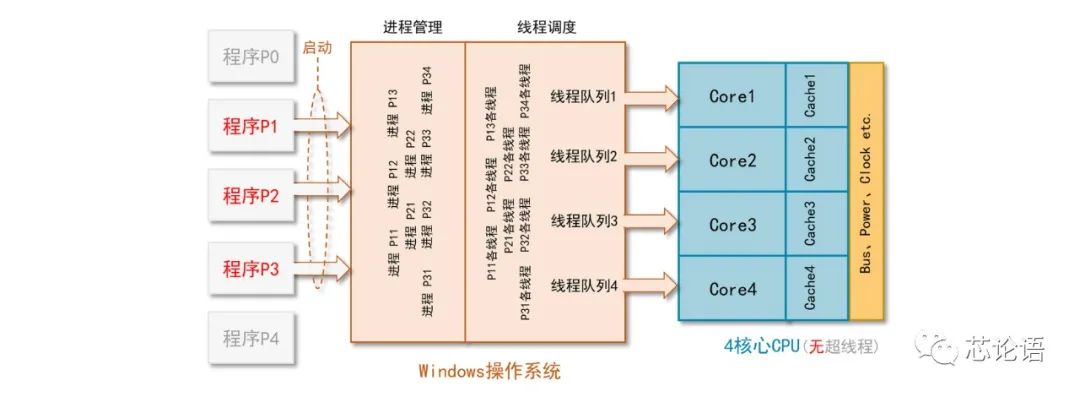

If the CPU is 4-core and does not use Hyper-Threading, there are four execution slots, so up to four threads can run at once. With Hyper-Threading, the 4 cores become 8 logical cores, so up to eight threads can be dispatched at once, as shown in the diagrams below.

Figure 14. Illustration of Application Thread Scheduling on a 4-Core CPU (No Hyper-Threading)

Figure 15. Illustration of Application Thread Scheduling on a 4-Core CPU (With Hyper-Threading)
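From software’s point of view, hyper-threading simply shows up as more logical CPUs. In Python, `os.cpu_count()` reports the number of logical CPUs in the system, and on Linux `os.sched_getaffinity(0)` returns the set of logical CPUs the calling process may be scheduled on. The standard library does not report physical cores, so this sketch only shows the logical view; the helper name `logical_cpu_view` is hypothetical. On a 4-core CPU with hyper-threading enabled, the logical count would typically be 8.

```python
import os


def logical_cpu_view() -> dict:
    """Describe the logical CPUs the OS can schedule threads onto."""
    total = os.cpu_count()  # logical CPUs; None if it cannot be determined
    if hasattr(os, "sched_getaffinity"):  # Linux and some other Unix systems
        usable = sorted(os.sched_getaffinity(0))
    else:
        usable = list(range(total or 1))  # assumption: every CPU is usable
    return {"logical_cpus": total, "usable_cpus": usable}


if __name__ == "__main__":
    view = logical_cpu_view()
    print(f"logical CPUs: {view['logical_cpus']}, usable: {view['usable_cpus']}")
```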

From the hardware’s point of view, each CPU core continuously receives software threads from the operating system and executes them, completing the tasks specified by the program’s instructions. Along the way it may use memory, arithmetic units, and input/output components, and it may communicate and exchange data with other CPU cores; when a task is finished, the result must be reported back. Each of these steps can be seen as an event that must be coordinated by the interrupt-handling hardware. The ways multi-core CPUs schedule and process work can be roughly classified into three modes [8][18]:

1. Symmetric Multi-Processing (SMP) is the most widely used mode. In SMP, a single operating system manages all CPU cores as equals and distributes the workload among them. Most current operating systems support SMP, including Linux, Windows, and VxWorks. SMP is typically used with homogeneous multi-core CPUs; implementing it on heterogeneous multi-core CPUs is more complex because their cores differ in structure.

2. Asymmetric Multi-Processing (AMP) has multiple cores running different tasks relatively independently. Each core may run a different operating system, a bare-metal program, or a different version of an operating system, but one dominant CPU core controls the subordinate cores and the system as a whole. AMP is often used in heterogeneous multi-core designs such as MCU + DSP or MCU + FPGA, although homogeneous multi-core CPUs can use it too.

3. Bound Multi-Processing (BMP) is essentially SMP with one difference: developers can bind a specific task so that it executes only on a particular CPU core.
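On Linux, an SMP operating system, the binding idea behind BMP corresponds roughly to CPU affinity: by default a process may run on any allowed CPU (the SMP view), and restricting its affinity mask binds it to one core. This is an analogy chosen for illustration; the sources cited above do not name this API. The sketch is Linux-only (it relies on `os.sched_getaffinity` and `os.sched_setaffinity`), and the helper name `pin_to_one_cpu` and its choice of the lowest-numbered CPU are hypothetical.

```python
import os


def pin_to_one_cpu() -> tuple[set[int], set[int]]:
    """Bind the caller to one CPU (BMP-style), then restore the SMP default.

    Linux-only. Returns (mask before pinning, mask while pinned).
    """
    original = os.sched_getaffinity(0)  # pid 0 means "the caller"
    target = min(original)  # hypothetical choice: lowest-numbered allowed CPU
    try:
        os.sched_setaffinity(0, {target})  # only this CPU may run the caller
        pinned = os.sched_getaffinity(0)
        # ... work that should stay on this core would run here ...
    finally:
        os.sched_setaffinity(0, original)  # let the OS use every CPU again
    return original, pinned


if __name__ == "__main__":
    before, pinned = pin_to_one_cpu()
    print(f"allowed CPUs before: {sorted(before)}, while pinned: {sorted(pinned)}")
```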

The above is only a brief theoretical introduction. Understanding the hardware scheduling principles and implementation details of multi-core CPUs would require far more in-depth technical material from chip vendors such as Intel and AMD.

6. Perspectives on Multi-Core CPUs

Is a multi-core CPU always better with more cores? Under the same conditions, is a multi-CPU system always better with more CPU chips? Is hyper-threading always better than no hyper-threading? Opinions differ; the answer depends mainly on the specific application scenario and cannot be generalized.

First, multi-core and multi-CPU systems require synchronization and scheduling, which cost time and computing power. Adding CPU cores or CPU chips raises the system’s potential processing capacity, but the synchronization and scheduling overhead eats into that gain. If the net gain outweighs the loss and the added cost is acceptable, the design is worthwhile; otherwise it is not. Evaluating a system design must therefore consider not only the number of CPU cores but also the operating system, scheduling algorithms, application characteristics, and drivers, all of which together determine the system’s processing speed. Here are some discussion points drawn from various articles.
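One way to make the “net gain versus loss” argument concrete is Amdahl’s law extended with a coordination-cost term. This model is not from the original sources: it is a common textbook simplification, and the linear overhead term `k * (n - 1)` is an assumption standing in for synchronization and scheduling costs. With parallel fraction p, n cores, and per-core overhead k, the modeled speedup is S(n) = 1 / ((1 - p) + p/n + k(n - 1)).

```python
def speedup(n: int, p: float, k: float) -> float:
    """Amdahl's law plus an assumed linear synchronization/scheduling cost.

    n: number of cores; p: fraction of the work that can run in parallel;
    k: extra time per added core, as a fraction of the single-core runtime.
    """
    serial = 1.0 - p  # part that cannot be parallelized
    parallel = p / n  # parallel part, split evenly across n cores
    overhead = k * (n - 1)  # hypothetical coordination cost that grows with n
    return 1.0 / (serial + parallel + overhead)


def best_core_count(p: float, k: float, max_cores: int = 256) -> int:
    """Core count (1..max_cores) with the highest modeled speedup."""
    return max(range(1, max_cores + 1), key=lambda n: speedup(n, p, k))


if __name__ == "__main__":
    p, k = 0.9, 0.001  # illustrative values, not measurements
    for n in (1, 4, 16, 30, 64, 128):
        print(f"{n:>3} cores: speedup {speedup(n, p, k):.2f}")
    print("best core count:", best_core_count(p, k))
```

With these illustrative values the modeled speedup peaks at about 6.3 with 30 cores and falls to roughly 4.3 at 128 cores: past the peak, each extra core adds more coordination cost than it saves.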

1. More CPU cores do not necessarily mean faster execution. One reason is that a thread may need to wait for other threads to finish before it can continue. While it waits on other threads or processes, it must give up its execution rights even when its turn in the queue comes, letting the threads behind it run. From that program’s point of view execution seems slower, but the system as a whole benefits because other threads keep running. Multi-core CPUs can certainly speed up the execution of a batch of processes, but a particular process or type of program may not run any faster.
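The waiting described in point 1 can be sketched with Python’s `threading.Event` (an illustrative sketch; the function name `run_dependency_demo` and the 50 ms delay are hypothetical). The consumer thread cannot proceed until the producer finishes, no matter how many cores are free; while it waits it is blocked and uses no CPU time, so the operating system is free to run other threads.

```python
import threading
import time


def run_dependency_demo():
    """A consumer thread that cannot proceed until a producer thread is done.

    Returns (event log, consumer result).
    """
    done = threading.Event()
    data: list[int] = []
    result: list[int] = []
    log: list[str] = []
    lock = threading.Lock()

    def note(message: str) -> None:
        with lock:
            log.append(message)

    def producer() -> None:
        time.sleep(0.05)  # simulate 50 ms of work
        data.append(21)
        note("producer finished")
        done.set()  # wake up every thread waiting on this event

    def consumer() -> None:
        note("consumer waiting")
        done.wait()  # blocks; the OS can run other threads meanwhile
        result.append(data[0] * 2)
        note("consumer finished")

    threads = [threading.Thread(target=consumer), threading.Thread(target=producer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log, result


if __name__ == "__main__":
    events, value = run_dependency_demo()
    print(events, value)
```

However many cores the machine has, the consumer always finishes after the producer, because its progress depends on the producer’s result.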

2. Smartphones must deliver an excellent user experience, and that does not depend on CPU performance alone. Besides the number of CPU cores, factors such as the baseband chip (which determines communication quality), the GPU, and performance in gaming and VR applications also play vital roles. What truly matters is overall system performance.

3. In 2015, MediaTek launched a 10-core SoC with a three-cluster architecture, followed by the 10-core, four-cluster Helio X30; both aimed to reduce power consumption through multi-cluster design. While MediaTek undoubtedly had technological advantages in multi-core SoCs, Qualcomm launched the Snapdragon 820 with only four cores at the end of 2015, and the SoCs in Apple smartphones at the time were only dual-core. This shows that, for smartphones, the value of a multi-core CPU or SoC cannot be judged from its core count alone; a system-level analysis is needed to reach the right conclusion.

Conclusion: Multi-core CPUs and SoCs are designed to meet growing system demands for processing capability and speed. As single-core CPUs advanced along Moore’s Law, power limits became an obstacle, making multi-core design the necessary path forward. Multi-core CPUs drive updates and upgrades in operating systems, which in turn determine how effective multi-core CPUs are. The key challenges in multi-core CPU technology lie in communication, data synchronization, and task scheduling among cores. Evaluating system performance should consider not only the number of CPU cores but also the operating system, scheduling algorithms, applications, and drivers. Multi-core CPU technology on one hand, and process technologies such as FinFET and 3D chips on the other, can be seen as two critical directions extending the life of Moore’s Law.

References:

1. Popular Science China, Multi-Core Processors, Baidu Baike: https://baike.baidu.com

2. Anonymous, Illustrated ARM Learning, Kanzhun: https://www.kanzhun.com

3. Cortex-A8, Sogou Baike: https://baike.sogou.com/v54973111.htm

4. IT168 Long Xingtianxia, Golden Years: Review of Intel Desktop Processor History, Sina: http://tech.sina.com.cn/n/2006-08-02/133156397.shtml, 2006.8.2

5. Anonymous, Global First 6-Core i7-980X Launch Detailed Test, Fast Technology: https://news.mydrivers.com/1/158/158379_1.htm, 2010.3.11

6. Anonymous, From ARM7, ARM9 to Cortex-A7, A8, A9, A12, A15, to Cortex-A53, A57, Electronic Products World: http://www.eepw.com.cn/article/215182_5.htm, 2014.1.6

7. Ada_today, How Do Multi-Core and Single-Core CPUs Work? CSDN Blog: https://blog.csdn.net/u014414429/article/details/24875421/, 2014.5.2

8. Zamely, Basics of Multi-Core Processors SMP&&BMP, CN Blog: https://www.cnblogs.com/zamely/p/4334979.html, 2015.3.14

9. Weight V4216, The Development History of Multi-Core Processors, Baidu Zhidao: https://zhidao.baidu.com/question/435213422142183884.html, 2016.5.14

10. Anonymous (source: TechNews), Multi-Core Processor Development Faces Bottlenecks, MediaTek Under Siege, Electronic Products World: http://www.eepw.com.cn/article/201607/294583.htm, 2016.7.27

11. Qishilu, 8 Images to Quickly View New Xeon: Skylake Architecture, Appearance, and Model, Sohu: https://www.sohu.com/a/156424490_281404, 2017.7.12

12. Shangfangwen Q, Intel Xeon W-3175X Power Consumption Measured: 28 Cores Overclocking Breaks 500W, Fast Technology: http://viewpoint.mydrivers.com/1/613/613590.htm, 2019.1.31

13. Driver Home, Asetek Releases 500W All-in-One Liquid Cooling Solution: 28-Core W-3175X Exclusive, Baidu: https://baijiahao.baidu.com/s?id=1624176225325117756&wfr=spider&for=pc, 2019.1.31

14. Old Wolf, What Is the Difference Between Multi-Core CPUs and Multiple CPUs? Zhihu: https://www.zhihu.com/question/20998226, 2019.6.9

15. Driver Home, VIA’s x86 AI Processor Architecture and Performance Released: Comparable to Intel’s 32-Core, Highlight Report: https://kuaibao.qq.com/s/20191212A009EG00, 2019.12.12

16. Allway2, Development of Multi-Core Processors, CSDN Blog: https://blog.csdn.net/allway2/article/details/103614463, 2019.12.19

17. Fast Technology, How Far Can Multi-Core Processors Go? By 2050, People May Use 1024-Core CPUs, Baidu: https://baijiahao.baidu.com/s?id=1658158800729500154&wfr=spider&for=pc, 2020.2.10

18. Tccxy_, Classification and Operating Methods of Multi-Core Processors, CSDN Blog: https://blog.csdn.net/juewukun4112/article/details/105537832, 2020.4.15

19. AnandTech, 16 Billion Transistors! Completely Outperforming Intel! Detailed Analysis and Review of Apple’s First Mac Processor! WeChat Public Account [EETOP], 2020.11.11

20. Tian Mengjie, What Is Hyper-Threading Technology? Zhongguancun Online: https://m.zol.com.cn/article/2737340.html, 2012.2.17

21. Shangfangwen Q, The Strongest Domestic CPU Unveiled! 128 Cores, 16 Channels DDR5, WeChat Public Account [Hardware World], 2020.12.29

Editor: Summer Solstice