Deployment of vLLM Enterprise Large Model Inference Framework (Linux)

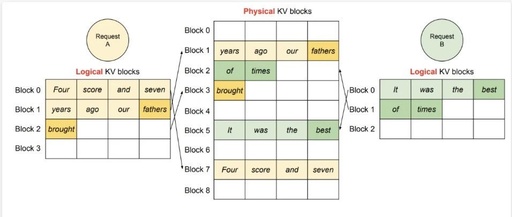

Introduction

Compared to traditional LLM inference frameworks (such as HuggingFace Transformers and TensorRT-LLM), vLLM demonstrates significant advantages in performance, memory management, and concurrency, specifically reflected in the following five core dimensions:

1. Revolutionary Improvement in Memory Utilization

By utilizing Paged Attention technology (inspired by the memory paging mechanism of operating systems), the KV Cache (Key-Value …
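
The paging idea mentioned above can be illustrated with a toy allocator. This is a minimal sketch of the general technique, not vLLM's actual implementation: the class name `PagedKVAllocator`, its methods, and the block sizes are all hypothetical and chosen only for illustration. It assumes the KV cache is divided into fixed-size blocks, and that each sequence keeps a block table mapping its logical token positions to physical blocks, much as an operating system maps virtual pages to physical frames.

```python
class PagedKVAllocator:
    """Toy block-table allocator (hypothetical, for illustration only)."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size                 # tokens stored per block
        self.free_blocks = list(range(num_blocks))   # pool of physical block ids
        self.block_tables: dict[str, list[int]] = {} # sequence id -> physical blocks
        self.seq_lens: dict[str, int] = {}           # sequence id -> tokens stored

    def append_token(self, seq_id: str) -> tuple[int, int]:
        """Reserve a slot for one more token; return (physical block, offset)."""
        n = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        # A new block is taken from the pool only when the last one is full,
        # so a sequence never reserves memory for tokens it has not produced.
        if n % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("no free KV cache blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = n + 1
        return table[-1], n % self.block_size

    def free(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


allocator = PagedKVAllocator(num_blocks=4, block_size=2)
slots = [allocator.append_token("request-a") for _ in range(3)]
blocks_in_use = len(allocator.block_tables["request-a"])
free_while_running = len(allocator.free_blocks)
allocator.free("request-a")
free_after_finish = len(allocator.free_blocks)
```

In this sketch, three tokens occupy two blocks of size two, so at most one slot is left unused per sequence, and the blocks return to the shared pool as soon as the request finishes. Blocks do not need to be contiguous, which is what lets many concurrent requests share the same memory pool without fragmentation.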