Tina: A Lightweight Inference Model Based on LoRA

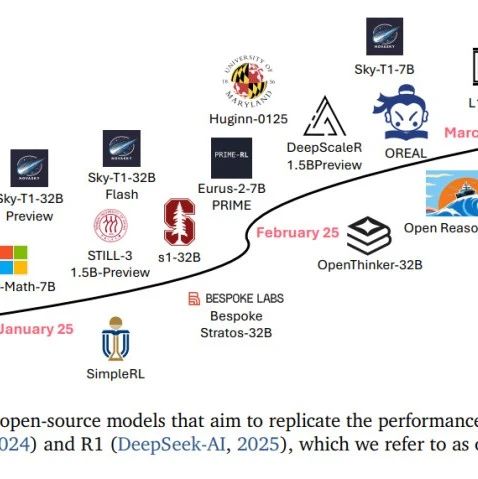

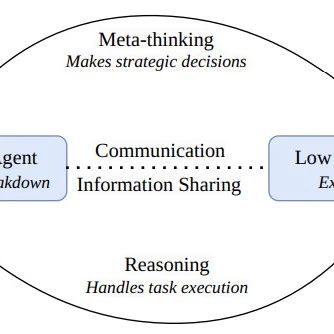

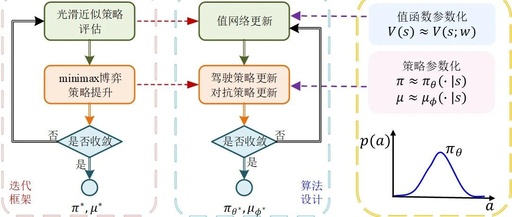

How can we achieve strong inference capabilities in language models at a low cost? Driven by this fundamental question, we propose Tina — a family of lightweight inference models realized with high cost efficiency. Tina demonstrates that significant improvements in inference performance can be achieved even with minimal resources. This achievement is realized through the … Read more