Author | Yang Tao

1

Concept


In computer science and artificial intelligence, the term ‘Agent’ is generally translated as ‘intelligent entity’: a software or hardware entity that, within some environment, exhibits one or more intelligent characteristics such as autonomy, reactivity, sociality, proactivity, reflectiveness, and cognition. An AI Agent is an agent driven by a large language model (LLM). There is currently no widely accepted definition, but it can be described as a system that uses an LLM to reason about a problem, autonomously devises a plan to solve it, and executes that plan with a set of tools.

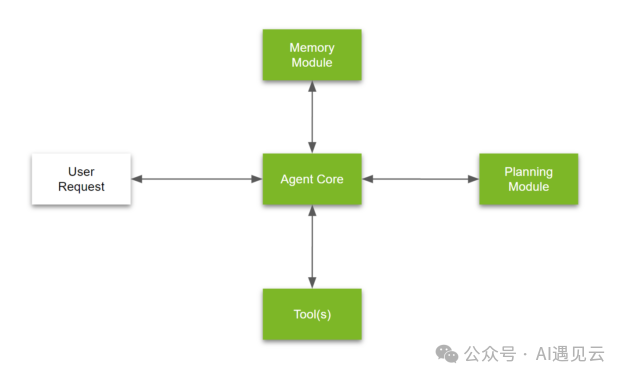

In short, an AI Agent is a system with complex reasoning capabilities, memory, and the ability to execute tasks. As the figure above shows, it is made up of the following components:

Core of the Agent

The agent core is the central coordination module: it manages the core logic and behavioral characteristics of the agent and makes its key decisions. Here we need to define the following:

- Overall goals of the agent: the overall goals and objectives the agent must achieve.
- Execution tools: a brief list of all the tools the agent can use (in effect, a ‘user manual’).
- How to use the planning modules: a detailed explanation of what each planning module does and when to use it.
- Relevant memory: a dynamic section filled with the most relevant content from the user’s past conversations, where ‘relevance’ is judged against the question the user is asking.
- Agent personality (optional): if the LLM should prefer certain types of tools or show particular traits in its final response, describe the desired personality explicitly.
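One way to picture the agent core is as a single prompt assembled from the five parts above. The sketch below is a minimal illustration under that assumption; the `AgentCore` and `Tool` classes, the section headings, and the example tool `search_filings` are hypothetical names, not part of any framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tool:
    """One entry in the agent's tool 'user manual' (hypothetical structure)."""
    name: str
    description: str


@dataclass
class AgentCore:
    """Hypothetical container for the parts of the agent core."""
    goal: str
    tools: list[Tool]
    planning_guide: str
    personality: Optional[str] = None

    def build_prompt(self, question: str, memories: list[str]) -> str:
        """Assemble the agent-core prompt for one user question.

        `memories` holds the past-conversation snippets judged most relevant
        to `question`; retrieving them is the memory module's job.
        """
        sections = [
            f"## Overall goal\n{self.goal}",
            "## Tools\n" + "\n".join(f"- {t.name}: {t.description}" for t in self.tools),
            f"## How to use the planning module\n{self.planning_guide}",
            # Fall back to a placeholder when no relevant memory was found.
            "## Relevant memory\n" + ("\n".join(f"- {m}" for m in memories) or "- (none)"),
        ]
        if self.personality:  # the personality section is optional
            sections.append(f"## Personality\n{self.personality}")
        sections.append(f"## User question\n{question}")
        return "\n\n".join(sections)


core = AgentCore(
    goal="Answer business questions using the company's financial statements.",
    tools=[Tool("search_filings", "look up a figure in a financial report")],
    planning_guide="Split multi-part questions into sub-questions before calling any tool.",
)
print(core.build_prompt("How much did profits grow between Q1 and Q2 of FY2024?", memories=[]))
```

Keeping the relevant-memory section separate from the static parts reflects the point above that it is the only part refilled for every question.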

Memory Module

The memory module plays a crucial role: it records the agent’s internal logs and its history of interactions with the user. There are two types of memory:

- Short-term memory: the thoughts and actions the agent goes through while trying to answer a single user question. This usually corresponds to the context in prompt engineering; once the context length limit is exceeded, earlier input drops out and the LLM effectively forgets it.
- Long-term memory: the actions and thoughts from interactions between the user and the agent, including conversation records spanning weeks or months. This is usually kept in an external vector database that can retain historical information almost indefinitely and retrieve it quickly.
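To make the distinction concrete, here is a toy sketch under stated assumptions: short-term memory is modelled as a fixed-size window of recent turns (standing in for the context limit), and long-term memory as a store searched by cosine similarity. The bag-of-words `embed` function is a placeholder for a real embedding model, and `LongTermMemory` is an in-memory stand-in for a vector database; all names are hypothetical.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ShortTermMemory:
    """Keeps only the most recent turns, mimicking a bounded context window."""

    def __init__(self, max_turns: int):
        self.turns = deque(maxlen=max_turns)  # the oldest turn is dropped when full

    def add(self, turn: str) -> None:
        self.turns.append(turn)

    def context(self) -> list[str]:
        return list(self.turns)


class LongTermMemory:
    """In-memory stand-in for an external vector database."""

    def __init__(self):
        self.records: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.records.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[0]), reverse=True)
        return [text for _, text in ranked[:k]]


stm = ShortTermMemory(max_turns=2)
for turn in ["turn 1", "turn 2", "turn 3"]:
    stm.add(turn)
print(stm.context())  # ['turn 2', 'turn 3']

ltm = LongTermMemory()
ltm.add("user prefers answers in euros")
ltm.add("user asked about Q1 profit last week")
ltm.add("user likes short replies")
print(ltm.search("what was the Q1 profit", k=1))  # ['user asked about Q1 profit last week']
```

The short-term window silently loses `turn 1`, which is the forgetting behavior described above, while the long-term store can still surface an old record when a new question is relevant to it.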

Toolset

Planning Module

Complex problems, such as analyzing a set of financial statements to answer higher-level business questions, often require a step-by-step approach. For LLM-driven agents, planning is essentially an advanced application of several prompt-engineering techniques. Complex problems can be tackled with a combination of two techniques:

Task and Problem Decomposition

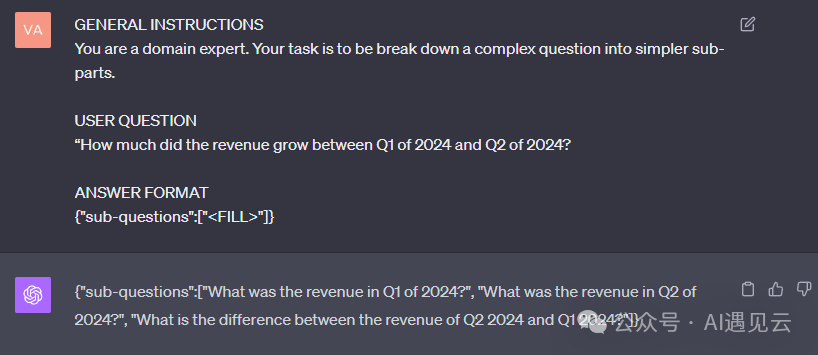

Compound questions, or questions whose answers must be inferred, require some form of decomposition. For example, the question ‘How much did profits grow between Q1 and Q2 of FY2024?’ can be broken down into several sub-questions:

- What is the profit for Q1?
- What is the profit for Q2?
- What is the difference between the two results above?
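Once decomposed, the three sub-questions form a small plan in which the last step depends on the first two. The sketch below assumes the plan is already in dependency order and that a hypothetical `lookup` tool can answer each retrieval sub-question; the profit figures in the usage example are invented placeholders, not real data.

```python
from typing import Callable

# Each sub-question is a step; "deps" lists the steps whose answers it needs.
PLAN = [
    {"id": 1, "question": "What is the profit for Q1?", "deps": []},
    {"id": 2, "question": "What is the profit for Q2?", "deps": []},
    {"id": 3, "question": "What is the difference between the two results above?", "deps": [1, 2]},
]


def execute_plan(plan: list[dict], lookup: Callable[[str], float]) -> dict[int, float]:
    """Run steps in order: leaf steps call the lookup tool, the final step combines results."""
    answers: dict[int, float] = {}
    for step in plan:
        if not step["deps"]:
            answers[step["id"]] = lookup(step["question"])  # retrieval sub-question
        else:
            first, second = step["deps"]
            answers[step["id"]] = answers[second] - answers[first]  # growth = Q2 - Q1
    return answers


# Invented placeholder figures, used only to make the example runnable.
placeholder_profits = {"Q1": 100.0, "Q2": 120.0}


def fake_lookup(question: str) -> float:
    """Hypothetical stand-in for a tool that reads figures from financial statements."""
    return placeholder_profits["Q1" if "Q1" in question else "Q2"]


print(execute_plan(PLAN, fake_lookup)[3])  # 20.0
```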

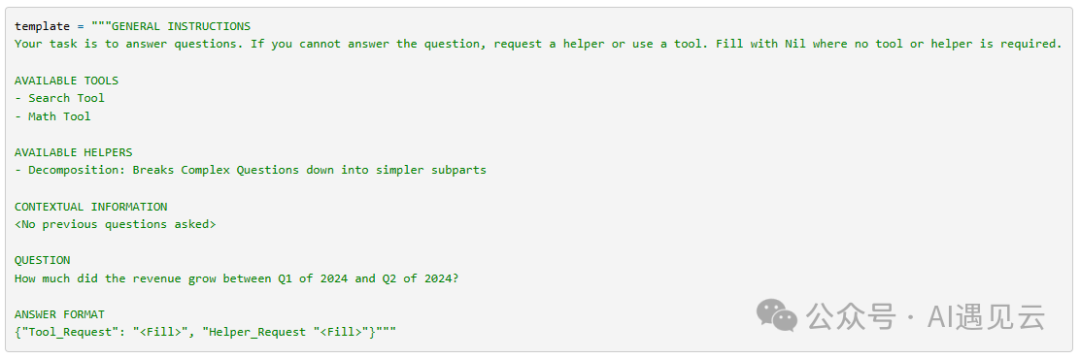

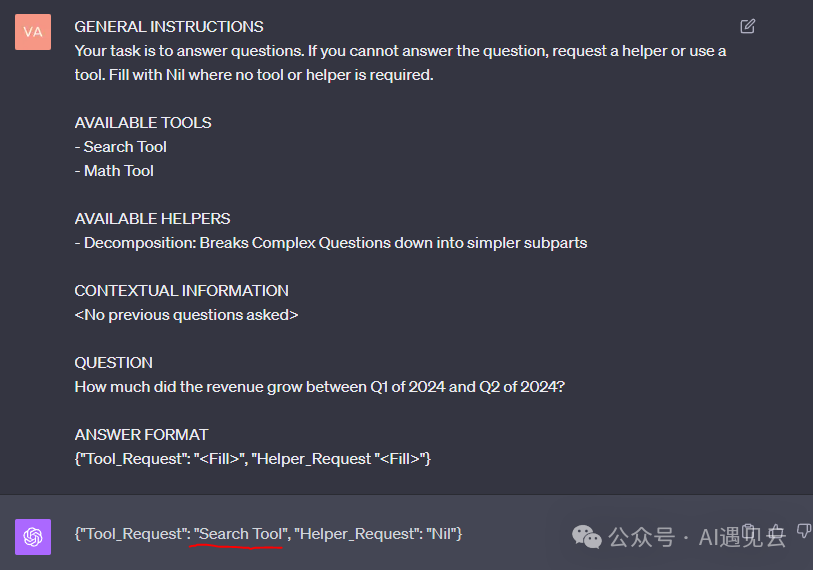

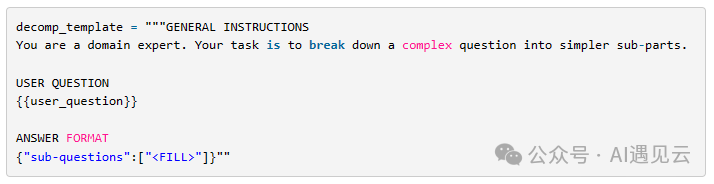

A dedicated AI Agent must be able to drive this decomposition itself, for example with a prompt template like the one pictured above. When a concrete question is passed to the LLM through such a template, the LLM responds with the decomposed sub-questions.
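The template in the figures is not reproduced here. As a hedged stand-in, the sketch below uses a hypothetical decomposition prompt of our own wording and parses a numbered-list reply into sub-questions; the reply string is written by hand in place of a real LLM call.

```python
import re

# A hypothetical decomposition prompt; the template pictured in the article may differ.
DECOMPOSE_TEMPLATE = """You are a planning assistant. Break the user's question into the
smallest sub-questions that must be answered, in order. Reply with a numbered list only.

Question: {question}
Sub-questions:"""


def build_decompose_prompt(question: str) -> str:
    return DECOMPOSE_TEMPLATE.format(question=question)


def parse_subquestions(llm_reply: str) -> list[str]:
    """Extract items from a reply formatted as '1. ...', '2) ...', and so on."""
    items = []
    for line in llm_reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


prompt = build_decompose_prompt("How much did profits grow between Q1 and Q2 of FY2024?")
# `reply` stands in for the LLM's answer to `prompt`.
reply = """1. What is the profit for Q1?
2. What is the profit for Q2?
3. What is the difference between the two results above?"""
print(parse_subquestions(reply))
```

Asking for a fixed output format (a numbered list) is what makes the reply machine-parseable; lines that do not match the format are simply ignored.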

Reflection or Critique

Techniques such as ReAct (Reason + Act), Reflexion, Chain of Thought, and Graph of Thought have become established frameworks for critique- or evidence-based prompting. They are widely used to improve the reasoning ability and responses of LLMs, and they can also be used to refine the execution plans the agent generates.
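None of these techniques is reproduced here; the sketch below shows only the generic generate-critique-revise loop they share when applied to plan refinement. `generate` and `critique` are hypothetical callables that would normally wrap LLM calls, and the convention that the critic replies "OK" to accept a plan is an assumption of this example.

```python
from typing import Callable

Generate = Callable[[str, str], str]  # (task, feedback) -> plan
Critique = Callable[[str, str], str]  # (task, plan) -> feedback, "OK" if acceptable


def refine_plan(task: str, generate: Generate, critique: Critique, max_rounds: int = 3) -> str:
    """Draft a plan, ask a critic for feedback, and revise until the critic accepts."""
    feedback = ""
    plan = generate(task, feedback)
    for _ in range(max_rounds):
        feedback = critique(task, plan)
        if feedback.strip() == "OK":
            break
        plan = generate(task, feedback)  # revise using the critic's comments
    return plan


# Scripted stubs so the loop runs offline; real versions would prompt an LLM.
def stub_generate(task: str, feedback: str) -> str:
    steps = ["look up Q1 profit", "look up Q2 profit"]
    if "difference" in feedback:
        steps.append("compute Q2 - Q1")
    return "; ".join(steps)


def stub_critique(task: str, plan: str) -> str:
    return "OK" if "compute" in plan else "Plan is missing the difference step."


print(refine_plan("Profit growth Q1->Q2", stub_generate, stub_critique))
# look up Q1 profit; look up Q2 profit; compute Q2 - Q1
```

The `max_rounds` cap keeps a critic that never accepts from looping forever; in that case the latest revision is returned.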

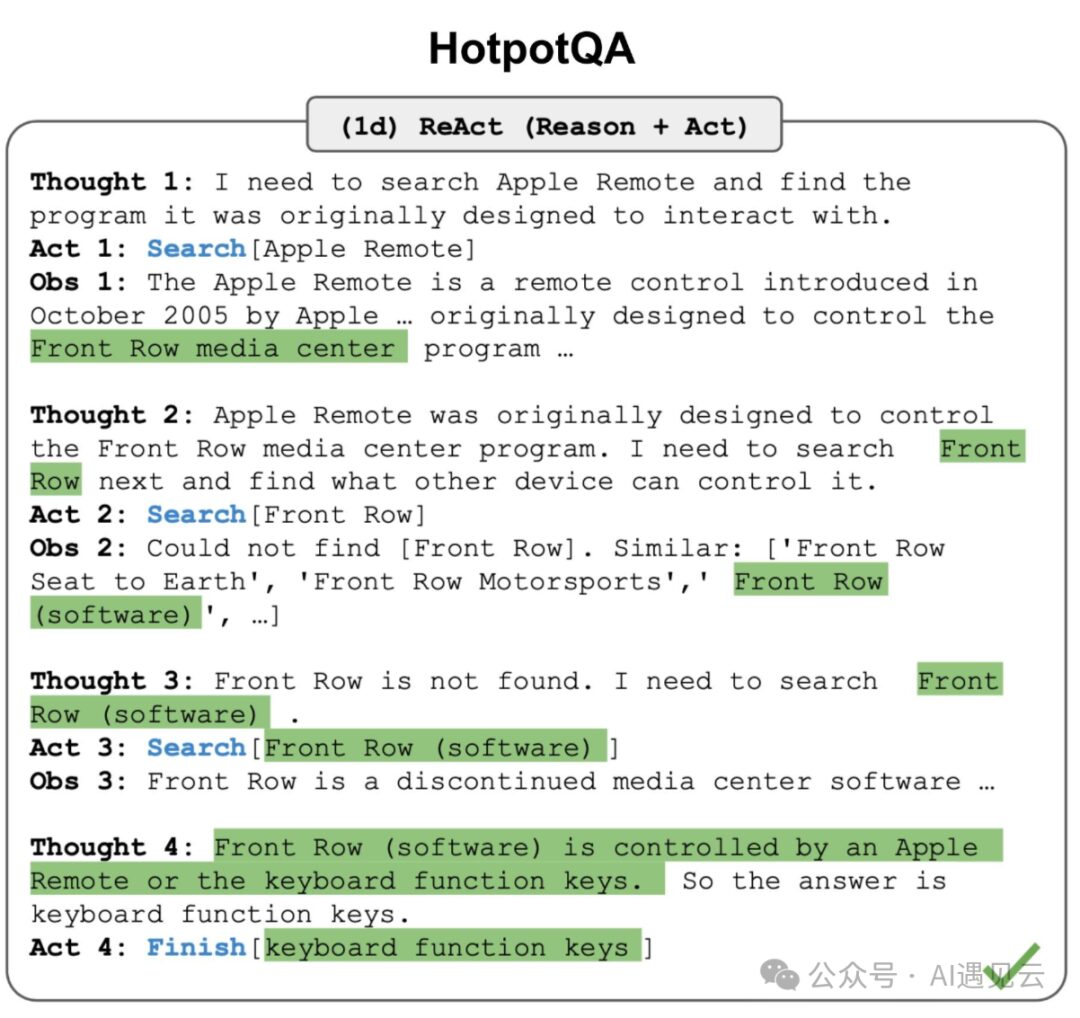

For example, the LangChain agent framework supports ReAct (Reason + Act), which breaks a complex problem into steps, uses tools to retrieve missing information from external sources, and incorporates that information into its answer.

A ReAct prompt typically consists of repeated Thought / Action / Observation iterations. In the following example, the question is: ‘Aside from the Apple Remote, what other device can control the program Apple Remote was originally designed to interact with?’

- Thought 1: I need to find the program the Apple Remote was originally designed to interact with. Action 1: search for ‘Apple Remote’. Observation 1: an introduction to the Apple Remote.
- Thought 2: The results show that the Apple Remote was originally designed to control the Front Row program, so I need information about Front Row. Action 2: search for ‘Front Row’. Observation 2: the search engine returns no relevant information.
- Thought 3: I should add ‘software’ and search again. Action 3: search for ‘Front Row (software)’. Observation 3: an introduction to the Front Row software.
- Thought 4: The results contain the key fact: Front Row can be controlled by the Apple Remote or by the keyboard multifunction keys. Action 4: finish and return the answer ‘keyboard multifunction keys’.
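The iterations above can be driven by a small loop: the LLM writes a Thought and an Action, the program runs the named tool, and the result is appended as an Observation before the next call. The sketch below is a minimal, framework-free illustration, not LangChain's implementation; the `Search[...]` / `Finish[...]` action syntax follows the style of the ReAct paper, and the scripted LLM and canned search results are hypothetical stand-ins that replay the example.

```python
import re
from typing import Callable


def react(question: str, llm: Callable[[str], str],
          tools: dict[str, Callable[[str], str]], max_steps: int = 8) -> str:
    """Minimal ReAct loop: the LLM emits Thought/Action, we run the tool and append an Observation."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # expected to contain a line "Action: Tool[input]"
        transcript += step + "\n"
        match = re.search(r"Action:\s*(\w+)\[(.*)\]", step)
        if match is None:
            transcript += "Observation: invalid action format.\n"
            continue
        name, arg = match.groups()
        if name == "Finish":
            return arg  # the final answer
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown tool {name}."
        transcript += f"Observation: {observation}\n"
    return "No answer within the step limit."


# Scripted "LLM" that replays the four steps of the example above.
SCRIPT = iter([
    "Thought: I need to find the program the Apple Remote was originally designed to interact with.\nAction: Search[Apple Remote]",
    "Thought: It was designed to control Front Row; I need information about Front Row.\nAction: Search[Front Row]",
    "Thought: No result; I should add 'software' and search again.\nAction: Search[Front Row (software)]",
    "Thought: Front Row can be controlled by the Apple Remote or keyboard multifunction keys.\nAction: Finish[keyboard multifunction keys]",
])
# Canned search results (hypothetical), paraphrasing the observations in the example.
FAKE_SEARCH = {
    "Apple Remote": "The Apple Remote was originally designed to control the Front Row program.",
    "Front Row (software)": "Front Row can be controlled by an Apple Remote or keyboard multifunction keys.",
}

answer = react(
    "Aside from the Apple Remote, what other device can control the program "
    "Apple Remote was originally designed to interact with?",
    llm=lambda transcript: next(SCRIPT),
    tools={"Search": lambda q: FAKE_SEARCH.get(q, "No relevant information.")},
)
print(answer)  # keyboard multifunction keys
```

In a real agent, `llm` would send the growing transcript to a model, so each new Thought can react to the previous Observation, as in the failed ‘Front Row’ search that prompts the refined query.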

2

Challenges

- Limited Context Length
- Insufficient Long-term Planning and Task Decomposition Capability
- Unreliable Natural Language Interface

3

Outlook

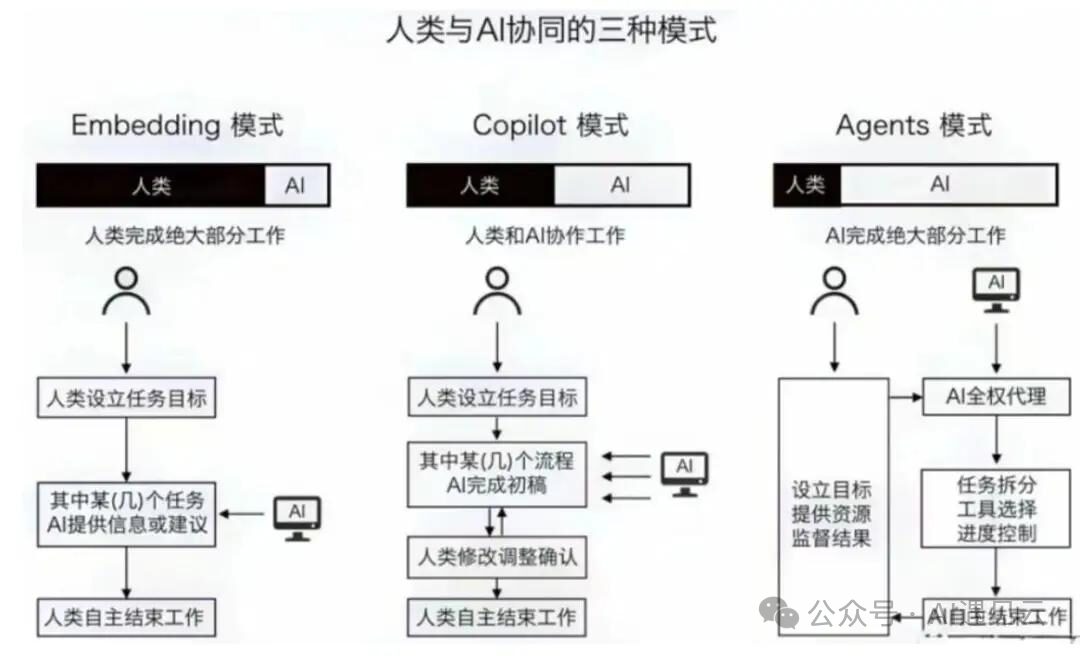

The generative AI revolution has progressed to the point where three modes of human-AI collaboration have emerged: Embedding, Copilot, and Agents.

In the Agents mode, humans set goals and provide the necessary resources; the AI then carries out most of the work on its own, while humans supervise the process and evaluate the final results. In this mode the AI fully exhibits the interactivity, autonomy, and adaptability that characterize agents and comes close to acting independently, while humans take on mainly supervisory and evaluative roles. The Agents mode promises higher efficiency than the Embedding and Copilot modes and may become the main mode of human-machine collaboration in the future.

AI Agents are a major force in turning artificial intelligence into infrastructure. Throughout the history of technology, the end point of a successful technology has been to become infrastructure: electricity, like cloud computing, has become as unnoticed as air yet indispensable. There is broad agreement that artificial intelligence will become part of the infrastructure of future society, and agents are accelerating that shift. Because AI Agents can adapt to different tasks and environments, and can learn and optimize their own performance, they apply to a wide range of fields and can become the foundational support for many industries and social activities.
